Migrating to Pydantic 2.0: Beanie for MongoDB

But just how much work is it to take a framework deeply built on Pydantic and make that migration? What are some of the pitfalls? On this episode, we welcome back Roman Right to talk about his experience converting Beanie, the popular MongoDB async framework based on Pydantic, from Pydantic v1 to v2. And we'll have some fun talking MongoDB as well while we are at it.

Episode Deep Dive

Guest Introduction and Background

Roman Right: Roman is the creator and maintainer of Beanie, an async ODM (object document mapper) that works with MongoDB and Pydantic. He originally began this project as a fun experiment to integrate Python’s async features with MongoDB (via Motor) and Pydantic for data validation and modeling. Over time, Beanie grew into a production-worthy tool embraced by many developers. Roman has lived in several countries, including Germany, and has recently moved to the United States.

What to Know If You're New to Python

If you’re just getting started and want to follow this conversation about Pydantic, MongoDB, and async Python, some foundational Python knowledge helps.

Key Points and Takeaways

1) Migrating from Pydantic v1 to v2 in Beanie

Beanie is deeply integrated with Pydantic, so migrating from v1 to v2 was a major overhaul. Roman shared that while Pydantic mostly maintained backward compatibility at the public API level, the underlying internals—especially around validation and forward references—changed considerably, requiring careful updates and testing. Once complete, the result was significant speed improvements and a more modern configuration system.

- Links and Tools

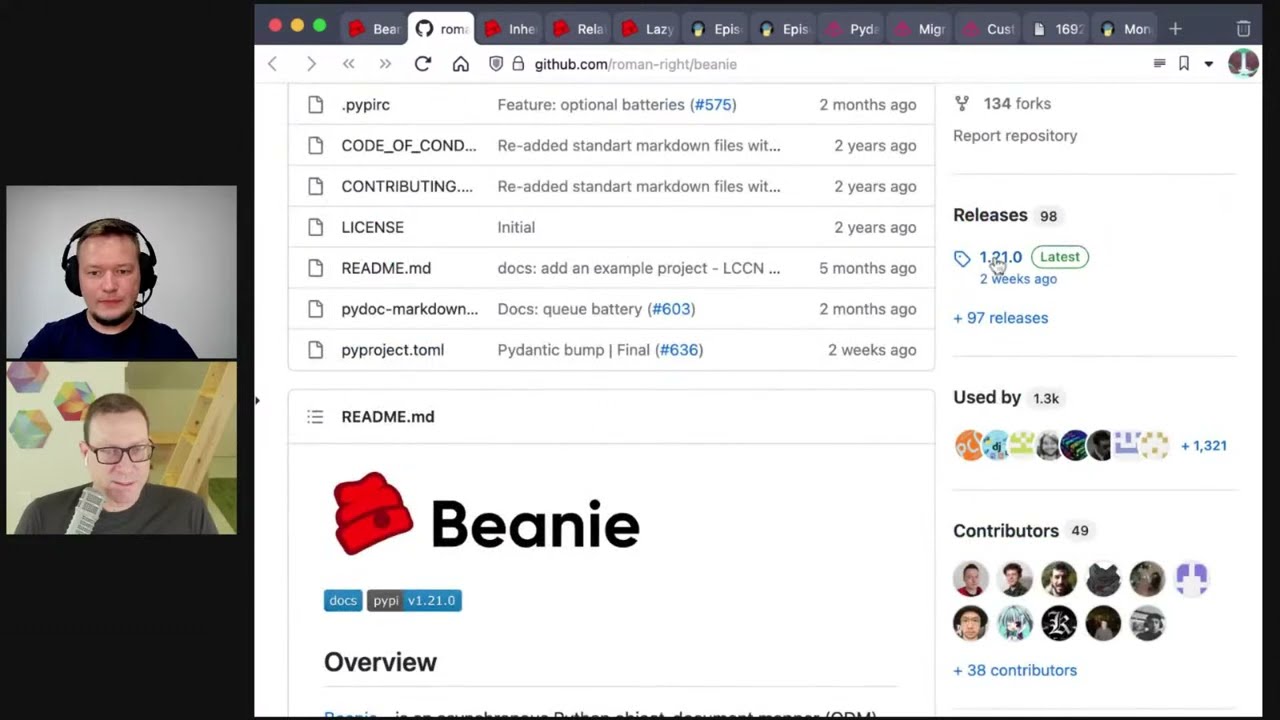

- Beanie: github.com/roman-right/beanie

- Pydantic: docs.pydantic.dev
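
In the conversation, Roman describes a small internal layer that checks whether Pydantic 1 or Pydantic 2 is installed and picks a different backend behind one shared interface. The sketch below illustrates that idea only; it makes no claim about Beanie's actual internals. `parse_major`, `installed_pydantic_major`, and `dump_model` are hypothetical helper names, while `model_dump()` (v2) and `dict()` (v1) are the Pydantic methods that turn a model into a dictionary.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Any, Dict, Optional


def parse_major(version_string: str) -> int:
    """Return the major component of a version string such as '2.3.0'."""
    return int(version_string.split(".")[0])


def installed_pydantic_major() -> Optional[int]:
    """Major version of the installed pydantic package, or None if absent."""
    try:
        return parse_major(version("pydantic"))
    except PackageNotFoundError:
        return None


def dump_model(model: Any, major: int) -> Dict[str, Any]:
    """One call site for both versions (hypothetical helper)."""
    if major >= 2:
        return model.model_dump()  # Pydantic v2 method name
    return model.dict()  # Pydantic v1 method name
```

Keeping the version check in one place means the rest of a library can call `dump_model` without knowing which Pydantic is installed.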

2) Performance Boosts with Rust-based Pydantic Core

One of the biggest wins in Pydantic v2 is its new Rust-based validation engine. Pydantic’s performance gains are especially notable for large or deeply nested documents. Even though Beanie’s main bottleneck tends to be I/O with MongoDB, the new core can yield up to a 2x speedup for complex data structures.

- Links and Tools

- Pydantic v2 Announcement: blog.pydantic.dev/blog/pydantic-v2

- Motor (async MongoDB driver): motor.readthedocs.io

3) Deep Internals and Forward References

A big challenge for frameworks that rely on Pydantic is resolving type hints—especially when two classes reference each other. Previously, Pydantic’s Python-based reflection made it easier to peek at references. Now, the Rust-based approach means some details got hidden away. Roman ended up implementing his own forward-reference resolver for Beanie’s advanced features like document linking and inheritance.

- Links and Tools

- FastAPI’s GitHub (inspiration on how to handle forward refs)
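
To make the forward-reference problem concrete, here is a standard-library sketch of two classes that refer to each other and a helper that resolves the string annotations once both exist. It uses `typing.get_type_hints` and is not the resolver Roman wrote for Beanie; `Owner`, `Vehicle`, `resolve_hints`, and `NAMES` are hypothetical names chosen for illustration.

```python
from typing import Any, Dict, List, Optional, get_type_hints


class Owner:
    # "Vehicle" does not exist yet when this line runs, so the
    # annotation is written as a string (a forward reference).
    vehicles: "List[Vehicle]"


class Vehicle:
    owner: "Optional[Owner]"


def resolve_hints(cls: type, namespace: Dict[str, Any]) -> Dict[str, Any]:
    """Turn string annotations into real types using the given names."""
    return get_type_hints(cls, localns=namespace)


# Both classes exist now, so the strings can be evaluated.
NAMES = {"Owner": Owner, "Vehicle": Vehicle, "List": List, "Optional": Optional}
owner_hints = resolve_hints(Owner, NAMES)
vehicle_hints = resolve_hints(Vehicle, NAMES)
```

Passing the namespace explicitly keeps the lookup independent of the module the classes were defined in, which is the kind of control a framework needs when it resolves references on behalf of user code.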

4) Lazy Parsing vs. Full Validation

Beanie once relied heavily on a lazy parsing model to avoid slowing down apps fetching thousands of documents. But now, Pydantic v2’s faster validation often makes lazy parsing less necessary. However, for extreme high-load use cases with massive data sets, lazy parsing can still be beneficial.
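
The idea behind lazy parsing can be sketched with the standard library alone: keep the raw dictionary as received and convert a field only when it is first read. This is a simplified illustration, not Beanie's implementation (which, per the conversation, is built on top of Pydantic); `LazyDocument` and `counting_int` are hypothetical names.

```python
from typing import Any, Callable, Dict


class LazyDocument:
    """Keeps the raw dict and converts a field only when it is first read."""

    def __init__(self, raw: Dict[str, Any], parsers: Dict[str, Callable[[Any], Any]]):
        self._raw = raw
        self._parsers = parsers
        self._parsed: Dict[str, Any] = {}

    def __getattr__(self, name: str) -> Any:
        # __getattr__ runs only when normal attribute lookup fails,
        # so _raw, _parsers and _parsed never reach this method.
        if name.startswith("_") or name not in self._raw:
            raise AttributeError(name)
        if name not in self._parsed:
            parse = self._parsers.get(name, lambda value: value)
            self._parsed[name] = parse(self._raw[name])
        return self._parsed[name]


parse_calls = []


def counting_int(value: Any) -> int:
    """Stand-in for a real validator; records each call it receives."""
    parse_calls.append(value)
    return int(value)


doc = LazyDocument({"title": "Episode 432", "views": "1200"}, {"views": counting_int})
```

Creating `doc` does no parsing at all. Reading `doc.views` converts the value once, and later reads return the cached result.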

5) Document Inheritance in MongoDB

Beanie supports storing multiple classes in one MongoDB collection using class inheritance. For example, a “Vehicle” base class can have “Car” or “Bicycle” subclasses all living in the same collection, each automatically storing an identifier for the subclass type. This design allows flexible queries over the full set or just a specific subtype.
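
A rough in-memory sketch of the single-collection idea follows. Each stored record carries the full class path (for example `Vehicle.Car.Bus`, as Roman describes in the conversation), so a query can match a type together with all of its subtypes. The list stands in for a MongoDB collection, the `_class_id` field name is an assumption, and `class_path`, `tagged`, and `find_of_type` are hypothetical helpers rather than Beanie APIs.

```python
from typing import Any, Dict, List


class Vehicle:
    """Root of the hierarchy."""


class Car(Vehicle):
    pass


class Bicycle(Vehicle):
    pass


class Bus(Car):
    pass


def class_path(cls: type, root: type = Vehicle) -> str:
    """Dotted path from the root class down to cls, e.g. 'Vehicle.Car.Bus'."""
    names = [c.__name__ for c in reversed(cls.__mro__) if issubclass(c, root)]
    return ".".join(names)


def tagged(cls: type, **fields: Any) -> Dict[str, Any]:
    """Build a record that remembers which class it belongs to."""
    return {"_class_id": class_path(cls), **fields}


def find_of_type(collection: List[Dict[str, Any]], cls: type) -> List[Dict[str, Any]]:
    """Return records of cls and of every subclass of cls."""
    path = class_path(cls)
    return [
        doc
        for doc in collection
        if doc["_class_id"] == path or doc["_class_id"].startswith(path + ".")
    ]


# One shared "collection" holding three different document types.
collection = [tagged(Car, wheels=4), tagged(Bus, wheels=6), tagged(Bicycle, wheels=2)]
```

Querying for `Vehicle` returns all three records, while querying for `Car` returns the car and the bus but not the bicycle.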

6) Indexes and Optimizing MongoDB Performance

Even though Pydantic v2 delivers big performance boosts, indexing in MongoDB remains a critical factor. Many times, performance problems have nothing to do with Python or Pydantic overhead but rather suboptimal or missing indexes. Beanie allows specifying indexes, so you can speed up queries significantly.

7) Using Locust for Load Testing

To measure real performance improvements before and after the upgrade, the guest mentioned using Locust. Locust helps simulate large numbers of users hitting a web endpoint, letting you see how your app scales under load with both Pydantic v1 and v2.

8) Combining Async Python, FastAPI, and Beanie

Many Beanie users run it alongside FastAPI to handle both the inbound/outbound requests and database operations. Since FastAPI is also built on Pydantic, upgrading both FastAPI and Beanie to the new version of Pydantic can lead to a “double acceleration” effect: faster request validation and faster document parsing.

Interesting Quotes and Stories

- Roman on Beanie’s origins: “I just wanted to play with async and Pydantic. Suddenly, people started filing issues, asking for features, and I realized I’d made an open-source project.”

- On Pydantic v2’s speed: “It’s not often you get such a major speedup in your app by just upgrading a library—sometimes two times faster.”

- On the move to Rust under the hood: “I wondered how they’d do all that Python logic in Rust, but it’s super impressive to see.”

Key Definitions and Terms

- ODM (Object Document Mapper): Like an ORM, but for document databases such as MongoDB. It maps Python objects to documents and collections.

- Forward Reference: A type hint referring to a class that isn’t yet defined in the code at parse time, often used when classes reference each other.

- Aggregation Framework (MongoDB): A pipeline-based approach to transform and analyze data in MongoDB rather than retrieving all documents to Python first.

- Async / await: Python syntax for concurrent code execution, especially useful when you wait on I/O or external APIs, such as database calls.

Learning Resources

Here are some deeper resources to expand on what you learned in this episode:

- MongoDB with Async Python: For hands-on practice with Beanie, Pydantic, FastAPI, and async.

- Full Web Apps with FastAPI: Build powerful HTML-based apps using FastAPI and its Pydantic underpinnings.

Overall Takeaway

Migrating from Pydantic v1 to v2 can be challenging for projects deeply tied to its internals, as Roman discovered with Beanie. However, once updated, teams benefit from a major performance boost, cleaner interfaces, and more robust validation. This episode highlights that even in Python’s dynamic ecosystem, carefully designed upgrades and open-source collaboration make next-level improvements possible without rewriting your entire stack.

Links from the show

Beanie on GitHub: github.com

Roman on Twitter: @roman_the_right

Beanie Release 1.21.0: github.com

Talk Python's MongoDB with Async Python Course: talkpython.fm

Pydantic Migration Guide: docs.pydantic.dev

Customizing validation with __get_pydantic_core_schema__: docs.pydantic.dev

Bunnet (Sync Beanie): github.com

Generic `typing.ForwardRef` to support generic recursive types: discuss.python.org

Pydantic v2 - The Plan Episode: talkpython.fm

Future of Pydantic and FastAPI episode: talkpython.fm

Beanie Lazy Parsing: beanie-odm.dev

Beanie Relationships: beanie-odm.dev

Locust Load Testing: locust.io

motor package: pypi.org

Watch this episode on YouTube: youtube.com

Episode #432 deep-dive: talkpython.fm/432

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript

00:00 By now, surely you've heard how awesome Pydantic version 2 is.

00:03 The team led by Samuel Colvin spent almost a year refactoring and reworking the core

00:07 into a high-performance Rust version while keeping the public API in Python and largely unchanged.

00:13 The main benefit of this has been massive speedups for the frameworks and devs using Pydantic.

00:18 But just how much work is it to take a framework deeply built on Pydantic and make that migration?

00:24 And what are some of the pitfalls?

00:26 On this episode, we welcome back Roman Right to talk about his experience converting Beanie,

00:31 the popular MongoDB async framework based on Pydantic, from Pydantic 1 to 2.

00:36 And we'll have some fun talking about MongoDB while we're at it.

00:39 This is Talk Python To Me, episode 432, recorded August 16th, 2023.

00:45 Welcome to Talk Python To Me, a weekly podcast on Python.

01:01 This is your host, Michael Kennedy.

01:03 Follow me on Mastodon, where I'm @mkennedy, and follow the podcast using @talkpython,

01:08 both on fosstodon.org.

01:10 Be careful with impersonating accounts on other instances.

01:13 There are many.

01:14 Keep up with the show and listen to over seven years of past episodes at talkpython.fm.

01:19 We've started streaming most of our episodes live on YouTube.

01:23 Subscribe to our YouTube channel over at talkpython.fm/youtube to get notified about upcoming shows

01:29 and be part of that episode.

01:31 This episode is brought to you by Studio 3T.

01:34 Studio 3T is the IDE that gives you full visual control of your MongoDB data.

01:39 With a drag and drop visual query builder and multi-language query code generator,

01:44 new users will find they're up to speed in no time.

01:47 Try Studio 3T for free at talkpython.fm/studio2023.

01:52 And it's brought to you by us over at Talk Python Training.

01:56 Did you know we have over 250 hours of Python courses?

02:01 And we have special offers for teams as well.

02:03 Check us out over at talkpython.fm/courses.

02:07 Roman, welcome back to Talk Python To Me.

02:11 Hi.

02:12 Hey, so good to have you back on the show.

02:13 See you again.

02:14 Yeah, you as well.

02:15 Thank you.

02:15 How have you been?

02:16 So it was a nice adventure for me.

02:18 Like, these two years of building Python projects, of moving to other countries.

02:25 So, yeah.

02:26 Are you up for sharing that with people?

02:27 What are you up to?

02:28 Sorry?

02:28 Are you up for sharing where you've moved to?

02:30 Last time we spoke, you were in Berlin, I believe.

02:33 I was in Germany.

02:34 And now I'm in America.

02:35 So, completely different country.

02:38 Completely different continent.

02:40 Yeah.

02:41 Are you enjoying your time there?

02:42 Yeah.

02:42 So, I like it so much.

02:44 Honestly, I like Germany too.

02:46 So, Germany is a great country.

02:48 But I like different things.

02:50 Yeah, but you're probably looking forward to not freezing cold winters.

02:53 I want to try.

02:54 Honestly, I don't know if I will like it or not.

02:57 But I want to try until just a few years, for example.

03:00 I moved to San Diego, California a long time ago.

03:04 I didn't really enjoy the fact that there was no winters and there was no fall.

03:08 It was just always nice.

03:10 Always.

03:10 Until one day I realized, you know what?

03:12 We just headed out mountain biking in the mountains.

03:15 And the weather was perfect.

03:16 And it was February.

03:17 And we didn't even check if it was going to rain or be nice.

03:21 Because it's always nice.

03:21 You know what?

03:22 That's a good trade-off.

03:23 You could do a lot of cool stuff when you live in places like that.

03:26 So, I'm glad to hear.

03:27 I'm glad to hear.

03:28 You'll have to let us know how it goes.

03:30 And Beanie has been going really well as well.

03:35 Right?

03:35 So, when we first spoke, Beanie was just kind of a new project.

03:39 And the thing that caught my eye about it was two really cool aspects.

03:44 Asynchronous and Pydantic.

03:46 I'm like, oh, those things together plus MongoDB sound pretty awesome.

03:49 And so, it's been really fun to watch it grow over the last two years.

03:53 And it's gaining quite a bit of popularity.

03:55 I don't know.

03:55 It's kind of popular, but not that much.

03:58 As Pydantic itself, as FastAPI.

04:00 But, yeah, still popular in context of MongoDB.

04:02 I think it's a little bit different than a web framework, potentially.

04:05 Right?

04:06 Like, it's hard to say, you know, is it as popular as FastAPI or Flask or something like that.

04:11 Right?

04:12 Because those things, you know, those are the contexts in which this is used.

04:16 But not everyone uses Mongo.

04:17 Not everybody cares about async Mongo.

04:19 All that, right?

04:19 There's a lot of filters down.

04:21 But it's, I think you've done a really great job shepherding this project.

04:24 I think you've been really responsive to people.

04:27 I know I've seen the issues coming back and forth.

04:29 I've seen lots of releases on it.

04:31 And I guess the biggest news is around, yeah, you're welcome.

04:35 I think the biggest news is around, you know, when Pydantic 2 came out.

04:39 That kind of changed so many things.

04:41 You know, I spoke to Samuel Colvin about his plan there.

04:46 I spoke to Sebastián Ramírez from FastAPI about, like, what he was thinking and where that was going.

04:53 And it sounded like it was not too much work for people using Pydantic, but quite a bit of work for people like you that was, like, deep inside of Pydantic.

05:01 Yeah?

05:01 Yeah.

05:02 So, honestly, I have a Discord channel to support Beanie users.

05:06 And there I was talking with other guys, like, probably, I'm sorry, but probably I will not support both versions at the same time.

05:14 Maybe I will have two different branches of Beanie, one supporting V1 and one supporting V2.

05:19 But finally, I ended up, you know, with a single branch.

05:24 And this was very challenging, honestly, because...

05:27 Okay, interesting.

05:28 I didn't realize you were going backwards in that maintainability there for the people who didn't want to move to Pydantic 2.

05:35 Yeah, there are legacy stuff.

05:37 It must support new features for Pydantic 2.0 also, definitely, because it may be...

05:44 Maybe people want to move to Pydantic 2.0, but they could be stuck on other libraries that support only V1, for example.

05:50 And it can go for a while, like, months.

05:53 And even for me, it took, like, three weeks to make it work.

05:59 Just so people know, talkpython.fm and pythonbytes.fm, both of those are based on MongoDB and Beanie.

06:07 And when you came out with the new one, I saw when the release for V2 came out, like, all right, how long until Beanie supports this?

06:15 You know, and I saw that you were, like, right on top of it and working on that.

06:18 That was great.

06:18 And then when I went to upgrade it, it was really easy, right?

06:23 Just I use pip-tools and I use pip compile to just get all the latest versions of the dependencies and update the requirements.

06:29 So then I installed the new one and it wouldn't run, not because of anything that Beanie did,

06:35 but just some changes to Pydantic 2.

06:37 For example, if I had a, let's say there's a database field that was URL and it was an optional string.

06:44 In Pydantic 1, you could say url: Optional[str].

06:50 That's it.

06:50 But there's no default value explicitly set.

06:53 So in Pydantic 2, that's not accepted, right?

06:56 It says, no, no, no.

06:57 If you want it to be none by default, you have to set it to be none explicitly.

07:01 So I had to go through and, like, find all my database documents.

07:04 Basically, anytime there's an optional something, set it equal to none.

07:07 And then that was it.

07:08 That was the upgrade process.
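
The change Michael describes can be sketched as follows. This assumes the `pydantic` package is installed; `EpisodeImplicit`, `EpisodeExplicit`, and `can_build_without_url` are hypothetical names used only for illustration. Under Pydantic 1 both models can be created without a `url`, while under Pydantic 2 the first one raises a validation error because the field has no default.

```python
from typing import Optional

import pydantic
from pydantic import BaseModel, ValidationError


class EpisodeImplicit(BaseModel):
    # No default: Pydantic 1 treated this as None by default,
    # Pydantic 2 treats it as required (None is still a valid value).
    url: Optional[str]


class EpisodeExplicit(BaseModel):
    # Explicit default: optional under both major versions.
    url: Optional[str] = None


def can_build_without_url(model_cls: type) -> bool:
    """True if the model can be created with no arguments."""
    try:
        model_cls()
        return True
    except ValidationError:
        return False


IS_V2_OR_LATER = int(pydantic.VERSION.split(".")[0]) >= 2
```

Writing the `= None` default explicitly, as in `EpisodeExplicit`, behaves the same on both versions, which is why it is a safe first step before upgrading.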

07:10 And now the website runs faster.

07:11 Thank you.

07:12 Welcome.

07:12 Yeah, I invented a middleware, like a few classes and functions that just check if you use Pydantic

07:20 1 or Pydantic 2.

07:22 And based on this, you use a different kind of backends.

07:25 But interface is the same for both.

07:28 So there's a unified interface inside of Beanie.

07:31 So before we get too far down this conversation, give us two quick bits of background information

07:37 here.

07:37 First of all, why MongoDB?

07:39 There's a lot of excitement around things like MySQL, but especially Postgres, relational

07:46 databases. MongoDB's a document database.

07:49 Give us the elevator pitch.

07:50 Why do you like to work with Mongo?

07:52 Honestly, I like to work with all the databases.

07:54 I became a database fan and nerd.

07:57 So I like them all.

07:59 But yeah, MongoDB is a document database.

08:02 And it means the schema, the data scheme is much more flexible than in SQL databases.

08:08 Because in SQL, you use tables, plain tables, while in MongoDB, you use extra documents,

08:14 which could be nested.

08:16 And the level of this nestedness could be really, really high.

08:20 There are some trade-offs based on this.

08:23 The relation system for plain tables could be implemented much simpler than for documents

08:30 because with this flexible structure it's hard to make nice relations.

08:35 But I'd say it's much more useful for if you use nested data structures in your applications,

08:44 it's much simpler to keep this same data structure in your database.

08:49 And this makes all the processes of development much more easy and more simple to understand

08:56 them, I'd say.

08:57 You don't have the so-called object relational impedance mismatch, where it's like, well,

09:02 you break it all apart like this in the database, and you reassemble it into an object hierarchy

09:06 over here.

09:07 And then you do it again in the other way.

09:09 And all that stuff, it's kind of just mirrored the same, right?

09:12 True.

09:12 And I really like MongoDB to make small projects.

09:16 I mean, when I just want to play with something.

09:19 And I play with data structures a lot.

09:21 And using Postgres or MySQL, I have to do a lot of migrations.

09:26 Because when I change the type of field or just the number of fields, I have to do this

09:31 stuff.

09:31 And this is kind of annoying, because I just want to make fun and to play.

09:36 Yes, exactly.

09:36 Exactly.

09:37 For me, it's easy to make MongoDB fast.

09:41 And it's operationally almost trivial, right?

09:45 If I want to add a field to some collection, I just add it to the class and start using it.

09:50 And it just, it appears, you know, it just shows up.

09:52 And you want to add a nested object?

09:54 You just add it.

09:54 And it just, you don't have to keep running migrations and having server downtime and all

09:58 that.

09:59 It's just, it's glorious.

10:00 Okay, so that's the background where people maybe haven't done anything with Mongo.

10:03 What about Beanie?

10:04 What is Beanie?

10:05 Really quick for people.

10:06 We're talking about it a bit.

10:07 But give us the quick rundown on Beanie and why you built it.

10:10 Like there were other things that talked to MongoDB and Python before.

10:13 Yeah.

10:13 So there's a lot of tools.

10:15 There is Mongo Engine, which is nice and which is official.

10:18 Yeah, I like Mongo Engine too.

10:18 Yeah.

10:19 Yeah.

10:19 But one day I was playing again with new technologies and FastAPI was super new at

10:26 time.

10:26 It was like, it wasn't that famous at time, like three years ago.

10:30 And already was super nice.

10:32 And I wanted to play with it before I can use it in my production projects.

10:37 And I found that there is no nice, let's say, connector to MongoDB from FastAPI because

10:45 there was nothing that could support both Pydantic and the asynchronous MongoDB driver, Motor.

10:50 And I decided like, but why?

10:52 I think I can implement it myself.

10:54 Why not?

10:55 And I made a very small, tiny ODM.

10:58 I even thought it would be tiny all the time.

11:01 It was like, you know, it could support only models of the documents.

11:05 It could insert them.

11:06 And all the operations of MongoDB, it wasn't hidden inside of Beanie.

11:13 You had to use MQL, Mongo query language there.

11:17 So I just released this.

11:18 And somehow in one month it got not that popular, but people just came to me and like, I like what

11:26 you did.

11:27 Could you please add this feature and that feature?

11:29 And this part works wrongly.

11:31 So please fix this.

11:33 And I was like, whoa, I didn't know, but I made an open source product.

11:37 Wow.

11:38 Yeah, that's cool.

11:38 Some weird podcaster guy goes, this is great, except for where the index is.

11:42 Yeah, this was like first or maybe second week after I published it.

11:46 And yeah, you came to my, to GitHub issues.

11:49 Could you add indexes?

11:50 And I was like, I forgot about indexes.

11:52 Yeah, I have to add them.

11:54 Yeah, indexes are like database magic.

11:56 They're just awesome.

11:57 So yeah, and this was kind of playground project.

12:00 And now this is nice.

12:01 So damn, I'm going to be.

12:03 It's been really, really reliable for all the work that we've been doing.

12:06 So good work on that.

12:07 Let's see.

12:08 I guess there's two angles to go here.

12:11 One, if we go over the releases, the big release is this 1.21.0, which says Pydantic V2 support.

12:20 So I want to spend a lot of time talking to you about like, what was your experience going from Pydantic 1 to 2?

12:25 Because as you said, there's the really famous ones like FastAPI and others.

12:30 But there's many, many projects out there that use Pydantic.

12:34 I wonder if we could get it to show it.

12:36 So, you know, GitHub has that feature where it shows used by 229,000 projects.

12:43 228,826 projects.

12:46 Yeah, they're all the projects with Pydantic now, right?

12:48 Exactly.

12:49 Just on GitHub, use Pydantic.

12:51 So, you know, many of them still haven't necessarily done this work to move to 2.

12:57 And so I want to make that the focus of our conversation.

12:59 However, since we had a nice episode on Beanie before, before we get into that aspect,

13:04 let's just do a catch up on like what's happened with Beanie in the last two years.

13:08 What are some of the cool new features and things that you want to highlight for folks?

13:12 I added a lot of features, honestly.

13:14 But there were a few really big.

13:17 I really like one.

13:18 I didn't know that it could be needed for anybody.

13:21 But I was continuously asked about, please add, add this.

13:25 And I didn't want to add.

13:26 But finally, I added.

13:28 And now I love this so much.

13:30 This is called inheritance.

13:32 You can inherit documents.

13:35 So you can make a big inheritance structure, like a vehicle, then from vehicle you can inherit bicycle, bike, car, and from car inherit something else.

13:47 And the thing is, everything will be stored in the same collection, in the same MongoDB collection.

13:54 And if you want to make statistics over all the types, you can do it.

13:59 And when you need to operate only with a type or subtype, you can do it as well.

14:05 You can choose what you want to do.

14:07 And I know this feature is used in productions now in many projects.

14:12 And this is nice.

14:13 Yeah, this is really cool.

14:14 So when I first heard about it, my first impression was, okay, so instead of deriving from beanie.Document, you create some class that has some common features, maybe properties and validation and stuff.

14:27 And then other documents can derive from it.

14:30 So, like you said, bicycle versus car.

14:33 But in my mind, those would still go into different collections, right?

14:37 They would go in different collections.

14:38 And that would just be a simpler way to have the code that would have a significant bit of reuse.

14:42 But the fact that they all go into the same collection and the documents are kind of supersets of each other, I think that's pretty interesting.

14:49 I hadn't really thought about how I'd use that.

14:51 This portion of Talk Python To Me is brought to you by Studio 3T.

14:57 Do you use MongoDB for your apps?

14:59 As you may know, I'm a big fan of Mongo and it powers all the Talk Python web apps and APIs.

15:04 I recently created a brand new course called MongoDB with Async Python.

15:08 This course is an end-to-end journey on getting fully up to speed with Mongo and Python.

15:13 When writing this course, I had to choose a GUI query and management tool to use and recommend.

15:18 I chose Studio 3T.

15:20 It strikes a great balance between being easy to use, very functional and remaining true to the native MongoDB shell CLI experience.

15:28 That's why I'm really happy that Studio 3T has joined the show as a sponsor.

15:32 Their IDE gives you full visual control of your data.

15:36 Plus, with a drag-and-drop visual query builder and a multi-language code generator, new users will find they're up to speed in no time.

15:43 For your team members who don't know MongoDB query syntax but are familiar with SQL,

15:47 they can even query MongoDB directly with Studio 3T using SQL and migrate tabular data from relational databases into MongoDB documents.

15:57 Recently, Studio 3T has made it even easier to collaborate, too.

16:01 Their brand-new team-sharing feature allows you to drag-and-drop queries, scripts, and connections into permission-based shared folders.

16:08 Save days of onboarding team members and tune queries faster than ever.

16:12 Try Studio 3T today by visiting talkpython.fm/studio2023.

16:18 The links in your podcast player show notes.

16:20 And download the 30-day trial for free.

16:24 Studio 3T.

16:24 It's the MongoDB management tool I use for talkpython.

16:27 You can do even two different...

16:31 If you want to count all the vehicles, you can do it without making requests to each of the collections,

16:37 because everything is in the single collection.

16:39 And you can do this with different fields there as well.

16:43 And you can make aggregations over all of them.

16:45 And even over cars separately.

16:48 This is nice.

16:49 And yeah, I like it.

16:50 Does the record have something, some kind of indicator of what...

16:53 Yeah, inside there is...

16:55 What class it is.

16:56 It's like, I'm a car class.

16:57 I'm a bike class.

16:58 Yeah, yeah.

16:58 You can specify...

16:59 Originally, it is called class name or something like this with underscore.

17:04 But you can specify which fields would work for this.

17:07 So you can specify the name of this field.

17:09 And in this field, it stores not only the name of the class, but the structure itself.

17:14 Like for bus, it will keep vehicle, car, bus.

17:19 Yeah.

17:19 So in this field.

17:20 Okay.

17:21 That's why it will be able to...

17:22 Even on the database level, it will understand the hierarchy of this object.

17:27 Right.

17:27 And so if you want to do data science-y things, you could use the aggregation framework to run a bunch of those types of queries on it.

17:34 Right.

17:34 Yeah.

17:34 And it's better to do all this stuff on the database layer because Python is not that fast with iterations.

17:41 While MongoDB is super fast.

17:43 Yeah.

17:43 And plus, you know, you don't need necessarily to pull all the data back just to read some field or whatever.

17:49 Right.

17:50 So, yeah, that's really cool.

17:51 This is not what I expected when I first heard about it, but this is quite cool.

17:55 First time I heard this about this feature, I was like, nobody wants this.

18:00 Why do you try to...

18:02 But then I found how flexible this is getting to be.

18:05 And so, yeah, this is nice.

18:07 The reason I guess it's a surprise to me is it leverages an aspect of MongoDB that's in document databases in general that are interesting, but that I don't find myself using very much is in that you don't have to have...

18:19 There's not a real structured schema.

18:21 And a lot of people say that and kind of get a sense for it.

18:24 For me, that's always meant like, well, the database doesn't control the schema, but my code does.

18:28 And that's probably going to be the same.

18:30 Right.

18:30 So there's kind of an implicit static schema at any given time that matches the code.

18:36 But you can do things like put different records into the same collection.

18:41 You wouldn't do it just like, well, here's a user and here's a blog post and just put them in the same collection.

18:46 That would be insane.

18:48 But there's, you know, if you have this commonality of this base class, I can see why you might do this.

18:52 It's interesting.

18:53 Yeah.

18:53 And in this context, blog post or video post could be different by structure, but could be stored in the single collection.

18:59 One other thing on the page here that we could maybe talk about is link.

19:02 Want to tell people about what Link is?

19:04 MongoDB is a non-relational database, but you can force it to work with relations.

19:10 There is even a data type in MongoDB called DBRef, database reference, which is used to work with this Link type in Beanie.

19:21 So in Beanie, with this generic type Link, you can put inside of the link any document type.

19:26 It can make relations based on this link.

19:29 So you can fetch linked documents from other collections using just standard find operations in Beanie.

19:36 This is, there is kind of magic under the hood.

19:39 Instead of using MongoDB find operations, I use the aggregation framework of MongoDB, but it is hidden under the hood of Beanie.

19:48 And so, yeah, and you can use relations then.

19:51 And the nice thing about new features, because link already was implemented, I think, two years ago.

19:57 But again, I don't come up with my own features, I think.

20:02 Every feature somebody asked me for.

20:04 And I was asked for another feature to make backtracking, back references for these links.

20:11 Like, if you have a link from one document to another, another document should be able to have to fetch this relation backwards.

20:19 So in this case, you've got an owner which has a list of vehicles.

20:23 But given a vehicle, you would like to ask who is its owner, right?

20:26 True.

20:27 Okay.

20:27 And I implemented this.

20:28 I named it BackLink, and it can just fetch it in the reverse direction.

20:34 That's cool.

20:35 And the nice thing about this is it only uses the magic of aggregations, and it doesn't store anything for backlinks fields in the collection itself.

20:45 Okay.

20:45 In the MongoDB document, you never will find this field for backlink, because everything you need is on the link.

20:52 And this is nice.
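
The key property Roman describes is that the reverse relation is computed at query time and never stored on the target document. A plain-Python analogy, with lists standing in for collections, is sketched below. Beanie does this with MongoDB aggregations, not a Python loop, and `owners`, `vehicles`, `vehicle_ids`, and `owners_of` are hypothetical names.

```python
from typing import Any, Dict, List

# Only the owner side stores the relation (the forward link).
owners: List[Dict[str, Any]] = [
    {"_id": "o1", "name": "Ada", "vehicle_ids": ["v1", "v2"]},
    {"_id": "o2", "name": "Grace", "vehicle_ids": ["v3"]},
]

# Vehicle records hold no owner field at all.
vehicles: List[Dict[str, Any]] = [
    {"_id": "v1", "model": "Bus"},
    {"_id": "v2", "model": "Car"},
    {"_id": "v3", "model": "Bicycle"},
]


def owners_of(vehicle_id: str, owner_docs: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Reverse lookup derived from the forward link at query time."""
    return [owner for owner in owner_docs if vehicle_id in owner["vehicle_ids"]]


owner_names = [owner["name"] for owner in owners_of("v2", owners)]
```

Because the relation lives in one place, there is no second copy to keep in sync when an owner's vehicle list changes.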

20:53 Yeah, that is really cool.

20:54 In the queries, in the find statement, you add fetch_links=True.

20:59 And that's kind of like a join.

21:00 Is that how that works?

21:01 There are options.

21:02 Eager versus lazy loading type of thing.

21:04 When you find without this option, by default it is false.

21:07 You will see in the field, in the linked field, you will see only the link itself.

21:11 It will be a link with the ID inside of the object.

21:15 And you can fetch it manually, like with the .fetch() method, and it will work.

21:22 But when you put fetch_links=True, it will fetch everything automatically on the database layer.

21:28 And yeah, it will return all the linked documents.

21:30 That's really cool.

21:31 Other one?

21:31 Lazy parsing.

21:33 I mean, we all want to be lazy.

21:34 But what are we doing here?

21:35 What is this one?

21:36 Yeah, so this is, in some cases, Beanie could be used for really high load projects.

21:42 And sometimes you need to fetch like thousands of documents in a moment.

21:47 And the nature of Pydantic is synchronous, not asynchronous, because it uses CPU bound operations there.

21:55 And when you fetch hundreds of documents, even thousands of documents, you completely block your system.

22:02 Because a lot of loops to parse data, to validate data, and etc.

22:06 And if you use it in asynchronous framework, this is not behavior that you like to have.

22:12 And to fix this problem.

22:14 Maybe even in a synchronous framework, it might be the behavior you don't want to have as well.

22:18 Yeah.

22:19 Right?

22:19 Even then.

22:19 Yeah, yeah.

22:20 This is true.

22:20 But even in asynchronous, you don't accept.

22:23 Yeah, exactly.

22:24 But it's totally reasonable to think, well, I'm going to do a query against this document.

22:28 And it's got some nested stuff.

22:30 Maybe it's a big sort of complex one.

22:32 But you really just want three fields in this case.

22:35 Now, you can use projections, right?

22:38 Like that is the purpose of projections.

22:39 But it limits the flexibility.

22:42 Because you only have those fields that were projected.

22:44 And in different situations, maybe you don't really know what parts you're going to use, right?

22:50 You can have a complicated approach.

22:51 Yeah.

22:52 So this kind of lets the consumer of the query use only what they need, right?

22:56 Yeah.

22:56 And so when you use this lazy parsing, Pydantic doesn't parse anything on the initial call.

23:02 Like you receive everything and store everything in a raw format in dictionaries, in Python dictionaries there.

23:07 And when you access any field of the document, it just parses it using Pydantic tools, the way Pydantic does it internally.
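A minimal sketch of the lazy parsing idea, using only the standard library. It is not Beanie's code: the `LazyDocument` class and its `_parsers` mapping are hypothetical, and plain callables such as `int` stand in for the Pydantic tooling mentioned in the conversation. It shows the raw dictionary being kept as-is and each field being converted only when it is first accessed.

```python
from typing import Any, Callable, Dict


class LazyDocument:
    """Keeps the raw dict and converts a field only on first access."""

    # Hypothetical field -> parser mapping; a real model would derive it from type hints.
    _parsers: Dict[str, Callable[[Any], Any]] = {
        "title": str,
        "year": int,
        "rating": float,
    }

    def __init__(self, raw: Dict[str, Any]) -> None:
        self._raw = raw                      # untouched data as received
        self._parsed: Dict[str, Any] = {}    # cache of fields parsed so far

    def __getattr__(self, name: str) -> Any:
        # __getattr__ runs only when normal lookup fails, i.e. for document fields.
        if name.startswith("_") or name not in self._parsers:
            raise AttributeError(name)
        if name not in self._parsed:
            self._parsed[name] = self._parsers[name](self._raw[name])
        return self._parsed[name]


doc = LazyDocument({"title": "Dune", "year": "1965", "rating": "not-a-number"})
print(doc.year)  # only "year" is parsed; the bad "rating" value is never touched
```

The trade-off discussed later in the episode is visible here: every access goes through Python-level code, so touching all fields this way costs more than validating the whole document once.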

23:16 So is this lazy parse primarily implemented by Pydantic?

23:20 Or is this something you've done on top of Pydantic?

23:22 I implemented my own library for this.

23:24 Like it's on top of Pydantic for sure.

23:26 But in Pydantic there are tools for this, and they are different in v1 and v2.

23:31 So the name of this tool is different, but you can parse something into a type, not into a BaseModel, but just into a type.

23:38 You can provide a type and the value to be parsed into this type and it can parse it.

23:44 So I use this.

23:45 And additionally, I had to handle all the validator stuff because this is a very important part of Pydantic and you have to be able to validate things.

23:54 And with lazy parsing, if it sees that there are root validators, then it will validate it against any field.

24:01 Or if there is a field-specific validator, it will validate it against the field if this field was called.

24:07 So yeah, I had to do some magic with Pydantic there.

24:11 But it, especially with Pydantic V1, which was slower than V2, significantly slower, it was very helpful for people who have to fetch really big amounts of documents and to not block their pipeline in this step.

24:28 This is a nice feature also.

24:30 Yeah, I think this is a really nice feature.

24:32 What's the harm?

24:33 And does it make certain things slower if you're going to use every field or, you know, why not just turn this on all the time, right?

24:41 That's my question.

24:42 Yes, for sure.

24:42 There are trade-offs.

24:44 If you use this all the time, it would be around twice as slow as plain Pydantic validation if you use all the fields.

24:54 If you use just a few fields, it would be faster.

24:57 But I didn't turn it on by default because in the general case, when people just want to fetch 10, maybe 20 documents and use all the fields of them, it would be slower.

25:06 That's kind of what I expected.

25:08 But if you've got a really complicated document and you only use a few fields here and there, then it seems like a real win.

25:13 But you're going to use everything anyway.

25:15 And especially for Pydantic V2, when all the validation happens on the Rust layer.

25:21 But here I cannot do this because I cannot, you know, I cannot put the logic into the Rust layer because there is no Rust layer for Beanie.

25:27 And if you will fetch all the fields of the documents using this lazy parsing, everything will happen on the Python layer instead of the Rust layer.

25:35 And it would be as slow as V1.

25:37 So we will not see benefits.

25:39 It's interesting, even in the V1 version of Pydantic, but now with Pydantic 2 being roughly 22 times faster, that all of a sudden you want to let Pydantic do its thing if it can.

25:49 Yeah, true.

25:50 Speaking of Pydantic getting some speed up from Rust, is any part of Beanie some other runtime compilation story than pure Python?

26:00 Is there like a Cython thing or a Numba or any of those?

26:04 The thing about the speed of Beanie is, Beanie is not about, so as Pydantic is very CPU bound, all the stuff happens on the CPU layer.

26:15 While Beanie uses mostly input-output operations because it interacts with the database.

26:21 And for this, I think, just the default async/await pattern of Python works the best.

26:28 And all the time, if there are any delays, it's most likely about this interaction process between application and MongoDB.

26:37 It could be network, it could be just delay from the query and et cetera, but not Beanie because Beanie doesn't compute anything.

26:45 Beanie doesn't do very much, I guess, right?

26:47 It coordinates Motor, the asynchronous engine from MongoDB, and it coordinates Pydantic.

26:54 It kind of clicks those together using async and await, a nice query API that you put together.

27:00 And so, right, it's more about letting Motor be fast and letting Pydantic be fast and getting out of the way, I suppose.

27:07 This is true, so yeah.

27:08 Beanie is mostly about making some magic and converting Python syntax into MongoDB syntax.

27:14 And thank you for that.

27:15 That's really nice.

27:16 It's super nice the way that the syntax works, right?

27:19 The fact that you're able to use native operators, for example, right?

27:23 You do the queries.

27:24 I really like that.

27:25 Yeah, it was.

27:25 It is.

27:26 I don't like when somebody uses this in production applications.

27:29 I mean, when, because it's hard to find problems.

27:32 But when we are talking about libraries, this is really nice when it supports Python syntax.

27:37 So that's why I decided to implement it.

27:39 People shouldn't get too crazy with overloading their own operators.

27:43 But as an API, it's really good.

27:44 So for example, in this case, you have a sample document and it has a number.

27:47 And so the query is Sample.find.

27:49 And then the argument is Sample.number == 10, right?

27:54 Which is exactly the way you would do it in an if statement.
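How a comparison like this can turn into a database filter is easy to sketch with operator overloading. The `Field` class below is hypothetical and far simpler than what a real ODM does; it only shows `==` and `>` returning MongoDB-style filter dictionaries instead of booleans.

```python
from typing import Any, Dict


class Field:
    """Hypothetical stand-in for a model attribute that builds query filters."""

    def __init__(self, name: str) -> None:
        self.name = name

    def __eq__(self, other: Any) -> Dict[str, Any]:  # type: ignore[override]
        # Equality becomes a plain {"field": value} filter.
        return {self.name: other}

    def __gt__(self, other: Any) -> Dict[str, Any]:
        # Greater-than becomes the $gt query operator.
        return {self.name: {"$gt": other}}


class Sample:
    number = Field("number")


equal_filter = Sample.number == 10
greater_filter = Sample.number > 5
print(equal_filter, greater_filter)
```

The resulting dictionaries are what would be handed to the driver, which is why the query reads like an ordinary `if` condition while still running on the server.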

27:57 You know, contrast that with other languages or other frameworks such as MongoEngine, which I used previously and is nice.

28:04 But you would say sample.find and then just number_eq =10, right?

28:10 And you're like, I know what that means.

28:13 But it's not speaking to me the same way as if I was just doing a raw database query or writing pure Python, right?

28:20 Yeah, it sounds like you have to learn another one of English, right?

28:23 Exactly.

28:23 You've got to like, if you want to do a nested thing, it's the double underscore, you know, it'd be like number double underscore item underscore eq =10.

28:32 You're like, oh, my goodness.

28:34 So yeah, that's kind of tricky.

28:35 Definitely true.

28:36 Cool.

28:36 Okay, well, let's talk about this upgrading story for the 229,000 other folks out there who maybe haven't done this.

28:44 So a while ago, back in 2022, almost exactly to the day, a year ago, I had Samuel Colvin on to talk about the plan to move to Pydantic v2, why he did it.

28:56 It was really interesting.

28:57 So that's worth listening to.

28:58 If people want to learn more, as well as I had Sebastian Ramirez and Samuel Colvin on to talk about it live at PyCon.

29:04 And that was fun too.

29:05 So if people want background on what is the story of Pydantic 2, they can check that out.

29:10 But the big announcement was on June 30th, a couple months ago, I guess that's a month and a half ago, Pydantic v2 is here after just one year of hard work.

29:19 That was a huge project for the Pydantic folks, which, you know, they've done a great job on it.

29:25 And I guess the big takeaway really is that Pydantic v2 is quite a bit faster.

29:32 Maybe you could speak to that.

29:33 And it's mostly but not exactly the same because, as you already pointed out, the core of it is rewritten in Rust for performance reasons.

29:40 I've made a lot of tests.

29:41 It is much faster when you talk about validation of the models themselves, especially when you validate really nested and complicated models.

29:52 Then it's much, much faster than the Python, the v1 implementation.

29:56 While with Beanie, you can still see a significant performance upgrade, but not that much, because Beanie works with MongoDB.

30:06 And there is this input/output operation, which is slow and which cannot be improved just by decreasing processing time.

30:14 Sure.

30:15 When we are talking about simple documents, it's not that visible, like 10, sometimes 20% faster.

30:23 But when we talk about nested documents, when there are nested dictionaries or nested lists of dictionaries, then it's much, much faster.

30:32 In my tests, it is twice as fast, v2 against v1.

30:37 And I was super impressed by this, because I was expecting that it would not be as much faster as Pydantic v2 itself because of these input/output operations.

30:49 But yeah, this is crazy.

30:51 Right.

30:52 Because it's not just the parsing that Beanie does, right?

30:55 Beanie sends the message over to Mongo.

30:58 The network does some stuff.

30:59 Mongo does its thing, sends it back, serialized as BSON.

31:03 And then it's got to deserialize into objects somehow.

31:07 And then the Pydantic part kicks in.

31:09 Right.

31:09 And plus all the extra bits you've already talked about.

31:11 Right.

31:12 So it, it can only affect that part.

31:14 But I think it, your example here shows, you know, standard computer science answer.

31:19 It depends, right?

31:20 As a, as a faster, it depends.

31:22 I would guess the more complicated your document is, the bigger bonus you get, which you've already said.

31:28 And the more documents you return.

31:31 So if I return one record from the database, it's got five fields.

31:35 The amount of that processing that is Pydantic is small.

31:39 But if I return a thousand records and there's all that serialization, like, you know, the database

31:43 has kind of done more or less the same amount of work.

31:46 It's streamed the stuff back.

31:47 But at this, when it gets to Python, it's like, whoa, I've got a lot of stuff to validate and parse.

31:52 And I suspect that also matters how many records are coming back.

31:54 Yeah, this is true. That's why lazy parsing was implemented for

31:59 v1. And now it's not necessary for many cases, only for very extreme high load.

32:06 That's really cool.

32:07 What makes me smile from this is the more Pydantic you use, the more awesome this upgrade to 2 becomes.

32:15 And it's like I said, it's almost no work.

32:17 Technically, I had to set all the optionals to default to None.

32:20 That's not a Beanie thing.

32:21 That's a Pydantic thing, but it's not a big deal.

32:23 So upgrading basically means all the frameworks you use that are

32:28 built on Pydantic get faster.

32:30 So for me, when I upgraded the website, it went about, I don't know, 40% faster or something like

32:35 that, which is a huge speed up for very little work.

32:39 It's already really fast, right?

32:40 If you go to, you know, the podcast, you pull up an episode page.

32:43 It's like 30 milliseconds.

32:44 You go to the courses and you pull up a video to play.

32:48 It's got many queries.

32:49 It does, but it's probably 20 milliseconds.

32:51 So now it's 15 milliseconds or 14 milliseconds.

32:54 But still to get that much of a speed up and do basically no work on my part.

32:59 That's awesome.

33:00 And I'm not using a framework like FastAPI where the other side of that story is also Pydantic.

33:06 So if you're using FastAPI and Beanie, which I think is probably a common combination,

33:11 both the database side that gets you the Pydantic things is faster.

33:14 And then the outbound and inbound processing that the API itself does is a lot faster because

33:20 of Pydantic.

33:21 And so you get this kind of multiplicative doubling of the speed on both ends, right?

33:26 The numbers you just told about your website is like this time is only about this communication.

33:33 I mean, how bits are going from one computer to another.

33:38 There is no computation there probably.

33:40 It's super, super impressive.

33:42 You know, people say, well, Python is not fast enough.

33:45 That may be true for a few very rare, you know, extremely high load situations, but I would bet

33:52 it's fast enough for most people.

33:54 You know, if your website end to end processing is responding in 15 milliseconds, like, you know,

34:00 you've got other parts of your system like CDNs and an amount of JavaScript you send to worry about,

34:05 not your, not your, pretty awesome.

34:07 Because of indexes also not, don't forget your indexes.

34:10 Yeah.

34:11 Each time I get a request like "my things are slow and probably I have

34:17 to switch from Python to something else,"

34:19 usually the problem is about the data model, not about the processing because, you know, people could

34:24 store things a bit wrongly, a bit too nested or less nested than it should be.

34:30 And yeah, indexes.

34:31 Okay.

34:32 Yeah.

34:32 Indexes.

34:33 Or you've done a query where you return a hundred things of huge documents and you only want,

34:38 like say the title and last updated, but you don't do a projection.

34:42 And so you're sending way too much data.

34:43 It's like select star instead of select title, comma, date, you know, something like that.

34:47 Sometimes it also works to make a projection on the database layer,

34:53 like to find maximum elements or minimum elements, and to do this

34:58 stuff on the database is better than in Python, because in Python, you have to iterate over

35:02 the objects to find things, which is not that efficient.

35:06 So we just have to, you got to pull them all back, deserialize them and validate them and then iterate over

35:11 it rather than just let that happen on just the fields in the database.

35:14 That's, that's a good point.
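The two ideas in this exchange, projecting only the needed fields and pushing a max/min computation to the database, can be written down as plain MongoDB query documents. This is a sketch under assumptions: the field names (`title`, `last_updated`, `price`) and the `project` helper are hypothetical, and the helper only imitates an inclusion projection locally so the example runs without a server.

```python
# Projection: ask the server for two fields only (like SELECT title, last_updated).
projection = {"title": 1, "last_updated": 1, "_id": 0}

# Aggregation pipeline: let the server compute the maximum instead of
# pulling every document back and iterating in Python.
max_price_pipeline = [
    {"$group": {"_id": None, "max_price": {"$max": "$price"}}},
]


def project(doc, fields):
    """Local stand-in for an inclusion projection: keep only the named fields."""
    return {key: doc[key] for key in fields if key in doc}


episode = {"title": "Beanie", "last_updated": "2023-08-01", "transcript": "x" * 10_000}
slim = project(episode, ["title", "last_updated"])
print(slim)
```

With a driver, the projection would typically be passed alongside the filter in a find call and the pipeline to an aggregate call; the large `transcript` field then never crosses the network or reaches the validation step.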

35:15 And maybe the query is just bad as well.

35:16 Okay.

35:17 So what was your experience?

35:19 You know, they, they shipped the migration guide about the things that you've got to do.

35:23 And if you look at the scroll bar here, the migration guide is large.

35:28 I don't know how many pages.

35:29 Let's see if I press print, if it'll tell me how many pages.

35:33 No, sadly, it doesn't want to tell me how many pages it would print, but there are many pages here, I guess, if I were to print this out,

35:39 was this a daunting experience?

35:41 How did it go for you?

35:42 It was very.

35:43 So at first I just installed Pydantic 2.

35:47 Or you're like, let's see if it just works.

35:48 Come on.

35:49 And just run tests and everything was.

35:52 Firstly, it was like only one error.

35:56 Nothing can work.

35:57 I don't run any tests.

35:59 So one here.

36:00 Yeah.

36:01 I handled this and then each test was red, everything.

36:05 It was really interesting and challenging.

36:07 Interesting because it's kind of a real computer science problem sometimes there.

36:12 So Pydantic moved a lot of logic to the Rust layer and it got hidden for me as a user of Pydantic.

36:21 For example, there is in Python, there is a thing called forward ref.

36:26 What is it?

36:27 Say you have two classes in a single module, for example, and in one class you have a field of the second class.

36:33 And in the second class, you have a field of the type of the first class.

36:37 You cannot just put these names without any magic.

36:40 You can use `from __future__ import annotations`, or you can just write it as a string instead of the class itself.

36:46 I see.

36:47 But then Python will understand it as a forward ref, forward reference for another class.

36:52 And Pydantic can resolve it and make it an actual class finally in the base model.

36:59 And in V1, this mechanism was implemented in Python and I could use it and I could use the results of this.

37:06 While in Pydantic V2, everything is in the Rust layer.

37:09 And when it updates this reference, it updates inside of the Rust and I cannot see the actual class finally.

37:16 And I had to implement my own resolver there.
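For readers who have not met forward references, here is a small standard-library sketch of the problem being described. It is not Roman's resolver: the `Owner` and `Vehicle` classes are hypothetical, and `typing.get_type_hints` stands in for whatever resolution logic a library implements. It shows string annotations being turned back into real classes once both classes exist.

```python
from typing import List, Optional, get_type_hints


class Owner:
    # "Vehicle" is not defined yet, so it is written as a string (a forward reference).
    vehicles: List["Vehicle"]


class Vehicle:
    owner: Optional["Owner"]


# Names the resolver is allowed to look up; passed explicitly so the
# example does not depend on which module it runs in.
namespace = {"Owner": Owner, "Vehicle": Vehicle}

owner_hints = get_type_hints(Owner, localns=namespace)
vehicle_hints = get_type_hints(Vehicle, localns=namespace)
print(owner_hints["vehicles"], vehicle_hints["owner"])
```

A link or backlink field needs exactly this resolved class, because without it there is no way to know which collection the query should target.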

37:18 And there are a few things like this.

37:20 Another big change was about...

37:23 That does not sound easy.

37:25 Yeah.

37:26 I was like, so will I?

37:28 Oh no.

37:28 Am I going to do this?

37:29 Yes, exactly.

37:31 Exactly.

37:32 And, you know, for people who maybe haven't played with this, it's really important.

37:37 Because Pydantic and hence Beanie depends on the types, right?

37:42 The parsing and the validation and all of those things, you have to know exactly what type a thing is, right?

37:48 And so if you lose access to the forward reference resolution, that's going to be bad.

37:53 Yeah.

37:54 Yeah.

37:54 For example, in links or in backward links, if you don't clearly understand which document is linked to this document,

38:01 you cannot build a query for this.

38:03 So yeah.

38:04 And I have made this resolver.

38:05 Honestly, I just was reading Pydantic v1 code and was...

38:09 Hold on.

38:10 I didn't copy paste, but I was using nearly the same algorithm.

38:14 And another big feature was about how validation of the custom types happens.

38:20 Yeah.

38:21 And `__get_pydantic_core_schema__`.

38:23 So everything is in the schema inside of the Rust layer now.

38:26 And you can write instructions in Python, which would be imported into this Rust layer and would be called from there.

38:35 That's why the whole syntax of this completely changed.

38:40 Completely.

38:40 And that's why I was thinking like, but so how can I have two completely different syntaxes for the same thing inside of the same class?

38:49 And I was thinking like, maybe I have to switch branches now and have Beanie V2 after Pydantic V2 and Beanie V1 and support all the new features in both versions.

39:02 And it was, oh no, it will be a nightmare.

39:04 You're already busy with one project.

39:06 Do you need...

39:06 Because I already have Bunnet.

39:07 Yeah.

39:08 Do you want two projects?

39:09 Yeah.

39:09 And I already have Bunnet, which is a synchronous version of Beanie, which also would be split into two then.

39:15 And I will have four projects, which is too crazy.

39:19 Yeah.

39:20 No kidding.

39:21 I can't split myself.

39:22 This is a problem.

39:23 And yeah, finally, finally, finally, I found...

39:26 I honestly, I just went to FastAPI code and I was reading how they deal with this.

39:31 And like, nice idea.

39:33 I will do the same.

39:34 Thank you guys.

39:35 And that's the power of open source.

39:38 Yeah, it is.

39:40 I feel like Sebastian and FastAPI are kind of examples for a lot of different projects and different people.

39:45 You know, people look to them for kind of an example.

39:49 Yeah, this is true.

39:49 And so, and finally, finally, I solved all the problems.

39:53 It took me to solve all the problems.

39:55 It took me like one, maybe one and a half weeks.

39:58 But then I published a beta version and there were performance problems with the beta version.

40:03 There were some corner cases that I didn't catch and community found them.

40:09 And this is great also about open sourcing, because honestly, I would not be able to find

40:14 all of these problems myself for this short period of time.

40:17 Definitely.

40:18 I guess how much of this is at runtime and how much of it, I guess it's all at runtime,

40:23 but how much of this is startup versus kind of once the app is up and running.

40:27 So, you know, you've got your Beanie init code where you pass the Motor async client over and you pass the models.

40:34 And it's got to like verify that they all click together correctly.

40:37 You know, they all have the settings that say, you know, what database they go to and the indexes are set up correctly and whatever else is in there.

40:45 And then once I imagine once that gets processed, it kind of just knows it and runs.

40:50 So how much of this stuff that you're talking about was kind of the setup, get things working and how much of it is happening on every query, every insert?

40:59 Most of this was about runtime of about every query and every, not even this way.

41:04 Not even this way.

41:05 Like how do I set up the document itself?

41:08 How do I set up validators?

41:10 Because I also have validators in the documents to make things simpler and this index changed and et cetera.

41:17 So yeah, for example, the config: there was a nested class called Config before, in Pydantic v1.

41:25 And now this is a field called model_config, which is, you see, a completely different interface again, because this is not a class.

41:32 This is just a field now.

41:33 And I was using and still use this config stuff.

41:37 And I had to not only switch to the new syntax, but also support the Config class if you use Pydantic v1.

41:43 And that's why inside of my classes, I have conditions for which field I want to define based on the version of Pydantic.

41:51 And yeah, most of the changes were about this, the setup of the documents and the setup of the types, but you use it not on the initialization layer, but at runtime.
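The "conditions inside of my classes" pattern can be sketched with plain Python classes. This is an illustration only: `build_document_class` and the `is_v2` flag are hypothetical, the classes are not Pydantic models, and the two option names are just examples of a v1-style and a v2-style setting. It shows one class body defining either a nested `Config` class or a `model_config` field depending on a version flag.

```python
def build_document_class(is_v2: bool):
    """Return a class whose config attribute depends on the version flag."""

    class Document:
        if is_v2:
            # v2 style: configuration is a plain class-level field.
            model_config = {"populate_by_name": True}
        else:
            # v1 style: configuration lives in a nested class.
            class Config:
                allow_population_by_field_name = True

    return Document


v1_document = build_document_class(is_v2=False)
v2_document = build_document_class(is_v2=True)
print(hasattr(v1_document, "Config"), hasattr(v2_document, "model_config"))
```

In a library the flag would be computed once from the installed version rather than passed in, so user-facing classes look the same on both versions.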

42:03 Yeah.

42:03 All these things.

42:04 You also talked about the performance story.

42:07 Do you do any profiling or have any tools like that?

42:12 Honestly, I didn't.

42:13 So there is cProfile and other tools to do this stuff.

42:19 I was measuring using just time, start, time, end, but I was doing this for, for different parts of the code, just to see what's happening there and here.
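The start/end timing approach mentioned here can be wrapped in a small helper so different parts of the code are measured the same way. This is a generic sketch, not code from Beanie: the `timed` context manager, the `timings` dictionary, and the toy workload are all hypothetical.

```python
import time
from contextlib import contextmanager

timings = {}  # label -> elapsed seconds


@contextmanager
def timed(label):
    """Record how long the enclosed block takes under the given label."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[label] = time.perf_counter() - start


# Toy workload standing in for "parse" and "process" steps.
with timed("parse"):
    parsed = [int(s) for s in ["1", "2", "3"] * 1000]
with timed("sum"):
    total = sum(parsed)

print(sorted(timings))
```

Comparing the labeled durations before and after a change is often enough to spot which step regressed, without setting up a full profiler.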

42:29 And honestly, it was when I faced a performance problem.

42:33 And this was because there are some methods in Pydantic v2 that they kept but marked as deprecated.

42:41 They kept them from v1.

42:42 In some cases, I just didn't switch them to the new versions.

42:46 And this was the performance problem.

42:48 And when I found all the places where I use deprecated methods, then everything, first of all, then.

42:53 You switch it to the new, more intended one.

42:56 And that's got the, you know, fully optimized version or something like that.
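One way to hunt for leftover deprecated calls is to let Python's warnings machinery report them. This sketch assumes the library flags its old methods with `DeprecationWarning` (or a subclass); `old_dump` and `new_dump` are hypothetical functions standing in for an old-style and a new-style method.

```python
import warnings


def old_dump(data):
    """Hypothetical deprecated helper standing in for an old-style method."""
    warnings.warn("old_dump is deprecated; use new_dump", DeprecationWarning, stacklevel=2)
    return dict(data)


def new_dump(data):
    """Hypothetical replacement that emits no warning."""
    return dict(data)


with warnings.catch_warnings(record=True) as caught:
    # Make sure every deprecation is reported, not just the first one.
    warnings.simplefilter("always", DeprecationWarning)
    old_dump({"a": 1})
    new_dump({"a": 1})

deprecated_calls = [
    str(item.message) for item in caught if issubclass(item.category, DeprecationWarning)
]
print(deprecated_calls)
```

Running a test suite with deprecation warnings turned into errors is a common variant of the same idea, since each remaining old call then fails loudly.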

43:00 Yeah.

43:00 Yeah.

43:01 Yeah.

43:01 True.

43:01 Because Beanie is working with a lot of internal things of Pydantic and it uses them very heavily.

43:08 And sometimes I just don't remember that in this specific part, there is something internal to Pydantic, and I use this also.

43:18 And so I had to check everything, and the Pydantic codebase is big already.

43:22 So it's hard to keep everything in mind.

43:24 Yeah.

43:24 I feel like that's the challenge for people like you and FastAPI and others.

43:29 You have your code way deeper in the internals of Pydantic than people who just consume FastAPI or consume Beanie like I do.

43:37 This is very interesting.

43:39 Like, I mean, this is a true computer science problem.

43:41 Like when you have to swap interfaces and you don't even know where all these interfaces are used and you have to detect them.

43:49 So yeah, this is nice.

43:50 It's super interesting.

43:51 Yeah.

43:51 And again, on your side, you've got to do that to adapt to the new Pydantic, but you've also got to present some kind of consistent forward-looking view to people consuming Beanie.

44:01 So they don't have to rewrite all their code too.

44:03 Right?

44:03 Yeah.

44:04 True.

44:04 So I didn't change any Beanie interface when I was working on this; all the interfaces are

44:13 still the same for Beanie, and nobody should have to change their code that uses Beanie.

44:18 I implemented a kind of middleware between Pydantic and Beanie, and this middleware has static interfaces.

44:25 And inside of this, there are these conditions like "if Pydantic v2, then...", and yeah, this kind of logic.
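A compatibility layer of this kind can be sketched in a few lines. This is not Beanie's middleware: `make_dump` and the two fake model classes are hypothetical, and the version string is passed in explicitly so the example runs without Pydantic installed. It shows callers using one stable function while the version check picks the v1-style or v2-style method underneath.

```python
from typing import Any, Callable, Dict


def make_dump(library_version: str) -> Callable[[Any], Dict[str, Any]]:
    """Build a dump function with a fixed interface for the given major version."""
    is_v2 = int(library_version.split(".")[0]) >= 2

    def dump(model: Any) -> Dict[str, Any]:
        # Callers never see which method name is used underneath.
        return model.model_dump() if is_v2 else model.dict()

    return dump


class FakeV1Model:
    """Stand-in exposing only the v1-style method name."""

    def dict(self) -> Dict[str, Any]:
        return {"api": "v1"}


class FakeV2Model:
    """Stand-in exposing only the v2-style method name."""

    def model_dump(self) -> Dict[str, Any]:
        return {"api": "v2"}


dump_v1 = make_dump("1.10.12")
dump_v2 = make_dump("2.1.0")
print(dump_v1(FakeV1Model()), dump_v2(FakeV2Model()))
```

Keeping every such branch inside one module is what lets the rest of the codebase, and the users of the library, stay unchanged across the upgrade.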

44:34 Nice.

44:35 There's a pretty good question from the audience, from Marwan, here.

44:37 It says, other than getting the code to execute correctly, were there any gnarly parts you had to figure out to appease type checkers and linters and that kind of stuff?

44:46 I don't remember any changes this time about these things, like mypy and Ruff.

44:53 I use Ruff instead of Flake8 currently.

44:56 Mm-hmm.

44:57 I didn't fix this stuff.

44:58 Everything was okay.

45:00 It was the new Pydantic.

45:01 I think the guys did a really great job on this to make everything work, because yeah, I have other checks with mypy and with Ruff and everything went smoothly.

45:12 So yeah, I can't imagine being Samuel and team, having to rewrite Pydantic with such a major refactoring, realizing a quarter million other projects depend on this.

45:23 And then people depend on those projects, you know, and like, how are we going to do this without the world just completely breaking, you know?

45:29 Currently they have a Discord channel also for Pydantic.

45:33 And I see many people asking for help, and some questions about the new syntax, but I see that it could be much more, because the current syntax is very similar to the previous syntax even though it's completely rewritten.

45:51 Right.

45:51 Oh yeah.

45:51 This is, this is very impressive work.

45:53 Yeah.

45:53 Completely rewritten a good part of it in another language.

45:56 Yeah.

45:57 Yeah.

45:57 Broken into multiple modules and still it seems to go pretty well.

46:01 Yeah.

46:01 And the assumptions about the default values for, for optionals, that was the only thing that seemed to have caught me out.

46:06 You can put in, in, in the configs that, you, you can have options, like the same, use the same logic as for V1, but you just have to mention it in the, in the config now.

46:17 But I don't remember.

46:18 Honestly, there was a few other things that maybe I ran across, like, and honestly, I don't know why some of these changed.

46:23 So you used to be able to call .json() on an object.

46:26 And now it's model_dump_json, or there was .dict(), now model_dump, right?

46:33 Everything has the prefix model_ currently in Pydantic.

46:36 And I believe this is to not make conflicts with the user logic, because when you, when you have your own, suffix or prefix, then yeah.

46:46 Then it's simpler for, for users to, to have their own methods.

46:50 And I like this solution.

46:51 This, this is really nice.

46:52 I guess this is the other thing that I ran into is I have a few places I was calling dot dict, I think.

46:57 For me as well.

46:58 And I have like conditions.

46:59 I see.

47:00 Anything else you want to talk about, about the migration, like what's, go okay for you or any other thing you want to highlight about how it went?

47:07 Honestly, I think I mentioned everything, and yeah, it went very well, and it's mostly thanks to the Pydantic team.

47:14 And yeah, I really like Pydantic v2.

47:17 I still really like Pydantic v1 actually, like both, but these are completely different libraries inside and very similar

47:27 libraries with regard to the interfaces.

47:28 So I really like how it's...

47:30 Yeah.

47:31 It's not too often that you get that massive of a speed up of your code and you didn't have to do anything.

47:36 Right.

47:36 You just, I was using this library and it was really right in the core of all the processing and now it's a lot faster.

47:41 So, so it was my code.

47:43 When you read the code, the Rust part of Pydantic, I started to read it just to learn.

47:48 And this is really nice, how it can interact with the Python parts.

47:53 I mean, I can write Rust itself, just logic.

47:56 I even implemented a database in Rust, but how to do all this Python stuff inside of another language?

48:03 So this is super impressive.

48:05 It is super impressive.

48:06 We're getting a little short on time here.

48:07 Let's see what, I guess I'll give a shout out to this course.

48:10 I recently released MongoDB with async Python, which of course uses Beanie as well.

48:17 And I created this course on Beanie 1.something, prior to the 1.21 that switches to Pydantic 2, and it's all Pydantic 1.

48:27 And so I was always wondering like, well, how, you know, how tricky is it going to be to upgrade?

48:31 And there were really either zero or very few changes.

48:34 I think maybe the default values on optionals was also a thing I had to adjust on there.

48:39 But if people want to learn all the stuff that we're talking about, you know, just go to talkpython.fm, go to courses, check out the MongoDB async course.

48:47 It's all about Beanie and stuff like that.

48:49 I was reading this course also and I can highly recommend it.

48:52 They are.

48:53 Thank you so much.

48:54 One thing I do want to give a shout out to is I use Locust.

48:57 Are you familiar with Locust?

48:58 Locust.io?

48:59 Yeah.

48:59 Yeah.

48:59 What a cool, what a cool project.

49:01 So this lets you do really nice modeling of how users interact with your site using Python.

49:07 And then what you get is, I don't know if there's any cool pictures that show up on here in terms of the graphs,

49:13 but you get really nice graphs that show you real time about how, you know, how many requests per second and different scenarios you get.

49:19 And on this one, I'm pretty sure when I upgraded it to Pydantic 2 and ran it again,

49:25 trying to think of all the variations that you knew, there could be something that has changed that I wasn't aware of.

49:30 Like maybe, maybe I recorded on my M1 Mac mini and then ran it again on my M2 Pro Mac mini.

49:36 So that could affect it by like a little bit as well, like 20%.

49:39 But I think just so using Beanie and FastAPI and upgrading all those, those paths to Pydantic 2 and the respective Beanie and

49:49 FastAPI versions.

49:50 I think it went 50% or double fast, two times as fast, 100% faster just by making that change.

49:56 So that was pretty awesome.

49:57 This is great.

49:59 Yeah.

49:59 Yeah.

49:59 I see, I see, I see this 50% also.

50:02 And yeah, all I did is I just reran pip-tools to get the new versions of everything and reran the load

50:09 tests and look how much faster they are.

50:11 And so that's a real cool example of kind of what I was talking about.

50:14 So yeah, if you want to see all this stuff in action, check out the MongoDB with async Python course.

50:19 Thanks for coming on the show and updating us on Beanie and especially giving us this look into your

50:25 journey of migrating based on Pydantic 1 to 2.

50:28 I think that's really cool.

50:29 Yeah.

50:29 Thank you very much.

50:30 Thank you very much for having me here.

50:31 Of course.

50:32 So before you get out of here, you got a PyPI project or library to recommend to people, something

50:38 besides of course Beanie and Pydantic, which are pretty awesome and obvious.

50:42 I would recommend using Motor. This is kind of like PyMongo, but asynchronous.

50:47 Integrates with async and await perfectly, which is real, real nice.

50:51 If you want to do something more low-level than Beanie, then you have to at least get acquainted with

50:57 Motor, because this is a really nice library. And even after that many years, it's still very relevant.

51:04 And what else? Honestly, I don't have anything.

51:06 Yeah. Pydantic is good stuff. Awesome.

51:11 Okay. I'll throw Locust out there for people. They can check out Locust. That's pretty cool.

51:15 If you are going through the same process, you've got code built on Beanie or just Pydantic in general,

51:21 and you want to see, you know, how does my system respond before and after?

51:26 Locust is like ridiculously easy to set up, run it against your code, pip install, upgrade,

51:31 run it again and just see what happens. I think that'll be a really good recommendation too.
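The before-and-after comparison described here comes down to simple arithmetic on two throughput measurements taken with the same load test. Below is a minimal sketch of that arithmetic. The function name `speedup_summary` and the requests-per-second figures are hypothetical and are not measurements from the episode.

```python
def speedup_summary(before_rps: float, after_rps: float) -> dict[str, float]:
    """Compare two requests-per-second figures from otherwise identical load tests."""
    if before_rps <= 0 or after_rps <= 0:
        raise ValueError("throughput must be positive")
    ratio = after_rps / before_rps
    return {
        # How many times more requests per second the upgraded stack handles.
        "speedup_factor": ratio,
        # The same change expressed as "percent faster".
        "percent_faster": (ratio - 1) * 100,
        # The same change expressed as time saved per request.
        "percent_less_time_per_request": (1 - 1 / ratio) * 100,
    }


# Hypothetical numbers for illustration only.
summary = speedup_summary(before_rps=400.0, after_rps=800.0)
print(summary)
```

With these made-up numbers, doubling the throughput is a 2x speedup, which is 100% faster and 50% less time per request. That may be why both "50%" and "100%" come up when people describe the same result.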

51:36 Yeah. Final call to action. People want to get started with Beanie. Maybe people out there already

51:40 using Beanie want to upgrade their code. What do you tell them?

51:42 Just pip install it. Everything should work fine. So just try it. But at least you have to

51:48 upgrade everything. So please write tests.

51:51 Absolutely.

51:51 And if something goes wrong, go to my Discord channel, and I or other people will answer your

51:59 questions. Sounds good. All right. Well, congrats on upgrading Beanie. You must be really happy to have

52:04 it done. Yeah, I think. Thank you very much.

52:05 Yeah, you bet. See you later. See you.

52:07 This has been another episode of Talk Python To Me. Thank you to our sponsors. Be sure to check out

52:14 what they're offering. It really helps support the show. Studio 3T is the IDE that gives you full

52:19 visual control of your MongoDB data. With a drag and drop visual query builder and multi-language query

52:25 code generator, new users will find they're up to speed in no time. You can even query MongoDB directly with

52:31 SQL and migrate tabular data easily from relational DBs into MongoDB documents. Try Studio 3T for free

52:39 at talkpython.fm/studio2023. Want to level up your Python? We have one of the largest catalogs of

52:46 Python video courses over at Talk Python. Our content ranges from true beginners to deeply advanced topics

52:52 like memory and async. And best of all, there's not a subscription in sight. Check it out for yourself

52:57 at training.talkpython.fm. Be sure to subscribe to the show. Open your favorite podcast app and search

53:03 for Python. We should be right at the top. You can also find the iTunes feed at /itunes, the Google Play

53:09 feed at /play, and the direct RSS feed at /rss on talkpython.fm. We're live streaming most of our

53:17 recordings these days. If you want to be part of the show and have your comments featured on the air,

53:21 be sure to subscribe to our YouTube channel at talkpython.fm/youtube. This is your host,

53:27 Michael Kennedy. Thanks so much for listening. I really appreciate it. Now get out there and write

53:31 some Python code.
