Optimizing PostgreSQL DB Queries with pgMustard

On this episode, we're joined by Michael Christofides, co-creator of pgMustard, to discuss and explore the EXPLAIN command for Postgres and other databases as well as all the recommendations you might dig into as a result of understanding exactly what's happening with you queries.

Episode Deep Dive

Guests Introduction and Background

Michael Christofides is the co-creator of pgMustard and has a rich background in database tooling. He started his career at Redgate, working on popular solutions for SQL Server, MySQL, and Oracle, among others. Later, Michael became deeply involved with Postgres, especially its performance and query optimization side. His passion for making databases more approachable led him to build pgMustard, a tool that transforms Postgres query plans into actionable insights for developers and DBAs alike.

What to Know If You're New to Python

Here are a few notes to help newcomers get the most out of this episode:

- Understanding how to install and interact with Python packages (e.g., pip or Poetry) helps when installing database connectors and ORMs.

- Basic knowledge of SQL and how Python can talk to databases (for example, using libraries like psycopg2 or SQLAlchemy) will make the discussion about query optimization easier to follow.

- Familiarity with relational database concepts (tables, indexes, queries) will help you grasp the performance techniques mentioned during the episode.

- If you’re looking for a solid foundation in Python itself, check out the “Learning Resources” section below.

Key Points and Takeaways

Optimizing Postgres Queries with pgMustard

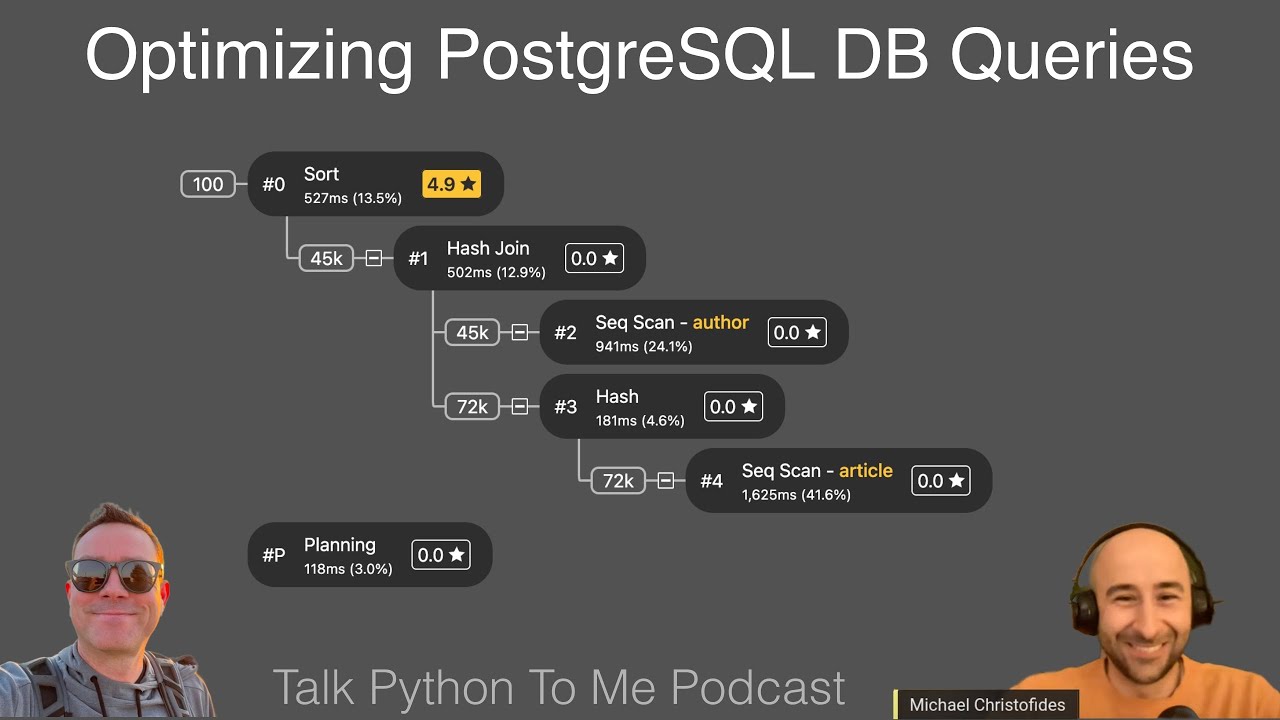

pgMustard converts raw Postgres “EXPLAIN” plans into friendly, visual insights. Rather than just color-coding the slow parts, it offers specific advice on tuning, indexing, or adjusting configuration. This guidance can be a significant time-saver for developers who only occasionally dive into DB internals.- Links and Tools:

Why Database Performance Matters

A laggy database can bottleneck the entire application experience. Whether you run a massive online platform or a hobby project, small performance gains often translate into a noticeably snappier, more reliable user experience. A well-optimized DB also reduces infrastructure costs by making more efficient use of hardware.EXPLAIN vs. EXPLAIN ANALYZE

Postgres’sEXPLAINcommand shows how the database plans to run your query, whileEXPLAIN ANALYZEactually runs the query and reveals real performance metrics like total time. If you see operations that are unexpectedly expensive, that’s usually your first clue about missing indexes or mismatched row estimates.- Links and Tools:

GUI Tools vs. Command Line

For quick one-off checks, the command-line toolpsqlis handy, but graphical tools can help visualize schema and query plans faster. Tools like dBeaver, DataGrip, Beekeeper, and Archetype give you features such as autocomplete, ER diagrams, and direct plan visualizations, which can be crucial for complex queries.Generating Realistic Test Data

It’s crucial to test against a realistic data volume. Tools like Makaroo or Python’s Faker library help generate sufficiently large and varied data sets to match real-world usage. This ensures the query plan chosen in development closely resembles that in production.- Links and Tools:

Reading Query Plans “Inside Out”

Postgres reports its plan from the “outer” operation down, but execution generally starts with the innermost operations. Understanding this indentation is vital: look for big row counts and time spent at the most nested steps. Tools like PEV2 can highlight time-consuming parts in color-coded diagrams.- Links and Tools:

Common Pitfalls: N+1 Queries, Over-Selecting Columns, and Missing Indexes

The famous N+1 pitfall arises when you make one query to get a list of items, followed by a new query for each item. Also, pulling more columns than needed (like aSELECT *) often forces Postgres to do extra sorting or scanning. Missing indexes show up as large, sequential scans when you only need a fraction of the rows.Tuning Postgres Configurations (e.g., WorkMem)

Postgres has conservative defaults for memory and parallel settings, especiallywork_mem. If your queries sort or hash large data sets, you might see them spill to disk. Tweaking memory-related settings can drastically reduce disk I/O, boosting performance. Always verify changes withEXPLAIN ANALYZEto ensure no negative side effects.Keeping Statistics Fresh

TheANALYZEcommand updates table statistics that guide the query planner. Stale or incomplete stats make Postgres choose suboptimal plans. If you upgrade or import large data sets, runANALYZE(or rely on the auto-vacuum system) to keep row estimates in sync.Advanced Extensions and Logging

BeyondpgMustard, Michael recommended turning onpg_stat_statementsfor global query insights, plusauto_explainfor capturing plans of especially slow queries. These extensions can help identify whether a particular query is globally problematic before you jump into the details.

Interesting Quotes and Stories

- Slow Websites and Missing Indexes: “Remain calm—somebody probably forgot an index somewhere. It’ll eventually finish.” This quip captures how small mistakes can lead to big slowdowns.

- Local vs. Production: The story of discovering that a local toy data set is fine but production is crawling is a reminder that scale changes everything. “If you’ve got ten rows, you’ll never see that your query is actually doing thousands of sorts on disk.”

Key Definitions and Terms

- EXPLAIN: A Postgres command that shows the intended query plan without actually running it.

- EXPLAIN ANALYZE: Runs the query and measures the actual performance details, including time and row counts.

- N+1 Problem: A common query issue where you run one main query, then loop with an additional query for each result item.

- WorkMem: A Postgres setting for how much RAM each operation can use before spilling to disk.

- pg_stat_statements: An extension that captures statistics about every executed query, useful for spotting slow or frequent statements.

- auto_explain: An extension to automatically log the execution plans of queries exceeding a specified threshold.

Learning Resources

- Python for Absolute Beginners: Ideal if you’re completely new to Python and want to get comfortable with the language fundamentals before tackling database optimization.

- Building Data-Driven Web Apps with Flask and SQLAlchemy: A great way to learn how Python can interact with databases (like Postgres) through a modern web framework and an ORM.

Overall Takeaway

Database optimization may feel daunting, but with the right tools and strategies, even occasional DB users can quickly improve query performance. Paying attention to realistic testing, indexing strategies, and reading your query plans (especially with a helper like pgMustard or other visualization tools) provides a clear path to speeding up your Postgres-backed apps. By focusing on understanding the underlying operations—rather than treating the database as a black box—you’ll deliver faster, more reliable software to your users.

Links from the show

Datagrip: jetbrains.com

pgMustard: pgmustard.com

pgMustard example 1: app.pgmustard.com

pgMustard example 2: app.pgmustard.com

pgMustard example 3: app.pgmustard.com

Arctype: arctype.com

Postico: eggerapps.at/postico

Laetitia Avrot Secrets of 'psql'— Video: youtube.com

Beekeeper Studio: beekeeperstudio.io

DBeaver: dbeaver.io

SQLite Browser: sqlitebrowser.org

Michael's new Up and Running with Git course: talkpython.fm/git

Watch this episode on YouTube: youtube.com

Episode #366 deep-dive: talkpython.fm/366

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript

Collapse transcript

00:00 Does your app have a database? Does that database play an important role in how the app operates and

00:05 how users perceive its quality? Most of you probably said yes to that first question and

00:10 definitely to the second. But what if your database isn't doing as well as it should?

00:15 How do you know? And once you know, what do you do about it? On this episode, we're joined by

00:20 Michael Christofides, co-creator of pgmustard, to discuss and explore the explain command for

00:26 Postgres and other databases, as well as all the recommendations you might dig into as a result of

00:32 understanding exactly what's happening with your queries. This is Talk Python To Me, episode 366,

00:38 recorded May 11th, 2022.

00:53 Welcome to Talk Python To Me, a weekly podcast on Python. This is your host, Michael Kennedy.

00:58 Follow me on Twitter where I'm @mkennedy and keep up with the show and listen to past episodes at

01:03 talkpython.fm and follow the show on Twitter via at Talk Python. We've started streaming most of our

01:09 episodes live on YouTube. Subscribe to our YouTube channel over at talkpython.fm/youtube to get

01:15 notified about upcoming shows and be part of that episode. Transcripts for this and all of our episodes

01:21 are brought to you by Assembly AI. Do you need a great automatic speech to text API? Get human level

01:27 accuracy in just a few lines of code. Visit talkpython.fm/assemblyai. Hey there, before we jump into the

01:34 conversation with Michael, let me just tell you about a new course we just released, Up and Running with

01:39 Git. Look, you're probably thinking, Michael, there are a ton of Git courses out there. Why create a course on

01:46 Git? Well, this course takes an unconventional and pragmatic approach to Git and source control that'll

01:52 get you up to speed super quickly. Many of those Git courses that are out there, they take a least

01:58 common denominator approach. They decide that you need to learn pure Git. That is, you open up the

02:05 command prompt and you do Git there and that's it. And yet, is that how most of us work? No, we have

02:12 really great Git and source control features built right into the tools that we're using. So with Up and

02:17 Running with Git, that course teaches you how to use Git by leveraging the best UI tools from common

02:23 editors such as PyCharm and Visual Studio Code, as well as using highly polished Git apps such as

02:30 Atlassian's source tree. And we even throw in a little terminal magic at the end. If you want a

02:36 practical and friendly introduction to Git and get stronger with source control, check out Up and

02:42 Running with Git over at talkpython.fm/git git. All right, you ready to talk Postgres?

02:48 Michael, welcome to Talk Bython to me. Thank you. Thank you for having me. It's a pleasure. Yeah,

02:53 it's a pleasure to have you. I'm honestly pretty psyched to talk about database stuff. I feel like databases are

02:59 these magical centerpieces of so many applications and yet, yet they are so unoptimized in many

03:07 situations that if you could just sprinkle a few of these ideas that we're going to talk about on them,

03:13 they'll make the entire app and the entire experience for everyone involved better, right?

03:17 Easier for the developers to have code that goes fast if their queries are fast. The customers will be

03:21 happy that it's not like, wait, did I click the button? Oh yeah, it's still spinning. That's right. You know,

03:26 like all of these experiences, just like every time I have them, I'm just like, remain calm. Somebody

03:31 didn't put an index somewhere. There should have been one and this thing will probably work eventually.

03:36 I don't know if you have those feelings as you go around the web. Yes, 100%. I might have taken it a

03:41 bit far in trying to fix them, but yes, absolutely. I'm going to send a support request and here's the

03:47 explain document that I've come up with for you. Fantastic. All right. Well, we're going to dive into

03:52 specifically Postgres, but we were chatting a bit before we hit record and much of this guidance

03:59 expands beyond Postgres, right? Even beyond relational potentially.

04:03 Yeah, absolutely. And going back to something you said just briefly then, the beauty of databases is

04:08 they've worried so much about performance and you can get up and running. You can get a lot of

04:13 performance out of them without doing even the basics right sometimes, but at a certain scale that

04:18 starts to fall down. So yeah, once you're past the first few stages, quite often the performance

04:24 problems you're hitting tend to be database related. So yeah, that's what I'm excited about. But it's not,

04:30 yes, most of my recent experiences with Postgres, but the performance is mostly about trying to work

04:37 out how to do less work or how to not do the work in the first place. And that's the same whether you're,

04:43 whichever database you're working with or yeah, data in general, basically.

04:47 Yeah. Databases seem magical. They take all this data and they come up with answers incredibly quickly,

04:53 but they're not magical. They just have four or five algorithms for sorting and different mechanisms for

04:59 filtering. And then they know how to sort of estimate and plan those together and take guesses.

05:04 And our conversation today will be how to understand those different things that databases can do,

05:10 how we can interrogate them to tell us what they're trying to do, and then what changes we can make to

05:15 make them faster and some tools along the way. But before we do that, how'd you get into databases and

05:22 being associated with PG mustard and all the stuff you're doing?

05:25 Yes. So I, it goes, well, back in the university, I studied mathematics. So that involved a tiny bit

05:32 of programming, but really not much, just a little bit of statistics that I quickly dropped.

05:36 Yeah.

05:37 And a tiny bit of mechanics that I dropped as well. Very pure.

05:40 Yeah, exactly.

05:41 There must be something about math in the air. The last three episodes I've done have all been like

05:46 people with PhDs in math and doing all sorts of interesting stuff. So, and I have a math

05:50 background as well, but yeah.

05:51 Amazing.

05:52 Yeah, it is. So it started, it started in math. Yeah.

05:54 Yeah, absolutely. No PhD here, but I then went to a, well, I thought I wanted to be a management

06:01 consultant for my sins back in the day. And then the financial crisis happened. So luckily that got

06:06 scuppered and I ended up finding a job at a company called Redgate, which I understand you're actually

06:12 familiar with. So they did database tools and well, predominantly for SQL server, but also developer

06:19 tools for .NET as you've seen, and then expanded out from there. So I started at a time where their most

06:26 popular product was called SQL compare for comparing databases. And just a few months into when I was there,

06:33 they launched a product called SQL source control. So it got into kind of the change management side of things.

06:38 And luckily got so many opportunities there to do various roles and landed mostly in product management.

06:45 luckily got a chance to take on their Oracle tools team. So it was a tiny team compared to the rest of the company.

06:51 And it was the first foray into their tools for Oracle. And we also span off a couple of tools for MySQL as well.

06:57 And in working in that small team, we started to see, I wouldn't say the rise of Postgres because it's been going for so long, but the resurgence and the slow and steady improvements from that. So that was my kind of first five years of getting to know databases. And then, yeah.

07:15 What a cool place to do it. Right. I mean, there are some very hot database companies, the MongoDB folks, obviously Oracle, Microsoft SQL server, like where they make them. But then there's only a few places that where they really say, and what was missing? Let's just fix all of that. Right. Let's make the developer experience really right on databases. And I feel like Redgate was very much like that, like SQL compare and the source control stuff. That was all very neat.

07:39 Yeah, absolutely.

08:09 Okay.

08:10 Yeah.

08:10 Yeah.

08:10 Okay.

08:10 Yeah.

08:10 Yeah.

08:10 Yeah.

08:11 Yeah.

08:11 And even if you realize they're not magic, they're still complicated or there's certain complexity to dealing with them. And if you can make that easier for folks, or at least help them with better defaults, like people, people designing databases are exceptionally smart and they pick the defaults for a reason. But in the real world, sometimes you can afford to be slightly less conservative or have choose a certain perspective on something and make a tool that's only for a certain subset of the users. So you can be a bit more opinionated as tools vendor.

08:40 So you can be a bit more on a tool vendor versus a platform provider. And I like that kind of space where you can differentiate a little bit more on usability and performance, maybe a little bit less on the absolute fundamentals.

08:53 Well, and there's so many different situations in which databases are used, right? Are you Google? Are you Talk Python? Or are you a single little app that one person is using? Like these are all very unrecognizable to each other in significant ways.

09:07 Yeah, absolutely. And the mad thing is they can all run off the same type of database. So you could run any...

09:14 Yeah, that is mad. Yeah. Yeah, that's a really good point that you can run off. Basically, everyone installs the same database, does some sort of schema creation thing and goes from there.

09:25 Yeah, absolutely. Or even Mongo, right? Run well. I think that's the key thing. Run well, probably most... I mean, Facebook still runs off, albeit admittedly, highly modified, very impressive, MySQL setup.

09:40 And I think just today we saw a couple... Well, maybe in the last two days, we've seen a couple of really impressive companies launching SQLite on the edge, you know. So there's really pretty much any database that's made it to this point can probably support you at any scale if you know enough about it or if you run it well.

10:00 Yeah. Yeah, for sure. Interesting. All right. Now, before we get into optimizing these... First, let's get the order right. Understanding the performance and then optimizing, right? Not take a shot at optimizing, then we'll figure out if we got the right spot.

10:15 Just like profiling and performance optimization of code. Let's just talk for a minute about some of the... We were debating what the nomenclature was. I have GUI tools for managing databases. Because I saw that you were using... In some presentations you're doing, you're using dBeaver, which is pretty interesting. And I feel like a lot of people don't end up using these tools. So maybe you could highlight a couple that I think sort of stand out for just interesting database stuff.

10:45 I do apologize. I got a tiny bit of lag there. So, but yes. So if you can hear me clearly, then I do like dBeaver for doing demos. It's very easy to run multiple queries in a row that you don't have to type out or copy paste around. It's also exceptional for post GIS, which is for geographic data. And there are a few people really keen on its visualizations for that. I tend to not use it day to day anymore, but only because there's so many tools out there that you can use. I think you've

11:15 Yeah, you've listed a few, but a lot of people are very keen on the default one that ships with Postgres called Psequel, which is command line interface. Very, very powerful. Can do a lot of things that other tools can't, but has quite a steep learning curve, in my opinion.

11:29 There's some people doing tutorials on that. I think Letitia is doing some really good conference talks at the moment on this and has done them in the past and has a website around Psequel tips. So yeah, there's loads of good GUIs out there. At the moment, I'm using a really modern one called Archetype. They've only recently launched, but I tend to try out tools probably earlier than most would.

11:53 But wait, this looks nice. Right?

11:55 This looks real nice. Archetype, A-R-C-T-Y-P-E dot com.

12:01 You got it.

12:01 Yeah, right on. This is cool. Okay. And this works on the different databases that you might.

12:07 So they stripped back down to a couple, maybe a year or two ago. So it was Postgres and MySQL, I believe, but I think they're adding some back in. Not 100% sure though. Sorry. I'm Postgres through and through.

12:19 Yeah, it solves the problem. What else do we need to worry about, right?

12:24 Absolutely. And if we're talking specifics, actually, because I'm on Postgres on a Mac, I think probably the best long lived option and one that's been around for years, I would say is Postico, which is a Mac only app. But yes, it's lightning fast, which I, so Postico with an O at the end.

12:42 Postico. Okay. Interesting.

12:45 Oh, Mac only. Okay. Yeah. Yeah. That's, that's pretty nice. But this other one, this archetype looks really nice.

12:51 But looks aren't everything, right?

12:52 No, no, you're absolutely right. They're not, but they need to work well. So to the two others that I was going to throw out there that are kind of nice. This one clearly is not for Postgres because it's DB browser for SQLite. But at the same time, I feel like a lot of people are interested in databases, generally not just Postgres, right? You can come across SQLite all over the place. So I think this one is nice. This one is free. And then have you done anything with Beekeeper?

13:17 No, I have seen it around.

13:19 Yeah. Beekeeper is pretty nice. It gives you like autocomplete for your queries and ways to explore your schemas. But what it seems to not have really is any sort of exploration of the schema visually, right? There's not like a UML looking diagram type thing.

13:33 Yeah. One last one before we, I think a company that are doing great things in IDE space in general are JetBrains and their DataGrip product is very good as well. And very, very cross platform in terms of different support for different databases.

13:48 Yeah, absolutely. I think DataGrip is really great, right? Like if you use PyCharm, for example, when you're doing the database features of PyCharm, it really is just DataGrip, right? If you just want the standalone edition. So super cool. Yeah. These are all really nice. And I discovered a few myself as well from this. So awesome.

14:08 Awesome. I just think it's kind of important for people to have these tools. And maybe you end up just on CLI tools like P-SQL. But when you're starting, I think that that can be the difference of I can do this or I can't do this.

14:20 You know what I mean? Rather than efficiency. People might just look at it and go, that's too much for me. I'm out.

14:25 Yeah, well, it's also about discovery. I think sometimes you don't know what's there and what's possible. And in a command line tool, it's really on you to figure that out. Whereas in a GUI, they can leave you hints, they can show you the tree of things, you can start to realize what is and isn't possible. And they've got a lot more freedom to educate you as a new user. Whereas in a command line tool, there's very little they can do on that front.

14:52 So yeah, not all of them. Not all of the GUIs take advantage of that, but the good ones do.

14:57 The possibility is there. Yeah, the good ones do. All right. I was talking about schema and I'd like to hear your thoughts on this. I'm sure you're more well versed in it than I am. When I think about schemas, I think about the tables, maybe the columns, the types of columns that are there. So this one is a varchar 16. This one is a float or whatever. Would you consider indexes part of the schema?

15:20 I would, yes. Yeah. Yeah. I guess it depends on definitions are hard to mathematicians discussing definitions.

15:26 I guess when I'm thinking about schema changes and deployment scripts and things like that, I think it's just as important for the functioning of your app. An index existing or not can be the difference between you effectively being up or being down. And if it's that important, then I'd probably say it does. It is.

15:49 Yeah, yeah, for sure. Quick audience question from William. What's the most powerful utility you personally got out of P-SQL?

15:56 Oh, good question.

15:58 Or what's the most powerful thing that you find yourself doing with P-SQL maybe is a good way to phrase it.

16:03 I'm probably not the best person to ask. I tend to use it for really quick things. So it's more of like it's by far the easiest and quickest to throw a quick query into and get a really quick result back out of. There's zero load time or anything like that.

16:19 Right. And you get text output straight back. It's, you know, it's, it's, you don't have to worry about any formatting or anything.

16:25 Right. Open your terminal, P-SQL, some query, you see the answers right there.

16:30 Yeah. But that's not like, there's probably people ripping their hair out and screaming at the screen saying, why didn't you mention this amazing feature of P-SQL?

16:38 But the truth is I don't, I'm not a heavy database user in the grand scheme of things. You know, people doing DBA work, I make and help design and run a tools company, not a, we don't have a massive database. I don't do tons of analytical queries day to day.

16:53 Sure.

16:53 So those, yeah, it's the DBAs of this world and the people that have got a lot of hands on database experience that probably do a lot more of that. But I would, in terms of recommendations, I think Leticia Avro does a, has a website called P-SQL tips. And I think if you go to it, it just loads a random tip of about a hundred and something that she keeps adding to. And I think that's a great, like, that's a fun way to get some discovery or learn some features in a tool.

17:21 This portion of Talk Python To Me is brought to you by Sentry. How would you like to remove a little stress from your life? Do you worry that users may be encountering errors, slowdowns, or crashes with your app right now? Would you even know it until they sent you that support email? How much better would it be to have the error or performance details immediately sent to you, including the call stack and values of local variables and the active user recorded in the report?

17:49 With Sentry, this is not only possible, it's simple. In fact, we use Sentry on all the Talk Python web properties. We've actually fixed a bug triggered by a user and had the upgrade ready to roll out as we got the support email. That was a great email to write back. Hey, we already saw your error and have already rolled out the fix. Imagine their surprise. Surprise and delight your users. Create your Sentry account at talkpython.fm/sentry. And if you sign up with the code Talk Python, all one word.

18:19 It's good for two free months of Sentry's business plan, which will give you up to 20 times as many monthly events as well as other features. Create better software, delight your users, and support the podcast. Visit talkpython.fm/sentry and use the coupon code Talk Python.

18:39 You spoke about being in a tools place. You want to just give a quick shout out to PG Mustard and we'll come back and talk about it more later?

18:45 Yeah, sure.

18:46 That's sort of the second question I was going to ask you. We're not making speedy progress, which is great. So you were at Redgate and now you've gone on to start your own company, co-found your own company around database tools.

18:58 Yeah, absolutely. So I worked at a couple of companies in between, one of which was a big fan of Postgres. So there was a company called GoCardless and the team there were exceptional, loved my time there. And they ran quite a big payments company by the time I left on a single Postgres database. And it was, yeah, the team were big fans and completely converted me.

19:19 I was already keen on Postgres or the idea of it from Redgate time and hearing what customers were saying about it. So I was pretty sold on the idea. I loved the platform. I loved how extensive it was. I loved that you could see the source code. You could even contribute back to it. So I was a big fan of the platform. But my first ideas for a business were pretty lukewarm, let's say. So there's a Redgate product I love called P-SQL. So you mentioned Autocomplete in, I think it was Beekeeper.

19:47 They had an Autocomplete tool in SSMS, which is SQL Server Management Studio, which people loved. And it's an everyday use tool and you could easily see yourself making it better and better for years. So that was my kind of ideal tool. But building it would have entailed either integrating into the 25 IDEs that we've looked at so far or picking one and really maybe not being that useful. So ended up trying to build something a little bit more standalone.

20:15 There's a few really good open source query plan visualization tools. They do a lot of work to help you see where the time's going in a query and give you some basic kind of guidance. Or maybe not basic, maybe super DBA friendly guidance. So it's often built by DBAs for DBAs type tools.

20:35 Advanced, advanced, but maybe basic in this presentation.

20:37 Yeah.

20:38 Something like that, right? It's just like, here's a wall of text. It speaks your language. Go figure it out.

20:42 Yeah, exactly. And I quite often saw on, in different places, people would post links to them and say, I've put it into this tool, but what can I do to speed it up?

20:52 So there was clearly still a missing step of what can we do about it? Okay, here's where the time's going, but what can I actually do about that?

21:00 So tried to build something that would help solve that problem. And this is, this is what we came up with.

21:05 Yeah, it's really cool. And it, the way it works is you give it, explain, you have Postgres explain your query plan to it, to you. And then you give that query plan to PG Mustard and it puts it into a graphical form and into a more digestible form.

21:22 And that's interesting. But what's really interesting is it gives you advice. It says, you know what? This sort is happening without a query, without an index. And that's where you're spending most of your time. Here's your big opportunity for a win.

21:34 We'll come back to this, like I said, after we talk about finding these problems. But I just want to point out, I had, we'd covered this on Talk Python. One of our guests mentioned it. I think it was Hennick.

21:45 And then we also talked about it on Python Bytes. My other show is just one of the topics. And I don't remember which place we talked about it, but somebody made the joke of, oh, this is my new consulting job.

21:57 What I'm going to do is I'm going to buy a license to PG Mustard and I'm going to go around and optimize people's databases by dropping the query plans in here and just taking its advice, which I thought was hilarious, but also plausible.

22:09 We have some consultants that use us.

22:12 I can imagine. It's really good visualizations and interesting, often useful advice that it gives.

22:18 So, all right. That is all super cool. But let's, let's go back more broadly to Hostgres, but then maybe even, like I said, more general.

22:27 Like I feel like a lot of this applies to MySQL, Microsoft SQL Server, and even Mongo.

22:32 So let's just start out about maybe you're the unfortunate person who is running one of these websites that makes me think about the optimizations that were missed as I wait for the spinning thing to load.

22:43 And it's your job to figure out, you know, what is wrong?

22:47 I know. So maybe we could talk about some of the techniques for finding problems with your database.

22:52 Let's just start there. My database is slow.

22:55 Perfect. So a lot of databases will have some version of a slow query log or built in monitoring view or, or you'll have a monitoring tool set up yourself to log and aggregate this kind of thing.

23:12 And normally the starting point. So I guess there's a few starting points, either custom reports, customer or team member or somebody reports something slow or your database is on fire.

23:24 It's, you know, maxed out on something.

23:27 CPU, memory, disk, some combination thereof.

23:30 Yeah, exactly. Or in the cloud, maybe you've run out of your provision.

23:33 Yeah. Anyway. So, so there's that, but yeah, let's take the, let's take the, I've got one slow query.

23:38 So in that case, you probably know what can get from the query log, the actual query that was being run.

23:45 Yeah. Let me just make a little side comment here is like, if you're using an ORM, it can be a little bit harder to see what that is.

23:53 Right. You might have to attach some kind of real time logging tool to the database or get it to log it to a file and go track it down.

24:01 But also a lot of the ORMs have features that you can make it spit out the queries like in super verbose mode.

24:09 So for example, SQLAlchemy, when you create the engine, you can say echo equals true, but it's not just SQLAlchemy.

24:14 You can do it with many of them and it'll, it'll start printing the queries.

24:17 Maybe that's a place to start, right?

24:19 Yeah, absolutely. And some of them have, there's some tools now increasingly that let you even do the explain.

24:25 So yeah, the first step is get the query, but in some of these frameworks and ORMs, you can even ask for the explain plan directly as well.

24:34 Increasingly, even with, and this is where we start to get a little bit more technical and a bit more database specific.

24:39 But I think it's the same, it's the same across many databases, but you want explain will give you the plan of what the, what the database has chosen to be.

24:49 Probably the optimal route to do that query or to get you that data or to insert that data.

24:55 But if you want to get the performance information and get really start to dig into exactly why it's slow, you need to run something called explain analyze, which will also run the query.

25:07 So be careful around that. If you're running kind of big delete or something and probably not run it on production, please, please, please.

25:14 Wait, why is this delete slow? Oh, wait, why is there no data? It's fast now. That's great. Wait, why is it fast?

25:20 Yeah.

25:21 Or you go to run it a second time and don't, and don't understand why it's not.

25:25 Yeah.

25:25 That it's got, yeah, it's different somehow.

25:27 Yeah. So explain analyze will run the query behind the scenes, but instead of getting the data or instead of, let's say, getting the data back or getting a normal return, you get a query plan back with performance data in it with a few, quite a lot of statistics.

25:42 So yes, there's a, you, you want, you just append your, that to the beginning of your query, run it as normal, and then it gives you the query plan.

25:50 So that's step. Step one is get that information from your database.

25:55 Right. And the way that that looks is instead of writing select star from table where you write, explain select star from that or explain analyze, depending on whether you want that timing information, right?

26:07 Exactly. So if you have, and this is probably, hopefully not many of you in this situation ever, but if you have a query that never returns, let's say it's going to take six hours, maybe.

26:17 You probably want to get the explain plan just to get a query plan because explain analyze is going to take as long as your query because it is executing behind the scenes.

26:25 It runs it and figures out how much IO it's doing and stuff like that. Right.

26:29 Yeah, exactly. Oh, good question.

26:31 So to add IO information, at least in Postgres, you need to include an additional parameter.

26:37 So it would be explain analyze buffers.

26:40 And this is part of my advice to people is especially when, well, probably everybody, but especially when you're new and you might need to help ask for help from somebody else.

26:49 If you add as many parameters you can to that, explain it.

26:52 If you're in an IDE, just select them all.

26:54 There's not once you're getting timing, there's not that much additional overhead to getting the other information as well.

26:59 But in Postgres, we recommend buffers for Bose settings.

27:04 And there's even a new that gives you right ahead log information as well.

27:07 So if you ask for all of it, then you only have to get it once.

27:10 And even if you have to go to ask, maybe you're placing that into a tool, but maybe you're asking a colleague for help.

27:15 They might spot something in that extra information that helps them help you.

27:19 Right, right, right.

27:20 OK, Michael, in the audience, asked a question, which makes me think of my next one here.

27:25 It says Google Cloud SQL just added some sweet query monitoring and planning visualization.

27:30 So that's pretty nice.

27:32 Yeah, Google Cloud SQL doing some really cool things.

27:34 They also just recently announced that you can do major version upgrades much more easily now, which they didn't have before.

27:42 So, yeah, the cloud providers are doing some really interesting things.

27:46 The query plan visualization stuff is obviously very interesting to us, but there is a natural tradeoff.

27:53 With performance, as always, there's a tradeoff in general between monitoring constantly for these things versus getting them when you need them.

28:03 There's a small overhead.

28:05 Well, I say small, but normally small overhead to constantly profiling, constantly monitoring for these things.

28:11 And getting timings does have some overhead.

28:13 So there is some advice out there to not do this in general for most applications.

28:18 But we have customers that get the query plan for it.

28:21 They don't log it for every query, but they get it for every query so that if it's slow, put it into the logs and it can be investigated.

28:27 Okay.

28:27 Interesting.

28:28 Yeah.

28:29 So my question is, let's suppose I'm going to get some cloud database type thing, right?

28:35 Managed Postgres, let's say.

28:37 And I also happen to have Postgres running locally, or maybe I'm fully insane and I have SQLite local, but then Postgres, and that's going to make you worry, I'm sure.

28:47 But I run one of these explain, analyze plans against them.

28:51 Are they comparable?

28:52 Do I need to make sure that I point it at my production system when I'm asking these questions?

28:57 Yeah.

28:57 Let's take.

28:58 What do you think?

28:58 Let's start with a Postgres, Postgres example.

29:00 That's going to be easier.

29:02 And yeah, the main thing that's tricky there is, well, there's a few things to get right.

29:08 Naturally, the schema being as similar as possible is pretty important.

29:12 The config of the database as well, as similar as possible.

29:16 So there's a bunch of config changes that you might have made on production that it's pretty important to have locally as well so that the planner is making similar decisions.

29:23 And then the big one that we see people do, sadly, far too often, is having a really tiny data set locally.

29:32 So, you know, generating 100 rows or even worse, inserting three rows or not having any data in a table.

29:38 And databases, as we've discussed, magical or let's say clever.

29:43 And they know that the amount of data is really important to them in terms of their choices.

29:49 So you mentioned different, I think, join algorithms.

29:53 Postgres, for example, has multiple join algorithms it can use.

29:57 And if there's very little data, it will choose one that has a very low startup cost, even if it would get expensive at lots of data.

30:05 Because it knows there's or it thinks there's not much.

30:07 Whereas in a world, if locally you've got not much data, but then on production you've got a lot of data, it might choose a different join algorithm.

30:16 So the choices it's making are very different depending on the amount of data.

30:20 So that tends to be the one that trips people up most.

30:23 But it's not just algorithms.

30:25 It's also whether it would pick an index or not.

30:28 That's the one that really confuses people.

30:30 So you're like, I know there's an index in here.

30:33 Why isn't it using it?

30:34 Exactly.

30:34 It's a super common question.

30:36 And it turns out you've only got 10 rows there.

30:39 They all fit on a single block.

30:40 And it's much faster for Postgres just to return that single block to you than it is to look up each entry in an index.

30:47 So yeah, exactly.

30:49 Yeah.

30:49 Okay.

30:50 So I did want to give a shout out to a couple of tools for having fake data.

30:55 Maybe you've got some recommendations as well.

30:57 But there's really cool tools like this one at makaroo.com.

31:01 Are you familiar with makaroo?

31:02 I'm not, no.

31:03 So what's cool about this, like you can go and generate fake data, right?

31:06 So of all sorts of things.

31:08 But one of the problems is like, well, the fake data is too fake.

31:10 It doesn't look like the right structure or it doesn't validate or, you know, it's also good just for developing things.

31:17 But like, for example, you can have all sorts of stuff in here.

31:20 And it gives you, it has, the thing that's cool is there's a library of types of things that I can get.

31:25 So I could get like all sorts of stuff to do with cars that has real car makes and models and years or VIN numbers or credit cards or genders or those types of things.

31:36 And then you can go and you can export the schema as CSV or JSON or a create table SQL insert script, which is pretty cool.

31:47 So if you need more real data, you know, places like this for generating them or faker, which is a package for Python and these other ones that you can just make enough real fake data, right?

31:58 Yeah, exactly.

31:59 And this is super powerful in terms of, you know, getting as, getting as similar data as possible to your production system without having to worry about personal sensitive information.

32:09 Yeah, that's a big concern, right?

32:11 It's like, if you take production data, let's suppose it's not that massive and you put it on, you give it to all the developers, somebody's going to lose it.

32:18 Somebody's going to lose their laptop without the drive being encrypted.

32:21 They're going to put it on a USB stick to transfer it.

32:23 That's just like fat 32 with no security or something terrible, right?

32:27 Yeah, absolutely.

32:28 And then you might not even want people to even have select access to some of that stuff in certain environments.

32:34 So, yeah, but it's, you know, probably the more important thing in my world is, or the big, the first summing block is the sheer amount of data.

32:43 So just number of rows, right?

32:45 Yeah, so even if some of these fields aren't perfect, maybe you've got numbers in as names and things like that, it's probably not going to make a huge difference to performance unless you're really far out in terms of the size of that data.

32:57 So if you're inserting kind of multi-kilobyte blobs instead of, you know, gender, then you're going to maybe see some differences.

33:06 Not as much as if there were a million rows versus if there were two.

33:10 So step one is make sure you've got enough and then step two, maybe if you need to make it a lot more realistic.

33:17 Size and data type, but not necessarily super precise.

33:21 Yeah.

33:21 Great point on data type as well.

33:23 Yes.

33:23 Especially when it comes to indexing.

33:25 Right, exactly.

33:26 Because indexing a string case insensitive, it's different than a number.

33:29 Yes.

33:30 Yes.

33:31 Great point on case insensitivity.

33:32 The other thing I wanted to call out on the Postgres side of this is it's got a really powerful generate series function that can be used to mock up.

33:40 data quite quickly and easily.

33:42 Not as powerful as some of these, but simpler and built into Postgres already.

33:47 That's quite cool.

33:48 And Ryan Booz from Timescale has been doing some quite fancy demos of that, of using that to do quite interesting time series.

33:58 You know, huge amounts of data being populated via it.

34:02 So it is surprisingly powerful as well.

34:04 All right.

34:04 Speaking of time series, Michael asks, any tips for storing machine learning embeddings in Postgres?

34:10 Best thing he's seen is storing them as binary blobs.

34:13 I have no advice here.

34:15 I'm sorry.

34:16 I don't have any experience in it.

34:17 How about you?

34:18 I think I fully understood what would be different about it.

34:21 In terms of binary blobs, though.

34:23 I think generally what happens with the machine learning stuff is people will train up these models and they just save them as just binary blobs.

34:31 So it's more of a tips for storing binary data, perhaps, in general.

34:36 So this is...

34:38 Maybe JSON they could be as well.

34:39 This is secondhand.

34:40 This is not something I have direct experience of, but I've read a lot about performance, believe it or not, over the years.

34:46 It seems to me that the advice is, in the early days, keep it simple.

34:51 Maybe you want to store blobs in the database, but you could also...

34:55 There's very cheap blob storage out there and you can store IDs to those in the database.

35:00 But if you want to, if you want to only have one tool to start with, you can store them.

35:04 The database allows you to.

35:06 There's specific types in Postgres for that.

35:09 Even B support in Postgres is amazing.

35:11 And I think probably led to...

35:14 Well, it's partly responsible for why it's popular today, I think.

35:17 Especially...

35:18 I think so, too.

35:19 I think people see it as a significant...

35:22 A really good choice that's like some middle ground between a purely relational database and document databases like Mongo.

35:28 They're like, but we can kind of have both, right?

35:30 Yeah.

35:30 I don't even know if you need to say the word kind of.

35:33 Because I think maybe there's...

35:35 I don't know many, but maybe there's some use cases where Mongo is going to...

35:39 really outperform Postgres using JSONB.

35:42 I've not seen them and I don't know what they are.

35:44 It's super powerful.

35:46 And I guess the key difference between that and pure blob storage is you can index it.

35:51 So you can...

35:52 Yeah.

35:52 Yeah.

35:53 You can index it and query it.

35:54 You can index into go to this object in JSON and then here and then here.

35:59 And if they have that value, I want it back as an index.

36:01 That's the magic.

36:02 Right.

36:03 It's not as performant as splitting those out into columns in a schema and indexing those.

36:07 But that...

36:09 It's a trade-off again.

36:10 Back to trade-offs.

36:11 Yeah.

36:12 Yeah.

36:13 Do you have a billion of those things or a hundred of them?

36:15 Because if you got a hundred, you're probably fine.

36:17 I mean, probably way more than a hundred, but billions of stuff, you might be pushing your luck.

36:21 Yeah.

36:21 Okay.

36:22 Pretty interesting there.

36:23 Other real quick shout out just to like another thing that these guys...

36:26 Disclosure.

36:27 They were sponsors of Talk By Thon, but I found them before they were sponsors.

36:30 I thought they were cool and started talking to them.

36:32 Is this place called Tonic that you connect it to your real live data and it will generate like PPI cleaned up or stuff that looks like your real data, but it's not actually your real data.

36:42 So you sort of base it off of your production data.

36:45 Anyway, if people are looking for fake data, there's...

36:48 I guess my whole reason of going down that is there's not a lot of choices or not a lot of reasons to not have enough data.

36:54 There's a lot of options for getting enough data at various versions of realism.

36:59 Yeah.

36:59 Good point.

37:00 Yeah.

37:00 All right.

37:01 So we were talking about, can I run this explain, analyze against my local machine and against, say, and compare the results to how it's going to be in production?

37:10 So one of your big pieces of advice is make sure you have enough data and data types that are representative and probably relations, right?

37:18 If you're doing joins, like you want to have like similar amount of related data and whatnot.

37:23 Yeah, ideally.

37:24 But again, in step one and that website you talked about that has a spinning loader, they're going to be fine.

37:31 They're going to spot their problems without very accurate, you know.

37:36 Yeah.

37:36 So if a foreign key isn't indexed and they're doing a lookup on that, they'll notice it even if the distribution's slightly off.

37:45 So, yeah.

37:46 The nice thing about performance is you don't...

37:49 Often you're talking about 10x difference, 100x difference, you know, thousands sometimes.

37:54 All the blog posts talk about, you know, how we may postgres 20,000 times faster.

37:58 All it took was adding an index, right?

38:00 Look at it go.

38:00 Yeah.

38:01 And there's other things, right?

38:03 There are...

38:04 Performance isn't just indexing.

38:05 But when you're talking about the absolute basics, it's normally...

38:10 You're probably going to get pretty close as soon as you've got even...

38:14 Like as soon as you've not just got 10 rows in your database.

38:16 Sure.

38:17 Sure, sure.

38:18 Okay.

38:18 Because there were interesting things that you'll find...

38:21 Maybe you'll only find this if you do the buffers also, in addition to just analyze, is if you're doing a sort and you have a ton of data, it might say there's too much data to sort in memory.

38:32 So it might do a...

38:33 What was it called?

38:34 A merged sort on disk.

38:35 That's crazy.

38:36 It writes data to the disk and sorts it as the database.

38:39 You're like, wait a minute.

38:40 What is it doing?

38:40 It's taking it out of memory?

38:42 Are you insane?

38:42 What is this?

38:43 Well, yeah.

38:44 I mean, it's super clever, as you said.

38:45 But the alternative is risking an out-of-memory exception and the whole thing...

38:51 Do you want it fast and wrong or right, slow and correct and working, right?

38:57 Like, which...

38:57 Take your pick, right?

38:58 At some point, it can't do it otherwise.

39:00 But that goes back to the real data.

39:02 If you don't have enough data, you'll never see the problem as it's going to disk because why would three records go to disk?

39:07 That would be crazy.

39:08 Yeah, exactly.

39:09 So, well, this is a really good example because Postgres determines what it will do in memory versus on disk by a couple of parameters now.

39:20 But the main one being Workmem or working memory.

39:23 And that's set very low for most production workloads.

39:27 So that's four megabytes by default.

39:29 And I've seen a really good...

39:31 One of the top Postgres consultants giving a talk at a conference, even a few years ago.

39:36 So this might even be out of date saying they just automatically change that to 16 megabytes wherever.

39:42 Just always as a starting point.

39:44 And sometimes, you know, for analytical workloads, it could be a lot, lot higher than that.

39:48 But there's loads of formulas you can see out there.

39:50 But that's an example of a default that probably is a little bit low in Postgres in general.

39:55 But if it's running on the cheapest server you can get at somewhere like Linode and it's got, you know, 200 megs total, well, then you want it to still be right and slow, not wrong and crashing or fast and crashing.

40:07 Yeah, exactly.

40:09 Yeah, you do need to know these defaults.

40:11 I think part of being successful is like, okay, this is my context.

40:15 So these are the defaults that make sense.

40:17 And these are the ones that I should be tweaking.

40:19 So in another presentation I've seen you give, you talked about the process that you go through for finding these.

40:28 You sort of determining there's a problem, figuring out what it is, and then, you know, solving it.

40:32 You want to talk through your process there?

40:34 Yeah, absolutely.

40:35 And this is a process I recommend and I'm guilty of not always following.

40:39 But this helped me as well.

40:42 So, yeah.

40:42 Learn the rules first so then you can break them later.

40:44 There you go.

40:45 Right, exactly.

40:46 But I tend to regret breaking these, actually.

40:48 So, yeah, the first couple I think we've already covered briefly were run on a realistic data set.

40:54 And then the second one being make use of the parameters that Explain has in your database.

41:01 So for Postgres, use as many as your version supports is my, it tends to be my recommendation.

41:06 So get a lot of information is the short version of that.

41:10 With Postgres query plans, I think, especially if people are used to reading text format ones, they'd be very easily forgiven for starting at the English speaking world.

41:20 Start at the top, read left to right.

41:23 Makes loads of sense.

41:24 But the plans are indented.

41:26 So they're a list of operations that Postgres is doing.

41:29 And the first one you see is the last one it does to give you the data back.

41:33 Right.

41:33 Think about it like an onion, right?

41:35 It's the outer shell of the thing that you're being given.

41:38 Yeah, exactly.

41:39 It'd be difficult to start in the inside of an onion, wouldn't it?

41:43 So, yes, my advice tends to be.

41:46 So firstly, you need to know that the plan is executed from the most indented inwards just to be able to understand what's going on.

41:54 But secondly, it reports some really important statistics right at the bottom.

41:59 So the total execution time being an obvious example, but also the amount of time it spends on planning, on triggers and on just in time compilation.

42:10 So these are don't worry if you don't know what all of those mean.

42:14 I didn't.

42:15 But those can all in rare cases be the dominant issue.

42:20 So if planning time is 300 milliseconds and your execution time is one millisecond, but you've got 20 operations that the query is done in that one millisecond, you could spend easily an hour trying to understand that entire query plan.

42:34 Maybe more.

42:35 Maybe you've been interrupted a few times and then you get to the bottom and you see that planning time was the problem the whole time.

42:42 You're like, I have five seconds, five milliseconds I could possibly save.

42:46 Yeah.

42:47 Yeah, exactly.

42:48 Do things like cache the query plans or stuff like that?

42:51 Yes.

42:51 So that's a whole topic in itself.

42:53 But a lot of IRMs do that kind of thing for you.

42:56 Yeah.

42:57 But the big advice there is not so much on the planning time front.

43:01 It's more check out that bottom.

43:02 If you're on Postgres especially, check out that bottom section first and look for dominant issues before you spend ages reviewing the rest of the query plan.

43:10 Okay.

43:10 So that's the third of the five.

43:13 And the fourth is then the bit that I sometimes mess up and forget to do.

43:17 Which is work out which.

43:20 So if you then have to look through that tree of operations, don't look at any of the statistics until you've worked out where the time is going or where the majority of the work is happening.

43:32 So Postgres especially is difficult in this area because it will report.

43:37 I think MySQL does the same.

43:39 I'm not sure about Mongo and others.

43:41 It will report all of the operations, including the statistics of their children.

43:46 So you have to do a bunch of subtraction or and some slightly more complicated stuff than that, sadly, to work out where this time is going.

43:53 And it's very easy to get distracted by something, a pattern that you've seen before.

43:58 Like, you know, the obvious, the one that gets me and lots of other people is spot a sequential scan on a relatively big table.

44:06 And you start to meet.

44:07 You just jump to that and think that's probably where the problem is.

44:10 Miss index.

44:10 We're going to go fix that.

44:11 Yeah, exactly.

44:12 But in a big enough query, a scan of even a few hundred thousand rows might be them, especially if it's returning.

44:19 Most of them might be optimal.

44:20 It definitely might not be the bottleneck.

44:23 There might be bigger problems.

44:24 Sure.

44:25 So, yeah, my advice is work out where the time's going or the work's being done first.

44:29 And then the last step is once you've found like, is there a big bottleneck?

44:34 Where's the time going?

44:36 Then a narrow down into looking at just that operation or that cluster or that subtree.

44:41 Like, and it could be a programming pattern.

44:44 It could be, well, this query is returning 200,000 rows.

44:49 When it gets back to the app, the app is iterating over the first 10 and deciding it's had enough.

44:55 Right.

44:55 But meanwhile.

44:56 Yeah.

44:57 And yeah, exactly.

44:58 So, yeah.

44:59 I mean, it could be that.

45:01 Yeah.

45:01 And the fix would be a simple limit 10 and skip a certain amount.

45:05 Just put paging in the app as part of the query and you're good to go.

45:08 Yeah, exactly.

45:09 Pagination is huge, especially once you've got good indexing.

45:12 It can be so, so powerful.

45:14 Yeah.

45:14 But yeah, I mean, to be fair, we're probably, this process is mostly for a query that you've worked out is slow and you want to make faster.

45:22 And that would be a great example.

45:23 But then there are also other application side things.

45:26 So it might not be that any of these queries are slow necessarily, but you're firing off, you know, the typical N plus one example.

45:33 You know, you're firing off a lot when you don't need to.

45:35 You could, instead of going, doing a lot of round trips.

45:38 Yeah.

45:39 Instead of doing like an eager join, eager load on an ORM relationship, you're doing, I got a 50 of these back and then I'm doing the relationship 50 times.

45:48 Yeah.

45:48 Yeah, exactly.

45:49 Yeah.

45:49 And that echoing out the database calls of your ORM, you'll just see stuff ripping by you.

45:55 Like, why is there so much database stuff here?

45:57 This seems insane.

45:57 And they're like, well, N plus one.

45:59 Yeah.

45:59 That's the problem.

46:00 Really quick.

46:00 I want to just follow up on that.

46:01 There was a question out in the audience saying, what would you like to use?

46:05 Would you want to use maybe Postgres and SQLAlchemy or Psycho PG2?

46:09 Maybe the async version these days would be really nice to use.

46:13 I said, well, you might also consider a SQL model.

46:16 But with either of those SQL model built on top of SQLAlchemy or just SQLAlchemy, the question was, can it handle getting millions of rows back in a short amount of time?

46:24 Yes and no.

46:25 I like to hear what your experience is with this, Michael, if you have any.

46:29 What I've seen is a lot of times the database will really quickly give those results back and actually even ship them over the network to the app.

46:36 But the deserialization of like, here is a record and I'm going to make a class out of it.

46:41 And here's a record.

46:42 And you're making a million classes and setting probably 10 properties on each one of them.

46:46 You know, 10 million assignments.

46:48 Like that's, it doesn't matter how fast your database is going to be.

46:51 Just don't return 10 million rows into an RM almost ever.

46:55 Very, very rarely, let's say.

46:58 Yeah, exactly.

46:58 The general advice I think is if you're going to be doing aggregation work, try to do it database side.

47:04 They're designed to do that stuff.

47:06 They have incredibly smart algorithms built into them.

47:08 I'm sure there's exceptions, but it seems to be the wise way of doing things.

47:13 I mean, of course, analytical stuff.

47:15 Maybe you are presenting a report that needs to be hundreds of thousands of lines long, but there aren't many apps that there is a good argument for that, I don't think.

47:23 Yeah.

47:23 And so many of these, these ORMs have a way to limit the returned fields, right?

47:29 Say I only need the title and the ID, or I need the title, the price and the ID, because I'm going to do some local data sciencey stuff with price or whatever.

47:37 Right.

47:38 But you don't need the full description and all the user reviews or whatever.

47:41 Right.

47:41 So that can save a lot.

47:43 That's such a good point.

47:44 And yes, I think I might even see that one more often.

47:47 So requesting, you know, it's effectively the select staff from equivalent.

47:51 Yeah, it is.

47:52 Yeah.

47:52 You'd be surprised how many different things that can affect.

47:55 The number of operations down that tree that can be affected by the number of columns you've requested is huge.

48:03 Interesting.

48:04 It affects things like, oh, by the way, the same goes for how many columns you ask for it to be sorted or ordered by.

48:12 So it can make the difference between being able to do, for example, an index only scan versus an index scan.

48:19 That can be a huge performance difference.

48:21 Just that one alone.

48:23 In terms of aggregation, it can make a big difference.

48:26 There's so many things.

48:27 You'd be kind of the knock on effects of passing that many data.

48:31 We talked about sorts.

48:32 If you're having to keep that many rows in memory and each one's bigger, they're going to use that work memory more quickly.

48:39 Right.

48:40 It might fall back to one of those write to disk and sort it rather than sort it in memory.

48:44 Exactly.

48:44 Interesting.

48:45 Okay.

48:46 I hadn't thought about the knock on effects as much.

48:48 Let's talk a little bit about fixing this.

48:51 All right.

48:51 We've set up the problem.

48:52 Things you shouldn't do.

48:54 Yeah.

48:54 But how do you know whether you're doing them?

48:56 Right.

48:57 So you've got a couple of free tools that people can use that you might give a shout out to.

49:03 And then let's talk about PG Mustard and maybe talk through some examples there as well.

49:07 Sure.

49:07 So, yeah, there's, I mean, there's so many career plan visualization tools.

49:11 A lot of the IDEs we discussed also have them inbuilt as well.

49:15 Amazingly, a lot of them seem to choose to visualize it quite differently.

49:18 So it's quite, it'd be quite interesting looking through some of them.

49:22 But the one you've got up on screen at the moment is a free tool written by a French team at Postgres Consultancy called, I don't know how to pronounce it.

49:32 It's either Dalibo or Dalibo.

49:34 And they, this tool is inspired, I think it's even a fork of a product that was written many years ago by a great guy called Alex Tatyans.

49:43 So a lot of guys, a lot of people will recognize the Tatyans name from the URL.

49:48 That was a tool called PEV.

49:49 So back in the day when I started, the two tools were Depeze and Tatyans.

49:55 So Depeze being the one you just flipped to briefly there.

49:57 Yeah, yeah, yeah.

49:58 This is like the origin, well, this is the first one I'm aware of.

50:02 Let's start with the OG first then.

50:03 Yeah, okay.

50:04 This guy is a bit of a legend in the Postgres community.

50:07 He's so helpful on so many of the chat forums.

50:10 And he's a Postgres DVA and has been for many, many years.

50:13 And he built this tool because the text format of Explain, I think he described it as unreadable.

50:19 So he's written a whole great blog series on maybe six parts now on reading Explain plans.

50:26 And this tool he's using to highlight in red here kind of big problems or where a lot of

50:33 the time is going.

50:33 So that's that step four that we talked about.

50:35 Work out where the time is going first.

50:38 So if you've seen the Explain text, it's kind of indented and talks about the problem and

50:44 it has things about timing.

50:45 But here's an HTML table that has columns that shows you the timing and then the number of

50:50 rows is very important.

50:52 And then it has, again, still the actual text.

50:54 And it sounds to me like you think this is pretty important to still have the text that

50:58 people expect.

50:58 I think that text is super important for experts and people that have been doing Postgres performance

51:04 work for years.

51:05 So they can fall back to that text if the left hand side is not helping them.

51:10 Or in the case we're looking at right now, there's a lot of red.

51:12 It might be tricky to know where to start.

51:15 You might fall back to looking at the right hand side for something.

51:18 Interestingly, that rows column is a row count estimate multiplication.

51:23 So it's how many rows did Postgres expect to get versus how many actually came back.

51:28 And that's super important for the cases we discussed briefly around which join algorithms

51:35 they're going to choose or multiple other.

51:37 Join order is really important as well.

51:39 It will try and filter out as many as possible in the first few joins so that there's less data

51:44 to worry about in the future ones.

51:45 So if it's really far out in an estimate somewhere, that's really important.

51:50 So yeah, this tool is doing some of those things and doing it really neatly.

51:54 But that text format, I think, is super expert friendly.

51:57 People that are very used to the Postgres text format, this is probably the tool they're going to like best.

52:03 Yeah, it's really nice.

52:05 Okay.

52:05 And then there's the Dalibo, other one that's similar, right?

52:09 Yes.

52:10 So this one's the one that's based on PEV.

52:12 So they call it PEV2, I think.

52:14 And it's open source GitHub repo is PEV2.

52:17 And this is their hosted tool.

52:20 Again, free to use, open source.

52:22 And like the other one, this supports both text format explains and JSON format explains.

52:27 So I think that, yeah, that's what they're saying up at the top there.

52:30 This is much more visual as a tool.

52:33 So this displays the query plan.

52:35 Sadly, they don't have an example that I can just pull up.

52:38 I'd have to like put it all together and drop it in there.

52:40 Or do they?

52:41 I didn't see one when I clicked around on it.

52:42 At the bottom, they might have sample plans.

52:45 There you go.

52:45 So if you, the gray button on the right.

52:49 Oh, yeah, yeah.

52:49 Okay, you're right.

52:50 Okay.

52:50 Let's just go with that one.

52:51 Cool.

52:52 And then I'll submit it.

52:53 Yeah.

52:53 How did it?

52:54 Oh, well, it's gray.

52:54 That's why I missed it.

52:55 Okay.

52:55 Yeah.

52:55 This looks fantastic.

52:56 Yes.

52:57 So this is a visualization.

52:59 Very similar color scheme to the last one.

53:01 So you'll see things in red.

53:02 They're saying are really bad.

53:04 Orange, if they're medium, bad.

53:05 And then yellow, if they're maybe a problem.

53:08 Slowly?

53:08 Yeah, exactly.

53:09 Slightly bad?

53:10 Don't worry about it for now.

53:12 Get back to it later.

53:13 Yeah.

53:13 So this is good for visual stuff.

53:16 If you're that way inclined.

53:17 The other thing, some people that are still extremely competent at this love the left-hand

53:23 side, especially.

53:24 So it looks unassuming, but those little bars are kind of like a flame chart on its side.

53:33 And they'll show you, in this case, we're looking at the amount of time spent by each

53:37 operation.

53:38 But you might be able to say buffers there is a really popular way of looking at it.

53:43 And that's a feature that's not in a lot of the other tools.

53:48 So that's a common reason that people really like this tool.

53:51 Fantastic.

53:51 Okay.

53:52 So these are both totally free, web-hosted.

53:55 Sounds like some of them are open source.

53:56 You can probably download them as well.

53:58 They look nice.

53:59 Let's talk PG Mustard a little bit.

54:02 So like I said, when I came across this, I thought, wow, this is really a lot nicer than

54:07 the other tools that I've seen for doing this.

54:09 And maybe the best way to understand it would be to like sort of talk about two things real

54:13 quick.

54:13 You can tell me how you want to talk about it.

54:15 But I think maybe the performance, talk about the performance advice.

54:19 What do you think about that?

54:20 Like we could maybe go through that real quick.

54:22 Some of the things it'll find for you.

54:24 Well, yes.

54:24 Yeah.

54:25 I know there's a lot listed there.

54:27 But the short version is that we're also trying to, we've got some strong opinions on the

54:32 visual side of things.

54:33 You know, the red gate trying to keep things as simple as possible has not left.

54:37 So my co-founder of this is a guy called Dave.

54:40 And we worked together for a couple of years, almost full time building this.

54:44 So we've also spent a lot more time than some of the others on building this.

54:48 So the big differences are on the visualization front.

54:51 We try and keep things that bit simpler.

54:53 We're also a lot more opinionated.

54:55 We assume you are trying to speed up your query.

54:57 That might sound stupid, but it's not the only reason you would look at a query plan.

55:02 So there are reasons why that we might not be a good fit for people.

55:08 Let's say you're working on the Postgres source code and you're trying to implement a new feature

55:11 and you just want to look at some explain plans.

55:14 You probably want the text format and you probably really want the costs.

55:17 So they're the things that the plan is using to choose between plans.

55:21 You probably want those highlighted.

55:22 And we don't highlight those.

55:24 We hide those really far away from you because most of the time when you're trying to speed up a query,

55:28 that's not what you're going to be wanting to see.

55:31 Right.

55:32 So yeah, so it's super opinionated.

55:33 Yeah.

55:33 So which ones of these really do you feel like are both highly valuable, highly recommended,

55:38 or maybe some that are like, wow, I can't believe it would find that.

55:41 I would have never found that.

55:42 Good question.

55:42 Sadly, the most valuable are the index related ones.

55:46 So as you mentioned that we call it high index potential when there's a lot of rows being

55:52 filtered versus the amount being scanned.

55:54 So that's the big thing to look out for in the text format is when there's higher numbers

55:58 of rows being filtered, not just on sequential scans, but also if you've got an index scan that's

56:04 still having to filter out a lot of rows that we call that an inefficient index, but it's only

56:09 inefficient for that query.

56:10 It's there's nothing wrong with the index.

56:12 It's more that your query doesn't suit that.

56:15 It's just Postgres.

56:16 That's the best one Postgres could use.

56:17 Right, right.

56:18 So yeah, the indexing one's super valuable.

56:20 Operations on disk, we've already discussed a few times that comes up a lot and poor row

56:24 estimates.

56:24 These are the most common.

56:26 All of the others are either rarer or less valuable when you hit them.

56:32 But sometimes when they're rare, they can be extremely helpful.

56:35 So sure.

56:36 Oh, once you pass the advice of look for table scans and then add an index and, you know,

56:41 don't select star if you got a big result set so it doesn't go to disk.

56:45 Like those are pretty not super easy, but those are somewhat easy.

56:49 Right.

56:49 Yeah, exactly.

56:50 And if it's not fast enough, you're like, well, now what?

56:52 It's not fast.

56:53 I had an index.

56:53 It's not fast enough.

56:54 Now what?

56:55 Yeah.

56:55 One more that I'd love to discuss actually is something we talked about really early on

56:59 is the idea of trying to do less work.

57:01 So either not doing the work.

57:04 So what I think something I'm quite proud of is that we'll try and not highlight anything

57:09 if it's pretty optimal already.

57:11 Now that's if your query is pretty optimal already and doing a lot of work and still not

57:15 fast enough for what you want.

57:17 You kind of need to rethink.

57:18 You either need to think, should we estimate this number?

57:21 Do we need to materialize it and run it once every few minutes and have every user look at

57:27 kind of a cached version of it?

57:29 So that's quite powerful.

57:31 And then the other side of it is if it's not efficient, it might be a sign of something else

57:37 going wrong.

57:37 So in Postgres, one of the things that it gets beaten with is effectively its equivalent of

57:44 garbage collection.

57:45 So the way it does multi-version concurrency control, it ends up bloating the tables and the

57:51 indexes a little bit, sometimes a lot, which is a problem.

57:54 It has automatic systems that people sometimes disable around that.

57:58 But also in older versions, especially indexes on heavily updated tables, for example, can

58:04 quite quickly and easily bloat.

58:06 So pointing out, so if you include the buffers flag we talked about, you can spot when Postgres

58:12 is doing a lot of reads versus the amount of data it's returning.

58:15 And that's sometimes a sign that, for example, an index has got really badly bloated and rebuilding

58:21 that index, ideally concurrently if you're on production.

58:25 But yeah, rebuilding that index.

58:26 This is how you fix it, Michael.

58:28 What you do is you put that little banner up there and you say, we're going to be down

58:31 for four hours on Sunday.

58:32 So if you just don't mind, don't use our website on Sundays because that's when we rebuild the

58:38 index.

58:38 Those are often on the same site that have the really bad queries when they're not offline.

58:43 Anyway, yeah.

58:44 So we're trying to help them and trying to educate and trying to teach them how to avoid

58:48 those things.

58:49 But yeah, I know exactly what you mean.

58:50 So yeah, those are the kind of...

58:52 Yeah, that's good.

58:52 Yeah.

58:52 And that I think the more I talk to people that really know this topic and they've spent

58:57 20, 30 years on it, they focus mostly on work done and really trying to minimize and reduce

59:04 that as much as possible.