Solving Negative Engineering Problems with Prefect

Episode Deep Dive

Guests introduction and background

Chris White is a seasoned mathematician, data scientist, and backend engineer who helped found Prefect. Coming from a research and finance background (working in banks and academia), Chris spent years solving data engineering and data science problems across large organizations. As the CTO of Prefect, he and his team build open-source and commercial tools to help developers handle what they call “negative engineering” more effectively. In this episode, he shares his insights on automating and managing workflows in Python and beyond.

What to Know If You're New to Python

Below are a few recommendations and ideas from the conversation to help you get ready:

- Understanding basic function usage, decorators (

@something), and Python’s packaging is important to follow how Prefect’s decorators and tasks work. - Knowing about error handling with

try/exceptwill clarify how "negative engineering" is basically scaled-up defensive programming. - Becoming familiar with simple concurrency in Python (such as using

asyncandawait) will help you follow the discussion about distributing tasks efficiently.

Key points and takeaways

1) Negative Engineering Explained

Negative engineering refers to the extra code and processes you have to build just to avoid unwanted outcomes, rather than to achieve your primary goal. This includes retries, logging, error handling, observability, and more. Prefect is designed to reduce or eliminate this layer of code for data scientists, data engineers, and other Python developers. By explicitly naming these “defensive” tasks as negative engineering, developers can better pinpoint and automate them.

- Links and tools:

- Prefect

- Sentry (observability example)

- Kubernetes (failover and scaling example)

2) Data Engineering and Workflow Challenges

Data engineering involves moving, cleaning, and preparing data—often in diverse or distributed environments. Common pitfalls include job scheduling, error-prone data transfers, schema mismatches, and debugging ephemeral failures. Many organizations still rely on crontab or legacy scheduling tools, which can quietly fail and leave you with broken data pipelines. Addressing these pain points, Prefect handles dependencies, retries, and logging so engineers can focus on data rather than plumbing.

- Links and tools:

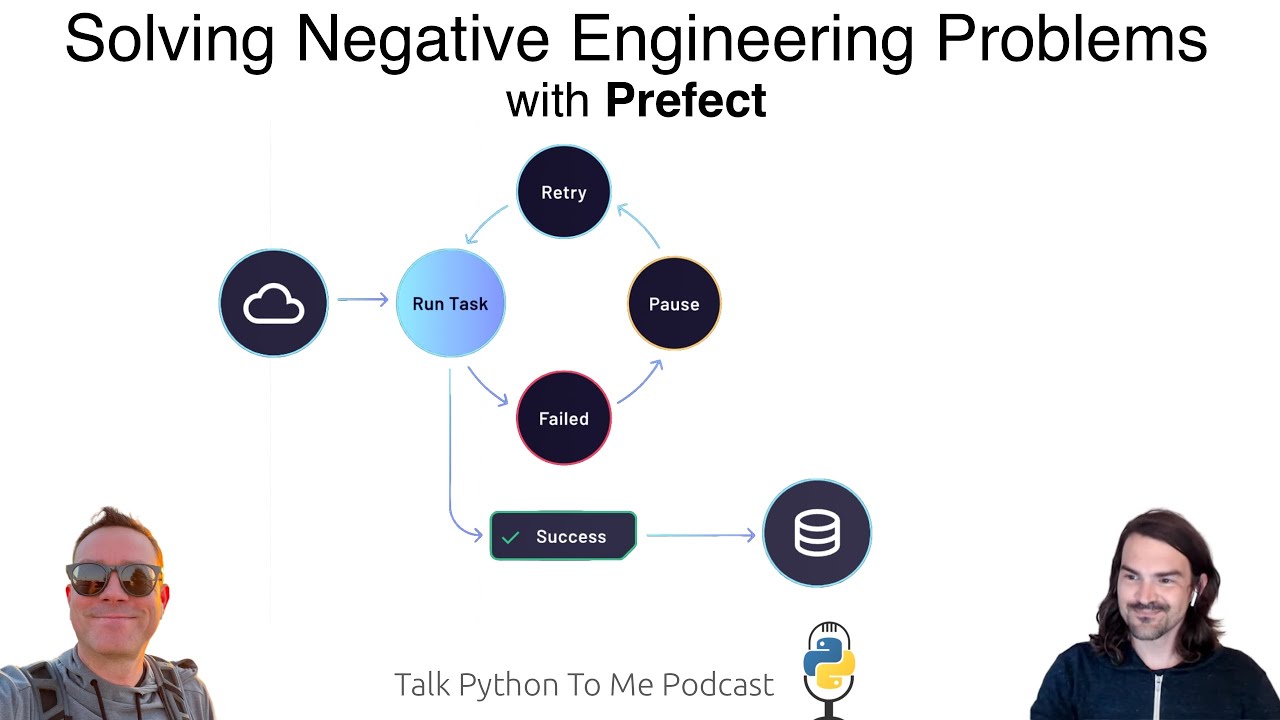

3) Prefect’s Approach to Negative Engineering

Prefect automatically handles issues such as task failures, retries, logging, caching, and alerts. Instead of rewriting the same defensive code, you simply apply Python decorators (like @task and @flow) to your existing functions. This gives you “invisible” negative engineering coverage so your code focuses on business logic. By bridging local development (on your laptop) with production-scale orchestration, Prefect reduces friction and unifies many scattered tasks under one system.

- Links and tools:

- Prefect GitHub Repo

- HTTPX (example of async network tasks in Prefect)

4) Prefect 1.0 vs. 2.0

Early Prefect used a context manager style (with Flow(...) as f:) to build a Directed Acyclic Graph (DAG). In Prefect 2.0, flows and tasks are defined purely with decorators, removing the need for a DAG-building context manager. This new approach is more flexible, allowing conditionals, loops, and dynamic code flow in plain Python. It also simplifies local development, automatically tying into the Prefect Cloud or an on-prem API if desired.

- Links and tools:

5) Async Capabilities and Modern Python

Prefect 2.0 adds robust support for Python’s async and await, enabling highly concurrent tasks—particularly for I/O-bound operations like API calls and database queries. Data engineers can drastically speed up tasks like extracting data from multiple APIs in parallel. Prefect takes care of the event loop complexities, letting you just write async def tasks as normal Python code.

- Links and tools:

6) Open-Source Licensing and Business Model

Prefect is Apache 2.0 licensed, meaning developers can freely use and modify the core engine. The company’s commercial model is built around offering a managed cloud service, extra features (e.g., role-based permissions, enterprise support), and scaling. Chris explained that their revenue model is not about selling the code, but selling the service and support—crucially important for regulated industries and large-scale enterprise teams.

- Links and tools:

7) Bridging Local Development and Cloud Orchestration

A major insight from Prefect is that orchestration can be seen as metadata—where tasks live, how they connect, and how they’re scheduled. Prefect Cloud only deals with metadata; your real code and data can stay in private infrastructure. This “hybrid” approach is especially appealing to industries with strict data regulations and helps teams scale up from a local machine to large Kubernetes clusters without rewriting logic.

- Links and tools:

- Zapier (mentioned as a simpler “no-code” consumer-friendly orchestrator)

- Prefect Cloud (managed service)

8) Incremental Adoption of Prefect

Chris described how you can start small, simply wrapping one Python function in a @flow decorator, while continuing to use your existing crontab or scheduler. Over time, you can move more tasks into Prefect, until it fully replaces your old scheduling or workflow systems. This approach keeps developer friction low and shows immediate value, rather than forcing a large-scale migration in one go.

- Links and tools:

- Cron (classic scheduling tool)

- Prefect 2.0 Docs

9) Observability and Visibility

Prefect’s UI and dashboard give a visual overview of each flow’s runs, states, and logs. This single pane of glass makes diagnosing problems—like an out-of-memory error or an unexpected network blip—easier to track and fix. The UI is built so that if everything is going well, you barely need it. But when there is a problem, you can dive deep with logs and failure states at your fingertips.

- Links and tools:

- Prefect UI

- Datadog (mentioned as an infrastructure observability solution)

10) Building an Open-Source Community

Prefect invests in open-source across multiple fronts: sponsoring conferences, sending pizza to local user groups, and even investing in other open-source projects (like Textualize’s Rich and Textual). They also run a vibrant Slack community with thousands of members, plus a dedicated “Club 42” advocate program. All these efforts make the workflow and data orchestration community stronger while bringing more feedback into Prefect’s own ecosystem.

- Links and tools:

Interesting quotes and stories

“Negative engineering got this sentiment like it’s just anything I don’t want to do. Actually, it’s much more specific: it’s any code you write to ensure outcomes you already expect.” – Chris White

“You can literally add a single

@flowdecorator to your existing code and, in seconds, you’re getting all the reporting, logging, and reliability you didn’t even know you needed.” – Chris White

Key definitions and terms

- Negative Engineering: The work done to avoid bad outcomes, such as error handling, retries, and logging, rather than focusing on the main objective of an application.

- DAG (Directed Acyclic Graph): A structure many workflow tools create, where tasks are represented as nodes and dependencies as edges, ensuring no cycles.

- Observability: The practice of collecting metrics and logs to diagnose and understand complex systems at runtime.

- Flow (in Prefect): A container for tasks and their dependencies, capable of being scheduled, retried, and monitored.

- Task (in Prefect): The smallest unit of work, usually a function, decorated to allow caching, retries, and advanced orchestration features.

Learning resources

- Python for Absolute Beginners: Learn the fundamentals of Python from scratch.

- Async Techniques and Examples in Python: Dive deeper into parallel and async programming in Python, complementing what Chris explained about concurrency.

- Fundamentals of Dask: Although only briefly mentioned in the episode, if you’re curious about scaling data workflows further, Dask is a solid tool to explore in combination with Prefect.

Overall takeaway

Prefect aims to automate and minimize the “negative engineering” baggage that often accompanies data pipelines and complex workflows in Python. Through simple decorators, robust scheduling, and a hybrid on-prem-plus-cloud architecture, developers can focus on their core logic rather than endless scaffolding code. As Chris emphasizes, solving these pain points for data engineering and beyond is about leveraging tools that reduce repetitive defensive tasks and offer rich observability—ultimately freeing teams to spend more time on innovation and less time chasing errors.

Links from the show

Prefect: prefect.io

Fermat's Enigma Book (mentioned by Michael): amazon.com

Prefect Docs (2.0): orion-docs.prefect.io

Prefect source code: github.com

A Brief History of Dataflow Automation: prefect.io/blog

Watch this episode on YouTube: youtube.com

Episode #365 deep-dive: talkpython.fm/365

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript

Collapse transcript

00:00 How much time do you spend solving negative engineering problems?

00:03 And can a framework solve them for you?

00:05 Think of negative engineering as things you do to avoid bad outcomes in software.

00:09 At the lowest level, this can be writing good error handling with try-accept.

00:13 But it's broader than that.

00:15 Logging, observability, like with Sentry Tools, retries, failovers, as in what you might get from Kubernetes, and so on.

00:23 We have a great chat with Chris White about Prefect, a tool for data engineers and data scientists, meaning to solve these problems automatically.

00:31 It's also a conversation I think is applicable to the broader software development community as well.

00:36 This is Talk Python To Me, episode 365, recorded May 9th, 2022.

00:55 Welcome to Talk Python To Me, a weekly podcast on Python.

00:58 This is your host, Michael Kennedy.

01:00 Follow me on Twitter, where I'm @mkennedy, and keep up with the show and listen to past episodes at talkpython.fm.

01:06 And follow the show on Twitter via at Talk Python.

01:09 We've started streaming most of our episodes live on YouTube.

01:13 Subscribe to our YouTube channel over at talkpython.fm/youtube to get notified about upcoming shows and be part of that episode.

01:21 This episode is sponsored by Microsoft for Startups Founders Hub.

01:25 Check them out at talkpython.fm/founders hub to get early support for your startup.

01:31 And it's brought to you by us over at Talk Python Training.

01:34 Did you know we have one of the largest course libraries for Python courses?

01:39 They're all available without a subscription.

01:41 So check it out over at talkpython.fm.

01:44 Just click on courses.

01:47 Transcripts for this and all of our episodes are brought to you by Assembly AI.

01:50 Do you need a great automatic speech-to-text API?

01:53 Get human-level accuracy in just a few lines of code.

01:56 Visit talkpython.fm/assemblyai.

01:58 Chris, welcome to Talk Python To Me.

02:01 Yeah, thanks for having me.

02:02 Happy to be here.

02:03 I'm happy to have you here.

02:04 It's going to be a lot of fun.

02:05 We're going to talk a lot of data engineering things.

02:08 Try to loop that back to the more traditional software development side.

02:12 You have a really cool open source project and startup in Prefect.

02:16 And we're both going to talk about the product as well as, you know, making an open source successful business model, which is really cool.

02:24 Yeah.

02:24 I'm very into that and very, you know, that's very important to me.

02:27 And I love to highlight cases of people doing that.

02:29 Well, it looks like you all are.

02:30 Yeah, excellent.

02:31 Yeah.

02:31 It's a, as we'll see as we get into it, it's a core part of how we view the world.

02:35 Yeah, that's awesome.

02:36 Before we get to all that, though, let's start with your story.

02:38 How'd you get into programming in Python?

02:40 So I think like a lot of, so I was born in the 80s.

02:44 I feel like a lot of people in my generation, right?

02:46 We got into HTML and building websites on AngelFire and GeoCities.

02:50 So, you know, from the early days, I was into playing around with computers.

02:54 I would say didn't really get into Python until probably high school is when I first started dabbling.

03:03 And it was really just, I was working at a bank.

03:06 I was like trying to automate some small things.

03:08 And to be clear, I did not get very far.

03:11 It was a mildly successful undertaking.

03:14 And then college, kind of similar story.

03:16 Like it was one of those things that I just would, I had a couple of books.

03:19 I would play around with it.

03:20 It was always just a fun activity for me, but I never had any serious focus on it.

03:25 I think in college, when it started to get into really econometrics, it's when I started to play more seriously and start to try to understand some of the performance implications of what I was doing and things like that.

03:37 And then taking kind of the next level in grad school, a friend of mine and myself thought that we were smart enough to build some machine learning models for trading.

03:47 And we did all of that in combination of Python and Otter.

03:50 Surprise.

03:51 Okay.

03:51 That's amazing.

03:52 Yeah.

03:53 Lost some money.

03:54 But it was a great lesson in understanding data and exactly what you're doing.

04:00 And genuinely, so I was studying pure math in grad school.

04:04 And that experiment and kind of the actual like visceral outcome of it is what really got me into studying machine learning more deeply.

04:12 Because I was like, wait a second, there's something interesting here.

04:14 And so I started to dig in more.

04:16 That must have been a really cool experience.

04:18 Even if you did lose money.

04:19 It's important to put some skin in the game.

04:21 Exactly.

04:22 Right.

04:22 Like it's a hobby until you start to take it seriously and try to get real outcomes.

04:27 Because there's always these layers of these levels, right?

04:30 Like I'm going to learn this thing and poke around and kind of get it to work.

04:34 Or I'm going to learn this thing so that it actually works.

04:37 Or I'm going to learn this thing so I can explain it when there's three ways.

04:41 They all kind of do the same thing.

04:42 I can explain when to choose which one, right?

04:45 And the more seriously you take it and the more is on the line, the more you kind of, you get that real understanding of it.

04:50 I mean, this is a little off of Python, but I think that resonates with me so much on every dimension when it comes to learning something.

04:57 You have to, it's not a passive activity.

05:00 You have to engage with it.

05:01 Right.

05:02 And it's in my opinion, the only way to learn anything.

05:03 Math, programming, business, whatever.

05:06 Totally agree.

05:06 Speaking of math, you have a math background, right?

05:09 I do.

05:10 Proudly so, yes.

05:11 Yeah, awesome.

05:12 Yeah.

05:12 So do I.

05:12 I just last episode spoke with the SymPy, S-Y-M-Py guys, about doing symbolic math with Python.

05:20 And that was pretty fascinating stuff.

05:23 Have you played with any of the symbolic stuff?

05:25 I have played with it, yeah.

05:26 I had no serious project.

05:28 Like genuinely just playing around with it to see kind of what it's capable of.

05:31 It is really cool.

05:33 I would definitely go look up that episode, yeah.

05:35 Awesome.

05:36 What kind of math did you study?

05:37 So I started my PhD program focusing on arithmetic geometry, which for people out there, it's one of the more abstract forms of math.

05:46 I still really like that stuff a lot, honestly.

05:48 But what I found was that-

05:51 Is it like manifolds and stuff like that?

05:53 Not quite.

05:55 It's a little bit-

05:56 So they use a lot of the same-

05:58 A lot of their arguments are kind of by analogy with things like manifolds.

06:02 But you're studying geometric structures that are way more discrete than a manifold.

06:08 And so like the spectrum of prime numbers on the integers is like a geometric object for the purposes of arithmetic geometry.

06:16 Wow.

06:17 Okay.

06:17 Hard to visualize, but it just turns out a lot of the formal definitions of geometry have these really deep analogs and arithmetic.

06:24 And you can actually learn a lot.

06:26 And the most famous example of this that I think a lot of people will probably be familiar with is Fermat's last theorem.

06:31 Yeah, that's right.

06:32 So that was all arithmetic geometry.

06:34 It was all using some of those techniques.

06:35 Yeah, that's right.

06:36 So they're really heavy duty to solve very simple two-state problems.

06:39 There was a really good book about Fermat's last theorem by this guy named, something like Amol or something like that.

06:49 Maybe you'll find it for the show notes.

06:50 It's just absolutely fascinating book.

06:52 Oh, that's awesome.

06:53 It just talks about the struggle that guy-

06:54 Yeah, yeah.

06:55 It really talks about the struggle that guy who solved it went through.

06:58 Cool.

06:58 Well, how about now?

07:00 You're at Prefect, right?

07:01 What do you do day to day?

07:02 I didn't finish where I went with grad school, which is relevant to how I got to Prefect, which I ended up going into optimization theory, still on the pure side.

07:10 So still very much proof-based.

07:11 But that was right when machine learning was becoming a thing, right after I had done this experiment with my friend and started getting into it.

07:17 And so that's kind of how I started to head more towards industry.

07:22 And for me, I consider myself a problem solver.

07:24 And so I was always very good at solving problems.

07:27 But I'll admit, I wasn't always the best at maybe justifying a grant proposal or something like that.

07:32 And so that's kind of when I started to think more about industry and started doing some consulting and test the waters.

07:38 And so anyways, long story short, got into data, got into machine learning, got into tooling for machine learning, got into backend engineering for hosting the tooling, et cetera, et cetera.

07:50 And then met with Jeremiah, who's the CEO of Prefect.

07:52 Yeah.

07:53 Just sucks you in, right?

07:55 Exactly.

07:55 It's a black hole.

07:56 Yeah.

07:57 Yeah.

07:57 Yeah, cool.

07:58 So you started out working at banks doing sort of homegrown data engineering, right?

08:06 Is that maybe a good way to describe it?

08:08 Homegrown a lot of things, yes.

08:10 So homegrown data engineering, one of them.

08:12 Homegrown model building was another big, big thing.

08:15 Homegrown data governance at one point.

08:18 Homegrown data platform.

08:20 And actually, the data platform was particularly interesting because looking back, it kind of felt like a microcosm of a lot of the,

08:27 the tool explosion we've seen lately.

08:28 Where the platform we were building was for data scientists to deploy their model to and connect it up to data sources that, you know, we would keep up to date.

08:36 And so that's where the data engineering comes in.

08:38 And then business analysts are the actual downstream users.

08:41 And they would interact with these models through an API that we would build for them on top of the models.

08:45 It was still in Python.

08:47 So they would actually have to write Python, which was really interesting.

08:51 So you got to teach them classes on it.

08:53 That is interesting.

08:53 Yeah.

08:55 Yeah.

08:55 I've seen, you know, non-developer types do that before.

08:58 I've seen it in like real-time stock trading, you know, like brokerages and hedge funds.

09:03 Right.

09:04 And yeah, it's, you're going to learn Python because you need to talk to the tool.

09:08 I've also seen people learn SQL who have no business knowing SQL otherwise, but for sort of a similar reason.

09:13 Yeah.

09:13 Yeah.

09:14 And SQL is a little bit more approachable, I think.

09:16 Yeah.

09:16 But Python, Python is just a little, it's easier to kind of shoot yourself in the foot with Python, I think, if you don't really know it well.

09:22 It's just more open-ended, right?

09:24 It's just, it's way more open-ended.

09:26 Cool.

09:26 All right.

09:27 So you talk a lot about negative engineering concepts and how you've structured Prefect to help alleviate, eliminate, solve, prevent some of those problems.

09:39 So maybe we should start the conversation and sort of twofold, like maybe give us a quick overview of what you might call data engineering.

09:47 And then what are these negative engineering things that live in that space?

09:51 So, yeah, and I think these two concepts are related, but also, but I think negative engineering is definitely more general.

09:55 So for, well, let me start with negative engineering and then we'll kind of, kind of drill in.

09:59 Yeah.

09:59 So negative engineering, we've got a blog post that we published, I think three years ago at this point on negative engineering.

10:06 Encourage anyone who's interested, go read it.

10:08 I think since we have released that blog post, we have refined kind of our own understanding and thinking about this.

10:14 And one thing that I kind of noticed is negative engineering got this sentiment, like it's just anything I don't really like to do.

10:20 And that's not accurate.

10:22 There's a lot of things.

10:22 Those are negative engineering.

10:23 I'll tell you what.

10:24 Yeah, exactly.

10:25 Got nothing done in those meetings.

10:26 Yeah.

10:26 Exactly.

10:26 And like, I don't think so.

10:28 And so, yeah, to be really precise with the way we think about this, positive engineering is code or interacting with software systems that you do explicitly to achieve an outcome.

10:38 So I run a SQL query to populate my dashboard or something like that.

10:45 It's a very concrete connection to some sort of outcome.

10:48 And then negative engineering are code you write systems you interact with that ensure those outcomes.

10:55 I am sort of like insurance.

10:58 And so defensive code is a great example of negative engineering.

11:02 It's something that you're writing.

11:04 When you're writing those try accepts and everything, you're really hedging against anticipated failure modes that you're trying to account for right now.

11:12 If the data was always well formed, it would never crash.

11:15 If the servers was always up, it would never crash.

11:18 Exactly, exactly.

11:19 But then you get the reports, the sentry messages or whatever.

11:23 Exactly.

11:24 And so observability, I think.

11:26 Complete negative engineering.

11:28 Observability is not something you do for its own sake.

11:30 You do it in anticipation of an unknown future failure mode.

11:35 It allows you to recover the outcome.

11:37 That you want to avoid.

11:38 Yeah, failure is only like the first class citizen here, right?

11:40 Something failed and you want to figure out what happens so that you can fix it.

11:45 To really tie it to insurance even more directly, all of the things we're talking about are situations where a small error has a disproportionately large negative impact on an outcome.

11:57 So scheduling is an example here.

11:59 If you have Cron running on a server, running a Python script, and something you do, maybe you load just far too much data in your script and the machine crashes out of memory.

12:09 You don't get an alert.

12:10 You wake up the next morning and 30 jobs have not run.

12:14 You don't know why.

12:15 You have to figure out why.

12:16 By the time you figure it out, you're five hours deep.

12:18 Maybe not that long, but two hours deep.

12:20 And using a service or a system, and we'll talk about the details, like Prefect or some other type of observability negative engineering tool, you would potentially get a text alert.

12:29 Or at a minimum, you would wake up and immediately see, oh, that happened at 1 a.m.

12:33 I know what happened.

12:34 Let me just fix it really quickly.

12:35 And you're back up to speed.

12:36 Yeah, interesting.

12:36 Yeah.

12:37 Frank out in the audience says, defensive programming, that means good handling on exceptions and so forth.

12:43 And I think that's interesting, Frank.

12:45 I think I do agree.

12:47 But it sounds to me, Chris, like you're even talking like way broader.

12:50 Like, do you, if you're writing an API, do you have to even think about hosting that or making sure that it's scaled out correct?

12:58 Or like, you know, observability as tracking error reporting in the broad sense of sure, you should be doing the small defensive programming, but also to deal with these negative engineering problems.

13:08 But like, there's whole businesses around dealing with segments of it.

13:12 Exactly, exactly.

13:13 And I think putting a word to it, as simple as it may seem, really helps, especially for building a company and a product like refine and target.

13:23 Like, what are the features that are important to us and which ones are not important, at least at this time?

13:27 And especially in orchestration and data engineering.

13:30 I mean, it's very tempting to build cool stuff because there's lots of cool stuff you can build.

13:34 But are you guaranteeing an outcome?

13:36 Are you ensuring against some outcome?

13:38 Like, are you sure you know exactly what you're providing here when you build that cool thing?

13:42 Right.

13:42 So you guys use, you try to identify some of these areas of negative engineering that data engineers run into.

13:49 And you're like, how do we build a framework such that they don't have to worry about or think about that?

13:53 Exactly, exactly.

13:54 And so for data engineering, I think of this as it's any software engineering that you do that either moves data, cleans data, or prepares data.

14:06 Either for another person to ingest or maybe another system to ingest, but it's all the activities surrounding that.

14:12 And I think for, I know, maybe not everyone listening is in the data space specifically.

14:18 So just as the easiest example, we have a production database running behind some web server, some API, and you want to do analytics on it.

14:25 Well, maybe you're using Postgres, not the best analytics database.

14:29 And also you don't want to actually write a query that takes down the database.

14:31 So what do you do?

14:32 You take the data out, you put it into BigQuery or Snowflake or somewhere else.

14:36 You run your analytics over there.

14:37 The schema is there.

14:38 Right.

14:39 You probably totally change the schemas because you want to, in a relational database, somewhat in the document database, maybe a little less, but definitely in a relational database, your job is third normal form.

14:50 Like, how do I not have any data that repeats?

14:53 I'll have a 10-way join rather than have something repeat.

14:56 But when you want to do reporting, those joins are killers.

15:00 You just want, like, I want to do a straight query where, you know, this column is that and just, like, wreck the normalization for performance reasons, right?

15:07 Right.

15:07 You just want to have fun.

15:08 Yeah.

15:08 Exactly.

15:09 So you can ask the questions in very interesting ways, like many ways, in simple queries rather than being a SQL master.

15:18 Exactly.

15:18 Exactly.

15:19 And keeping that system running, keeping the data fresh, keeping the schemas in sync, that's a lot of work, actually.

15:25 And that's one of the classic examples of data engineering.

15:28 There's a lot of other stuff, too, but that's the classic.

15:30 This portion of Talk Python To Me is brought to you by Microsoft for Startups Founders Hub.

15:37 Starting a business is hard.

15:39 By some estimates, over 90% of startups will go out of business in just their first year.

15:44 With that in mind, Microsoft for Startups set out to understand what startups need to be successful and to create a digital platform to help them overcome those challenges.

15:53 Microsoft for Startups Founders Hub was born.

15:56 Founders Hub provides all founders at any stage with free resources to solve their startup challenges.

16:03 The platform provides technology benefits, access to expert guidance and skilled resources, mentorship and networking connections, and much more.

16:11 Unlike others in the industry, Microsoft for Startups Founders Hub doesn't require startups to be investor-backed or third-party validated to participate.

16:21 Founders Hub is truly open to all.

16:23 So what do you get if you join them?

16:25 You speed up your development with free access to GitHub and Microsoft Cloud computing resources and the ability to unlock more credits over time.

16:33 To help your startup innovate, Founders Hub is partnering with innovative companies like OpenAI, a global leader in AI research and development, to provide exclusive benefits and discounts.

16:44 Through Microsoft for Startups Founders Hub, becoming a founder is no longer about who you know.

16:48 You'll have access to their mentorship network, giving you a pool of hundreds of mentors across a range of disciplines and areas like idea validation, fundraising, management and coaching, sales and marketing, as well as specific technical stress points.

17:02 You'll be able to book a one-on-one meeting with the mentors, many of whom are former founders themselves.

17:07 Make your idea a reality today with the critical support you'll get from Founders Hub.

17:12 To join the program, just visit talkpython.fm/foundershub, all one word, no links in your show notes.

17:19 Thank you to Microsoft for supporting the show.

17:21 One of the things I see stand out, I don't want to get the API right away, but I just see like coming out of the API that you all build is there's like retries like right front and center.

17:32 Like here's a task and I wanted to retry with this plan, right?

17:36 You have this number of times or there's probably like a back off story and stuff.

17:40 Exactly.

17:41 And that's another great example of small error, the tiniest network blip.

17:46 Kubernetes, I don't know, I've seen kubedms sometimes just doesn't do what it's supposed to do.

17:50 Somebody was flipping over the load balancer and you hit it at just the wrong time and there it goes, right?

17:55 Exactly.

17:55 And now next thing you know, I mean, a lot of different things can happen depending on the script you wrote.

17:59 Maybe you did a lot of good defensive programming yourself and the try accept was a little bit too much.

18:04 And so your next task actually runs despite the first one failing.

18:08 And maybe it passes the exception downstream.

18:10 And now you have this cascade of errors that you have no idea what they mean.

18:13 And another thing in negative engineering is dependency management, making sure that if this fails, things that depend on it do not run unless they are configured to run only on failure.

18:23 Yeah.

18:23 Worst case scenario, they say, yes, this is a good investment.

18:26 You should buy it.

18:26 Or yes, this is a good decision.

18:28 And they like.

18:28 Exactly.

18:29 Exactly.

18:30 It's really cheap.

18:31 Well, it's zero because the task failed to find the price.

18:33 So, of course, you should buy it.

18:34 Exactly.

18:35 Exactly.

18:35 And you want to know that it happened and make sure that the effect, the blast radius is minimized.

18:39 And that's really what it's all about.

18:40 And like retry is a perfect example of just one of those small things that can cascade weird, unexpected ways.

18:45 Yeah.

18:46 What are some of the other areas of crufter of these problems you see in data engineering?

18:50 Logging is a big one.

18:52 Just having a place where you can see some centralized set of important logs.

18:56 Any and all.

18:58 The more you use like Kubernetes are more like you kind of.

19:00 Oh, yeah.

19:01 Distributed systems.

19:02 Microservice it out.

19:03 The harder it is to know what's going on in the logging story.

19:06 Well, right.

19:06 And the definition we work with in the modern data stack are data tools that deliver their feature over an API.

19:12 And so if you think about that, you're dealing with inherently this giant microservice system that you want to like coordinate and see in some centralized place.

19:21 In some meaningful way.

19:22 And collaboration, versioning, those are all other things.

19:26 Caching.

19:26 So just configurable like storage locations for things.

19:31 And then maybe the biggest one that is simple, but I see people building this internally all the time, which is just exposing an API, a parametrized API, which is triggering some type of job.

19:43 Next thing you know, it needs to be available, you know, throughout your whole network.

19:47 It needs to be up.

19:48 It needs to be monitored and tracked and audited and all these things.

19:51 Maybe versioned.

19:52 Maybe versioned.

19:53 Exactly.

19:53 Exactly.

19:54 Oh my gosh.

19:55 Yeah.

19:55 It just gets, all of a sudden you're like, okay, I'm building an entire system.

19:58 My job is not this.

20:00 Yeah.

20:01 It's one of those things that seems so simple.

20:03 Like I would love it if you would just really help us out, you know, Michael here, if you could just give me a quick little, little API that we could just call that API.

20:11 I mean, look, I'll just, here's the JSON.

20:13 It's like that big.

20:14 And if I could just call it, boy, things would just unlock.

20:17 And then, then it's like a holiday and it's not working.

20:20 And now I'm dealing, like, how did I get this job?

20:23 Right.

20:23 Right.

20:24 Right.

20:24 Yeah.

20:25 And the second someone says just anything, you're like, oh, I'm on edge.

20:28 What do you mean?

20:29 Are you sure?

20:30 Exactly.

20:31 I don't want it.

20:32 Give it to someone else.

20:33 Give it to someone else.

20:34 Awesome.

20:35 All right.

20:35 Well, you know, maybe that's a good time to talk a little bit more in detail about Prefect.

20:40 So you all have on the GitHub page, if I track it down, you've got an interesting way to discuss it.

20:48 It says Prefect is the new workflow management system designed for modern infrastructure and powered by the open source Prefect core.

20:56 Users organize tasks into flows and Prefect takes care of the rest.

21:00 So there's a lot of stuff here that I thought might be fun to dive into.

21:03 So new workflow management system, as opposed to what was there before.

21:08 So maybe we could sort of take this apart a bit.

21:10 Yeah.

21:11 So we actually.

21:12 What do you mean by new workflow?

21:13 We have.

21:14 I know you also have a new, new one coming as well, right?

21:16 Yeah.

21:17 Yeah.

21:17 We have new, new.

21:18 You always got to keep rebuilding.

21:19 We have a great post on at least part of this that encourage people to go check out called the history of data flow automation that really will get our head of product wrote it.

21:27 And it's just a great kind of tour through the history.

21:29 But so for us, a lot of the different workflows, so workflow management, right?

21:34 You have some set of businessy business logic tasks that are stringed together with some dependency.

21:40 It could be a lot of conditionals or something like that.

21:42 You want to run it usually on a schedule, but sometimes ad hoc or maybe event based.

21:46 And there's a lot of different systems for managing these quote unquote workflows.

21:51 Okay.

21:51 Many of them.

21:52 I guess one way to think about it is they're cut by context.

21:55 What context are you operating in?

21:57 Is this like a data context or like Zapier, for example, is a very consumer facing?

22:01 And what is the user persona?

22:03 Right.

22:04 If you think about Zapier with all these different automations, all these triggers, and then all these actions, it's just like.

22:09 Right.

22:10 I mean, if that must be insane.

22:11 Yeah, exactly.

22:12 And that's a workflow.

22:13 It's totally valid.

22:14 And their user persona is a no code person.

22:16 Also totally valid.

22:17 And so for us, new workflow management system means kind of the next generation after a lot of the Hadoop tooling.

22:26 So Hadoop, as you can kind of see in this post too, Hadoop caused an explosion of just really cool new tools.

22:34 And Airflow, Luigi, Azkaban, I like this another one, maybe Uzi.

22:40 A lot of these kind of came out of that error to manage these distributed jobs.

22:45 And so they're kind of like, I think of them as like distributed state-based Cron.

22:51 You can put them on a well-defined schedule.

22:54 They manage the depend.

22:55 So it's actual dependency management, which Cron does not do.

22:58 And they can do it kind of across multiple computers, which is really convenient.

23:03 Yeah.

23:04 In a real simple way, it's like kind of the Cron.

23:06 It is kind of like Cron, right?

23:07 Like just look here for data and then just run this process against it.

23:11 But it's so much more with the dependencies and then pass it to here.

23:16 And then, yeah, right.

23:17 It's just the flow of it.

23:18 Exactly.

23:19 And you would be insane to try that with just timing.

23:21 Exactly.

23:22 Exactly.

23:22 And so new for, I mean, new for us can mean a lot of different things, but I'll just, I'll

23:27 just say, we just talked about it.

23:28 It's really taking approach of scheduling is important and alerting on failures of scheduling

23:33 is important, but we're like expanding the vision there.

23:35 And it's much more about this negative engineering, which includes observability, configuration management,

23:40 event-driven work, not just scheduled work.

23:43 Scale is really important because data scientists have a lot of the same needs as data engineers

23:47 and those tools were not meant for data scientists.

23:50 Right.

23:50 Yeah.

23:50 Yeah.

23:51 You were talking, I heard you speak about wanting to run a bunch of experiments, like hundreds

23:55 of thousands of experiments as a data scientist.

23:58 And some of the other tools would talk about running operations in tens per minute or tens,

24:05 something like that.

24:06 And you're like, I need something that does it in tens or hundreds per second.

24:09 Yeah.

24:09 I mean, anything that, you know, allows you to just explore a search space of hyper parameters

24:15 and do so in a way that is easy to quickly find, you know, some subset of those parameters

24:20 and see whether they succeeded or failed.

24:21 You can define that criteria.

24:23 You can raise an exception, for example, if like some output just violates some assumption

24:28 you have.

24:28 And then that way it shows up as red is like, you're not going to look at that.

24:31 And managing an interface to the infrastructure is another big part of this.

24:36 So I guess maybe I'm jumping ahead, but the next part is design for modern infrastructure.

24:40 And I think modern infrastructure can mean a lot of different things.

24:44 For us, it means first off that there's a diverse array of infrastructure people use.

24:50 And so creating a system that can plug and play with a lot of them.

24:53 So we support, for example, some of the more popular ways of deploying prefig flows are in

24:57 Kubernetes and Fargate.

25:00 So kind of like a serverless style model.

25:02 So you can do it on your local machine.

25:03 And so just having that kind of unified interface to interact with all these things is one aspect of modern.

25:08 Another is that local development to cloud development story.

25:14 That's really important, right?

25:15 You want to make sure that these are as close as possible to each other so that you can debug things locally and things like that.

25:23 And so that's another aspect as we try really hard.

25:26 And 2.0 gets this way better than 1.0 for the record of mirroring what code is exactly running in prod versus your local.

25:34 Something that always makes me nervous when I hear people talking about, oh, this is cloud native.

25:40 And you can just, there's like 50 different services in this particular cloud.

25:44 And so why don't you just leverage like nine or 10 of them?

25:46 And I just always think, you know, well, what is the development going to feel like for that?

25:51 You know, how, if I'm on a spotty internet connection or something like that, is it just inaccessible to work on?

25:58 Do I have to just completely sort of live in this cloud world?

26:01 And it sounds like there's a more sort of local version that you can try and work with as well here.

26:07 Yeah.

26:08 And one of the things that we achieved with 2.0 is we refactored kind of where orchestration, different aspects of orchestration takes place.

26:15 And so all of the true orchestration logic that we want to own runs behind an API.

26:21 And the reason that I'm saying, I'm like emphasizing that is in 1.0, that's not 100% true.

26:27 And so when you run a workflow locally, it's talking to an API.

26:31 Maybe it's your self-hosted open source API.

26:33 So it's maybe responding slightly differently.

26:36 But the code path running on your machine and its requirements and everything else is exactly the same as what's going to run in production.

26:44 It just might talk to a different URL.

26:45 Sure.

26:45 Let me stick on this.

26:46 We were halfway through your sentence.

26:48 I do want to talk more about like the cloud and stuff.

26:51 So powered by the open source preflect prefect core workflow engine.

26:56 Tell us about that.

26:58 So since day one, always wanted to put as much open source as is reasonable.

27:03 And one of kind of the ways that we think about what we put in the open source, and then I'll tell you what kind of this workflow engine is, are like, what are the things that we are maximally leveraged to support extensions of and, you know, new configurations of?

27:18 And our core workflow engine is definitely one of those things, right?

27:21 We're the experts in it.

27:22 And that engine is the thing that manages, for example, that a downstream dependency can't run if it's upstream failed or maybe just hasn't completed yet.

27:31 The caching logic is a part of that workflow engine.

27:34 The triggering logic for the workflow, the scheduling of the workflow, all of that stuff is open source.

27:39 That's the UI visibility sort of tracking bit as well, right?

27:44 A hundred percent.

27:45 Yep.

27:45 That is all open source.

27:46 And we build it as a part.

27:47 So we actually have a dedicated front-end team and we build the UI and package it up in the packages of pre-built website.

27:55 Yeah.

27:56 I'm not sure of where I would.

27:57 Oh, here we go.

27:58 I found a cool little UI picture.

28:00 Oh, there we go.

28:01 That's, yeah, that's the 2.0 one.

28:02 Yeah.

28:02 Yeah.

28:03 So, I mean, this UI to see what's working, what's not working, how often has it succeeded, you know, what's succeeded, what's failing, what jobs are unhealthy, for example.

28:13 Like, that's all negative engineering, right?

28:15 Your job wasn't to start out to build this observability web front-end.

28:19 Your job was to get the data in and then get it into the database and start doing it for analysis or whatever.

28:25 Exactly.

28:25 Predictions.

28:26 But here you are in Vue.js going after it, right?

28:30 Or whatever it is.

28:30 No, it is Vue.

28:31 Good call.

28:32 Good call.

28:32 Yeah.

28:32 Right on.

28:33 And one of the ways I think about this dashboard view is it gives you this landing page to, you have some mental model of your expectations.

28:39 You can check quickly if they are violated here.

28:42 And then if so, dig in further, click around.

28:44 And if not, you know, we are more than happy when people exit out of the UI and are like, we're moving on.

28:49 It's like, perfect.

28:50 We did our job then.

28:51 Yeah, that's good.

28:52 But yeah, that's pretty neat.

28:53 So that's part of the core engine.

28:55 Yep.

28:56 A hundred percent.

28:56 And so things like auth, for example, are not part of that.

28:59 So in that case, like a lot of ways auth can get extended.

29:03 There's a lot of different ways that we might implement it.

29:05 And that's not exactly right.

29:07 Our competitive advantage, supporting different ways that you might deploy auth securely.

29:11 And so it's like, nope, that's our platform feature.

29:13 We can do it in the way we know best and can do it securely.

29:17 Sure.

29:18 And so it's worth pointing out, I suppose, that the way it works is there's the open source engine and then there's the Python API.

29:26 And then you talked about different ways to run it and to host it, right?

29:30 So one way to host it is to just use your cloud, right?

29:34 You've got the Preflect cloud where it just runs with all these things there.

29:39 And then the others, I could run it.

29:40 I could self-host that core workflow engine or just run it on my laptop or whatever.

29:45 So it's a little bit more complicated than that, actually, in an interesting way.

29:49 All right.

29:49 All right.

29:50 Tell us about it.

29:50 So Jeremiah and I both come from finance world.

29:54 And so a lot of our first kind of early design partners and advisors come from that world.

29:58 And one of the challenges one of our advisors gave us was very genuinely, I don't want to learn your tech stack so that I can host it within my tech stack.

30:07 And there's no way I'm ever going to give you my code or data because it's highly proprietary.

30:11 That's your problem.

30:13 And we're like, okay, well, that sounds impossible, right?

30:16 But we thought about it.

30:17 I think companies are already so freaked out about losing the data without even meaningfully giving it to someone else, right?

30:23 They're already like, well, we might lose this.

30:26 We might, you know, might be ransomware.

30:27 There might be other things, right?

30:28 And so the idea of just handing it over does seem probably pretty far out there for a lot of them.

30:33 Exactly.

30:34 And so what we designed after a long time, we really like thought about it, but we did this back in 2018, maybe beginning of 2018.

30:43 We came up with a model where orchestration takes place over an API.

30:48 And if you really think about it, think of other orchestrators, Cron, Kubernetes is a container orchestrator.

30:52 They operate on metadata.

30:53 They operate on container registry locations and specs for how you expect it to run.

30:58 And once we had that insight, we designed the system so that the cloud hosted API that we run operates purely in metadata, result locations, flow names, flow versions, things like that.

31:10 And then you run an open source agent anywhere that you want.

31:14 And it operates on a pure outbound pull it model.

31:17 So all of our features are based on the agent polling and then your workflow also potentially doing some communication.

31:24 And because of that, you know, there's still this outbound connection you have to think about.

31:28 You still have to trust us with some of your parameters.

31:30 And, you know, there's definitely still some security surface area that we have to think about.

31:35 But we do not post your data and we do not have access to your execution.

31:40 And that unlocked this problem for us.

31:43 And so as long as we have enough agents that can be deployed in lots of different places, then, you know, we can deliver a lot of value.

31:50 Yeah, that's pretty excellent.

31:51 So if you want to host it in AWS or Kubernetes or Linode or wherever, you just, that's up to you, huh?

31:58 Exactly.

31:59 100% up to you.

32:00 Is there a way where I can do it somewhat offline?

32:03 Like, for example, with the open source core engine, does that still go back to you guys or is that sort of local?

32:10 No, that's totally local.

32:11 And it's designed with the same hybrid approach.

32:13 So you could have, you know, your platform team, maybe your DevOps team hosting the API for you and the database behind it.

32:19 And then you as the data team can manage your agents.

32:22 And just as long as you have access to the API, you can set it up the exact same way internally if you want.

32:26 And we've seen places do that for sure.

32:29 Sure.

32:32 If you're a regular listener of the podcast, you've surely heard about Talk Python's online courses.

32:37 But have you had a chance to try them out?

32:39 No matter the level you're looking for, we have a course for you.

32:42 Our Python for Absolute Beginners is like an introduction to Python, plus that first year computer science course that you never took.

32:48 Our data-driven web app courses build a full PyPI.org clone along with you right on the screen.

32:55 And we even have a few courses to dip your toe in with.

32:58 See what we have to offer at training.talkpython.fm or just click the link in your podcast player.

33:07 Let's maybe talk through a quick example of using it.

33:11 Oh, hold on.

33:11 The last part of that sentence is users organize tasks into flows.

33:17 And so let's look at a quick example maybe of the code that you might do here.

33:23 Let's see.

33:24 Sure, here.

33:25 This probably isn't.

33:25 It's always tricky to talk about code on audio formats.

33:30 But just give us a sense of what does it look like to write code for Prefect.

33:35 Yeah.

33:35 So one of our design principles, right?

33:37 We talked a little while ago about this negative engineering problem.

33:39 It kind of emerges.

33:40 And eventually you're doing all those activities that you didn't care about.

33:44 And kind of in an interesting way, we try to mirror that with the way Prefect gets adopted.

33:48 So I love to call it incremental adoption.

33:50 I want the complexity of what you're trying to achieve and the amount of code you have to write to scale.

33:57 I mean, ideally like sublinearly or something, but, you know, scaled together.

34:00 And so an example you have here, our 2.0 takes this way further.

34:04 But we operate on this decorator model.

34:06 So just really simple.

34:07 You have Python functions.

34:08 You already wrote them.

34:09 You presumably already even have this script.

34:11 You just want to sprinkle in some Prefect so that you get some observability into it.

34:15 And then if you want to start to do more and more things, you might have to write more and more code.

34:19 But it's, you know, appropriate for the activity that you're trying to achieve.

34:23 And so, yeah, we try to be really simple.

34:26 We like it when people kind of get the feeling that this is like a toy kind of package that you play around that just has these heavy duty impacts.

34:34 So, yeah, tasks are the smallest unit of work that we can look at.

34:37 Tasks can do things like retry.

34:39 They can cache.

34:40 They have well-defined inputs and outputs.

34:41 Flows are containers for managing dependencies of tasks.

34:46 They also have well-defined inputs and outputs, also have their own states.

34:51 But flows are the things that can be scheduled and triggered via API.

34:56 And tasks are kind of just the smaller, more granular units of orchestration within those workflows.

35:02 And so the way that this looks is it looks just like a function.

35:06 And you kind of just call it with the arguments or whatever.

35:10 Yep.

35:10 And then you put a task decorator on there, which is pretty interesting.

35:14 And that's where the retry thing can be.

35:16 Exactly.

35:16 Then you also have a context manager, which I think is a nice pattern.

35:20 So you have a context manager to create the flow.

35:22 And then you basically simulate doing all the work with an abstract parameter.

35:26 And then you kind of set it off, right?

35:28 So that is true in 1.0.

35:31 However, what we found.

35:33 Something new is coming.

35:34 Yeah, this is important.

35:35 That context manager, all that code runs like you called out.

35:39 And so it compiles this DAG.

35:42 That's everyone.

35:43 Directed acyclic graph.

35:44 What we realized in talking to a lot of our users on 1.0 is that confronting the DAG.

35:51 Because sometimes people would write their own Python code that wasn't prefect in that context manager.

35:56 And it would actually run.

35:57 It wouldn't be deferred.

35:58 And they would get confused.

35:59 They're like, why do I have to care about this?

36:01 And we started to realize that this DAG model really came most likely out of the constraints of YAML flat file formats.

36:10 And they were mirrored in all the different tools that were built on top of that.

36:14 And then all of a sudden, everyone's talking about DAGs.

36:16 Data engineer, when they're writing a script, to move data around, should focus on the script.

36:20 They shouldn't focus on this abstract program concept of can't do control flow, essentially,

36:24 without really thinking deeply about it.

36:26 And so in 2.0, we remove this context manager.

36:30 Flows are also now specified via decorator.

36:33 So the deferred computation is just function definition.

36:36 And now we will discover the tasks at runtime.

36:39 And you can implement native Python logic in flows.

36:42 And that's totally fine by us.

36:44 So it just unlocks the expressiveness of what you can write in Prefect really natively.

36:49 natively.

36:49 That's awesome.

36:50 So you can have loops or if statements or whatever you want to write.

36:54 Oh, yeah.

36:54 While statements even.

36:56 Yeah.

36:56 You can have flows that change structure from run to run.

36:59 All of it.

37:00 Okay.

37:00 So the thing that strikes me here is you kind of write regular Python code.

37:04 And you put a decorator or two on it.

37:06 And it just works in a different but similar way.

37:09 That's a little bit of that negative engineering influence as well.

37:13 It's like, how do I take normal stuff without too much work and make it more general for pipelines?

37:19 Exactly.

37:20 We call it workflows as code instead of code as workflows.

37:23 I'm sorry.

37:23 Code as workflows.

37:25 Because you have the code.

37:27 It is the workflow.

37:28 And now you just want us to care about it.

37:31 And so we should be minimally invasive when we do that.

37:34 Because the second you have to refactor your code significantly, you're back in negative engineering.

37:38 You have to think about the consequences of the refactor and everything else.

37:41 And we want to avoid that as much as humanly possible.

37:43 You should have to do a little bit.

37:44 Yeah.

37:44 A couple of things that I saw that stood out to me checking out your API here that was interesting.

37:49 One was I can have async methods and async execution of these things.

37:54 So async and await style, async def methods and await operations.

37:58 You want to talk about that support?

38:00 Yeah.

38:00 So if you actually go to orion-docs.prefect.io, that's where a lot of our 2.0 docs are currently

38:06 located while we're still in beta.

38:08 But they will, of course.

38:09 And then hyphen docs.

38:10 Yeah.

38:11 So this async works.

38:12 That's probably where I saw it.

38:12 Yeah.

38:13 Yeah.

38:13 Yeah.

38:14 Cool.

38:14 Big shout out to our prefect engineer, Michael Adkins, who really took a lot of time to dig

38:19 into the guts of async.

38:21 And he set it up so that you can do crazy things.

38:24 You can have synchronously defined flows with asynchronous tasks.

38:29 And our engine, the executor, will manage it all for you just to make sure that they're

38:34 running in the right event loops and things like that.

38:35 It's really cool.

38:36 We've got to create a loop and just run this in a way because internally it's synchronous

38:40 or something like that, right?

38:41 Exactly.

38:42 Exactly.

38:42 And so it's really slick.

38:44 And it gives at least users who know how to write async code, kind of this native feeling

38:48 of parallelism.

38:49 We all know it's not quite parallelism, but it gives you at least that feeling, especially

38:53 when you're doing a modern data stack.

38:54 If it's all API driven, you've got a lot of network IOs.

38:58 It's talking to databases.

38:59 It's talking to file IO.

39:01 It's talking to external APIs.

39:02 Like all of those are perfectly scalable.

39:04 Exactly.

39:05 Exactly.

39:06 Yeah.

39:06 Yeah.

39:06 Cool.

39:06 So you can have at task and say, I'm just going to do an async def some function.

39:12 The example you have in your docs, your Orion docs is using HTTPX async clients to go talk

39:19 to the GitHub stuff.

39:20 Oh, yeah.

39:21 Oh, yeah.

39:21 And here you also have, here's your at flow decorator, right?

39:24 For this thing.

39:25 And another thing too, that we did that I'm really proud of that I've already started

39:29 to see kind of be one of the ways people onboard into Prefect is previously with the API, you

39:35 had to pre-register your flow and tell the API this thing exists, you know, get ready for

39:40 it.

39:40 And then runs had to get created server side before they could run client side.

39:45 With the new model, we set everything up and all of this was this like deep study in bookkeeping.

39:51 Like how can we create stable indices or stable identifiers for things that, you know, we can

39:55 identify across processes and runs.

39:57 And so in the new model, you can take this flow.

40:00 And if you are just pointing to our cloud API, you can call it as a function interactively,

40:05 and it will still communicate with cloud API just as opposed to deployed workflow.

40:09 And so what that means though, sorry, just going back to the incremental adoption story is you

40:14 can use cron and then you can just put one line of code on your main function at flow, keep

40:22 cron running with that Python script.

40:24 And you've immediately gotten a really pretty kind of record of all of the jobs that cron's

40:28 running.

40:29 And if it fails, you'll get the failure alerts and everything else.

40:32 And cron's still your scheduler, which is totally fine by us.

40:34 Sure.

40:34 Oh, that's interesting.

40:35 Yeah.

40:36 And then, you know, at some point you want to start to see into the future and that's when

40:39 you have to use our scheduler instead of cron.

40:41 But yeah.

40:41 But once again, incremental adoption.

40:43 Yeah.

40:44 The API here is pretty wild.

40:45 You're exploding a list comprehension of calls to the task to an ASIC IO gather.

40:52 That's a pretty intense line right there, but I like it.

40:55 It's not intense in the way that it's like, oh my gosh, what is this insanity?

40:59 But no, yeah.

41:00 It's a lot going on there.

41:01 Yeah.

41:01 Yeah.

41:01 Yeah.

41:01 You know, there's the joke t-shirt maybe you've seen.

41:04 It says, I learned Python.

41:06 It was a great weekend.

41:07 Right?

41:08 Like, that's true for like variables and loops and functions, but like, then you see

41:12 stuff like it.

41:13 It's like, oh wait.

41:13 Yeah.

41:14 There's more patterns here.

41:15 Maybe it's longer than a week.

41:15 It might be more than a weekend.

41:17 Give me a moment.

41:17 Yeah.

41:18 No, this is really cool.

41:19 I really like this new API.

41:20 So when's 2.0 a thing?

41:22 When is it released in the main way of working?

41:26 Our planned release date, or I shouldn't say date, but just like target, you know, you can

41:30 expect it's one of the weeks or something around is July 1st.

41:33 But we are still releasing.

41:35 So anyone out there is intrigued by this, especially if you're completely new to Prefect,

41:40 I definitely encourage you to just start with one of our beta 2.0 releases.

41:44 They're way slicker, way easier to get your head around, more interesting.

41:49 And there's still like everything, you know, working.

41:52 We just, there's some critical paths that we haven't fully released yet that we want to

41:56 make sure are there and tested heavily before we go into GA.

41:59 Right.

41:59 But if what's there works for people that they could, they could use it.

42:02 Oh yeah.

42:03 Should definitely work.

42:04 And if you run into weird bugs like that, let's not.

42:06 Yeah.

42:06 How does it plug into the cloud visibility layer and all that is if I run some one and want

42:14 some two, is it going to go crazy or?

42:16 No.

42:16 So they both will be configured to talk to the right API.

42:19 And so you won't be able to see them in the same place.

42:22 So that's unfortunate if you will, but you can definitely run them side by side.

42:28 I mean, the environments aren't compatible.

42:30 So you'll have to have different Python environments that you're running them in.

42:32 But yeah.

42:33 But otherwise, yeah.

42:34 I mean, I think some of our 1.0 clients, for example.

42:37 Because what?

42:38 pip install prefix is equal, equal one, something or equal, equal two or something along those

42:43 lines.

42:43 Right.

42:44 You'd like need different libraries.

42:45 Yeah.

42:45 So if you just did pip install prefix right now, you'd get a official 1.0 release.

42:50 I don't remember the number.

42:51 So you'll have to make sure that you allow for pre-release in your pip command.

42:55 So either, I think if you just specify equals equals 2.0, I think we're at like B3 right

43:01 now.

43:01 Then you'll get it.

43:02 But yeah, you have to explicitly call it out since it's not, since it's still in beta.

43:06 Sure.

43:06 I always like going to pipi.org.

43:09 It's just like, it's 375,000.

43:12 I know.

43:12 It's impressive.

43:13 Yeah.

43:15 So 1.2.1 is the current one.

43:17 But in here, yeah, you're 2.0, beta 3.

43:20 And we are planning to cut another release later this week.

43:23 So you can expect B4.

43:24 Probably it'll be B4 by the time people get around to hearing this and shipping and so on.

43:30 Yeah.

43:30 Yeah.

43:31 But still, really cool.

43:32 So basically, your advice to people who are like, hey, this sounds interesting.

43:36 I want to check it out.

43:37 Like, just start with two.

43:38 Yeah.

43:38 I'd say just start with two.

43:39 It's working, easier to grok, and I think is more powerful and more flexible for different

43:45 use cases, especially if you're thinking outside of data.

43:47 Sure.

43:47 So when I hear people talk about data engineering, you know, if you go into that world, you see

43:53 all these amazing tools that people have built that look like, wow, these are really

43:58 amazing.

43:58 And to me, they feel quite similar, like Prefect and Friends.

44:02 It feels real similar to the web frameworks, right?

44:05 Like Flask or Django.

44:07 And you're like, okay.

44:08 So for example, what I mean by that is in Flask, all I have to do is I have to say, here's a

44:12 function that goes to this URL.

44:13 And I just write the code and return a dictionary or something like that.

44:17 I don't have to think about headers, cookies, connect, you know, like stay connected header,

44:23 HTTP2 traffic.

44:25 Like I just do the little bit and it just, it puts it all together for me.

44:29 And in the data engineering world, there's a bunch of stuff like that, that I feel many

44:34 people are wholly unaware probably.

44:36 Yeah.

44:36 There is an explosion of tooling in data engineering right now.

44:41 And also in kind of the adjacent analytics world.

44:44 This kind of goes back to what I was saying about how we kind of crystallize this concept

44:48 of negative engineering.

44:48 And it's just important.

44:50 I think all of these tools come from some very real use case, right?

44:54 And I think it's just important to figure out, like the way I talk to people about this

44:57 stuff is you shouldn't really pick a tool just on its current feature set.

45:01 You should pick it on its vision as well as whether it works for you today, because you're

45:05 going to change a lot and you want to make sure that the tool is changing with you because

45:08 these tools, especially the explosion of startups, we're all changing quite quickly.

45:12 And you want to make sure that we're changing in an aligned way.

45:14 And having that fleshed out vision is important.

45:17 And if it's just a tool that like seems cool, but like, what exactly is this doing for me?

45:21 Exactly.

45:21 Precisely.

45:22 If you can't really articulate that, then, you know, that's not to say you shouldn't keep

45:26 using it or something, but just that's, that's always my exercise that I do with

45:29 new tools.

45:29 Yeah.

45:30 When it's something as fundamental as this, you kind of have to think about, I'm going

45:34 to live with this for a while.

45:35 Exactly.

45:36 Do I want to have this as my roommate when I come to work?

45:39 Right.

45:39 Do I want to debug this?

45:41 Do I want to exactly extend it?

45:42 You know, you're definitely going to do something weird with it.

45:44 We've all done weird things with every tool we use.

45:46 Oh yeah, absolutely.

45:49 All right.

45:50 So my question to you about this sort of like parallel to Flask and the web frameworks and

45:56 various other things.

45:57 This is solving a lot of negative engineering problems for data scientists and data engineers.

46:01 What do you see?

46:03 Where do you see maybe people like me who mostly do APIs and web apps and things along those lines?

46:11 Should I be using stuff like this?

46:12 And where do you see the solving problems for people who don't like traditionally put on the

46:16 data science, data engineering hat?

46:18 So there's two, two places that I think are relevant.

46:21 I think the first is just like really kind of tactical, just tracking of background work,

46:27 tracking of background tasks, right?

46:29 Like Celery is a popular example for something like this.

46:31 Yeah.

46:31 Let me give you an example.

46:32 So like for, in my world, I've, I might hit a button, have to send out thousands of emails

46:36 like because of that.

46:37 Right.

46:38 And then maybe based on, on that, I might, if it bounces, take them out of the email list

46:42 or whatever.

46:43 Right.

46:43 Exactly.

46:44 You want to record the fact it's a perfect example.

46:47 So just anything like that for a background task.

46:49 And that's one of the things too, that we're going to try to make even simpler because we

46:52 have focused a little bit on the data space and the very easy changes we can make to

46:56 kind of extend that.

46:57 And then the second thing, and this is, this is the way I always kind of

47:01 kind of like to think about Prefect.

47:02 It's one way you can consider everything I've been saying is we're kind of like the

47:06 SRE toolkit at the business logic layer.

47:09 And it's something that kind of everybody could just use just that single pane of glass.

47:13 You get alerts, you get notifications, you can collaborate with people and it's just

47:17 kind of all right there for you.

47:18 And at the end of the day, like, you know, you don't really have to manage the code that

47:22 much if you're just using the UI.

47:23 And so I think that's how we can expand by just kind of giving people that value.

47:28 You want to look at the things that are happening.

47:30 You want to see a place where all of your systems are just right there.

47:33 And it's at the business logic layer.

47:34 You're not looking at CPU and memory all the time, although you could display that if you

47:37 wanted to.

47:38 So how about this as an example?

47:39 I created an e-commerce site and I want to track, I just want visibility into people buying

47:45 stuff.

47:46 What's working?

47:47 What's failing?

47:47 What's the rate?

47:49 The bosses.

47:50 I need something on the web that I can look at this.

47:53 Exactly.

47:54 Get reporting.

47:55 And the key thing here, right, that you said that is like puts it in Prefect's camp

47:59 and not in, say, Datadog's camp is you want to track the user button click, for example,

48:06 like some business logic thing.

48:09 Whereas something like Datadog is an SRE or observability tool that's going to tell you

48:14 your API throughput.

48:15 Prefect isn't trying to do negative engineering for your raw infrastructure.

48:19 It's trying to do it for your business logic.

48:21 Got it.

48:21 Okay.

48:22 Yeah.

48:22 Very interesting.

48:23 So Prefect, open source, if I want, I could just take it and do my own thing, right?

48:27 Oh yeah.

48:28 Go for it.

48:29 It is Apache 2.0 licensed as of maybe a month ago.

48:35 So before we had a few different licenses floating around, but now we're all in, all Apache 2.0.

48:39 Okay.

48:39 Give me the elevator pitch on Apache 2.0.

48:41 So what does that mean that I can do?

48:43 It means you can do quite literally anything as long as you don't violate trademarks, essentially.

48:49 And so, you know, don't violate, don't use logos or something like that.

48:52 So with MIT or something like that.

48:53 Yeah, exactly.

48:54 It's very, very generous.

48:56 You know, you don't have to check with us or anything like that.

48:58 Sure.

48:58 Okay.

48:59 Excellent.

48:59 Yeah.

49:00 You guys are doing a lot of stuff, not just with Prefect, but with other projects out there

49:04 as well, right?

49:04 Yeah, we are.

49:05 We really kind of, like I said at the beginning, tried to instill this kind of open source ethos

49:10 that even at the business layer, like we're trying really hard to genuinely deliver value,

49:15 right?

49:16 And that includes to our customers and users, but also to just the broader ecosystem that

49:20 we find ourselves in, which is exploding right now.

49:23 And so we have a lot of different efforts that we, yeah, I can definitely go through and list

49:27 them all like ways we try to contribute back to open source.

49:30 Yeah.

49:31 Give us a little bit.

49:31 So we do a few different things.

49:33 So one thing that we do is we will send pizza to basically any conference or meetup talk

49:39 that has a talk featuring Prefect.

49:41 And so you just have to submit a quick application.

49:44 We'll probably reach out to you.

49:45 And then, you know, that's pretty much it there.

49:48 If you are a Prefect engineer, we have kind of this like advocacy program.

49:52 And if you get involved with that, we've sent people to conferences before that are not

49:57 Prefect employees.

49:57 So that's another thing that we try to give back.

50:02 More concretely on the business side, we, every engineering team at Prefect, so right

50:06 now there are five kind of distinct teams.

50:08 They each get a $10,000 annual budget to sponsor any and all open source projects or just maintainers

50:15 directly that they think are impactful, maybe for their work or maybe for our ecosystem.

50:20 And so some of them, just to give you an example, one of the ones that kind of kicked this whole

50:24 thing off was we sponsor MKDocs material theme, just really slick theming.

50:28 And so that was, that was the first one.

50:30 We also sponsor a lot of view projects and we're going to be expanding this to like fast

50:34 API and some other ones that we just have to, you know, dot our eyes and everything and

50:39 cross our T's.

50:40 And then, so this is kind of an escalating rate intensity.

50:44 And the last thing is we've actually Prefect, the company has gotten into investing in certain

50:51 open source tools that we think are very compatible with some of the things we want to do.

50:55 So the big one, the headline one here is Textualize.

50:58 So Will, who the author of, yeah, exactly.

51:02 I always be afraid to say this a lot of things, I'm afraid I'm going to say it wrong.

51:04 So he's the author of Rich and Textual.

51:07 And as everyone knows now, it's all out there.

51:10 So he's building the service Textualize for hosting these text-based terminal applications and

51:15 distributing them through the web.

51:16 So in kind of an interesting sense, it's like spiritually similar to the hybrid model,

51:21 right?

51:21 You can kind of run one of these agents.

51:22 And we've always wanted to expose richer interactions with Prefect agents running in

51:27 your infrastructure through our UI.

51:28 And like, when we talked to Will, it was like, oh, this is it.

51:31 This is perfect.

51:32 And it's got all the right like theming differences.

51:35 So you'll be able to tell this is something you wrote.

51:37 It's like very text-driven compared to kind of our more branded, you know, assets lurking around

51:42 the UI.

51:43 And so, yeah, we invested in his company, yeah, in their seed realm.

51:46 I'm really glad to hear that.