Web Frameworks in Prod by Their Creators

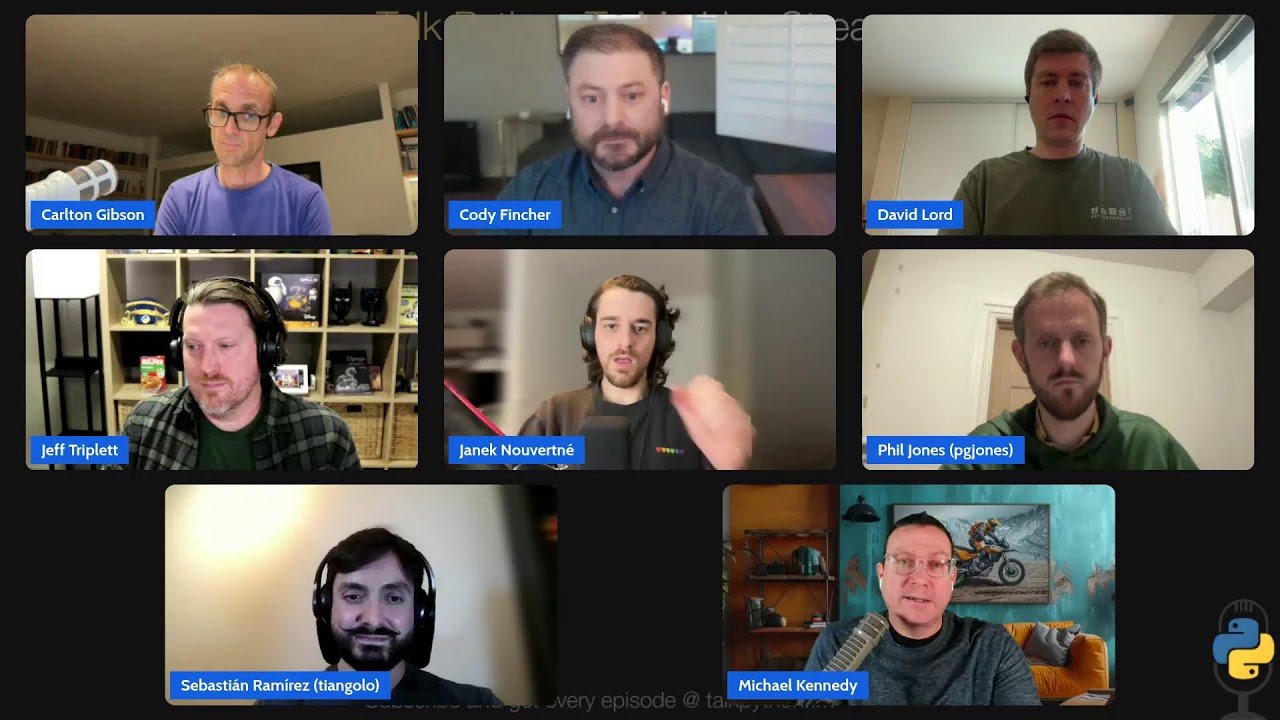

Episode Deep Dive

Guests and Background

This episode brings together an exceptional panel of Python web framework creators and maintainers for a deep dive into production deployment. Here are the guests:

Carlton Gibson - A former Django Fellow who spent five years working on Django itself. Carlton now builds production applications with Django and is part of the Django Steering Council. He maintains several packages in the Django ecosystem and recently contributed template partials to Django 6.0.

Jeff Triplett - Based in Lawrence, Kansas, Jeff is the newly elected president of the Django Software Foundation and a consultant at Revolution Systems. With 20 years of Django experience, he maintains Django Packages, produces the Django News newsletter, and has used nearly all the frameworks discussed in this episode.

Sebastian Ramirez - The creator of FastAPI, one of Python's fastest-growing web frameworks. Sebastian is now building FastAPI Cloud, a deployment platform designed to make deploying FastAPI applications as simple as running a single command.

David Lord - Lead maintainer of Pallets, the organization behind Flask, Jinja, Click, Werkzeug, ItsDangerous, and MarkupSafe. David has been the lead maintainer since 2019 and has recently created several new Flask extensions including Flask-SQLAlchemy-Lite and Flask-Email-Simplified.

Phil Jones - Creator of Quart (Flask with async/await support), author of the Hypercorn ASGI server, and a contributor to the Pallets organization. Phil uses Quart at work and has been instrumental in exploring HTTP/3 support in Python.

Janek Nouvertne - A Litestar maintainer for three years who works with Django, Flask, FastAPI, Quart, and Litestar deployments professionally. He brings a unique perspective of recommending different frameworks based on use cases rather than allegiance.

Cody Fincher - A Litestar maintainer for about four years who currently works at Google. He previously worked on cloud migrations for enterprises and has contributed many of Litestar's "optional batteries" that work across multiple frameworks.

What to Know If You're New to Python

Before diving into this episode's production-focused discussion, here are some foundational concepts that will help you follow along:

WSGI vs ASGI: WSGI (Web Server Gateway Interface) is the traditional Python web server protocol for synchronous apps, while ASGI (Asynchronous Server Gateway Interface) supports async/await patterns. Understanding this distinction is crucial for choosing the right server and deployment strategy.

async/await in Python: The async programming model allows handling many concurrent connections efficiently, but requires understanding when code is "blocking" versus "non-blocking." This episode heavily discusses the gotchas around this topic.

Process vs Thread Scaling: Python web apps can scale by running multiple processes (separate memory spaces) or multiple threads (shared memory). The GIL (Global Interpreter Lock) historically limited thread-based scaling, but free-threaded Python is changing this.

ORM and N+1 Queries: Object-Relational Mappers like Django ORM and SQLAlchemy can accidentally generate many database queries when iterating over related objects. Learning to spot and fix these patterns is essential for production performance.
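To make the WSGI and ASGI distinction above a little more concrete, here is a minimal sketch of one application in each style, using only the standard library. The `call_wsgi` and `call_asgi` helpers are hypothetical stand-ins for a real server such as Gunicorn or Uvicorn; they exist only so the two callables can be exercised without one.

```python
import asyncio


def wsgi_app(environ, start_response):
    """WSGI: a plain synchronous callable, invoked once per request."""
    body = b"hello from WSGI"
    start_response("200 OK", [("Content-Type", "text/plain"),
                              ("Content-Length", str(len(body)))])
    return [body]


async def asgi_app(scope, receive, send):
    """ASGI: a coroutine that exchanges event dicts with the server."""
    body = b"hello from ASGI"
    await send({"type": "http.response.start", "status": 200,
                "headers": [(b"content-type", b"text/plain")]})
    await send({"type": "http.response.body", "body": body})


def call_wsgi(app):
    """Hypothetical helper: drive a WSGI app without a real server."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    chunks = app({"REQUEST_METHOD": "GET", "PATH_INFO": "/"}, start_response)
    return captured["status"], b"".join(chunks)


def call_asgi(app):
    """Hypothetical helper: drive an ASGI app without a real server."""
    events = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(event):
        events.append(event)

    scope = {"type": "http", "method": "GET", "path": "/"}
    asyncio.run(app(scope, receive, send))
    return events
```

The WSGI callable returns its whole response from one function call, which is why a worker is busy until it finishes. The ASGI coroutine can await between events, which is what lets one worker interleave many connections.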

Key Points and Takeaways

1. The Foundation: Choose Your Server Stack Wisely

The panel unanimously agreed that production deployments should start simple and scale as needed. Carlton Gibson articulated the "old school" approach that remains highly effective: Nginx as a reverse proxy, a WSGI server with pre-fork workers for the core application, and an ASGI sidecar for long-lived connections like WebSockets or Server-Sent Events. The key insight is that you do not need to overcomplicate your stack - a single well-configured server can handle substantial traffic. Sebastian Ramirez noted that most developers should not be dealing with Kubernetes and hyperscaler complexity for typical applications.
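As a rough illustration of the pre-fork WSGI part of that stack, a Gunicorn configuration file is itself a Python module. The sketch below uses real Gunicorn setting names, but every value is an illustrative assumption rather than something recommended in the episode.

```python
# gunicorn.conf.py (a sketch; the values are illustrative assumptions)
import multiprocessing

# Listen on localhost only; the reverse proxy (Nginx or Caddy) accepts
# client connections and forwards them to this address.
bind = "127.0.0.1:8000"

# Number of pre-forked worker processes. (2 x cores) + 1 is the starting
# point suggested in Gunicorn's documentation, not a hard rule.
workers = multiprocessing.cpu_count() * 2 + 1

# Restart each worker after this many requests (plus random jitter) to
# limit the effect of slow memory leaks.
max_requests = 1000
max_requests_jitter = 100

# Seconds a worker may stay silent before it is killed and restarted.
timeout = 30
```

A file like this would typically be passed with `gunicorn -c gunicorn.conf.py myproject.wsgi:application`, where `myproject` is a placeholder for your own package. Long-lived connections such as WebSockets would go to a separate ASGI process, as described above.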

- Links and Tools:

- uvicorn.org - ASGI server that can now manage its own worker processes

- gunicorn.org - Battle-tested WSGI server for production

- github.com/emmett-framework/granian - Rust-based ASGI server with HTTP/2 support

- hypercorn.readthedocs.io - ASGI server with HTTP/3 support

- caddyserver.com - Modern reverse proxy alternative to Nginx

- nginx.org - Industry standard reverse proxy

2. Database Performance Is Your Biggest Lever

Multiple panelists emphasized that database interactions dominate most web application performance bottlenecks. Cody Fincher highlighted two critical issues: N+1 query problems where SQLAlchemy or Django ORM accidentally executes hundreds of queries for what should be one, and oversized connection pools that consume database CPU and RAM just managing connections. Carlton Gibson recommended using Django Debug Toolbar to identify duplicate queries and running SQL EXPLAIN to find missing indexes. A proper index on a frequently filtered column can turn a full table scan into an instant lookup - potentially a 100x improvement.
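The two database problems above can be reproduced without any ORM. The following sketch uses the standard library's `sqlite3` module with made-up `author` and `book` tables: it counts the SQL statements issued by an N+1 loop versus a single JOIN, then compares the query plan before and after adding an index. The schema and names are assumptions for illustration only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE author (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE book (id INTEGER PRIMARY KEY, title TEXT, author_id INTEGER);
""")
conn.executemany("INSERT INTO author VALUES (?, ?)",
                 [(i, f"author-{i}") for i in range(1, 51)])
conn.executemany("INSERT INTO book (title, author_id) VALUES (?, ?)",
                 [(f"book-{i}", (i % 50) + 1) for i in range(1, 201)])
conn.commit()

statements = []
conn.set_trace_callback(statements.append)  # record every SQL statement run


def titles_n_plus_one():
    """One query for the books, then one more per book for its author."""
    rows = conn.execute("SELECT title, author_id FROM book").fetchall()
    return [
        (title, conn.execute("SELECT name FROM author WHERE id = ?",
                             (author_id,)).fetchone()[0])
        for title, author_id in rows
    ]


def titles_joined():
    """The same data fetched in a single query with a JOIN."""
    return conn.execute(
        "SELECT book.title, author.name FROM book "
        "JOIN author ON author.id = book.author_id"
    ).fetchall()


statements.clear()
slow = titles_n_plus_one()
n_plus_one_count = len(statements)  # grows with the number of books

statements.clear()
fast = titles_joined()
joined_count = len(statements)      # stays constant

# Compare the plan for a filtered lookup before and after adding an index.
query = "EXPLAIN QUERY PLAN SELECT * FROM book WHERE author_id = 7"
plan_before = conn.execute(query).fetchall()[0][-1]
conn.execute("CREATE INDEX book_author_idx ON book (author_id)")
plan_after = conn.execute(query).fetchall()[0][-1]
```

In Django the JOIN version corresponds to `select_related()` or `prefetch_related()`, and SQLAlchemy offers eager-loading options for the same purpose. Printing `plan_before` and `plan_after` shows the planner switching from a table scan to an index search.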

- Links and Tools:

- github.com/jazzband/django-debug-toolbar - Essential tool for seeing database queries in development

- postgresql.org/docs/current/pgstatstatements.html - Postgres extension for tracking slow queries

3. The Critical Async Gotcha: Never Block in Async Code

Janek Nouvertne identified the single most common mistake in async Python applications: accidentally running blocking code in async functions. When you mark a function as async but call a blocking operation inside it, the event loop stalls, and that server process cannot handle any other request until the call completes. Sebastian Ramirez seconded this, noting that frameworks like FastAPI, Litestar, and others automatically run sync (plain def) functions in thread workers. The practical advice is to use regular def functions unless you are certain your code is fully non-blocking, and let the framework handle the thread pool execution.
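The effect is easy to demonstrate with plain `asyncio`, independent of any framework. In this sketch `time.sleep` stands in for any blocking call (a synchronous database driver, for example), and `serve` is a hypothetical helper that simulates several concurrent requests.

```python
import asyncio
import time


def blocking_io():
    """Stands in for a blocking call such as a synchronous DB query."""
    time.sleep(0.1)


async def bad_handler():
    blocking_io()  # blocks the event loop: no other coroutine can run


async def good_handler():
    await asyncio.to_thread(blocking_io)  # runs in a worker thread instead


async def serve(handler, requests=5):
    """Run several simulated requests concurrently; return elapsed seconds."""
    start = time.perf_counter()
    await asyncio.gather(*(handler() for _ in range(requests)))
    return time.perf_counter() - start


bad_elapsed = asyncio.run(serve(bad_handler))    # handlers run one after another
good_elapsed = asyncio.run(serve(good_handler))  # sleeps overlap in threads
```

With the blocking handler the five simulated requests are served strictly in sequence, so the elapsed time is about five times the sleep. Offloading to a thread lets them overlap, which is roughly what a framework does for you when you declare the endpoint with plain `def`.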

- Links and Tools:

- github.com/tiangolo/asyncer - Sebastian's library for converting blocking functions to async while preserving type information

- anyio.readthedocs.io - Async compatibility layer with thread pool utilities

4. Django 6.0's Game-Changing Background Tasks API

Django 6.0 introduced a pluggable task framework that provides a standard API for background tasks. Carlton Gibson explained that this is like "an ORM for tasks" - third-party library authors can now use Django's task API without tying themselves to Celery, Django-Q2, or any specific queue implementation. Application developers then choose their preferred backend. Jeff Triplett recommended Django-Q2 for smaller projects because it can use the database itself as the queue, eliminating the need for Redis or other infrastructure. David Lord also emphasized the pattern of deferring non-urgent work to background tasks to keep request/response cycles fast.
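The "ORM for tasks" idea is essentially an interface with swappable backends. The sketch below is not Django's API (see the task framework documentation linked below for that); it is a framework-free illustration with hypothetical names (`TaskBackend`, `ImmediateBackend`, `QueueBackend`) showing why library code can enqueue work without knowing which queue the application chose.

```python
from collections import deque
from typing import Any, Callable, Protocol


class TaskBackend(Protocol):
    """Hypothetical interface that library code depends on."""

    def enqueue(self, func: Callable[..., Any], *args: Any) -> None: ...


class ImmediateBackend:
    """Runs the task inline; handy for tests and local development."""

    def enqueue(self, func, *args):
        func(*args)


class QueueBackend:
    """Stores work for later. A real backend would persist it in a
    database or broker and run it in a separate worker process."""

    def __init__(self):
        self.pending = deque()

    def enqueue(self, func, *args):
        self.pending.append((func, args))

    def run_pending(self):
        while self.pending:
            func, args = self.pending.popleft()
            func(*args)


sent = []


def send_welcome_email(address):
    sent.append(address)  # placeholder for real email delivery


def register_user(address, backend: TaskBackend):
    """Library code: defers the slow part and returns quickly."""
    backend.enqueue(send_welcome_email, address)
    return "registered"
```

Calling `register_user("a@example.com", ImmediateBackend())` sends the email during the call, while passing a `QueueBackend` defers it until `run_pending()` is invoked. The calling code is identical in both cases, which is the property the panel highlighted.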

- Links and Tools:

- docs.djangoproject.com/en/6.0/topics/tasks/ - Django's new task framework documentation

- github.com/django-q2/django-q2 - Task queue for Django that can use the database as its broker

- github.com/django-tasks/django-tasks - Reference task backend by Jake Howard

5. HTMX and Template Partials Are Transforming Web Development

The panel expressed strong enthusiasm for HTMX as a way to add interactivity without full JavaScript frameworks. Carlton Gibson shared that his startup has "hardly a JSON endpoint in sight" after adopting HTMX - it changed how he writes websites entirely. Django 6.0 now includes template partials (originally Carlton's django-template-partials package), which allow defining reusable template fragments that work perfectly with HTMX partial updates. Janek Nouvertne noted that HTMX fills the gap between static HTML and full SPAs, making it possible to add reactivity with minimal overhead.
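The core server-side pattern is small: render one fragment, and return it alone when the request comes from HTMX. This sketch uses the standard library's `string.Template` in place of a real template engine, so the `ROW` and `PAGE` templates and the `todo_view` function are illustrative assumptions, and it skips the HTML escaping a real engine would apply.

```python
from string import Template

ROW = Template("<li id='todo-$id'>$title</li>")
PAGE = Template("<html><body><ul id='todos'>$rows</ul></body></html>")

TODOS = [{"id": 1, "title": "Write docs"}, {"id": 2, "title": "Ship it"}]


def render_rows(todos):
    """The 'partial': a fragment that can be rendered on its own."""
    return "".join(ROW.substitute(todo) for todo in todos)


def todo_view(headers):
    """Return only the fragment for HTMX requests, the full page otherwise.

    HTMX adds an `HX-Request: true` header to the requests it issues.
    """
    rows = render_rows(TODOS)
    if headers.get("HX-Request") == "true":
        return rows
    return PAGE.substitute(rows=rows)
```

A normal browser navigation gets the full page, while an HTMX-triggered request gets just the list items to swap into the existing page. Template partials give Django a built-in way to name and render such fragments from a single template file.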

- Links and Tools:

- htmx.org - Hypermedia-driven interactivity

- data-star.dev - Datastar, another hypermedia approach mentioned by David Lord

- github.com/carltongibson/django-template-partials - Template fragments for Django (now in core)

6. Upgrade Your Python Version for Free Performance

Sebastian Ramirez shared striking benchmarks: running FastAPI on Python 3.14 versus Python 3.10 shows nearly double the performance. This improvement comes from the Faster CPython initiative's continuous optimizations. David Lord almost convinced himself to drop the C extension from MarkupSafe because pure Python on modern versions got so much faster. Beyond CPU speed, newer Python versions also use significantly less memory, which compounds when running multiple worker processes.

- Links and Tools:

- docs.python.org/3/whatsnew/ - Python version change logs

- github.com/faster-cpython - The Faster CPython project

7. Coolify: Self-Hosted Platform-as-a-Service

Jeff Triplett introduced Coolify as a "boring service" that simplifies deployment significantly. It provides one-click installs for Postgres, automatic backups, and easy Docker container orchestration. Once you have one Django or Flask site working with it, duplicating that setup for new projects becomes trivial. Coolify can run as open-source self-hosted or as a managed service for around five dollars per month. The key value is abstracting away the rsync-files-and-hope deployment pattern while remaining simpler than Kubernetes.

- Links and Tools:

- coolify.io - Self-hostable Heroku/Vercel alternative

- docs.coolify.io - Coolify documentation

8. Free-Threaded Python: The Exciting (and Cautious) Future

The entire panel expressed excitement about free-threaded Python removing the GIL, while acknowledging real challenges ahead. Carlton Gibson noted that Django's sync-first nature means proper threads will help tremendously. Janek Nouvertne is "super excited" but cautious - msgspec only recently gained full free-threading support after significant work from core developers. The consensus is that third-party C extension libraries will be the sticking point. Phil Jones believes WSGI apps may benefit more than ASGI apps initially. David Lord ran Flask's test suite with pytest-freethreaded successfully, suggesting Flask applications may adapt well due to years of emphasizing thread-safe patterns.
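If you want to check what your own interpreter does, the sketch below reports whether it is a free-threaded build and times a CPU-bound function across threads. `gil_status`, `cpu_bound`, and `timed` are hypothetical helper names; the `sys._is_gil_enabled()` call only exists on Python 3.13 and later, so the code falls back to assuming the GIL is present.

```python
import sys
import sysconfig
import time
from concurrent.futures import ThreadPoolExecutor


def gil_status():
    """Describe whether this interpreter can run threads in parallel."""
    free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() exists on Python 3.13+; assume the GIL otherwise.
    check = getattr(sys, "_is_gil_enabled", None)
    gil_enabled = check() if check is not None else True
    return {"free_threaded_build": free_threaded_build,
            "gil_enabled": gil_enabled}


def cpu_bound(n):
    """Pure-Python arithmetic: the kind of work the GIL serializes."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def timed(workers, jobs=4, n=200_000):
    """Run `jobs` CPU-bound calls on `workers` threads; return (seconds, results)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(cpu_bound, [n] * jobs))
    return time.perf_counter() - start, results
```

Comparing `timed(1)` with `timed(4)` on a regular build usually shows little difference, because only one thread executes bytecode at a time. On a free-threaded build with the GIL disabled, the multi-threaded run has the chance to scale with the number of cores.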

- Links and Tools:

- github.com/tonybaloney/pytest-freethreaded - Anthony Shaw's tool for testing free-threading compatibility

- peps.python.org/pep-0703/ - PEP 703: Making the GIL Optional

9. Simplify Deployment with Container-Based Approaches

David Lord emphasized that for many applications, a single Docker container running Flask is dramatically more performant than legacy systems. His projects often have fewer than 100 users, and clients are surprised how little infrastructure is needed. Phil Jones runs Hypercorn behind AWS load balancers in ECS, noting that it is usually the database that needs scaling, not the application servers. Sebastian Ramirez pointed to pythonspeed.com for excellent Docker optimization guidance - following their patterns results in a 20-line Dockerfile that performs well.

- Links and Tools:

- pythonspeed.com/docker/ - Docker optimization articles for Python

- fly.io - Simple container deployment platform

- render.com - Developer-friendly hosting

- railway.app - Infrastructure platform

10. Performance Quick Wins: JSON Serializers and uvloop

Phil Jones mentioned two low-effort performance improvements. First, swapping the JSON serializer for a faster alternative like orjson can provide noticeable speedups, since JSON serialization is common in API responses. Second, using uvloop as the event loop provides measurable performance gains for async applications. David Lord added that Flask now has a pluggable JSON provider, making it easy to substitute faster serializers without changing application code.
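One low-risk way to try the serializer swap is to hide it behind a pair of functions, so the rest of the code does not care which library is installed. This is a sketch under that assumption: `dumps` and `loads` are hypothetical wrapper names, and orjson is treated as optional, with a fallback to the standard library.

```python
import json

try:
    import orjson  # third-party and optional in this sketch
except ImportError:
    orjson = None


def dumps(obj) -> bytes:
    """Serialize to compact UTF-8 JSON bytes, using orjson when installed."""
    if orjson is not None:
        return orjson.dumps(obj)
    text = json.dumps(obj, separators=(",", ":"), ensure_ascii=False)
    return text.encode("utf-8")


def loads(data: bytes):
    """Parse JSON bytes with whichever library is available."""
    if orjson is not None:
        return orjson.loads(data)
    return json.loads(data)
```

Note that orjson returns `bytes` rather than `str`, which is why the fallback encodes its output too. Frameworks with a pluggable JSON layer let you register functions like these once instead of calling them by hand.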

- Links and Tools:

- github.com/ijl/orjson - Fast, correct JSON serialization

- github.com/MagicStack/uvloop - Ultra fast asyncio event loop

11. CDNs: Make Scaling Someone Else's Problem

Jeff Triplett emphasized that the best way to handle scale is to not handle it at all. Putting a CDN like Cloudflare or Fastly in front of your application means cached content never hits your servers. This is particularly valuable for content-heavy sites. Learning proper cache headers and vary headers is an investment that pays dividends - once configured correctly, traffic spikes become the CDN's problem rather than yours.
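What "proper cache headers" means in practice is mostly two response headers. The sketch below builds them as a list of tuples that any WSGI or ASGI framework could attach to a response; the function name, the default lifetimes, and the 12x multiplier for shared caches are illustrative assumptions, not values from the episode.

```python
def cache_headers(*, public=True, max_age=300, vary=("Accept-Encoding",)):
    """Build response headers that control CDN and browser caching.

    max-age applies to browsers; s-maxage applies to shared caches
    such as CDNs and takes precedence there.
    """
    if not public:
        # Personalized responses must not be stored by shared caches.
        return [("Cache-Control", "private, no-store")]
    shared_age = max_age * 12  # assumption: let the CDN keep it longer
    return [
        ("Cache-Control", f"public, max-age={max_age}, s-maxage={shared_age}"),
        ("Vary", ", ".join(vary)),
    ]
```

The `Vary` header tells the CDN which request headers change the response, so it stores separate copies only where needed. Listing a header such as `Cookie` there would effectively disable caching for logged-in users, which is sometimes exactly what you want.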

- Links and Tools:

- cloudflare.com - CDN and security services

- fastly.com - Edge cloud platform

12. SQLite in Production: Yes, Really

David Lord revealed that the Pallets website runs Flask with SQLite, inspired by Andrew Godwin's "static dynamic sites" concept. Markdown files are loaded into an in-memory SQLite database at startup for fast querying. Janek Nouvertne mentioned running SQLite in the browser with DuckDB for analysis - deploying just static files to Nginx. The panel agreed that SQLite is perfectly viable for many production use cases when concurrency requirements are modest.
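The "load Markdown into in-memory SQLite at startup" idea can be sketched in a few lines with the standard library. This is not the Pallets website's code: the `PAGES` dictionary stands in for Markdown files on disk, and the table layout and `build_index` helper are hypothetical.

```python
import sqlite3

# Stand-in for Markdown files on disk; a real site would read them from a
# content directory at startup. Names and fields here are hypothetical.
PAGES = {
    "intro.md": "# Welcome\nFirst post.",
    "release.md": "# Release notes\nWhat changed.",
}


def build_index(pages):
    """Load every page into a fresh in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE page (slug TEXT PRIMARY KEY, title TEXT, body TEXT)"
    )
    for name, text in pages.items():
        first_line, _, body = text.partition("\n")
        slug = name.removesuffix(".md")
        title = first_line.lstrip("# ")
        conn.execute("INSERT INTO page VALUES (?, ?, ?)", (slug, title, body))
    conn.commit()
    return conn


db = build_index(PAGES)
titles = [row[0] for row in db.execute("SELECT title FROM page ORDER BY slug")]
```

Because the database is rebuilt from files on every start, there is nothing to back up or migrate, and the content stays in version control. Each process gets its own copy, so this approach fits read-only content rather than data that users modify.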

- Links and Tools:

- sqlite.org - The most deployed database engine

- duckdb.org - Analytical database that can query SQLite files

Interesting Quotes and Stories

"I'm literally having the time of my life. I spent five years as a Django Fellow working on Django and I just built up this backlog of things I wanted to do. And every day I sit down on my computer thinking, oh, what's today? And every day, a delight." -- Carlton Gibson on building with Django instead of building Django

"If you maintain a framework yourself, you tend to always recommend it for everything. But I noticed it's not actually true. There's actually quite a few cases where I don't recommend Litestar. I recommend, you know, just use Django for this or use Flask for that or use FastAPI for this because, well, they are quite different after all." -- Janek Nouvertne on framework recommendations

"We suffer all the cloud pains so that people don't have to deal with that. And yeah, it's painful to build, but it's so cool to use it." -- Sebastian Ramirez on building FastAPI Cloud

"I almost convinced myself that I can drop a C extension for just a Python upgrade instead. That was pretty impressive." -- David Lord on Python performance improvements

"If you make something an async function, you should be absolutely sure that it's non-blocking. Because if you're running an ASGI app and you're blocking anywhere, your whole application server is blocked completely." -- Janek Nouvertne on the critical async gotcha

"The best way to scale something is just to not do it, avoid the process completely." -- Jeff Triplett on CDNs and caching

"It'll run on a potato." -- David Lord on Flask's minimal resource requirements

"The other day I had to change the account email in one of the AWS accounts. I think I spent four hours." -- Sebastian Ramirez on hyperscaler complexity

"HTMX really changed the way I write websites. We're three years in, we've hardly got a JSON endpoint in sight." -- Carlton Gibson on the impact of HTMX

"I would rather somebody start with HTMX than I would start with React if you don't need it. Because React can be total overkill." -- Jeff Triplett on choosing the right frontend approach

Key Definitions and Terms

WSGI (Web Server Gateway Interface) - The traditional standard interface between Python web applications and web servers. Synchronous by design: each worker process or thread handles one request at a time.

ASGI (Asynchronous Server Gateway Interface) - The async counterpart to WSGI that supports long-lived connections, WebSockets, and concurrent request handling within a single worker.

N+1 Query Problem - A database anti-pattern where fetching N records results in N+1 queries because related objects are fetched one at a time in a loop instead of in a single batch query.

Connection Pooling - Managing a pool of database connections that are reused across requests. Oversized pools waste database resources; undersized pools create bottlenecks.

Pre-fork Workers - A scaling pattern where the main server process forks multiple worker processes at startup, each handling requests independently with its own memory space.

GIL (Global Interpreter Lock) - A mutex in CPython that allows only one thread to execute Python bytecode at a time. Free-threaded Python removes this limitation.

Free-Threaded Python - Python builds without the GIL, enabling true parallel execution of Python code across multiple threads. Available experimentally starting in Python 3.13.

Template Partials - Reusable named fragments within templates that can be rendered independently, enabling efficient partial page updates with HTMX.

Hypermedia - An architectural style where the server returns HTML (or other hypermedia) instead of JSON, letting the browser handle rendering and reducing client-side JavaScript.

Learning Resources

If you want to go deeper on the topics covered in this episode, here are some courses from Talk Python Training that can help build your foundation:

Modern APIs with FastAPI and Python: Learn to build production-ready APIs with FastAPI, covering deployment to cloud VMs and best practices for async Python development.

HTMX + Django: Modern Python Web Apps, Hold the JavaScript: Discover how to build interactive web applications using HTMX with Django, the same approach Carlton Gibson praised for transforming his development workflow.

HTMX + Flask: Modern Python Web Apps, Hold the JavaScript: The Flask counterpart for building modern, interactive web apps without heavy JavaScript frameworks.

Django: Getting Started: Build your first Django project and understand the ORM, views, and templating system that the Django panelists discussed.

Async Techniques and Examples in Python: Master the async and parallel programming concepts the panel emphasized, including asyncio, threading, and avoiding the blocking code pitfalls Janek and Sebastian warned about.

Full Web Apps with FastAPI: Learn to build complete HTML web applications with FastAPI, not just APIs, as Sebastian builds with his own framework.

Building Data-Driven Web Apps with Flask and SQLAlchemy: Comprehensive Flask training covering database integration with SQLAlchemy - essential for understanding the ORM performance tips discussed.

Overall Takeaway

This episode delivers a powerful message: production Python web development in 2026 is remarkably accessible, performant, and flexible. The creators behind FastAPI, Flask, Django, Quart, and Litestar demonstrated both deep expertise and refreshing pragmatism - they recommend competing frameworks when appropriate and emphasize simplicity over complexity.

The recurring theme was "start simple and scale as needed." A single Docker container, a Postgres database, and a well-chosen WSGI or ASGI server can handle far more traffic than most applications will ever see. The biggest performance gains come not from exotic infrastructure but from fundamentals: proper database indexes, avoiding N+1 queries, caching aggressively, and understanding when async helps versus hurts.

Looking ahead, free-threaded Python promises to unlock new levels of performance for synchronous code, potentially eliminating the need for multiple worker processes. The panel's measured optimism - excited yet cautious about third-party library compatibility - reflects mature engineering judgment.

Whether you are deploying your first web app or optimizing a high-traffic production system, the message is clear: Python's web framework ecosystem has never been stronger, the tools have never been better documented, and the community of maintainers represented in this episode is actively working to make your deployments simpler and faster. Trust the fundamentals, measure before optimizing, and remember that sometimes the best scaling strategy is simply getting a bigger box.

Links from the show

Carlton Gibson - Django: github.com

Sebastian Ramirez - FastAPI: github.com

David Lord - Flask: davidism.com

Phil Jones - Flask and Quart (async): pgjones.dev

Janek Nouvertne - Litestar: github.com

Cody Fincher - Litestar: github.com

Jeff Triplett - Django: jefftriplett.com

Django: www.djangoproject.com

Flask: flask.palletsprojects.com

Quart: quart.palletsprojects.com

Litestar: litestar.dev

FastAPI: fastapi.tiangolo.com

Coolify: coolify.io

ASGI: asgi.readthedocs.io

WSGI (PEP 3333): peps.python.org

Granian: github.com

Hypercorn: github.com

uvicorn: uvicorn.dev

Gunicorn: gunicorn.org

Hypercorn: hypercorn.readthedocs.io

Daphne: github.com

Nginx: nginx.org

Docker: www.docker.com

Kubernetes: kubernetes.io

PostgreSQL: www.postgresql.org

SQLite: www.sqlite.org

Celery: docs.celeryq.dev

SQLAlchemy: www.sqlalchemy.org

Django REST framework: www.django-rest-framework.org

Jinja: jinja.palletsprojects.com

Click: click.palletsprojects.com

HTMX: htmx.org

Server-Sent Events (SSE): developer.mozilla.org

WebSockets (RFC 6455): www.rfc-editor.org

HTTP/2 (RFC 9113): www.rfc-editor.org

HTTP/3 (RFC 9114): www.rfc-editor.org

uv: docs.astral.sh

Amazon Web Services (AWS): aws.amazon.com

Microsoft Azure: azure.microsoft.com

Google Cloud Run: cloud.google.com

Amazon ECS: aws.amazon.com

AlloyDB for PostgreSQL: cloud.google.com

Fly.io: fly.io

Render: render.com

Cloudflare: www.cloudflare.com

Fastly: www.fastly.com

Watch this episode on YouTube: youtube.com

Episode #533 deep-dive: talkpython.fm/533

Episode transcripts: talkpython.fm

Theme Song: Developer Rap

🥁 Served in a Flask 🎸: talkpython.fm/flasksong

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript

01:01:38 I started to meet.

01:01:40 And we're ready to roll.

01:01:43 Upgrade the code.

01:01:45 No fear of getting whole.

01:01:48 We tapped into that modern vibe over King Storm.

01:01:53 Talk Python To Me, async is the norm.
