Serverless Python in 2024

Episode Deep Dive

Guest and Background

Tony Sherman

Tony began his career in the cable industry, doing everything from door-to-door installations to outside-plant work. After realizing the limited growth opportunities in that field, he took an introduction to programming class in Python and became captivated by software development. Now, Tony works as a seasoned Python developer focusing on serverless programming—especially with AWS Lambda—in domains such as IoT and school bus safety applications.

1. What Is Serverless?

- Definition: “Serverless” does not mean “no servers,” but rather that you, as the developer, do not manage the underlying server infrastructure. Instead, you focus on writing functions or code that runs in a fully managed environment.

- AWS Lambda as the Flagship Example: AWS Lambda is one of the earliest and most popular serverless function services. Other notable providers include Google Cloud Functions, Azure Functions, Vercel Functions, DigitalOcean Functions, and Cloudflare Workers.

2. Why Choose Serverless?

- Spiky or Irregular Traffic: For workloads like school bus IoT, usage is high only at certain times of day (morning and afternoon). Serverless scales automatically for these “bursty” or unpredictable workloads, saving cost and ops overhead.

- Reduced DevOps: You no longer need to provision, patch, and secure entire virtual machines or Kubernetes clusters. The cloud provider takes care of scaling and server maintenance.

- Cost Model: AWS Lambda's free tier includes 1 million requests and 400,000 GB-seconds of compute per month. Beyond that, you pay per request plus duration, which is metered per millisecond and scaled by the memory you configure. It can be cost-effective if you do not have heavy, constant workloads.
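As a rough illustration of how requests, duration, and memory combine into a bill, the sketch below estimates a monthly cost. The prices are assumptions (commonly published x86 on-demand rates of USD 0.20 per million requests and about USD 0.0000166667 per GB-second). Real prices vary by region and architecture and change over time, and the free tier is ignored here. The workload numbers are made up.

```python
# Rough AWS Lambda cost estimator: a sketch, not an official calculator.
# ASSUMPTION: illustrative x86 on-demand rates; check current AWS pricing.
PRICE_PER_MILLION_REQUESTS = 0.20   # USD, assumed
PRICE_PER_GB_SECOND = 0.0000166667  # USD, assumed


def monthly_cost(requests: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate monthly cost in USD, ignoring the free tier."""
    request_cost = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    # Compute is billed in GB-seconds: duration (s) x configured memory (GB).
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * PRICE_PER_GB_SECOND
    return request_cost + compute_cost


if __name__ == "__main__":
    # Hypothetical workload: the same function at two memory settings.
    # Lambda allocates CPU in proportion to memory, so duration may drop.
    small = monthly_cost(requests=5_000_000, avg_duration_ms=800, memory_mb=256)
    large = monthly_cost(requests=5_000_000, avg_duration_ms=150, memory_mb=1024)
    print(f"256 MB:  ${small:.2f}")
    print(f"1024 MB: ${large:.2f}")
```

In this made-up scenario the 1024 MB configuration is cheaper even though each millisecond costs four times as much, because it is assumed to finish more than five times faster. AWS Lambda Power Tuning measures that tradeoff with real invocations instead of guessed durations.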

3. AWS Lambda Considerations

Packaging and Deployment

- Size Constraints: Zip-based packages have size limits (50 MB zipped for direct upload, 250 MB unzipped including layers). Container-image Lambdas can be much larger (up to 10 GB); they historically paid a larger cold-start penalty, though that gap has been narrowing.

- Optimizing with AWS Lambda Power Tuning:

- AWS Lambda Power Tuning helps find the ideal CPU/memory setting for cost or performance. It tries multiple memory allocations, times them, and graphs the tradeoffs.

- Dependency Management with Pants:

- Pants Build can automatically bundle only the necessary Python code and libraries for each function based on imports, preventing bloated packages and helping reduce cold-start times.
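Before reaching for a build tool, it can help to see where the bytes actually go. The sketch below assumes you have already installed your handler and its dependencies into a local folder (for example with `pip install -r requirements.txt -t build`). The folder name and the helper functions are hypothetical, and the check only approximates how AWS counts package size.

```python
from pathlib import Path

# Limit for zip-based functions: 250 MB unzipped, including layers.
# (The 50 MB zipped limit applies only to direct uploads.)
UNZIPPED_LIMIT_BYTES = 250 * 1024 * 1024


def unzipped_size(path: Path) -> int:
    """Total size in bytes of every file under `path`."""
    return sum(p.stat().st_size for p in path.rglob("*") if p.is_file())


def largest_entries(build_dir: Path, top: int = 5) -> list[tuple[str, int]]:
    """Top-level packages/modules in build_dir, biggest first."""
    sizes = {}
    for child in build_dir.iterdir():
        sizes[child.name] = child.stat().st_size if child.is_file() else unzipped_size(child)
    return sorted(sizes.items(), key=lambda kv: kv[1], reverse=True)[:top]


def fits_in_zip_lambda(build_dir: str, layers_bytes: int = 0) -> bool:
    """Print a size report; return True if the unzipped total fits the limit."""
    root = Path(build_dir)
    total = unzipped_size(root) + layers_bytes
    print(f"unzipped total: {total / 1_048_576:.1f} MB")
    for name, size in largest_entries(root):
        print(f"  {name:<30} {size / 1_048_576:.1f} MB")
    return total <= UNZIPPED_LIMIT_BYTES
```

Calling `fits_in_zip_lambda("build")` in a CI step surfaces the heaviest dependencies early. Pants tackles the same problem from the other side, by including only what each function actually imports.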

Cold Starts and Performance

- Cold Starts: The first request to a new or scaled-out Lambda instance takes longer because the environment must load libraries and establish connections.

- Warm Lambdas: Once “warmed,” additional requests on the same Lambda run faster. Anything defined outside the handler (e.g., database connections) may be reused across invocations.
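The warm-start reuse described above usually looks like the following pattern: expensive setup lives at module level and runs at most once per execution environment. In this sketch `sqlite3` is only a stand-in for a real database or AWS client, and the handler is hypothetical.

```python
import sqlite3
import time

# Module-level state lives as long as the execution environment does,
# so it survives between warm invocations.
_connection = None


def _get_connection() -> sqlite3.Connection:
    """Create the connection on first use (the cold start), then reuse it."""
    global _connection
    if _connection is None:
        _connection = sqlite3.connect(":memory:")
        _connection.execute("CREATE TABLE pings (received_at REAL)")
    return _connection


def handler(event, context):
    conn = _get_connection()  # cheap on warm invocations
    conn.execute("INSERT INTO pings VALUES (?)", (time.time(),))
    (count,) = conn.execute("SELECT COUNT(*) FROM pings").fetchone()
    return {"statusCode": 200, "body": f"pings seen by this environment: {count}"}
```

Calling `handler` twice in one process shows the count climbing, because the same connection (and its in-memory table) is reused, as a warm Lambda would reuse it. A cold start begins again from an empty environment.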

Databases

- DynamoDB: A serverless NoSQL database that scales well for spiky, high-volume usage. Because it is accessed through HTTPS API calls rather than persistent database connections, no connection pooling is needed.

- RDS (Relational Databases): To mitigate issues with large numbers of connections, consider RDS Proxy for connection pooling when Lambdas interact with MySQL/PostgreSQL in AWS.
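As an example of the DynamoDB side, here is a handler that records a bus location ping. The table, its `TABLE_NAME` environment variable, and the attribute names are hypothetical. `boto3.resource("dynamodb").Table(...).put_item(Item=...)` is the standard boto3 call. One gotcha: boto3 rejects Python floats for DynamoDB numbers, so the coordinates are converted to `Decimal`.

```python
import os
from decimal import Decimal

_table = None  # created once per execution environment, reused while warm


def _get_table():
    global _table
    if _table is None:
        import boto3  # lazy import: local unit tests can inject a fake table instead
        _table = boto3.resource("dynamodb").Table(os.environ["TABLE_NAME"])
    return _table


def handler(event, context, table=None):
    # Each put_item is an HTTPS request to DynamoDB; there is no database
    # connection pool to exhaust when many Lambdas scale out at once.
    table = table if table is not None else _get_table()
    table.put_item(
        Item={
            "bus_id": event["bus_id"],
            "ts": event["ts"],
            # boto3 raises TypeError for float values; Decimal is accepted.
            "lat": Decimal(str(event["lat"])),
            "lon": Decimal(str(event["lon"])),
        }
    )
    return {"statusCode": 200}
```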

4. Observability: Logging & Tracing

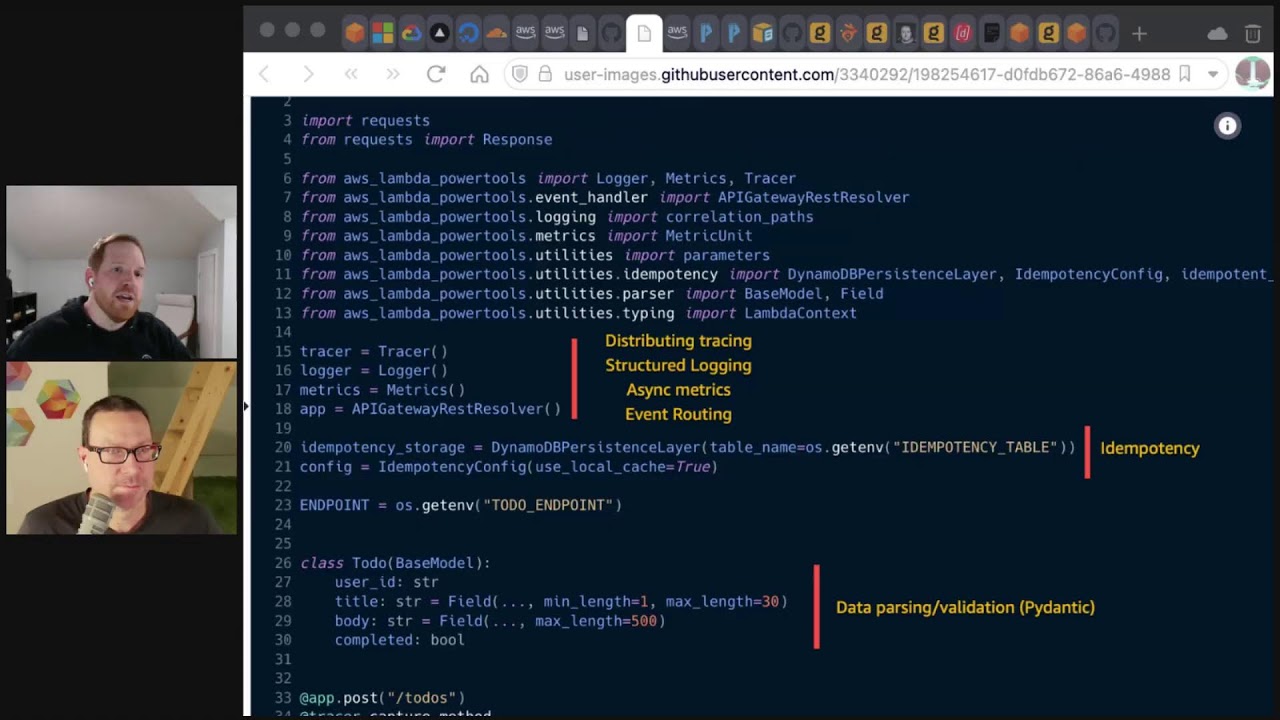

AWS Lambda Powertools

- AWS Lambda Powertools for Python is a toolkit that codifies best practices for logging, tracing, and structured events on AWS Lambda.

- Offers a logging utility that outputs structured JSON for easier querying in services like CloudWatch or external log aggregators (Datadog, Splunk, etc.).

- Facilitates integrating with Pydantic for input validation and event parsing.
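Powertools' `Logger` produces structured JSON for you. To show what that output looks like in practice, here is a sketch of the same idea that uses only the standard library. It is not the Powertools implementation, and the field names and the `bus-tracker` service name are illustrative choices.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "message": record.getMessage(),
            "service": self.service,
            "timestamp": self.formatTime(record),
        }
        # Extra key/value pairs passed via logger.info(..., extra={"fields": {...}})
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry)


def get_logger(service: str) -> logging.Logger:
    logger = logging.getLogger(service)
    if not logger.handlers:  # don't stack handlers on warm invocations
        stream = logging.StreamHandler()
        stream.setFormatter(JsonFormatter(service))
        logger.addHandler(stream)
        logger.setLevel(logging.INFO)
        # The root logger may already have a handler (e.g. in the Lambda
        # runtime); not propagating avoids printing every line twice.
        logger.propagate = False
    return logger


logger = get_logger("bus-tracker")  # hypothetical service name


def handler(event, context):
    logger.info("ping received", extra={"fields": {"bus_id": event.get("bus_id")}})
    return {"statusCode": 200}
```

Because every line is a JSON object, CloudWatch Logs Insights or an external aggregator can filter on a field such as `bus_id` instead of running regexes over free text.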

Tracing Tools

- AWS X-Ray can trace Lambda performance, including cold starts and external calls.

- Datadog APM automatically detects SQL queries and other actions, showing you breakdowns of execution time.

- Tracing is especially useful in microservices architectures where many services are called in one request.

5. Testing Serverless Code

- Local Testing: Basic unit tests can be run locally with standard Python tooling (e.g., pytest). However, simulating the entire AWS environment (Lambda runtime + AWS services) can be cumbersome.

- Integration Testing: Often involves deploying to a real or QA/staging environment in the cloud to verify interactions with queues (SQS), databases, or other services. Some teams spin up separate, temporary environments (e.g., ephemeral environments per pull request) to run more robust tests.
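For the local unit tests mentioned above, a handler can often be tested as a plain function with a hand-built event and a fake context object. The handler, the event shape (loosely modeled on an API Gateway proxy event), and `FakeContext` are hypothetical. Only `aws_request_id` mirrors an attribute of the real Lambda context object.

```python
import json
from dataclasses import dataclass


def handler(event, context):
    body = json.loads(event.get("body") or "{}")
    if "bus_id" not in body:
        return {"statusCode": 400, "body": json.dumps({"error": "bus_id required"})}
    return {
        "statusCode": 200,
        "body": json.dumps({"bus_id": body["bus_id"], "request_id": context.aws_request_id}),
    }


@dataclass
class FakeContext:
    """Provides only the context attributes this handler reads."""
    aws_request_id: str = "test-request-id"
    function_name: str = "bus-tracker"


def test_handler_accepts_valid_ping():
    event = {"body": json.dumps({"bus_id": "bus-7"})}
    response = handler(event, FakeContext())
    assert response["statusCode"] == 200
    assert json.loads(response["body"])["bus_id"] == "bus-7"


def test_handler_rejects_missing_bus_id():
    response = handler({"body": "{}"}, FakeContext())
    assert response["statusCode"] == 400
```

Running `pytest` on this file exercises the handler's logic in milliseconds. How it behaves against real SQS queues or databases is still a job for the integration environment.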

Relevant Tools and Links

AWS Lambda: https://aws.amazon.com/lambda/

AWS Lambda Power Tuning: https://github.com/alexcasalboni/aws-lambda-power-tuning

AWS Lambda Powertools for Python: https://awslabs.github.io/aws-lambda-powertools-python/

Datadog (APM / Observability): https://www.datadoghq.com/

Pants Build: https://www.pantsbuild.org/

RDS Proxy: https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/rds-proxy.html

Overall Takeaway

Serverless computing in Python continues to mature, with AWS Lambda among the most widely adopted options. Tools like AWS Lambda Powertools simplify structured logging, tracing, and data validation, while build systems such as Pants help minimize package size and manage dependencies more intelligently. Organizations that deal with spiky traffic, or prefer a managed environment over self-hosting servers, stand to benefit from a serverless approach. By pairing thorough unit tests with limited integration or QA deployments, teams can streamline development while achieving high reliability and performance.

Links from the show

Tony Sherman: linkedin.com

PyCon serverless talk: youtube.com

AWS re:Invent talk: youtube.com

Powertools for AWS Lambda: docs.powertools.aws.dev

Pantsbuild: The ergonomic build system: pantsbuild.org

aws-lambda-power-tuning: github.com

import-profiler: github.com

AWS Fargate: aws.amazon.com

Run functions on demand. Scale automatically.: digitalocean.com

Vercel: vercel.com

Deft: deft.com

37signals, "We stand to save $7m over five years from our cloud exit": world.hey.com

The Global Content Delivery Platform That Truly Hops: bunny.net

Watch this episode on YouTube: youtube.com

Episode #458 deep-dive: talkpython.fm/458

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript


00:00 What is the state of serverless computing and Python in 2024?

00:03 What are some of the new tools and best practices?

00:06 Well, we're lucky to have Tony Sherman, who has a lot of practical experience

00:11 with serverless programming on the show.

00:13 This is Talk Python To Me, episode 458, recorded January 25th, 2024.

00:19 Welcome to Talk Python To Me, a weekly podcast on Python.

00:37 This is your host, Michael Kennedy.

00:39 Follow me on Mastodon, where I'm @mkennedy, and follow the podcast using @talkpython,

00:44 both on fosstodon.org.

00:46 Keep up with the show and listen to over seven years of past episodes at talkpython.fm.

00:52 We've started streaming most of our episodes live on YouTube.

00:55 Subscribe to our YouTube channel over at talkpython.fm/youtube to get notified about upcoming shows

01:01 and be part of that episode.

01:03 This episode is brought to you by Sentry.

01:05 Don't let those errors go unnoticed.

01:07 Use Sentry like we do here at Talk Python.

01:09 Sign up at talkpython.fm/sentry.

01:12 And it's brought to you by Mailtrap, an email delivery platform that developers love.

01:18 Try for free at mailtrap.io.

01:21 Tony, welcome to Talk Python To Me.

01:23 Thank you.

01:24 Thanks for having me.

01:25 Fantastic to have you here.

01:26 It's going to be really fun to talk about serverless.

01:29 You know, the joke with the cloud is, well, I know you call it the cloud,

01:33 but it's really just somebody else's computer.

01:35 But we're not even talking about computer.

01:37 We're just talking about functions.

01:38 Maybe it's someone else's function.

01:40 I don't know.

01:40 We're going to find out.

01:40 Yeah, I actually, I saw a recent article about server-free recently with somebody trying to,

01:46 yeah, move completely.

01:48 Yeah.

01:49 Yeah.

01:49 Because as you might know, serverless doesn't mean actually no servers.

01:53 Of course.

01:54 Of course.

01:54 Server-free.

01:56 All right.

01:56 So we could just get the thing to run on the BitTorrent network.

02:00 Got it.

02:01 Okay.

02:01 Yeah.

02:02 I don't know.

02:03 I don't know.

02:03 We'll figure it out.

02:04 But it's going to be super fun.

02:06 And we're going to talk about your experience working with serverless.

02:10 We'll talk about some of the choices people have out there and also some of the tools that we can use to do things like observe and test our serverless code.

02:18 Yeah.

02:19 For that, though, tell us a bit about yourself.

02:21 Sure.

02:22 So I'm actually a career changer.

02:26 So I worked in the cable industry for about 10 years and doing a lot of different things

02:34 from installing, you know, knock-on-the-door cable guy to working on more of the outside plant.

02:40 But at some point, I was seeing limits of career path there.

02:46 And so my brother-in-law is a software engineer.

02:50 And I had already started kind of going back to school, finishing my degree.

02:54 And I was like, okay, well, maybe I should kind of look into this.

02:57 And so I took an intro to programming class.

03:00 It was in Python.

03:01 And that just kind of, yeah, led me down this path.

03:05 So now for the past, I don't know, four years or so, been working professionally in the software world,

03:09 started out in a QA role at an IoT company.

03:14 And now, yeah, doing a lot of serverless programming in Python these days.

03:19 Second company now, but that does some school bus safety products.

03:24 Interesting.

03:25 Very cool.

03:26 Yeah.

03:26 Yep.

03:27 But a lot of Python and a lot of serverless.

03:29 Well, serverless and IoT, I feel like they go pretty hand in hand.

03:34 Yes.

03:35 Yep.

03:35 Yeah.

03:36 Another thing is with serverless is when you have very like spiky traffic.

03:42 Like if you think about school buses that, you know, you have a lot coming on twice a day.

03:47 To bimodal.

03:48 Exactly.

03:48 Like the 8 a.m. shift and then the 2:30 to 3 shift.

03:52 So, yeah, that's a really good use case for serverless is something like that.

03:57 Okay.

03:58 Are you enjoying doing the programming stuff instead of the cable stuff?

04:01 Absolutely.

04:02 I sometimes I live in Michigan, so I look outside and look at, you know, the snow coming down or these storms.

04:10 And yeah, I just, yeah, I really, some people are like, you don't miss being outside?

04:14 I'm like, maybe every once in a while, but I can, I can go walk outside on a nice day.

04:19 You can choose to go outside.

04:21 You're not ready to go outside in the sleet or rain.

04:24 Yeah.

04:25 Yeah, absolutely.

04:26 We just had a mega storm here and just the huge tall trees here in Oregon just fell left and right.

04:33 And there's in every direction that I look, there's a large tree on top of one of the houses of my neighbors.

04:39 Oh, man.

04:40 Maybe a house or two over.

04:42 But it just took out all the, everything that was a cable in the air was taken out.

04:46 So it's just been a swarm of people who are out in 13 degree Fahrenheit, you know, negative nine Celsius weather.

04:52 And I'm thinking, you know, not really choosing to be out there today, probably.

04:55 Right.

04:56 Excellent.

04:57 Well, thanks for that introduction.

04:58 I guess maybe we could, a lot of people probably know what serverless is, but I'm sure there's a lot who are not even really aware of what serverless programming is.

05:07 Right.

05:08 Let's talk about what's the idea.

05:10 What's the, what's the Zen of this?

05:12 Yeah.

05:13 So, yeah, I kind of, you know, made the joke that serverless doesn't mean there are no servers, but, and there's, hopefully I don't butcher it too much, but it's more like, functions as a service.

05:25 There's other things that can be serverless too.

05:28 Like there's, you know, serverless databases or, a lot of different, services that can be serverless.

05:35 Meaning you don't have to think about like how to operate them, how to think about, you know, scaling them up.

05:40 You don't have to, you know, spin up, VMs or, or Kubernetes clusters or anything.

05:45 You, you don't have to think about that part.

05:48 It's just your, your code that goes into it.

05:51 And so, yeah, serverless functions are probably what people are most familiar with.

05:55 And that's, I'm sure what we'll talk about most today, but, and, yeah, that's really the idea.

06:01 You don't have to, you don't have to manage the server.

06:04 Oh, sure.

06:05 And that's a huge barrier.

06:07 I remember when I first started getting into web apps and programming and then another level, when I got into Python, because I had not done that much Linux work, getting stuff up running.

06:19 It was really tricky.

06:20 And then having the concern of, is it secure?

06:24 How do I patch it?

06:25 How do I back it up?

06:26 How do I keep it going?

06:27 All of those things, they're non-trivial, right?

06:31 Right.

06:31 Yeah.

06:32 Yeah.

06:32 There's, there's a lot to think about.

06:33 And, and if you like work at an organization, it's probably different everywhere you go to that.

06:39 They, you know, how they manage their servers and things.

06:42 So, so putting in some stuff in the cloud kind of brings some, some commonality to it too.

06:46 Like you can, you can learn how, you know, the Azure cloud or Google cloud or AWS, how those things work and, and kind of have some common ground too.

06:55 So, yeah, for sure.

06:58 Like having, I mean, also feels more accessible to the developers in a larger group.

07:03 You know, in the sense that it's not a DevOps team that kind of takes care of the servers or a production engineers where you hand them your code.

07:11 It's, it's a little closer to just, I have a function and then I get it up there and it continues to be the function, you know?

07:17 Yeah.

07:17 You, and that is a different mindset too.

07:19 You kind of see it all the way through from writing your code to deploying it.

07:23 yeah.

07:25 With, without maybe an entire DevOps team that you just kind of say, here you go, go deploy this.

07:31 Yeah.

07:32 Yeah.

07:32 So in my world, I mostly have virtual machines.

07:37 I've moved over to kind of a Docker cluster.

07:40 I think I've got 17 different things running in the Docker cluster at the moment, but both of those are really different than serverless.

07:48 Right.

07:48 Yeah.

07:49 Yeah.

07:49 So it's been working well for me, but when I think about serverless, let me know if this is true.

07:55 It feels like you don't need to have as much of a, a Linux or server or, or sort of an ops experience to create these things.

08:04 Yeah.

08:05 I would say like you could probably get away with like almost none, right?

08:09 Like at the simplest form, like with, like AWS, for instance, their Lambda functions, you can, and that's the one I'm most familiar with.

08:19 So, forgive me for using them as an example for everything.

08:22 There's a lot of, different, serverless, options, but, you could go into the AWS console and you could actually, write your, you know, Python code right in the console, deploy that. They have function URLs now.

08:37 So you could actually have like, I mean, within a matter of minutes, you can have a serverless function set up.

08:43 And so, AWS Lambda, right.

08:46 That's the one.

08:47 Yep.

08:48 Lambda being, I guess, a simple function, right?

08:50 We have Lambdas in Python.

08:51 They can only be one line.

08:52 So I'm sure you can have more than one line in the AWS Lambda, but.

08:56 Yeah.

08:56 There are limitations though, with, with Lambda that you, that are, definitely some pain points that I ran into.

09:03 So.

09:03 Oh, really?

09:04 Okay.

09:04 What are some of the limitations?

09:05 Yeah.

09:06 So, package size is one.

09:09 So if you start thinking about all these like amazing, packages on PyPI, you do have to start thinking about how, how many you're going to bring in.

09:19 So, and I don't know the exact limits off the top of my head, but it's pretty quick Google search on their, their package size.

09:27 It might be like 50 megabytes zipped, but 250

09:31 when you decompress it, to do a zip-based one. Then they do have, containerized Lambda functions that go up to like a 10 gig limit.

09:41 So that helps, but, okay.

09:43 Yeah.

09:43 Yeah.

09:44 They, those ones, used to be less performant, but they're, they're kind of catching up to where they're, that was really on something called cold starts.

09:52 but they're, they're getting, I think pretty close to it.

09:56 Not, not being a very big difference, whether you Dockerize or zip these functions, but, but yeah.

10:03 So when you start just like pip installing everything, you've got to think about how to get that code into your function.

10:10 and, how much it's going to bring in.

10:13 So yeah, that definitely was, a limitation that, had to quickly learn.

10:19 Yeah.

10:20 I guess it's probably trying to do pip install -r effectively.

10:24 Yeah.

10:25 And it's like, you know, you, you can't go overboard with this.

10:27 Right.

10:28 Right.

10:28 Right.

10:29 Yeah.

10:29 Yeah.

10:29 When you start bringing in packages, like, you know, maybe like some of the like, scientific packages, you're, you're definitely going to be hitting some, some size limits.

10:38 Okay.

10:38 And with the containerized ones, basically you probably give it a Dockerfile and a command to run in it and it can, it can build those images before and then just execute and just do a Docker run.

10:49 Yeah.

10:49 I think how those ones work is you, you store an image, on like their container registry, Amazon's, is it ECR, I think.

10:58 And so then you kind of point it at that and yeah, it'll, execute your like handler function.

11:05 when that, when the Lambda gets called.

11:08 This portion of Talk Python To Me is brought to you by multi-platform error monitoring at Sentry.

11:14 Code breaks.

11:16 It's a fact of life.

11:17 With Sentry, you can fix it faster.

11:19 Does your team or company work on multiple platforms that collaborate?

11:23 Chances are extremely high that they do.

11:27 It might be a React or Vue front-end JavaScript app that talks to your FastAPI backend services.

11:32 Could be a Go microservice talking to your Python microservice or even native mobile apps talking to your Python backend APIs.

11:41 Now let me ask you a question.

11:42 Whatever combination of these that applies to you, how tightly do these teams work together?

11:47 Especially if there are errors originating at one layer, but becoming visible at the other.

11:52 It can be super hard to track these errors across platforms and devices.

11:56 But Sentry has you covered.

11:58 They support many JavaScript front end frameworks.

12:00 Obviously Python backend, such as FastAPI and Django.

12:04 And they even support native mobile apps.

12:06 For example, at Talk Python, we have Sentry integrated into our mobile apps for our courses.

12:11 Those apps are built and compiled in native code with Flutter.

12:15 With Sentry, it's literally a few lines of code to start tracking those errors.

12:18 Don't fly blind.

12:20 Fix code faster with Sentry.

12:22 Create your Sentry account at talkpython.fm/sentry.

12:25 And if you sign up with the code TALKPYTHON, one word, all caps, it's good for two free months of Sentry's business plan,

12:32 which will give you up to 20 times as many monthly events, as well as some other cool features.

12:36 My thanks to Sentry for supporting Talk Python To Me.

12:39 Yeah, so out in the audience, Kim says, AWS does make a few packages available directly just by default in Lambda.

12:48 That's kind of nice.

12:49 Yeah, yep.

12:50 So, yeah, Boto, which if you're dealing with AWS and Python, you're using the Boto package.

12:57 And yeah, that's included for you.

12:59 So that's definitely helpful and any of their, you know, transitive dependencies would be there.

13:04 I think Boto used to even include, like, requests, but then I think they eventually dropped that with some, like, SSL stuff.

13:12 But yeah, you definitely, you can't just, like, pip install anything and not think of it unless, depending on how you package these up.

13:21 So.

13:21 Sure.

13:22 Sure.

13:22 That makes sense.

13:23 Yeah.

13:23 Of course they would include their own Python libraries, right?

13:26 Yeah.

13:26 And it's not a, yeah, it's not exactly small.

13:29 I think, like, botocore used to be, like, 60 megabytes, but I think they've done some work to really get that down.

13:36 So.

13:37 Yeah.

13:38 Yeah, that's, yeah, that's not too bad.

13:40 I feel like botocore, Boto3, those are constantly changing.

13:43 Like, constantly.

13:45 Yeah.

13:45 Yeah, well, as fast as AWS adds services, they'll probably keep changing quickly.

13:52 Yeah.

13:53 I feel like those are auto-generated, maybe, just from looking at the way the API

13:57 looks at it, you know, the way they look written.

13:59 And so.

14:00 Yeah.

14:00 Yeah, that's probably the case.

14:03 Yeah.

14:03 I know they do that with, like, their infrastructure as code, CDK.

14:09 It's all, like, TypeScript originally, and then you have your Python bindings for it.

14:13 And so.

14:13 Right, right, right, right.

14:15 I mean, it makes sense, but at the same time, when you see a change, it doesn't necessarily

14:18 mean, oh, there's a, there's a new important aspect added.

14:22 It's probably just, yeah, I don't know.

14:23 People have actually pulled up the console for AWS, but just the amount of services that

14:29 are there.

14:30 And then each one of those has its own full API, like a little bit of the one of those

14:33 things.

14:34 So we regenerated it, but it might be for some part that you'd never, never call, right?

14:38 Like you might only work with S3 and it's only changed.

14:41 Yeah.

14:41 I don't know, EC2 stuff, right?

14:44 Right.

14:44 Yep, exactly.

14:45 Yeah, indeed.

14:47 All right.

14:47 Well, let's talk real quickly about some of the places where we could do serverless, right?

14:53 Yeah.

14:53 You mentioned AWS Lambda.

14:54 Yeah.

14:55 And I also maybe touch on just 1 million requests free per month.

15:00 That's pretty cool.

15:01 Yeah.

15:01 Yeah.

15:02 So yeah, getting like jumping into AWS sometimes sounds scary, but they have a pretty generous

15:08 free tier.

15:08 Definitely do your research on some of the security of this, but yeah, you can, you know, a million

15:13 requests free per month.

15:15 You probably have to look into that a little bit because it's, you know, you have your memory

15:21 configurations too.

15:22 So there's probably, I don't know exactly how that works within their free tier, but

15:26 you're charged like with Lambda, at least it's your like invocation time and memory and

15:33 also amount of requests.

15:34 So yeah.

15:36 I'm always confused when I look at that and go, okay, with all of those variables, is that

15:41 a lot or a little?

15:42 I know it's a lot, but it's hard for me to conceptualize.

15:45 Like, well, I use a little more memory than I thought.

15:47 So it costs like, wait a minute.

15:48 How do I know how much memory I use?

15:49 You know, like, yeah.

15:50 What does this mean in practice?

15:52 Yeah.

15:52 It's billed by how you configure it too.

15:55 So if you say I need a Lambda with 10 gigs of memory, you're, you're being billed at that

16:01 like 10 gigabyte price threshold.

16:05 So there is a really, a really cool tool called Power Tuner, or AWS Lambda Power Tuner.

16:13 So yeah, what that'll do is you can, it creates a state machine in AWS.

16:19 Yeah.

16:19 I think I did send you a link to that one.

16:21 So the Power Tuner will create a state machine that invokes your Lambda

16:28 with several different memory configurations.

16:31 And you can say, I want either the best cost optimized version or the best performance optimized

16:37 version.

16:37 So, and that'll tell you, like, it'll say, okay, yeah, you're best with a Lambda configured

16:43 at, you know, 256 megabytes, you know, for memory.

16:48 So, sorry.

16:50 Yeah.

16:51 For the link it's, this is Powertools.

16:54 This is a different, amazing package.

16:55 Yeah.

16:56 Maybe I didn't send you the, power tuner.

16:58 I should, okay.

16:59 Sorry.

16:59 That's news to me.

17:00 Okay.

17:01 Sorry.

17:02 Yeah.

17:02 And they have similar names.

17:04 Yeah.

17:04 There's only so many ways to describe stuff.

17:07 Right.

17:07 Yeah.

17:08 Okay.

17:08 They have it right in their head.

17:09 Yep.

17:09 And it is an open source package.

17:11 So there's probably a GitHub link in there, but yeah.

17:14 Yeah.

17:14 And, this will tell you like the best way to optimize your, your Lambda function, at

17:19 least as far as memory is concerned.

17:22 So, yeah, really good tool.

17:24 It gives you a visualization and gives you a graph that will say like, okay, here's,

17:28 here's kind of where cost and performance meet.

17:31 And so, yeah, it's, it's really excellent for figuring that out.

17:36 yeah, at least in, in AWS land, I don't know if some of the other cloud providers have

17:42 something similar to this, but, yeah, it's definitely a really helpful tool.

17:47 Sure.

17:48 Yeah.

17:49 Like I said, I've, I'm confused and I've been doing cloud stuff for a long time when I look

17:53 at it.

17:53 Yeah.

17:53 So, well, there's some interesting things here.

17:55 So like, you can actually have, a Lambda invocation that costs less with a higher

18:02 memory configuration because it'll run faster.

18:05 So you're, I think Lambda bills like by the millisecond now.

18:09 So, you can actually, because it runs faster, it can be cheaper to run.

18:14 So, well, that explains all the Rust that's been getting written.

18:17 Yeah.

18:17 Yeah.

18:19 There's a real number behind this.

18:21 I mean, we need to go faster, right?

18:23 Okay.

18:24 So, you know, the, the, I think maybe AWS Lambda is one of the very first ones as well to come

18:30 on with this concept of serverless.

18:32 Yeah.

18:32 It, it, I don't know for sure, but it probably is.

18:36 And then, yeah, your other big cloud providers have them.

18:38 And now you're actually even seeing them, come up with, a lot of like Vercel has

18:44 some kind of, some type of serverless function.

18:47 I don't know what they're using behind it, but it's almost like they just put a,

18:51 a nicer UI around AWS Lambda or whichever cloud provider that's, you know, that's potentially

18:57 backing this up.

18:58 But, yeah, they're just reselling their flavor of, of somebody else's cloud.

19:03 Yeah.

19:03 Yeah.

19:04 It could be because yeah, Vercel, obviously they have a really, nice suite of products

19:09 with a, with a good UI, very usable.

19:11 So, no.

19:12 Okay.

19:13 So Vercel, some of them people can try.

19:15 And then we've got the two other hyperscale clouds, I guess you call them.

19:20 Google cloud has serverless, right?

19:22 Yep.

19:23 Okay.

19:23 So I'm not sure which ones that they might just be called cloud functions.

19:27 And yeah, they've got Cloud Run and Cloud Functions.

19:33 I have no idea what the difference is though.

19:35 Yep.

19:36 Yep.

19:36 And, yeah, Azure also has a serverless product and I'd imagine there's probably like

19:41 even more that we're, we're not aware of, but, yeah, it's kind of, nice to not think

19:47 about, setting up servers for something.

19:51 So then I think maybe, is it FaaS?

19:53 Yeah.

19:54 Function as a service.

19:54 Let's see.

19:55 Yeah.

19:55 But if we search for F-A-A-S instead of PaaS or IaaS, right?

20:01 There's, we've got Almeda, Intel.

20:04 I saw that IBM had some, oh, there's also, the, we've got DigitalOcean.

20:09 I'm a big fan of DigitalOcean because I feel like their pricing is really fair and they've

20:14 got good documentation and stuff.

20:16 So they've got functionless, sorry, serverless functions, that you can, I don't use these.

20:23 Yeah.

20:23 Yeah.

20:24 I haven't used these either, but yeah.

20:26 and, yeah, as far as costs, like for, especially for like small, like personal

20:30 projects and things where you don't need to have a server on all the time, they're yeah,

20:36 pretty, pretty nice.

20:37 If you have a website that you need something server side where you got to have some Python,

20:41 but you don't need a server going all the time.

20:44 Yeah.

20:44 Okay.

20:44 Like maybe I have a static site, but then I want this one thing to happen.

20:49 If somebody clicks a button, something like that.

20:51 Yeah.

20:51 Yeah, absolutely.

20:52 Yep.

20:53 You could be completely static, but have something that is, yeah.

20:57 Yeah.

20:57 That one function call that you do need.

20:59 Yeah.

20:59 Exactly.

21:00 And then you also pointed out that Cloudflare has some form of serverless.

21:05 Yeah.

21:05 And I haven't used these either, but, yeah, I do know that they have, some type

21:11 of, you know, functions as a service.

21:14 I don't know what frameworks or languages.

21:17 They let you write them in there.

21:19 Yeah.

21:19 I use, bunny.net for my CDN.

21:23 It's just absolutely awesome platform.

21:25 I really, really love it.

21:26 And one of the things that they've started offering, if I can get this stupid, completely useless cookie

21:30 banner to go away, is what they call edge compute.

21:35 Oh, yeah.

21:36 Okay.

21:36 What you would do.

21:38 I don't know where to find it somewhere, maybe, but basically the CDN has 115, 120 points of

21:46 presence all over the world where, you know, this one's close to Brazil.

21:49 This one's close to Australia, whatever.

21:51 And, but you can actually run serverless functions on those things.

21:56 Like, so you deploy them.

21:57 So the code actually executes in 150, 115 locations.

22:01 Yes.

22:02 Yeah.

22:02 Probably Cloudflare or something like that as well, but I don't know.

22:04 Yeah.

22:05 AWS has, they have like Lambda@Edge, at the edge.

22:10 So that's kind of, goes hand in hand with their, like CDN, CloudFront,

22:16 I believe.

22:17 Yeah.

22:17 So they have something similar like that, where you have a, a Lambda that's going to

22:21 be, you know, performant because it's yeah.

22:23 Distributed across their CDN.

22:25 Yeah.

22:26 CDN that's a whole nother world.

22:27 They're, they're getting really advanced.

22:30 Yeah.

22:30 Yeah.

22:31 Yeah.

22:33 So, we won't, maybe that's a different show.

22:35 It's not a show today, but it's just the idea of like you distribute the compute

22:40 on the CDN is pretty nice.

22:42 The drawback is just JavaScript, which is okay, but it's, it's not the same as right.

22:47 Yes.

22:48 Yeah.

22:48 I wonder if you could do PyScript.

22:51 Oh yeah.

22:52 That's an interesting thought.

22:53 Yeah.

22:53 Yeah.

22:54 Yeah.

22:54 We're getting closer and closer to Python in the browser.

22:57 So yeah.

22:57 Yeah.

22:58 My JavaScript includes this little bit of WebAssembly and I don't, I don't like semicolons,

23:02 but go ahead and run it anyway.

23:03 Yeah.

23:05 Out in the audience.

23:06 It looks like, Cloudflare probably does support Python, which is.

23:10 Okay.

23:10 Yeah.

23:11 Yeah.

23:11 There's, there's so many options out there for, for serverless functions that are, yeah,

23:15 really, especially if you're already in, you know, if you're maybe deploying, you know,

23:19 some static stuff to Cloudflare or Vercel.

23:23 Yeah.

23:24 It's sometimes nice just to be all, all in on one, one service.

23:28 Yeah.

23:29 Yeah.

23:29 It really is.

23:30 Let's talk about using, choosing serverless over other things, right?

23:35 You actually laid out two really good examples or maybe three, even with the static site

23:39 example, but you know, I've got bursts of activity.

23:43 Yeah.

23:43 Right.

23:44 And really, really low, incredibly, incredibly low usage other times.

23:50 Right.

23:50 Yeah.

23:51 Yeah.

23:51 You think of, like, your Black Friday traffic, right?

23:54 Like, to not have to think about how many servers to provision for something

24:01 like that.

24:02 Or if you don't know, I think there's probably some like, well, I actually know there's

24:07 been like some pretty popular articles about people like leaving the cloud.

24:11 and, and yeah, like if you know your scale and you know, you know exactly what you

24:17 need, yeah, you, you probably can save money by just having your, your own infrastructure

24:24 set up or, but yeah, if you don't know, or it's very like spiky, you don't need

24:30 to have a server that's consuming a lot of power running, you know, 24 hours a day.

24:34 You can just invoke a function as you need it.
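To put rough numbers on that spiky-versus-always-on trade-off, here is a back-of-the-envelope sketch. It assumes Lambda is billed per GB-second of compute plus a per-request fee; the default rates are illustrative placeholders (roughly the published us-east-1 x86 rates at one point), the free tier is ignored, and the always-on hourly rate is entirely hypothetical, so plug in current pricing before drawing conclusions.

```python
def lambda_monthly_cost(invocations: int, avg_duration_ms: float, memory_mb: int,
                        price_per_gb_second: float = 0.0000166667,
                        price_per_million_requests: float = 0.20) -> float:
    """Rough monthly Lambda bill in USD, ignoring the free tier (placeholder rates)."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute = gb_seconds * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def always_on_monthly_cost(hourly_rate: float, hours_per_month: float = 730) -> float:
    """A server billed every hour, busy or idle (hourly_rate is hypothetical)."""
    return hourly_rate * hours_per_month


# A bursty workload: 300,000 requests a month, 200 ms each, 512 MB of memory.
bursty = lambda_monthly_cost(300_000, 200, 512)
server = always_on_monthly_cost(0.05)
print(f"Lambda: ${bursty:.2f}/month vs always-on: ${server:.2f}/month")
```

The exact figures matter less than the shape: the Lambda cost scales with actual usage, while the server cost accrues whether or not anything is running.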

24:38 So this portion of Talk Python To Me is brought to you by Mailtrap, an email delivery platform

24:46 that developers love. An email-sending solution with industry-best analytics, SMTP and email

24:53 APIs, SDKs for major programming languages, and 24/7 human support.

24:59 Try for free at mailtrap.io.

25:02 Yeah.

25:03 There's a super interesting series by David Heinemeier Hansson of Ruby on Rails fame and

25:10 from Basecamp about how Basecamp has left the cloud and how they're saving $7 million

25:15 and getting better performance over five years.

25:19 Yeah.

25:19 But that's, that's a big investment, right?

25:21 They bought $600,000 of hardware, right?

25:26 Yeah.

25:26 Yeah.

25:27 Only so many people can do that.

25:28 Right.

25:29 And you, you know, you gotta have that running somewhere that, you know, with, with,

25:33 with backup power and, yeah.

25:36 Yeah.

25:37 So what they ended up doing for this one is they went with a service called Deft,

25:43 okay,

25:43 hosting, which is like white glove, white.

25:46 So, white-labeled is the word I'm looking for, where it just looks like it's your hardware,

25:52 but they put it into a mega data center and there's, you know, they'll have the hardware

25:57 shipped to them and somebody will just come out and install it into racks and go, here's

26:00 your IP.

26:00 Right.

26:01 Like a, yeah.

26:02 A virtual machine, a VM in a cloud, but it takes three days, three

26:09 weeks to boot.

26:09 Right.

26:11 Yeah.

26:11 Yeah.

26:12 Yeah.

26:12 Which is kind of the opposite is almost, I mean, I'm kind of diving into it because it's,

26:16 it's almost the exact opposite of the serverless benefits, right?

26:20 This is insane stability.

26:22 I have this thing for five years, 4,000 CPUs we've installed and we're using them for the

26:29 next five years rather than how many milliseconds am I going to run this code for?

26:33 Right.

26:34 Exactly.

26:34 Yeah.

26:35 Yeah.

26:35 Yeah.

26:36 It's definitely the far opposite.

26:37 And so, yeah, you kind of, you know, maybe serverless isn't for every use case, but it's

26:42 definitely a nice like tool to have in the toolbox.

26:45 And yeah, you definitely even working in serverless, like if you're, yeah, eventually you're going

26:51 to need like maybe to interact with the database that's got to be on all the time.

26:54 You know, it's yeah, there's a lot of, it's a good tool, but it's definitely not the,

26:59 one size fits all solution.

27:02 So yeah, let's talk databases in a second, but for, you know, when does it make sense

27:07 to say we're going to put this, like, suppose I have an API, right?

27:10 An API is a really similar equivalent to what a serverless thing is.

27:15 Like, I'm going to call this API, the thing's going to happen.

27:17 I'm going to call this function.

27:18 The thing's going to happen.

27:19 Let's suppose I have an API and it has eight endpoints.

27:22 It's written in FastAPI or whatever it is.

27:24 It might make sense to have that as serverless, right?

27:27 You don't want to run a server and all that kind of thing.

27:29 But what if I have an API with 200 endpoints?

27:32 Like, where is the point where like, there are so many little serverless things.

27:35 I don't even know where to look.

27:36 They're everywhere.

27:37 Which version is this one?

27:38 You know what I mean?

27:38 Like where, where's that trade-off and how do, you know, you and the people you work with

27:43 think about that?

27:43 Yeah, I guess that's a, a good, good question.

27:48 I mean, as you start like, you know, getting into these like microservices, how small

27:54 do you want to break these up?

27:55 And so there, there, there is some different thoughts on that.

27:58 Even like a, a Lambda function, for instance, if you, if you put this behind an API,

28:03 you can use a single Lambda function for your entire REST API, even if it is,

28:12 you know, 200 endpoints.

28:13 So, okay.

28:15 So you put the whole app there and then when a request comes in, it routes to whatever

28:19 part of your app.

28:20 Theoretically.

28:21 Yeah.

28:22 Yeah.

28:22 So there's a package called Powertools for, AWS Powertools.

28:28 I don't know.

28:29 Powertools for Python.

28:30 Yeah.

28:30 I don't care.

28:30 Yeah.

28:31 Yeah.

28:31 I know the similar name.

28:32 Yeah.

28:33 So they have a, a really good like event resolver.

28:36 So you can actually, it almost looks like, you know, Flask or some of the

28:42 other Python web frameworks.

28:44 And so you kind of have this resolver, whether it's, you know, API Gateway, and

28:49 AWS has different ones,

28:50 they have a few different options for, the API itself, but yeah, you, in, in theory,

28:57 you could have your entire API behind a single Lambda function, but then that's probably not

29:03 optimal.

29:04 Right.

29:04 So you're, that's where you have to, figure out how to break that up.

29:09 And so, yeah, they do that same thing, the decorators, you know, app.post or

29:16 yeah.

29:16 Yeah.

29:17 Yeah.

29:17 And your endpoints, and you can have variables in there,

29:23 where maybe you have, like, an ID as your lookup, and, you know, /user/{id}

29:29 is going to find, you know, a single user.
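The single-function approach can be sketched with a toy router: one handler receives every API Gateway-style proxy event and dispatches on method and path, with `<user_id>`-style path variables passed to the decorated function. This is a hypothetical, dependency-free illustration of the decorator pattern described here, not Powertools' actual `APIGatewayRestResolver`; the `Router` class, the `USERS` data, and the route are all made up.

```python
import json
import re


class Router:
    """Hypothetical minimal resolver: maps (HTTP method, path pattern) to a function."""

    def __init__(self):
        self._routes = []

    def route(self, method, pattern):
        # "/users/<user_id>" becomes the regex ^/users/(?P<user_id>[^/]+)$
        regex = re.compile("^" + re.sub(r"<(\w+)>", r"(?P<\1>[^/]+)", pattern) + "$")

        def decorator(func):
            self._routes.append((method.upper(), regex, func))
            return func

        return decorator

    def get(self, pattern):
        return self.route("GET", pattern)

    def post(self, pattern):
        return self.route("POST", pattern)

    def resolve(self, event):
        method = event.get("httpMethod", "GET")
        path = event.get("path", "/")
        for route_method, regex, func in self._routes:
            match = regex.match(path)
            if match and route_method == method:
                result = func(**match.groupdict())  # path variables become kwargs
                return {"statusCode": 200, "body": json.dumps(result)}
        return {"statusCode": 404, "body": json.dumps({"message": "Not found"})}


app = Router()
USERS = {"42": {"id": "42", "name": "Ada"}}  # stand-in for a real data store


@app.get("/users/<user_id>")
def get_user(user_id):
    return USERS.get(user_id, {})


def lambda_handler(event, context):
    # One Lambda function serves every route in the API.
    return app.resolve(event)
```

The trade-off discussed next still applies: one function means one deployment and one permission set for every route.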

29:33 And in their documentation, they actually address this a little bit.

29:38 Like, do you want to do, they, they call it either like a, a micro function pattern where

29:44 maybe every single endpoint has its own Lambda function.

29:48 but yeah, that's a lot of overhead to maintain.

29:51 If you had, like you said, 200 endpoints, you have 200 Lambdas.

29:55 you gotta upgrade them all at the same time.

29:58 So they have the right.

29:58 Yeah.

29:59 Yeah.

29:59 Yeah.

29:59 Yeah.

30:00 That's right.

30:01 So, yeah.

30:02 So, there, there's definitely some, even, even conflicting views on this.

30:07 How, how micro do you want to go?

30:09 And so, I was able to go to AWS re:Invent in November, and they actually kind

30:17 of pitched this hybrid, maybe like if you take your CRUD operations, right.

30:22 And maybe you have your create, update, and delete all on one Lambda, with

30:29 its configuration for those, but your read is on another Lambda.

30:33 So maybe your create, update, and delete operations all interact with a relational database, but your reader just

30:39 reads from a DynamoDB table, where you kind of sync that data up.

30:45 And so you could have your permissions kind of separated for each of those, Lambda functions.

30:50 And, you know, people reading from an API don't always need the same permissions as,

30:56 you know, updating, deleting.
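The hybrid split, writes in one function and reads in another, might look roughly like this. It's a sketch under heavy assumptions: two plain dicts stand in for the relational database and the DynamoDB read model, the sync happens inline rather than through a stream or queue, and the separate permissions are only noted in comments, since in practice they'd live in each function's IAM role.

```python
import json

# Hypothetical stand-ins: the relational store (owned by the write function)
# and a denormalized read model kept in sync with it.
RELATIONAL_DB: dict[str, dict] = {}
READ_MODEL: dict[str, str] = {}


def sync_read_model(item_id: str) -> None:
    """Copy the current row into the read model, or remove it if deleted."""
    row = RELATIONAL_DB.get(item_id)
    if row is None:
        READ_MODEL.pop(item_id, None)
    else:
        READ_MODEL[item_id] = json.dumps(row)


def write_handler(event, context):
    """Create/update/delete: this function's role would allow writes only."""
    method = event["httpMethod"]
    item_id = event["pathParameters"]["id"]
    if method in ("POST", "PUT"):
        RELATIONAL_DB[item_id] = json.loads(event["body"])
    elif method == "DELETE":
        RELATIONAL_DB.pop(item_id, None)
    else:
        return {"statusCode": 405, "body": ""}
    sync_read_model(item_id)  # in practice often a stream or queue, not inline
    return {"statusCode": 200, "body": json.dumps({"id": item_id})}


def read_handler(event, context):
    """Read: this function's role would be read-only on the read model."""
    item_id = event["pathParameters"]["id"]
    cached = READ_MODEL.get(item_id)
    if cached is None:
        return {"statusCode": 404, "body": ""}
    return {"statusCode": 200, "body": cached}
```

Because each handler is its own function, each can get its own memory, timeout, and permissions.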

30:58 And so, so yeah, there's a lot of different ways to, to break that up and how, how micro

31:02 do you go with this?

31:03 definitely.

31:04 How micro can you go?

31:05 Yeah.

31:06 Yeah.

31:07 Cause it sounds to me like if you had many, many of them, then all of a sudden you're back

31:11 to like, wait, I did this because I didn't want to be in DevOps and now I'm a different

31:16 kind of DevOps.

31:17 Yeah.

31:18 Yeah.

31:18 So, yeah, that.

31:20 That Python package, Powertools, does a lot of heavy lifting for you.

31:27 At PyCon, there was a talk on serverless, and the way they described the Powertools

31:34 package was that it codified your serverless best practices.

31:39 And, and it's really true.

31:41 They give a lot, there's like so many different tools in there and there's a, a logger,

31:45 like a structured logger, that works really well with Lambda and you, you don't even have

31:50 to use the AWS logging services.

31:54 If you want to use, you know, Datadog or Splunk or something else, it's just

31:59 a structured logger and how you aggregate them.

32:01 It's like up to you and you can even customize how you format them, but it's, works really

32:07 well with Lambda.

32:07 yeah.

32:09 So that's.

32:09 You probably could actually capture exceptions and stuff with something like Sentry even.

32:13 Right.

32:14 Oh yeah.

32:14 Python code.

32:15 There's no reason you couldn't.

32:16 Right.

32:16 Exactly.

32:17 Yep.

32:18 yeah, some of that comes into, you know, packaging up those, those libraries for

32:23 that.

32:23 you know, you, you do have to think of some of that stuff, but like, yeah, yeah.

32:29 Datadog, for instance, provides something called a Lambda layer or a Lambda extension,

32:34 which is another way to package code up.

32:37 That just makes it a little bit easier.

32:38 So, yeah, there's a lot of, a lot of different ways to, to attack some of these problems.

32:43 A lot of that stuff, even though they have nice libraries for them, is really just calling

32:47 an HTTP endpoint, and you could go, okay, we need something really light.

32:51 I don't know if requests is already included, but there's gotta be some kind of HTTP

32:54 thing already included.
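Calling a vendor's HTTP endpoint directly, instead of packaging its SDK, can be done with nothing but the standard library's `urllib.request`, which is always present in a Python runtime. A minimal sketch; the ingest URL and the bearer-token header are hypothetical, so check the actual API you're calling.

```python
import json
import urllib.request


def build_json_post(url: str, payload: dict, api_key: str) -> urllib.request.Request:
    """Build a JSON POST request without any third-party packages."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # auth scheme is an assumption
        },
    )


def send(request: urllib.request.Request, timeout: float = 5.0) -> tuple[int, bytes]:
    """Send the request; keep the timeout well under the Lambda's own timeout."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read()


req = build_json_post("https://example.com/v1/events", {"bus_id": "B12"}, "secret")
# send(req) would perform the actual network call.
```

Nothing here needs to be added to the deployment package, which keeps the zip small and the cold start short.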

32:55 We're just going to directly call it.

32:57 Not sure.

32:58 Yeah.

32:58 All these packages.

32:59 Yeah.

32:59 Yep.

33:00 Yeah.

33:00 Yeah.

33:01 This code looks nice.

33:02 This Powertools code, it looks like well-written Python code.

33:07 They do some really amazing stuff, and they bring in Pydantic too.

33:13 So, yeah, being mostly in serverless, I've never really gotten to use FastAPI,

33:20 right.

33:20 And leverage Pydantic as much, but with Powertools, you really can.

33:25 So, so they'll package up, Pydantic for you.

33:28 And so you can actually, yeah, you can have Pydantic models for, you know, validation

33:35 function on these.

33:36 It's like a, a Lambda function, for instance, it always receives an event.

33:41 There's always like two arguments to the fun, to the handler function.

33:43 It's event and context.

33:45 And like event is always a, it's a, a dictionary in Python.

33:50 And so they, they can always look different.
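Because every handler gets the same two arguments but the event's shape depends on what triggered it, a handler often starts by checking which kind of event it has. A sketch, assuming only two well-known shapes: record-based events (SQS, S3, and SNS put a list under `Records`) and API Gateway REST proxy events (which carry `httpMethod` and `path`). The summary dicts returned here are made up for illustration.

```python
import json


def describe_event(event: dict) -> dict:
    """Classify an incoming event by its top-level keys."""
    if "Records" in event:
        # SQS, S3, SNS and similar sources deliver a batch of records.
        return {"kind": "records", "count": len(event["Records"])}
    if "httpMethod" in event:
        # API Gateway REST (proxy integration) request.
        return {"kind": "http", "method": event["httpMethod"], "path": event.get("path")}
    return {"kind": "unknown", "keys": sorted(event)}


def lambda_handler(event, context):
    # event: a dict parsed from the JSON payload; context: runtime metadata
    # such as context.aws_request_id and context.get_remaining_time_in_millis().
    summary = describe_event(event)
    print(json.dumps(summary))
    return summary
```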

33:53 And so, yeah.

33:55 Yeah.

33:56 So, cause the event.

33:57 Yeah.

33:58 So if you look in the Powertools GitHub, their tests, they have, like,

34:04 okay, here's what a, an event.

34:07 API Gateway proxy event dot JSON or whatever.

34:11 Right.

34:11 Yes.

34:12 Yeah.

34:12 So they have, yeah.

34:14 Examples.

34:14 Yes.

34:15 Yeah.

34:16 So like, you don't want to parse that out by, by yourself.

34:19 you know, so they, they have, Pydantic models or they, they might actually just be

34:25 Python data classes, but, that you can, you can say like, okay, yeah, this function

34:31 is going to be for, yeah.

34:32 An API Gateway proxy event, or it's going to be an S3 event or whatever it is.

34:38 You know, there's, there's so many different ways to receive events, from different AWS

34:42 services.

34:43 So, yeah, Powertools kind of gives you some nice validation.

34:47 And yeah, you might just say like, okay, yeah, the body of this event, even though I,

34:51 I don't care about all this other stuff that they include, the path, headers,

34:58 query string parameters, but I just need the body of this.

35:01 So you just say, okay, event.body, and you can validate that further.

35:06 The event body is going to be a Pydantic model that you created.
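Validating just the body might look like the following. It uses a standard-library dataclass rather than Pydantic so there's nothing extra to package; with Powertools you'd typically declare a Pydantic model instead. The `CreateStop` model and its fields are hypothetical. Note that in an API Gateway proxy event the body arrives as a JSON string (possibly base64-encoded), not as a dict.

```python
import base64
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class CreateStop:
    """Hypothetical request body for adding a bus stop."""
    route_id: str
    stop_name: str
    lat: float
    lon: float

    @classmethod
    def from_dict(cls, data: dict) -> "CreateStop":
        missing = {"route_id", "stop_name", "lat", "lon"} - set(data)
        if missing:
            raise ValueError(f"missing fields: {sorted(missing)}")
        # float() raises ValueError or TypeError for non-numeric coordinates.
        return cls(str(data["route_id"]), str(data["stop_name"]),
                   float(data["lat"]), float(data["lon"]))


def parse_body(event: dict) -> CreateStop:
    body = event.get("body") or "{}"
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    data = json.loads(body)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("body must be a JSON object")
    return CreateStop.from_dict(data)


def lambda_handler(event, context):
    try:
        stop = parse_body(event)
    except (ValueError, TypeError) as exc:
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    return {"statusCode": 201, "body": json.dumps({"created": stop.stop_name})}
```

Everything else in the event (path, headers, query string parameters) is simply ignored.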

35:10 So yeah, there's a lot of different pieces in here.

35:12 If I was working on this and it didn't already have Pydantic models, I would take this and go

35:17 to JSON to Pydantic.

35:19 Oh, I didn't even know this existed.

35:21 That's where it's.

35:21 Okay.

35:22 Boom.

35:22 Put that right in there.

35:23 And boom, there you go.

35:25 It parses it into a nested object tree of the model.

35:30 Very nice.

35:30 But if they already give it to you, they already give it to you.

35:32 Yeah.

35:32 Yeah.

35:33 Just take what they give you.

35:34 Yeah.

35:35 Those specific events might be data classes instead of Pydantic.

35:38 just because you don't, that way you don't have to package Pydantic up in your Lambda.

35:43 But, yeah, if you're already figuring out a way to package Powertools,

35:48 you're close enough that you probably just include Pydantic too.

35:51 But yeah, yeah, it's, and they also, I think they just added this feature where

35:58 it'll actually generate an OpenAPI schema for you.

36:02 Kind of like, I think, FastAPI does that as well.

36:04 Right.

36:05 So, yeah, that's something you can leverage Powertools to do now as well.

36:10 Oh, excellent.

36:11 And then you can actually take the OpenAPI schema and generate a Python client built on

36:15 top of that.

36:16 I think with, yeah, yeah.

36:17 So you just say it's robots all the way down.

36:20 Right.

36:20 Turtles all the way down.

36:22 Yeah.

36:22 Yeah.

36:23 Yeah.

36:23 yeah.

36:25 I haven't used those OpenAPI-generated clients very much.

36:30 I was always like skeptical of them, but, yeah.

36:32 In theory.

36:34 I just feel they're heartless or, you know, soulless, I guess, is the word.

36:37 And it's just like, okay, here's another *args, **kwargs thing where it's like,

36:42 couldn't you just write it, make some reasonable defaults and give me some keyword

36:46 arguments, you know? But if it's better than nothing, you know, it's better than

36:50 right.

36:50 Yeah.

36:50 Yeah.

36:51 So, but yeah, you can see Powertools

36:55 took a lot of influence from FastAPI, and it does seem like it.

36:59 Yeah, for sure.

37:00 Yeah.

37:00 Yeah.

37:00 Oh, it's, it's definitely really powerful.

37:03 And you get some of those same benefits.

37:05 Yeah.

37:05 This is new to me.

37:06 It looks, it looks quite nice.

37:07 So, another comment, by Kim: tended to use serverless functions for either things that

37:12 run briefly, like once a month on a schedule, or the code that processes stuff coming in on

37:17 an AWS SQS (Simple Queue Service) queue on an unknown schedule.

37:23 So maybe that's an interesting segue into how do you call your serverless code?

37:29 Yeah.

37:29 Yeah.

37:30 So, as we kind of touched on, there's a lot of different ways from like, you know,

37:34 AWS for instance, to do it.

37:36 So, yeah, like AWS Lambda has like Lambda function URLs, but I haven't used those as much,

37:43 but if you just look at the different options in, like, Powertools, for instance,

37:47 you can have a load balancer where you set the endpoint to invoke

37:53 a Lambda, or you can have API Gateway, which is another service they have.

37:59 so there's a lot of different ways.

38:02 Yeah.

38:02 So, that's kind of almost getting into like a way of like streaming or an asynchronous

38:10 way of processing data.

38:11 So, yeah, maybe, in AWS, you're using a queue, right?

38:17 That's, filling up and you say like, okay, yeah, every time this queue is at this size or,

38:22 or this timeframe, invoke this Lambda and process all these messages.

38:27 So there's a lot of different ways to, to, to invoke a Lambda function.
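For the queue-triggered case, Lambda receives a batch of SQS messages under `Records`, each with a `messageId` and a string `body`; the batch size and batching window on the event source mapping control the "this size or this timeframe" behavior. Below is a sketch of a batch handler that reports only the failed messages so they can be retried. It assumes `ReportBatchItemFailures` is enabled on the mapping (without that setting, Lambda ignores the returned list), and the `process` function and `bus_id` field are hypothetical.

```python
import json


def process(message: dict) -> None:
    """Hypothetical business logic; raises on bad input."""
    if "bus_id" not in message:
        raise ValueError("missing bus_id")


def lambda_handler(event, context):
    failures = []
    for record in event.get("Records", []):
        try:
            process(json.loads(record["body"]))
        except Exception:  # one bad message shouldn't fail the whole batch
            failures.append({"itemIdentifier": record["messageId"]})
    # Partial batch response: only these messages go back to the queue.
    return {"batchItemFailures": failures}
```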

38:33 So, I mean, it's really as simple as, you can invoke them from the AWS CLI,

38:40 but yeah, most people probably have some kind of API around it.

38:44 Yeah.

38:44 Yeah.

38:45 Almost make them look like just HTTP endpoints.

38:47 Right.

38:48 Yeah.

38:48 Yeah.

38:49 Mark out there says: haven't heard talk of ECS,

38:53 I don't think, but I've been running web services using Fargate serverless tasks on ECS for years

38:59 now.

38:59 Are you familiar with this?

39:01 I haven't done it.

39:01 I, yeah, I, I'm like vaguely familiar with it, but yeah, this is like a, a serverless,

39:08 yeah.

39:08 Serverless compute for containers.

39:10 So, I haven't used this personally, but yeah, very like similar concept where it

39:18 kind of scales up for you and, yeah, you don't have to have things running all

39:22 the time, but yeah, it can be dockerized applications.

39:25 Yeah, in fact, the company I work for now does this with their Ruby on Rails applications.

39:29 They, they dockerize them and run, with, with Fargate.

39:34 So, Docker, creating Docker containers of these things: the less familiar you are with

39:41 running that tech stack, the better it is in Docker.

39:44 You know what I mean?

39:45 Yeah.

39:45 Yeah.

39:46 Like, I could run straight Python, but if it's Ruby on Rails or PHP, maybe it's going

39:50 into a container that would make me feel a little bit better about it.

39:53 Yeah.

39:53 Especially if you're in that workflow of like handing something over to a DevOps team, right?

39:58 Like you, you can say like, here, here's an image or a container or Docker file that,

40:02 you know, that will work for you.

40:04 You know, that's maybe a little bit easier than, trying to explain how to set up an environment

40:10 or something.

40:10 So, yeah.

40:11 Yeah.

40:12 Yeah.

40:12 Fargate's a really good serverless option too.

40:15 Excellent.

40:16 What about performance?

40:17 You know, you talked about having, like, whole API apps, like FastAPI or Flask or whatever.

40:23 Yeah.

40:23 Starting up those apps can be non-trivial, basically.

40:27 And so then on the other side, we've got databases and stuff.

40:31 And one of the bits of magic of databases is the connection pooling that happens, right?

40:36 So the first connection might take 500 milliseconds, but the next one takes one.

40:40 It's already open effectively, right?

40:42 Yeah.

40:43 Yeah.

40:43 That's definitely something you really have to take into consideration is like how, how

40:48 much you can do.

40:49 That's where some, some of that like observability, some of like the tracing that you can do

40:53 and profiling is, is really powerful.

40:56 Yeah.

40:57 Yeah.

40:57 AWS Lambda, for instance, they have, they have something called cold starts.

41:02 So like, yeah.

41:05 So the first time like a Lambda gets invoked or maybe you have, you know, 10 Lambdas that

41:12 get called at the same time, that's going to, you know, invoke 10 separate Lambda functions.

41:17 So that's like great for the scale, right?

41:19 That's really nice, but on a cold start, it's usually a little bit slower invocation because

41:26 it has to initialize.

41:27 Like I think what's happening, you know, behind the scenes is they're like, they're moving your

41:33 code over.

41:34 That's going to get executed.

41:35 And anything that happens like outside of your handler function.

41:40 So importing libraries sometimes you're establishing a database connection.

41:47 Maybe you're, you know, loading some environment variables or, or some secrets.

41:52 And so, yeah, there's definitely, performance is something to consider.

41:57 Yeah, that's probably, you mentioned Rust.

42:01 yeah, there's probably some more performant, like run times for some of these serverless functions.

42:06 So, I've even heard some people say, okay, for, for like client facing things, we're, we're

42:14 not going to use serverless.

42:15 Like we just want that performance.

42:17 So, so that cold start definitely can, that can have an impact on you.

42:21 Yeah.

42:23 Yeah.

42:23 On both ends that I've pointed out, like the app start, but also the service, the database

42:28 stuff with like the connection.

42:29 Right.

42:29 Yeah.

42:30 So yeah.

42:31 Relational databases too.

42:32 That's an interesting thing.

42:34 with, yeah.

42:34 What do you guys do?

42:35 You mentioned Dynamo already.

42:36 Yeah.

42:37 So Dynamo is really performant for a lot of connections.

42:41 Right.

42:42 But a, so Dynamo is a, you know, serverless database that can, that can scale.

42:47 You can query it over and over and that's not going to, it doesn't reuse a connection

42:52 in the same way that like a SQL database would.

42:56 so that's, that's an excellent option, but if you do have to connect to a relational

43:02 database and you have a lot of invocations, you can, you can use a, like a proxy,

43:09 if, if you're all in on AWS.

43:11 And so again, sorry, this is really AWS-heavy, but if you're using their

43:16 Relational Database Service, RDS, you can use RDS Proxy, which will use a pool of connections

43:22 for your Lambda function.

43:24 Oh, that can, yeah.

43:25 That can give you a lot of, performance or, at least you won't be, you know, running

43:33 out of connections to your database.

43:34 So, another thing too, is just how you structure that connection.

43:39 So, I mentioned cold Lambdas, you obviously have warm Lambdas too.

43:43 So, a Lambda has its, its handler function.

43:47 And so anything outside of the handler function can get reused on a, on a, on a warm Lambda.

43:52 So you can establish the connection to a database and it'll get reused on every invocation that

43:58 it, that it can.

43:58 That's cool.

43:59 Do you have to do anything explicit to make it do that?

44:02 Or is that just, it just has to be outside of that handler function.

44:06 So, you know, kind of at your, your top level of your, your file.
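A sketch of that pattern: the connection lives at module scope, so it's created once per execution environment (on the cold start) and reused by every warm invocation that lands on the same environment. The standard library's in-memory `sqlite3` stands in for a real database client here, and creating the connection lazily on first use, rather than eagerly at import, is one design choice among several.

```python
import sqlite3

# Module scope: survives between warm invocations of the same environment.
_connection = None
connections_opened = 0


def get_connection() -> sqlite3.Connection:
    """Create the connection on first use, then keep returning the same one."""
    global _connection, connections_opened
    if _connection is None:
        _connection = sqlite3.connect(":memory:")  # stand-in for a real DB client
        connections_opened += 1
    return _connection


def lambda_handler(event, context):
    conn = get_connection()
    (value,) = conn.execute("SELECT 1").fetchone()
    return {"result": value, "connections_opened": connections_opened}
```

Calling the handler repeatedly in one process mimics warm invocations: the counter stays at one.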

44:10 So.

44:10 Yeah.

44:11 Excellent.

44:12 Yeah.

44:12 It makes me think almost one thing you would consider is like profiling the import statement

44:18 almost.

44:18 Right.

44:19 And yeah, that's what we normally do, but there's a library called import profiler that actually

44:24 lets you time how long different, different things take to import.

44:27 It could take a while, especially if you come from, not from a native Python way of thinking

44:33 in, like, C# or C++ or something, you say #include or using such and such,

44:40 like that's a compiler type thing that really has no cost.

44:44 Yeah.

44:44 There's code execution.

44:46 And when you import something in Python and some of these can take a while, right?
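CPython can report this itself: `python -X importtime -c "import pydantic"` prints a per-module breakdown to stderr. For a quick in-process check, here is a minimal sketch that times a module's first import; the helper name is made up.

```python
import importlib
import sys
import time


def time_first_import(module_name: str) -> float:
    """Seconds spent importing module_name, or 0.0 if it's already cached."""
    if module_name in sys.modules:
        return 0.0  # later imports just return the cached module object
    start = time.perf_counter()
    importlib.import_module(module_name)
    return time.perf_counter() - start


for name in ("json", "decimal", "email.mime.text"):
    print(f"{name}: {time_first_import(name) * 1000:.2f} ms")
```

Only the first import in a fresh process costs anything, which is exactly what a cold start pays; warm invocations hit the `sys.modules` cache.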

44:49 Yes.

44:50 Yeah.

44:51 So, there's a lot of tools for that.

44:53 There's some, I think even maybe specific for Lambda.

44:55 I know Datadog has a profiler that gives you, I forget what the graphic

45:01 is called, like a flame, a flame graph.

45:03 Yeah.

45:04 That'll give you like a flame graph and show like, okay, yeah, it took this long to,

45:09 make your database connection this long to import Pydantic.

45:12 And it took this long to, make a, a call to DynamoDB, you know, so you actually

45:19 kind of like break that up.

45:21 AWS has X-Ray, I think, which does something similar too, so, yeah, it's definitely something

45:27 to consider.

45:27 another, just what you're packaging, is definitely, something to, to watch

45:34 for.

45:35 And so, I mentioned using Pants to package Lambdas, and they

45:42 do, hopefully I don't butcher how this works behind the scenes, but, they're using,

45:48 they're using Rust and they'll actually kind of like infer your dependencies for you.

45:52 And so they have a, an integration with AWS Lambda.

45:58 They also have it for Google Cloud Functions.

46:00 So yeah, it'll go through.

46:02 You say, here's like my, AWS Lambda function.

46:05 This is the file for it and the function that needs to be called.

46:09 And it's going to create a zip file for you, that has your, your Lambda code in it.

46:15 And it's going to find all those dependencies you need.

46:17 So it'll actually, you know, by default, it's going to include, like, you know, Boto that you

46:23 need.

46:23 If you're using Boto, or if you're going to use, you know, PyMySQL or whatever

46:30 library, it's going to pull all those in and zip that up for you.

46:34 And so if you just like open up that zip and you see, and especially if you're,

46:40 sharing code across your code base, maybe you have a shared function to, to make some of these

46:45 database connections or calls.

46:46 Like, you, you see everything that's going to go in there.

46:50 And so, yeah.

46:52 And so how Pants does it is it's file-based.

46:55 So, sometimes just for like ease of imports, you might throw a lot of stuff in like your,

47:00 your __init__.py file and say, like, okay, yeah.

47:03 From, you know, you add all kind of bubble up all your things that you want to import in

47:08 there.

47:09 Well, if one of those imports is also using OpenCV and you don't need

47:17 that, then Pants is going to say, like, oh, he's importing this.

47:21 And because it's file-based, now this Lambda needs OpenCV, which is a, you know, massive

47:27 package.

47:28 That's going to, it's going to impact your, your performance, especially in those cold

47:33 starts because that, that code has to be moved over.

47:36 So yeah, that's, that's pretty interesting.

47:38 So kind of an alternative to saying, here's my requirements or my pyproject.toml, my lock

47:45 file or whatever, that just lists everything the entire program might use.

47:48 This could say, you're going to import this function.

47:51 And to do that, it imports these things, which import those things.

47:54 And then, and then it just says, okay, that means here's what you need.
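The core idea behind that kind of file-based inference can be illustrated with the standard library's `ast` module: read each file's import statements without running it, and follow first-party imports. This is a toy, not how Pants works internally (its real inference does considerably more); the module names and the in-memory code base are hypothetical. It also shows how one re-export in a package's `__init__.py` pulls OpenCV (`cv2`) into a function that never uses it.

```python
import ast


def imported_names(source: str) -> set[str]:
    """Dotted module names a file imports, found statically (nothing is executed)."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            names.add(node.module)  # relative imports (level > 0) are skipped in this toy
    return names


def external_dependencies(entry: str, files: dict[str, str]) -> set[str]:
    """Follow first-party imports through `files`; anything else counts as external."""
    seen, external, stack = set(), set(), [entry]
    while stack:
        module = stack.pop()
        if module in seen:
            continue
        seen.add(module)
        for name in imported_names(files[module]):
            parts = name.split(".")
            # Importing "shared.db" first runs the package itself ("shared/__init__.py").
            prefixes = [".".join(parts[:i]) for i in range(1, len(parts) + 1)]
            first_party = [p for p in prefixes if p in files]
            if first_party:
                stack.extend(first_party)
            else:
                external.add(parts[0])
    return external


# Hypothetical code base: module name -> source.
files = {
    "handlers.get_user": "from shared.db import connect\n",
    "shared": "from shared.db import connect\nfrom shared.images import resize\n",
    "shared.db": "import pymysql\n",
    "shared.images": "import cv2\n",
}
print(external_dependencies("handlers.get_user", files))
```

The handler only needs the database helper, yet the re-export in `shared`'s `__init__.py` makes `cv2` a dependency; emptying that `__init__.py` drops it.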

47:57 Right.

47:57 Right.

47:57 Yeah.

47:58 Yeah.

47:59 It's definitely one of like the best ways that I found to, to package up,

48:03 Lambda functions.

48:04 I think some of the other tooling might do some of this too, but yeah, a lot of times

48:08 it would require, like, a requirements.txt, but if you have, like, a large code

48:13 base to where maybe you do have this, this shared, you know, module for, that, you

48:19 know, maybe you have, you know, 30 different Lambda functions that are all going to use

48:23 some kind of helper function.

48:24 It's just going to go in, like grab that.

48:27 And it doesn't have to be pip-installable; Pants is smart enough to just be like, okay,

48:30 it needs this code.

48:31 And so, but yeah.

48:33 You definitely have to be careful.

48:34 Yeah.

48:35 Yeah.

48:35 And then there's so many other cool things that Pants is doing that, you know,

48:39 they have some, really nice stuff for testing and, linting and formatting.

48:43 And it's, yeah, there's a lot of really good stuff that they're doing.

48:48 Yeah.

48:49 I had Benjy on the show to talk about Pants.

48:51 That was, that was fun.

48:52 Yeah.

48:53 So let me go back to this picture.

48:55 Is this the picture?

48:56 I have a lot of things open on my screen now there.

49:00 So on my server setup that I described, which is a bunch of Docker containers running on one

49:05 big machine.

49:06 I can go in there and I can say, tail this log and see all the traffic to all the different

49:11 containers.

49:11 I can tail another log and just see, like, the logging, Logbook, Loguru, whatever output

49:17 of that, or just the web traffic.

49:19 Like there's different ways to just go, I'm just going to sit back and look at it for

49:22 a minute to make sure it's chilling.

49:24 Right.

49:24 If everything's so transient, not so easy in the same way.

49:28 So what do you do?

49:29 Yeah.

49:30 So, yeah, Powertools has their structured logger that helps a lot, but

49:37 yeah, you have to kind of like aggregate these logs somewhere.

49:39 Right.

49:39 Because yeah, you can't, you know, a Lambda function, you can't like SSH into.

49:44 Right.

49:45 So, yeah, you can't have to make too long.

49:47 Yeah.

49:48 Yeah.

49:48 so yeah, you, you need to have some way to aggregate these.

49:54 So, like, AWS has CloudWatch, which will, by default, kind of log all of your

50:00 standard out.

50:00 So even a print statement would go to CloudWatch just by default.

50:06 but you probably want to like structure these better with, most likely in, in, you

50:11 know, JSON format, just most tooling around those is going to help you.

50:16 So, yeah, the Powertools structured logger is really good.

50:20 And you can, you can, even like you can have like a single log statement, but you can

50:26 append different keys to it.

50:28 And, it's, it's pretty powerful.

50:31 especially cause you, you don't want to like, I think like, so if you just like

50:36 printed something in a Lambda function, for instance, that's going to be like a different

50:40 row in, like, the default CloudWatch view; how

50:46 it breaks it up is really odd unless you have some kind of structure to them.
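A dependency-free sketch of the structured-logging idea: format every record as a single JSON line, with extra keys appended per call, so an aggregator sees one event with searchable fields instead of fragments. Powertools' `Logger` does this (and much more) for you; the `JsonFormatter` class and the `extra_keys` convention here are made up for illustration.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each record as exactly one JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "message": record.getMessage(),
            "logger": record.name,
        }
        entry.update(getattr(record, "extra_keys", {}))  # per-call fields
        if record.exc_info:
            # json.dumps escapes the traceback's newlines, so it stays one line.
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def make_logger(name: str = "app") -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


logger = make_logger()
logger.info("stop arrival", extra={"extra_keys": {"bus_id": "B12", "stop": 7}})
```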

50:50 Okay.

50:51 And so, yeah, so definitely something to, to consider.

50:54 something else you can do is, yeah, there's, there's metrics you can do.

51:00 So, like, how it works with CloudWatch, they have a specific format.

51:04 And if you use that format, it'll automatically pull that in as a metric,

51:11 and, like, Datadog has something similar where you can actually kind of go in there.

51:15 You can look at your logs and say, like, find a value and be like, I want this to be a metric

51:20 now.

51:20 And so that's really powerful.
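The CloudWatch format mentioned here is the Embedded Metric Format (EMF): a JSON log line with an `_aws` metadata block that tells CloudWatch which top-level keys are metrics and which are dimensions. A minimal sketch that builds one such line; the namespace, metric, and dimension names are hypothetical, and Powertools' `Metrics` utility can emit these for you.

```python
import json
import time


def emf_line(namespace: str, metric: str, value: float, dimensions: dict[str, str],
             unit: str = "Count") -> str:
    """Build one Embedded Metric Format log line (print it from the Lambda)."""
    document = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since the epoch
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    "Dimensions": [list(dimensions)],  # one dimension set
                    "Metrics": [{"Name": metric, "Unit": unit}],
                }
            ],
        },
        **dimensions,   # dimension values are top-level keys
        metric: value,  # so is the metric value itself
    }
    return json.dumps(document)


print(emf_line("SchoolBusDemo", "MessagesProcessed", 3, {"Service": "ingest"}))
```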

51:23 Oh, the metric sounds cool.

51:24 So I see logging and tracing.

51:27 What's the difference between those things?

51:29 Is tracing just a higher level of logging?

51:33 Yeah.

51:34 Tracing, and hopefully I do it justice differentiating the two.

51:40 I feel like tracing does have a lot more to do with your, your performance

51:45 or, maybe even closer to like tracking some of these metrics.

51:49 Right.

51:49 I've used the Datadog tracer a lot.

51:55 And I've used AWS X-Ray, their tracing utility, a little bit too.

52:01 And so like those might, those will show you.

52:04 So like, maybe you are reaching out to a database, writing to S3.

52:08 APM, application performance monitoring, where it says, yes, you spent this much time in a

52:14 SQL query and this much time in

52:17 Pydantic serialization.

52:17 Whereas the logging would say a user has been sent a message.
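Tools like the Datadog tracer and X-Ray instrument libraries automatically, but the underlying idea, timed and tagged spans around units of work, can be hand-rolled in a few lines. A sketch only: the `span` helper, the `SPANS` list, and the tag names are hypothetical, and a real tracer would also nest spans and export them rather than keep them in memory.

```python
import time
from contextlib import contextmanager

SPANS = []  # a real tracer would export these instead of keeping them in memory


@contextmanager
def span(name, **tags):
    """Time the wrapped block and record it, tags included, even if it raises."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        SPANS.append({"name": name, "ms": elapsed_ms, **tags})


def handle(event):
    with span("db.query", reused_connection=True):
        time.sleep(0.01)  # stand-in for a SQL query
    with span("serialize"):
        body = repr(event)  # stand-in for Pydantic serialization
    return body


handle({"bus_id": "B12"})
for s in SPANS:
    print(f"{s['name']}: {s['ms']:.1f} ms")
```

A tag like `reused_connection` is the kind of extra detail that helps answer "am I creating a connection too many times?"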

52:22 Right.

52:23 Exactly.

52:23 Yeah.

52:24 Yeah.

52:24 Yep.

52:24 Tracing is definitely more around your performance and, yeah,

52:28 Things like that.
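
To illustrate the distinction drawn here (tracing records where the time went, such as a SQL query versus serialization, while logging records what happened), here is a toy in-process tracer. It is a hypothetical sketch, not the Datadog or X-Ray API: `MiniTracer` and its `span` context manager are invented names, and real tracers also patch libraries automatically, ship spans to a backend, and propagate context across services.

```python
import time
from contextlib import contextmanager


class MiniTracer:
    """Toy tracer: records named, nested spans with durations and annotations."""

    def __init__(self):
        self.spans = []    # finished spans, in the order they ended
        self._stack = []   # currently open spans, innermost last

    @contextmanager
    def span(self, name, **annotations):
        parent = self._stack[-1]["name"] if self._stack else None
        record = {"name": name, "parent": parent, "annotations": dict(annotations)}
        self._stack.append(record)
        start = time.perf_counter()
        try:
            # The caller can add annotations to the yielded record while the span is open.
            yield record
        finally:
            record["duration_ms"] = (time.perf_counter() - start) * 1000
            self._stack.pop()
            self.spans.append(record)

    def report(self):
        return [f"{s['name']}: {s['duration_ms']:.1f} ms" for s in self.spans]


if __name__ == "__main__":
    tracer = MiniTracer()
    with tracer.span("handler"):
        with tracer.span("sql_query"):
            time.sleep(0.02)   # stand-in for a database call
        with tracer.span("serialize"):
            time.sleep(0.005)  # stand-in for Pydantic serialization
    print("\n".join(tracer.report()))
```

Spans are appended when they finish, so child spans appear before their parent in `tracer.spans`.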

52:28 I see.

52:29 It's kind of insane that they can do that.

52:31 You know, you see it in the Django Debug Toolbar and the Pyramid debug toolbar,

52:36 but they'll be like, here's your code and here's all your SQL queries.

52:38 And here's how long they took.

52:39 And you're just like, wow, that thing is reaching deep down in there.

52:42 You know, the Datadog one is very interesting because it just knows that this

52:48 is a SQL connection, and it tells you, oh, okay,

52:51 this SQL connection took this long.

52:52 And I was like, I didn't even tell it to trace that.

52:55 It just knows really well.

52:58 Yeah.

52:58 It'll say the connection is open, and here's what it sent over SSL, by the way.

53:04 How'd you get in there?

53:05 Yeah.

53:06 Yeah.

53:06 So it's in process.

53:08 So it can do a lot.

53:09 It is impressive to see those things work.

53:12 All right.

53:12 So that's probably what the tracing is about, right?

53:14 Yes.

53:15 Yeah.

53:15 Yeah.

53:15 Definitely.

53:16 Probably more around performance.

53:17 You can put some different things in tracing too.

53:20 I've used it, like with those database connections we talked about, to say,

53:25 oh yeah, this is reusing a connection here,

53:29 because I was trying to debug some stuff: am I creating a connection too many

53:33 times?

53:33 So yeah, you can put some other useful things in tracing

53:38 as well.
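
The connection-reuse check described here relies on a common Lambda pattern: objects created at module scope survive across invocations while the execution environment stays warm. A minimal sketch, assuming `sqlite3` as a stand-in for a real database driver and a hypothetical `connection_reused` flag that, in a real function, you would attach to your trace as an annotation:

```python
import sqlite3

# Module scope persists between invocations while the execution
# environment is warm, so a connection cached here can be reused.
_connection = None


def get_connection():
    """Return (connection, reused) so the caller can record whether it was reused."""
    global _connection
    if _connection is None:
        # Stand-in for a real database driver; sqlite3 keeps the sketch runnable.
        _connection = sqlite3.connect(":memory:")
        return _connection, False
    return _connection, True


def handler(event, context):
    conn, reused = get_connection()
    value = conn.execute("SELECT 1").fetchone()[0]
    # In a real function this flag would go onto the trace as an annotation.
    return {"connection_reused": reused, "value": value}
```

On a cold start the first call reports `connection_reused: False`, and later invocations in the same warm environment report `True`. If every invocation reports `False` under steady traffic, that can be a hint that something is recreating the connection.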

53:38 But yeah.

53:39 And Pat out in the audience says:

53:40 Oops.

53:41 Move around.

53:41 When you're using many microservices, a single execution involves many services.

53:46 Basically, it's hard to follow the logs between the services, and tracing helps

53:50 tie that together.

53:51 Yeah.

53:52 Yeah.

53:52 That's for sure.
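
Following one execution across several services usually comes down to propagating a shared trace or correlation ID. A minimal hand-rolled sketch, assuming each service receives a dict event and passes along a hypothetical `trace_id` field; `orders_service` and `notifications_service` are invented names, and real tracers such as X-Ray or Datadog propagate their own trace context for you:

```python
import json
import uuid


def get_or_create_trace_id(event):
    """Reuse the caller's trace ID if one was passed, otherwise start a new trace."""
    return event.get("trace_id") or str(uuid.uuid4())


def log(trace_id, service, message):
    # Every line carries the same trace_id, so logs from different
    # services can be joined on that single field.
    print(json.dumps({"trace_id": trace_id, "service": service, "message": message}))


def orders_service(event):
    trace_id = get_or_create_trace_id(event)
    log(trace_id, "orders", "order received")
    # Forward the trace ID in the outgoing message to the next service.
    return notifications_service({"trace_id": trace_id, "order_id": event["order_id"]})


def notifications_service(event):
    trace_id = get_or_create_trace_id(event)
    log(trace_id, "notifications", f"notifying about order {event['order_id']}")
    return trace_id
```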

53:53 All right.

53:54 Let's close this out, Tony, with one more thing, and I'm not sure how constructive it

53:59 can be.

53:59 There probably are some ways, but testing, right?

54:02 Yeah.

54:02 Yeah.

54:03 That's definitely.

54:05 If you could set up your own Lambda cluster, you might just run that for yourself.

54:09 Right.

54:10 So yeah.

54:11 How do you do this?

54:12 Right.

54:12 Yeah.

54:13 To some extent, there's a Lambda Docker image that you could run

54:17 locally, and you can do that.

54:19 But if your Lambda is reaching out to DynamoDB, I guess there's technically

54:24 a DynamoDB container as well.

54:27 You could, but it's a lot of overhead to set this up, rather than just doing,

54:32 you know, flask run or whatever the command is to spin

54:37 up a Flask app.

54:38 I press the go button in my IDE and now it's running.

54:41 Yeah.

54:41 Yeah.

54:42 Yeah.

54:42 So that's definitely tricky, and there's more and more tooling

54:47 coming out for this kind of stuff.

54:49 But for unit tests, there's no reason you can't just, you know,

54:55 run them locally.

54:58 But when you start getting into integration tests, you're probably getting to the point where

55:03 maybe you just deploy to actual services.
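
Unit-testing a handler locally is easiest when the AWS dependency is injected rather than created inside the handler. A minimal sketch using only `unittest`: `InMemoryTable` is a hypothetical fake that mirrors the keyword-argument shape of boto3's DynamoDB `Table.put_item(Item=...)`, and `make_handler` is an invented factory, not a Powertools or AWS API.

```python
import json
import unittest


class InMemoryTable:
    """Hypothetical stand-in for a DynamoDB table: stores items in a dict."""

    def __init__(self):
        self.items = {}

    def put_item(self, Item):
        self.items[Item["id"]] = Item


def make_handler(table):
    """Build the Lambda handler around an injected table so tests can swap it out."""

    def handler(event, context):
        body = json.loads(event["body"])
        table.put_item(Item={"id": body["id"], "status": body.get("status", "new")})
        return {"statusCode": 201, "body": json.dumps({"id": body["id"]})}

    return handler


class HandlerTest(unittest.TestCase):
    def test_saves_item_and_returns_201(self):
        table = InMemoryTable()
        handler = make_handler(table)
        response = handler({"body": json.dumps({"id": "bus-42"})}, None)
        self.assertEqual(response["statusCode"], 201)
        self.assertEqual(table.items["bus-42"], {"id": "bus-42", "status": "new"})
```

Saved in a file whose name starts with `test`, this runs with `python -m unittest`. For deployment or integration tests, the same `make_handler` could be given a real boto3 table instead of the fake.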

55:08 And, you know, it's always trade-offs, right?

55:11 There are costs associated with it.

55:13 There's the overhead of, okay, how can I deploy to an isolated environment when maybe

55:19 it interacts with another microservice?

55:20 So yeah, there are definitely trade-offs.

55:25 I can see that you might come up with a QA environment, almost like a mirror image

55:31 that doesn't share any data but is sufficiently close. But then, I mean, that's

55:37 a pretty big commitment, because you're running a whole replica of whatever you have.

55:41 Right.

55:41 Yeah.

55:42 And so, yeah, QA environments are great,

55:45 but you might even want something lower than QA.

55:48 You might want to have a dev environment, or, at one place I worked, we would

55:56 spin up an entire environment for every PR.

55:59 So when you created a PR, that environment got spun

56:05 up, and it ran your integration tests and system tests against that environment, which, you

56:10 know, simulated your prod environment a little bit better than running locally on your

56:15 machine.

56:16 So it's certainly a challenge to test this.

56:20 And yeah.

56:21 And there are always these one-off things too.

56:24 Right.

56:24 You can't really simulate the memory limitation of a Lambda locally

56:30 as well as you can when you deploy it, and things like that.

56:33 So.

56:33 Yeah.

56:34 Yeah.

56:34 That would be much, much harder.

56:36 Maybe you could run a Docker container and put a memory limit on it.

56:40 You know, that might work.

56:41 Yeah.

56:42 Yeah.

56:42 Maybe you're back into more and more DevOps to avoid DevOps.

56:46 Right.

56:46 Yeah.

56:47 Yeah.

56:48 So there it goes.

56:49 But interesting.

56:50 All right.

56:51 Well, anything else you want to add to this conversation before we wrap it up? We're about out of

56:55 time here.

56:55 Yeah.

56:56 I guess, I don't know if I have anything else.

56:58 Hopefully we covered enough.

56:59 There's just a lot of good...

57:01 Yeah.

57:02 There's a lot of good resources.

57:03 The tooling that I've mentioned, like Powertools and Pants, has just amazing communities.

57:09 Powertools has a Discord; go on there and ask for help.

57:13 And they're super helpful.

57:14 Pants has a Slack channel.

57:16 You can join their Slack and ask, you know, about things.

57:19 And so those two communities have been really good and really helpful in this.

57:24 There are a lot of good talks available on YouTube too.

57:27 So yeah, there are definitely resources out there, and a lot of people have,

57:31 you know, fought this for a while.

57:32 So yeah, excellent.

57:34 And you don't have to start from just create function and start typing.

57:38 Yeah.

57:38 Yeah.

57:39 Cool.

57:39 All right.

57:40 Well, before you get out of here, though, let's get your recommendation for a PyPI package,

57:45 something fun.

57:47 You know, we've talked a lot about it, but Powertools is definitely one

57:53 that gets used every day for me.

57:56 So yeah, Powertools for AWS Lambda (Python). They actually support other languages too.

58:03 They have the same functionality for Node.js, you know, for

58:08 TypeScript, and for .NET.

58:09 But this one definitely: leveraging Powertools and Pydantic together

58:17 just really made serverless a lot of fun to write.

58:22 So, yeah, definitely doing great things there.

58:25 Excellent.

58:25 Well, I'll put all those things in the show notes and it's been great to talk to you.

58:29 Thanks for sharing your journey down the serverless path.

58:33 Yeah.

58:34 Yep.

58:34 Thanks.

58:34 Thanks for having me.

58:35 You bet.

58:36 Yeah.

58:36 Enjoyed chatting.

58:37 Same.

58:37 Bye.

58:38 That's it.

58:38 This has been another episode of Talk Python To Me.

58:42 Thank you to our sponsors.

58:44 Be sure to check out what they're offering.

58:45 It really helps support the show.

58:47 Take some stress out of your life.

58:49 Get notified immediately about errors and performance issues in your web or mobile applications with

58:55 Sentry.

58:55 Just visit talkpython.fm/sentry and get started for free and be sure to use the promo

59:01 code talkpython, all one word.

59:03 Mailtrap, an email delivery platform that developers love.

59:07 Try for free at mailtrap.io.

59:11 Want to level up your Python?

59:12 We have one of the largest catalogs of Python video courses over at Talk Python.

59:17 Our content ranges from true beginners to deeply advanced topics like memory and async.

59:22 And best of all, there's not a subscription in sight.

59:25 Check it out for yourself at training.talkpython.fm.

59:27 Be sure to subscribe to the show.

59:29 Open your favorite podcast app and search for Python.

59:32 We should be right at the top.

59:33 You can also find the iTunes feed at /itunes, the Google Play feed at /play,

59:39 and the direct RSS feed at /rss on talkpython.fm.

59:43 We're live streaming most of our recordings these days.

59:46 If you want to be part of the show and have your comments featured on the air,

59:49 be sure to subscribe to our YouTube channel at talkpython.fm/youtube.

59:54 This is your host, Michael Kennedy.

59:56 Thanks so much for listening.

59:57 I really appreciate it.