Pixi, A Fast Package Manager

Episode Deep Dive

Meet the Guests

Wolf Vollprecht

Wolf brings a rich background in robotics, having earned a master's degree in Robotics from Zurich and worked with Disney Research on innovative projects like robots drawing images in the sand. His journey with package management began at QuantStack, where he contributed to the development of Mamba, a fast alternative to Conda.

Ruben Arts

Ruben, also rooted in robotics, has experience building AI-driven robots at Smart Robotics. His work with Conda and Mamba at Smart Robotics led him to join Wolf at Prefix, the company behind Pixi.

What to Know If You're New to Python

If you're new to Python, understanding package management is crucial for maintaining clean and efficient development environments. This episode introduces Pixi, a high-performance package manager that simplifies dependency management and environment setup. Familiarize yourself with the basics of Conda and Mamba, as Pixi builds upon these tools to offer enhanced performance and broader language support. This foundation will help you grasp how Pixi can streamline your Python projects.

Key Points and Takeaways

Introduction to Pixi, a High-Performance Package Manager

Pixi is introduced as a high-performance package manager designed for Python and other languages. Unlike traditional package managers, Pixi not only manages dependencies but also handles Python itself, streamlining the setup process for developers and data scientists.

Addressing Dependency Hell and Reproducibility Challenges

The guests discuss the common issues of dependency conflicts and reproducibility in Python projects. Pixi aims to simplify dependency management by ensuring consistent environments across different systems, reducing the frustration of incompatible package versions.

Comparing Pixi with Existing Package Managers: Conda, Mamba, and NixOS

Pixi is compared to other package managers like Conda, Mamba, and NixOS. While Conda has been a staple in the Python ecosystem, Pixi offers enhanced performance and broader language support. The discussion highlights how Pixi builds upon the strengths of these existing tools to provide a more efficient and versatile package management solution.

Standalone Binary and Cross-Platform Compatibility

One of Pixi's standout features is its ability to be installed as a standalone binary, eliminating the need for pre-installed Python or other dependencies. This ensures cross-platform compatibility, allowing users to set up environments seamlessly on Windows, macOS, and Linux.

Lock Files and Enhanced Reproducibility

Pixi emphasizes the importance of lock files for maintaining reproducible environments. By adopting the YAML-based lock file format used by conda-lock, Pixi ensures that all dependencies, including specific versions and SHA hashes, are consistently installed across different setups.

Seamless Integration with CondaForge and Compatibility with Conda Packages

Pixi maintains 100% compatibility with Conda packages, allowing users to leverage the extensive repository available on CondaForge. This compatibility ensures that users can easily transition to Pixi without losing access to their existing Conda packages.

Inspiration from Modern Package Managers like Cargo

The guests draw inspiration from modern package managers such as Cargo for Rust. Pixi aims to replicate Cargo's ease of use and efficient dependency resolution, making package management more intuitive and less error-prone for Python developers.

Future Plans: PyPI Integration and Expanding Features

Looking ahead, Pixi plans to integrate more deeply with PyPI, the Python Package Index, to support a wider range of Python packages. Additionally, features like Pixi Build will allow users to build packages directly from Pixi projects, further enhancing its utility and flexibility.

Community Involvement and Open Source Contributions

Pixi is actively seeking contributions from the community. With an emphasis on open-source collaboration, the team encourages developers to participate through their GitHub repository and Discord channel, fostering a supportive and innovative development environment.

Packaging Con Conference and Networking Opportunities

Wolf and Ruben are organizing Packaging Con 2023, a conference dedicated to package management in Python and beyond. This event aims to bring together package manager developers, share insights, and explore new ideas to advance the state of package management.

Quotes and Stories

Wolf Vollprecht on Standing on the Shoulders of Giants:

"We're definitely standing on the shoulders of giants. We're taking inspiration from multiple different ecosystems and trying to synthesize the best ideas into our tools."

Ruben Arts on Overcoming Dependency Challenges:

"Having the ability to install any version of the robotic software on any version of Ubuntu was a game-changer for us. It completely unbound us from being locked to a specific distribution."

Wolf on the Magic of Pixi:

"Pixi is like making package management magic. It's a standalone binary that just works, allowing you to focus on building your projects without worrying about the underlying dependencies."

Overall Takeaway

Pixi emerges as a promising advancement in the realm of package management for Python and beyond. By addressing longstanding issues like dependency conflicts and reproducibility, and by drawing inspiration from modern tools like Cargo, Pixi offers a streamlined, efficient, and versatile solution for developers and data scientists. Its seamless integration with existing ecosystems, combined with a focus on community collaboration and continuous improvement, positions Pixi as a potential next-generation package manager. Whether you're grappling with complex dependencies or seeking a more intuitive package management experience, Pixi is certainly worth exploring.

Explore more about Pixi and join the conversation on their GitHub and Discord.

Links from the show

Wolf Vollprecht: github.com/wolfv

Ruben Arts: github.com/ruben-arts

pixi: prefix.dev

Prefix: prefix.dev

Launching pixi: prefix.dev

Conda: docs.conda.io

Conda Forge: conda-forge.org

NixOS: nixos.org

Packaging Con 2023: packaging-con.org

Watch this episode on YouTube: youtube.com

Episode #439 deep-dive: talkpython.fm/439

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript

00:00 In this episode, we have Wolf Vollprecht and Ruben Arts from the Pixi project here to talk about

00:04 Pixi, a high-performance package manager for Python and other languages that actually manages

00:10 Python itself too. They have a lot of interesting ideas on where Python packaging should go,

00:15 and they're putting their time and effort behind them. Will Pixi become your next package manager?

00:20 Listen in to find out. This is Talk Python To Me, episode 439, recorded October 19th, 2023.

00:27 Welcome to Talk Python To Me, a weekly podcast on Python. This is your host, Michael Kennedy.

00:46 Follow me on Mastodon, where I'm @mkennedy, and follow the podcast using @talkpython,

00:51 both on fosstodon.org. Keep up with the show and listen to over seven years of past

00:57 episodes at talkpython.fm. We've started streaming most of our episodes live on YouTube. Subscribe to

01:04 our YouTube channel over at talkpython.fm/youtube to get notified about upcoming shows and

01:09 be part of that episode. This episode is sponsored by Posit Connect from the makers of Shiny. Publish,

01:16 share, and deploy all of your data projects that you're creating using Python. Streamlit, Dash,

01:22 Shiny, Bokeh, FastAPI, Flask, Reports, Dashboards, and APIs. Posit Connect supports all of them.

01:28 Try Posit Connect for free by going to talkpython.fm/posit, P-O-S-I-T.

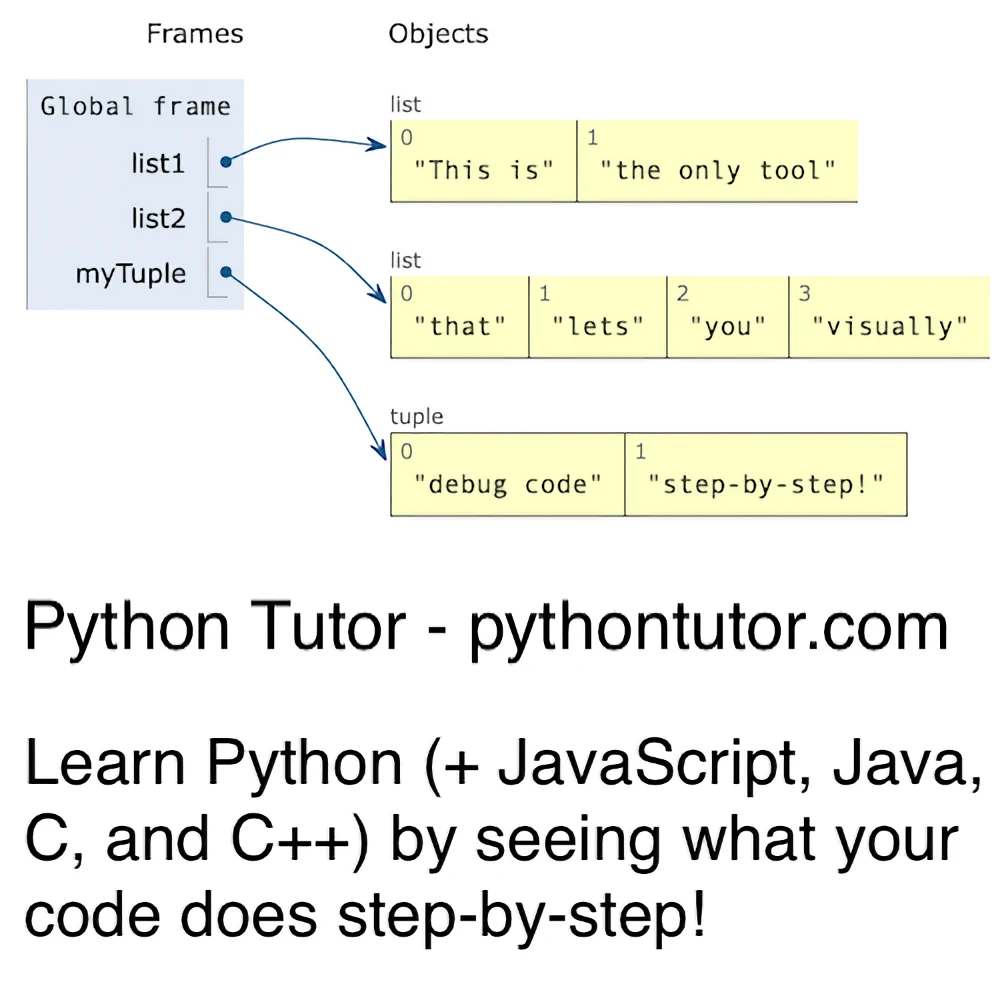

01:34 And it's brought to you by Python Tutor. Visualize your Python code step-by-step to understand just

01:42 what's happening with your code. Try it for free and anonymously at talkpython.fm/python-tutor.

01:50 Wolf, Ruben, welcome to Talk Python To Me. Hello. Thanks for having us. Yeah, it's great to have

01:55 you here. We're going to dive into packaging once again. And we've talked about packaging a couple

02:01 times over the last few months. It's a super interesting topic. And there are these times

02:07 where it seems like there's a fixed way and everyone kind of agrees like this is how you do things.

02:12 For example, I think Flask and Django have kind of been web frameworks for a long time. Then all of a

02:17 sudden, you know, a thousand flowers bloom and there's a bunch of new ideas in the web space.

02:22 I think that was driven by async and the typing stuff. And a bunch of people said, well, let's try

02:27 new things now that we have these new ideas. And the other frameworks were more stable, couldn't make

02:31 those adjustments. And I think people are just, you know, we're kind of at one of these explosion

02:36 points of different ideas and different experiments in packaging. What do you all think?

02:41 Yeah, that's an interesting way to put it. I think we definitely see a lot of interest in package

02:45 management these days and new ideas being explored. But I also think that we're definitely standing on

02:51 the shoulders of giants. So kind of similar to what you just described with the web frameworks,

02:54 where actually, I think we are taking a lot of inspiration from multiple different ecosystems that

03:00 are out there and try to kind of synthesize the best ideas into our tools.

03:05 Yeah, you got some interesting ideas for sure. Ruben?

03:07 I cannot really add to that anymore. I'm standing on the shoulders of giants like, whoa.

03:13 So yeah, absolutely.

03:14 But I think we'll go into that.

03:15 Yeah, we sure will. Now, before we get into the topics, let's just do a quick introduction for folks

03:20 who don't know you. I feel like this is a really interesting coincidence because the very last

03:25 previous show that I did was with Sylvain and Jeremy, a bunch of folks from QuantStack. And,

03:31 you know, just out of coincidence, like I said, your colleagues, right? So,

03:35 Wolf, let's start with you. A little background on you.

03:37 I did work at QuantStack for quite a while. And it's also where my journey with package management

03:42 began. But maybe just taking one more step back, I studied in Zurich and I actually graduated in

03:49 robotics there with a master's degree.

03:51 Yeah.

03:52 That's awesome.

03:53 I had some fun times. I was also working with Disney Research on like a little robot that was

03:58 drawing images in the sand and these kind of fun things. But at QuantStack, we were doing a lot of

04:05 scientific computing stuff, initially trying to like reimplement NumPy in C++, which is a library

04:11 called Xtensor and always doing a lot of package management and mostly in the CondaForge and Conda

04:17 ecosystem. And Conda at some point became really slow and CondaForge became really large. And that led me to

04:24 kind of experiment with new things, which resulted in Mamba. And then I got really lucky and had the

04:31 opportunity to create my own little startup around more of these package management ideas, which is the

04:37 current company called Prefix. And we'll dive more into Pixi and all these new things that we're doing,

04:43 I think, later on.

04:43 Yeah, that's a lot of interesting stuff. What language do you program a robot that writes in the sand in?

04:49 It's always a mix of Python and C++. So I think I stuck to that up until now.

04:53 Yeah, yeah, it sounds like it. Ruben, what's your story? Tell people a bit about yourself.

04:58 Yeah, so I also started in robotics. I did a mechatronics engineering degree. And while working in robotics,

05:06 I started at my previous company, Smart Robotics. And there we were building the new modern AI-driven

05:13 robots. So that also involves a lot of deep learning packages and stuff like that. And that is kind of

05:19 how I got into these package management solutions. And we started using Conda to package our C++ and our

05:26 Python stuff and to make it easy to use in these virtual environments where we combine those packages.

05:33 And it all was made easier by Mamba, which was built by Wolf. So that's how we got in touch.

05:39 And later on, I moved to Wolf's company. So that's why I'm here now.

05:44 Excellent. So you're a prefix dev as well.

05:47 Yes.

05:48 Awesome.

05:48 I'm an expert dev there.

05:50 Yeah.

05:50 Cool. Well, I guess let's start with a little bit of maybe setting the stage. So you all talked about

05:56 Conda and Conda Forge and really relying on that for a while and then wanting better performance,

06:03 some other features we're going to talk about as well. But give us a quick background for those

06:08 maybe non-data scientists or people who are not super into it. What is Conda and what is Conda Forge

06:13 and the relationship of those things? Who wants to take that?

06:15 Conda is, generally speaking, a package manager. That's all it is. Actually has nothing specific to AI,

06:24 ML, data science, et cetera. But most people associate it with Python and machine learning,

06:29 let's say. And Conda is written in Python and it's like, I don't know, 10 or 15 years old.

06:35 And it kind of comes out of an era where there were no wheel files on PyPI and people had to

06:43 compile stuff on their own machines. There was no good Windows support.

06:46 Right. You can't use this. Where's your Fortran compiler? Come on.

06:50 Yeah, exactly.

06:51 What year is this again?

06:53 And you need your GCC, et cetera.

06:55 Yeah.

06:56 That's kind of when Conda was born. And I think it really was one of those early tools that

07:02 tried something with binary package management cross-platform. So basically,

07:06 Conda allowed you to install Python and a bunch of Python packages that needed compiled extensions

07:13 like NumPy, SciPy, et cetera. And it kind of comes out of this Travis Olyphant universe

07:18 of scientific Python tools.

07:21 Yeah. He's made a huge impact for sure.

07:23 Yeah. But for us, sort of the key feature is just that it's like a cross-platform generic package

07:30 manager that you can actually use for any language. So you can also create Conda packages for R.

07:36 And there are actually quite a few R packages on CondaForge, let's say. And you can also do Julia or Rust, et cetera. So there's a lot of possibility and potential. I think it also kind of hits a sweet spot where Conda is really not a language-specific package manager and is at the same time cross-platform. Because usually what you have is you have either like some sort of like Windows package manager or Linux package manager like apt-get or DNF on Fedora. Or you have like a language-specific package manager like pip.

07:53 Or Julia has Pkg.jl or, I don't know, R has CRAN, et cetera. And so Conda kind of sits at the crossroads of those two where it's not language-specific and also cross-platform. And I think that makes it like really interesting. And then maybe I can also talk a little bit about CondaForge, because I think that's a lot of things.

09:11 like an SDK and other like DLLs that we need from the operating system. But everything above is like managed by Conda or Mamba or Pixi. So all of these tools work on the basis of the same packages.

09:23 And that starts at like Bzip2 or Zlib, like these low-level compression libraries, OpenSSL and then up to Python. And then you can also get Qt, which is a graphical user interface library, which is written in C++. And applications that are building on top of Qt. So like, for example, physics simulation engines and stuff like this. So and you also get Qt and lots of libraries like this.

09:48 All is not bound to like a specific operating system in that sense. And that makes it pretty, pretty nice. For example, also in CI, when you want to test your own software and stuff like this, you can use the same commands to set up basically the same packages across different platforms.

10:04 Yeah, nice. So kind of like what Wheels did for pip and PyPI. Conda was way ahead of that game, right? But with a harder challenge, because it wasn't just Python packages. It was all these different ones, right?

10:17 Yeah, including Python itself. So that's also one of the things that people sometimes maybe don't realize, but Python itself is actually properly packaged on CondaForge and installable via Conda or Mamba or Pixi.

10:29 Ruben, anything you want to add to that before we start talking about what you all are creating?

10:33 Yeah, so from my history, it's like this multi-platform stuff is less used in robotics, because a lot of the stuff is still running in Linux, but it moved it from the ability to run it only on Ubuntu to any version you want.

10:49 And you could install any version of the robotic software you're running on, like any version of Ubuntu. So where we were locked, not just to Linux, but locked to a distribution of Linux, we were now like completely unbound and the developers can set up their own environments, which is just really powerful for the user itself. That brought it back into our company in a much better way.

11:14 That's excellent. I'm always blown away at how much traffic these package managers have, how much bandwidth they use and things like that.

11:21 Yeah.

11:22 Who's hosting CondaForge and where you get that stuff from?

11:27 Currently, CondaForge is entirely hosted by Anaconda.org. We do have a couple of mirrors available, but they are not really used. But one of the more exciting mirrors that we have is on GitHub itself.

11:39 GitHub has this GitHub packages feature and we are using an OCI registry where you would usually put your like Docker containers and stuff like that. We upload all the packages there just as a backup. And we're planning to make it usable as well. So that would be nice for like your own GitHub actions and stuff because they could just like take the package from sort of GitHub internally.

12:00 Just right down the server rack in the data center.

12:03 Yeah, exactly.

12:04 Keep it local. Always good to be local.

12:06 Yep.

12:07 Okay. I want to focus mostly on Pixi for our conversation because I think that's got a lot of excitement. Maybe we'll get some time to talk about Mamba and other things as well. But yeah, you all wrote this interesting announcement entitled Let's Stop Dependency Hell talking about Pixi here. I think we can sort of talk through some of the ideas you laid out there and that'll give people a good idea of what this is all about.

12:31 Yeah.

12:31 Yeah. So first of all, let's start with some of the problems you're trying to solve here. So say we've all experienced issues with reproducibility and dependency management. I will tell you just yesterday. And if it was later in the day for me, it would probably be today. I'm running into a problem with my courses website, where I try to install both the developer dependencies and the production dependencies. And it's like this one requires greater than this dependency. And this one requires less than that dependency.

13:01 You can't install it. You can't install it. I'm like, well, how am I supposed to do this? Like, I'd rather have it shaky than impossible. So, you know, it's dependency challenges are all too present for me. But yeah, let's let's maybe you can lay out some of the some of the ideas like what you had in mind when you're talking about reproducibility and challenges here.
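The conflict Michael describes, where one dependency requires a version greater than some bound and another requires a version less than it, can be sketched in a few lines of Python. This is only an illustration: the version list and the two constraint sets are made up, and the comparison is a simplified stand-in for what a real resolver does.

```python
def parse(version):
    """Turn '2.10.1' into (2, 10, 1) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def candidates(available, constraints):
    """Return the versions that satisfy every (operator, bound) constraint."""
    ops = {
        ">=": lambda v, b: v >= b,
        "<": lambda v, b: v < b,
    }
    return [
        v for v in available
        if all(ops[op](parse(v), parse(bound)) for op, bound in constraints)
    ]


# Hypothetical versions of one shared library.
AVAILABLE = ["1.8.0", "1.9.2", "2.0.0", "2.1.0"]

dev_needs = [(">=", "2.0.0")]   # assumed: a developer tool wants the new API
prod_needs = [("<", "2.0.0")]   # assumed: a production package pins the old API

print(candidates(AVAILABLE, dev_needs))               # ['2.0.0', '2.1.0']
print(candidates(AVAILABLE, prod_needs))              # ['1.8.0', '1.9.2']
print(candidates(AVAILABLE, dev_needs + prod_needs))  # []
```

Each set of requirements is satisfiable on its own, but no single version satisfies both, which is why the installer refuses.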

13:21 Yeah, I think you're not alone, first of all. So a lot of people have these kind of problems. And it's also not only in the Python world, let's say, but I think it's maybe a bit more pronounced in the Python world, just because there are so many packages and the way that package management in the Python world works.

13:38 Yeah, I feel like we can always look over the JavaScript. People feel a little bit better, but it's still a challenge for us.

13:43 That's true.

14:45 So it's not only Pixi.

14:46 There's also one thing called rattler-build, which is actually building the Conda packages.

14:50 And there is another.

14:51 And then we have the back end of our website prefix.dev, which is also written in Rust and also uses Rattler underneath.

14:57 So that's really nice for us.

14:59 And the big win.

15:02 This portion of Talk Python To Me is brought to you by Posit, the makers of Shiny, formerly RStudio, and especially Shiny for Python.

15:11 Let me ask you a question.

15:12 Are you building awesome things?

15:14 Of course you are.

15:15 You're a developer or data scientist.

15:16 That's what we do.

15:17 And you should check out Posit Connect.

15:19 Posit Connect is a way for you to publish, share, and deploy all the data products that you're building using Python.

15:26 People ask me the same question all the time.

15:29 Michael, I have some cool data science project or notebook that I built.

15:32 How do I share it with my users, stakeholders, teammates?

15:35 Do I need to learn FastAPI or Flask or maybe Vue or React.js?

15:40 Hold on now.

15:41 Those are cool technologies and I'm sure you'd benefit from them, but maybe stay focused on the data project.

15:46 Let Posit Connect handle that side of things.

15:49 With Posit Connect, you can rapidly and securely deploy the things you build in Python.

15:53 Streamlit, Dash, Shiny, Bokeh, FastAPI, Flask, Quarto, Reports, Dashboards, and APIs.

16:00 Posit Connect supports all of them.

16:02 And Posit Connect comes with all the bells and whistles to satisfy IT and other enterprise requirements.

16:08 Make deployment the easiest step in your workflow with Posit Connect.

16:12 For a limited time, you can try Posit Connect for free for three months by going to talkpython.fm/posit.

16:18 That's talkpython.fm/posit.

16:23 The link is in your podcast player show notes.

16:25 Thank you to the team at Posit for supporting Talk Python.

16:29 If I wanted to stick with, say, Conda, could I still use rattler-build and then somehow upload that to CondaForge, something along those lines?

16:37 Okay.

16:38 So, you can totally, like, that's kind of the baseline sort of commonality between all of these tools is that we are sharing the same sort of Conda packages and the same metadata.

16:48 So, we definitely want to be 100% compatible package-wise with Conda for now.

16:52 Excellent.

16:53 We might have features later on, but we, like, we don't, like, we want to go through, like, Conda as a project has also, like, become much more community oriented.

17:01 And there is, like, a process called Conda Enhancement Proposals.

17:04 And we have already written a few of those.

17:07 So, there are many ideas, but we can talk about that later.

17:10 Trying to improve the overall system instead of overthrowing it.

17:13 Yes, yes.

17:14 Yeah.

17:15 Like, we would love to, like, improve the entirety of, like, Conda packages, CondaForge, and all of this.

17:20 Like, that's our main dream.

17:22 So, and then with some of the low-level tools in Rattler and with Pixi, we're kind of combining a bunch of tools that already existed.

17:28 And one thing essential for reproducibility is that you have lock files.

17:32 So, at the point where you are sort of resolving your dependencies, we are also writing them into a lock file.

17:38 And that's, like, something known from Poetry, from NPM, Yarn.

17:42 Cargo also has it.

17:44 And there's also a Conda project that's called conda-lock that writes lock files.

17:48 And so, we have adopted the same format that conda-lock uses, which is a YAML-based lock file format, and implemented it in Rattler.

17:56 And we are exposing it and using it in Pixi.

17:58 So, anytime you, like, add a new dependency to your project, we write it in a lock file.

18:03 And we make sure that, like, you can install the same packages, the same set of packages, the same versions, and SHA hashes in, like, the future.
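The idea of pinning exact packages and SHA hashes can be sketched roughly as follows. This is not the conda-lock or Pixi format (that one is YAML-based, as Wolf says); the archive names, their contents, and the `verify` helper are hypothetical and only show why recorded hashes make a later install checkable.

```python
import hashlib


def sha256_of(data):
    """Hex digest of the SHA-256 hash of some bytes."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical package archives, standing in for downloaded files.
archives = {
    "python-3.11.6.conda": b"pretend python archive",
    "numpy-1.26.0.conda": b"pretend numpy archive",
}

# "Locking": record the exact file name and content hash at resolve time.
lock = [
    {"file": name, "sha256": sha256_of(blob)}
    for name, blob in sorted(archives.items())
]


def verify(lock, downloaded):
    """Return the locked files that are missing or whose content changed."""
    return [
        entry["file"]
        for entry in lock
        if entry["file"] not in downloaded
        or sha256_of(downloaded[entry["file"]]) != entry["sha256"]
    ]


print(verify(lock, archives))  # [] because everything matches the lock

# Simulate a later download where one archive differs from what was locked.
tampered = dict(archives)
tampered["numpy-1.26.0.conda"] = b"something else"
print(verify(lock, tampered))  # ['numpy-1.26.0.conda']
```

Because the lock records content hashes and not just version numbers, a changed or corrupted archive is detected even when its file name is identical.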

18:13 And the other part about reproducibility, and that's more on the repository side, is that CondaForge never deletes old packages.

18:19 So, that's similar to PyPI, but not really this, like, it's different in a lot of, like, Linux distributions.

18:25 But, with PyPI, it's also the case that, you know, old versions are just kept around.

18:29 Do you ever worry that that might not be sustainable?

18:33 Like, it's fine now, in 20 years, like, we cannot pay for the thing from 20 years, like, it's, we just can't get enough donations to support Flask 0.1.

18:44 We just can't.

18:45 It's out.

18:46 That's the problem of the person that uses Flask 0.1, right?

18:49 Like, that's not the problem of the repository.

18:51 I think we're just making sure that...

18:53 No, no, sure.

18:54 For sure.

18:55 You could still run it, and you should probably sandbox it like crazy so that there are no, like, zero days that could affect your system.

19:02 You do have some things that are, like, self-hosted Conda capabilities that maybe we'll get a chance to talk about.

19:09 Like, theoretically, you could download these and save as a company or an organization or a researcher.

19:16 You could get the ones that actually count for you, right?

19:18 Yeah.

19:19 Like, you mean only have a subset of the packages that you need?

19:21 Yeah, say I'm using 50 packages with the transitive closure of everything I'm using.

19:25 And so I'm just going to make sure I have every version of those on Dropbox or on a hard drive I put away somewhere.

19:32 It's actually pretty funny, because what you create on your local system is a cache of all the packages that you ever used.

19:39 And you could activate that cache as a channel, like what CondaForge is.

19:43 You could make your own channel of all your packages locally.

19:46 This is something we used when the internet went down in our company.

19:50 And we still needed to share packages with each other and needed to make our environments.

19:54 And just some people would spin up their own channel and you could use it from there.

19:58 It's just a different URL.

19:59 Yeah, that's awesome.

20:00 Cool.

20:01 I derailed your...

20:03 There, Wolf.

20:04 No, but yeah, I think like lock files are the basis for reproducibility and then the fact that packages are never deleted.

20:12 I think that's something that like lock files make a little bit like a Docker container sort of.

20:19 Yeah.

20:19 Yeah.

20:20 Because you know exactly what's in your software environment.

20:22 We don't control the outside and we don't do sandboxing as of now.

20:26 But that's kind of the way we think about lock files.

20:28 And it just makes it very convenient also to ship basically that lock file plus the pixi.toml and stuff to your coworker and they can just run it.

20:37 And we also resolve for multiple operating systems at the same time.

20:41 So you can say, you can specify in your pixi.toml if you want Linux, macOS and Windows and we resolve everything at the same time in parallel with async Rust code and stuff like this.
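Pixi does this in async Rust; as a rough analogy only, the same pattern of resolving several platforms concurrently looks like this with Python's `asyncio`. The `resolve_for` coroutine is a made-up stand-in for a real solver call, and the sleep merely simulates network and solver latency.

```python
import asyncio

# Conda-style platform identifiers for Linux, macOS, and Windows.
PLATFORMS = ["linux-64", "osx-arm64", "win-64"]


async def resolve_for(platform, specs):
    """Hypothetical solver call; a real one would fetch metadata and solve."""
    await asyncio.sleep(0.01)  # simulate waiting on the network or the solver
    return platform, sorted(specs)


async def resolve_all(specs):
    """Start one resolve per platform and wait for all of them together."""
    results = await asyncio.gather(
        *(resolve_for(platform, specs) for platform in PLATFORMS)
    )
    return dict(results)


locked = asyncio.run(resolve_all(["python", "numpy"]))
for platform, packages in locked.items():
    print(platform, packages)
```

The waits overlap instead of running one after another, so the total time is close to that of the slowest platform, not the sum of all three.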

20:51 Nice.

20:52 So it's pretty fast and nice.

20:53 And yeah, the idea is that you can send it to your coworker.

20:56 They can just do a pixi run start, which will just give them everything they need and have them up and going.

21:01 Really cool.

21:02 In your announcement for Pixi, one of the things you said is you're looking for the convenience of modern package managers such as Cargo.

21:11 Yeah.

21:12 What's different than say pip and PyPI versus Cargo?

21:16 Like when you say that, what are these new features you're like?

21:18 I wish we had this already.

21:20 We don't.

21:21 So I'm going to build it.

21:22 I think one thing that's just really nice with Cargo is, and that also attracts so many contributors to Rust projects, at least like that's the way I feel about it.

21:30 It's that it's so easy to just say cargo run whatever.

21:34 And it works most of the time, and you just do cargo build and, like, it builds.

21:39 And that's the experience that we want to recreate with Pixi.

21:42 And Cargo also comes with lock files and Cargo just does this pretty nicely.

21:47 I mean, there are some peculiarities about how Rust builds packages or thinks about dependencies where the result is pretty different, let's say from like Python ecosystem and stuff like this.

21:57 But the baseline experience is definitely what we're also striving for.

22:01 And part of the problem is maybe also that pip is not managing Python.

22:05 So you always have that a little bit of a chicken and egg problem where you need to get Python first to be able to run pip.

22:11 And with Pixi, you don't have that problem because we also manage Python.

22:14 So you can specify in your pixi.toml what version of Python you want.

22:18 You get it on Windows, macOS and Linux in the same way.

22:20 And everything is just one command.

22:22 And everything is also locked in your lock file, etc.

22:26 So that's kind of, yeah, we just control a bit more than pip.

22:30 And I think that's what's giving us some power.

22:33 And then pip also, like as far as I'm aware, and we recently had discussions with Python or Python package management developers.

22:41 They haven't come up with a lock file format that works for everyone yet.

22:45 So Poetry has their own implementation and a bunch of other tools maybe have their own implementations as well.

22:50 Right. There's the Pipfile.lock from pipenv and others.

22:53 Yeah.

22:53 We're also kind of working on that.

22:55 I don't know if you saw that, but we just announced another tool that's also low level, sort of on the same level as Rattler, but it's called RIP.

23:02 And it deals with Python resolving and the wheel files.

23:05 Yeah.

23:06 And so we want to kind of cross over those two worlds where we resolve the Conda packages first, then we resolve the Python packages after.

23:14 And we stick everything into the same lock file that will for now be similar to the, yeah, basically based on the conda-lock format, which is a YAML file.
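A very loose sketch of that two-stage idea: Conda packages are handled first, PyPI packages second, and both end up in one combined record. Everything here is an assumption for illustration; the function name, the entry fields, and the rule that a package already provided by Conda is not installed again from PyPI are invented, and the guests say the real semantics are not fully settled.

```python
def resolve_two_stage(conda_specs, pypi_specs):
    """Hypothetical: Conda entries first, then PyPI entries not already covered."""
    entries = [{"name": name, "source": "conda"} for name in conda_specs]
    provided = set(conda_specs)
    entries += [
        {"name": name, "source": "pypi"}
        for name in pypi_specs
        if name not in provided  # assumed rule: the Conda package wins
    ]
    return entries


# Python and NumPy from a Conda channel, scikit-learn from PyPI.
lock_entries = resolve_two_stage(
    conda_specs=["python", "numpy"],
    pypi_specs=["scikit-learn", "numpy"],
)
for entry in lock_entries:
    print(entry["source"], entry["name"])
```

The point of a single combined record is that one file describes the whole environment, regardless of which index each package came from.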

23:22 Interesting.

23:23 So this rip, I'm familiar with it, but I didn't necessarily, in my mind, tie it back to Pixi. But would that allow you to, could you mix and match?

23:30 Like some stuff comes off Conda Forge and some stuff comes off of PyPI, but you express that in your dependency file?

23:37 Yeah.

23:38 Like, parts of the semantics aren't yet figured out, let's say.

23:42 Yeah.

23:43 But the idea is definitely that you can install Python and NumPy, for example, from conda-forge.

23:47 And then, I don't know, scikit-learn from PyPI.

23:49 Like that's maybe not the example of how you would use it, but.

23:52 Yeah, of course.

23:53 Right.

23:53 Maybe you do one of the web frameworks, right?

23:55 Like FastAPI versus some of the scientific stuff from Conda.

23:59 Yeah.

23:59 Yeah.

23:59 Yeah.

24:00 I find the, at least the official Conda stuff.

24:02 Sometimes the framework, certain frameworks are a little bit behind and there are situations

24:06 where having the latest one within an hour matters a lot.

24:11 You know, for example, hey, it turns out, theoretically, it's not real.

24:14 It turns out that say Flask has a super bad remote code execution problem.

24:19 We just found out that if you send like a cat emoji as part of the URL, it's all over.

24:24 So patch it now, right?

24:26 Like you don't want to wait for that to like slowly get through some, you need that now, right?

24:30 And PyPI, I find it's kind of the tip of the latest in that regard.

24:35 I do agree to some extent.

24:37 So it's like, we also found that a lot of, there are these no arch packages, like pure

24:43 Python packages.

24:44 And I think, and there's just way more packages on PyPI.

24:48 And the churn of managing that on conda-forge is a bit high.

24:51 So that's also like, we have lots of reasons.

24:54 And also in real world examples, we often find people mixing PyPI, pip and Conda.

24:58 So that's why we're thinking like, we need proper sort of support for PyPI in our tool to

25:03 make it really nice for Python developers.

25:05 It would take it to another level for sure.

25:07 And it would certainly make it stand out from what Conda does or what pip does, honestly.

25:12 Conda, for example, they, there is a way to kind of like add some Python dependency or pip dependencies,

25:17 but it's really just invoking pip as like a sub process and then installing some additional stuff into your environment.

25:22 And it's not really nice, not really tightly integrated.

25:25 And so we actually did the work and wrote a resolver in Rust.

25:31 So a SAT solver.

25:32 And we've just extended it to also deal with Python or pip metadata, which is kind of what rip is.

25:38 Yeah.

25:39 So that's going to be very interesting just to figure out how to integrate those things and like really make them work nicely together.

25:45 I want to talk about the ergonomics of using Pixi.

25:47 But first, maybe Ruben, you could address this first.

25:50 But I opened this whole conversation with a thousand flowers blooming around the package management story.

25:58 And I think for a long time, what people had seen was they're going to try to innovate within Python.

26:05 So you install Python, you create your environment, and then like you have a different workflow with different tools.

26:11 But some of the new ideas are starting to move to the outside.

26:14 Like we'll also manage Python itself.

26:17 If you say you want Python 3.10 and you only have 3.11 installed, we'll take care of that.

26:21 And something built on Python has a real hard time installing Python because there's this chicken and egg problem: it needs it first, right?

26:27 And it sounds like you all are taking that approach of we're going to be outside of Python, you know, built in Rust or any binary that just runs on its own would work to have a greater control, right?

26:38 So yeah, I know.

26:39 Just what are your thoughts on that?

26:40 Yeah.

26:41 So one of the strong points of Pixi is that you can install it as a standalone binary.

26:46 So you have a simple script or you can even just download it and put it in your machine and then you can install whatever you want.

26:53 So you're not limited to Python alone.

26:55 And in a lot of cases, you want to mix a lot of stuff.

26:59 So sometimes you need a specific version of SSH or sometimes you need a specific version of OpenSSL or whatever your package needs.

27:07 And you would have these long getting-started lists, like, oh, you need to install this with APT, or you need to install this with, name anything, any other package manager, and then you can run pip install and then it should all work.

27:21 And Pixi kind of moves it back to: you have to have Pixi and you have to have the source code of the package that you're running, or you're directly using Pixi to install something.

27:35 And then you're running the actual code that you're trying to run instead of going to read some kind of README from a person on the internet.

27:42 Yeah. And it's also pretty challenging for newcomers.

27:45 To programming.

27:46 This is really focused on making it easy.

27:48 Yeah, exactly.

27:49 I just want to run this.

27:50 You're like, but what am I doing all this terminal stuff?

27:52 Like, I just want to run.

27:53 I wrote the program.

27:54 I want it to go.

27:55 Yeah.

27:56 I feel like maybe that's part of why notebooks and that whole notebooks, Jupyter side of things is so popular because assuming somebody has created a server and got it started for you.

28:04 Like you don't worry about those things, right?

28:06 Yeah, exactly.

28:07 Yeah.

28:07 Let's talk about that beginner experience.

28:10 You have an example on your website somewhere where it just shows that if you check out a repository that's already been configured to use Pixi, it's just git clone, then pixi run start, or something like that.

28:24 Right.

28:25 You don't have to create the environments and even that could potentially happen without Python, even on the machine initially.

28:30 Right.

28:30 Totally.

28:31 Yeah.

28:31 So the funny part of Pixi is that Pixi itself is a Pixi project.

28:35 So if we want to build Pixi, it is a Rust project, but we run pixi run build in this case, or pixi install, pixi run install.

28:43 So you kind of move everything back into the tasks in Pixi, and you can run it using Pixi and Pixi will take care of your environment.

28:52 Nice.

28:53 Yeah.

28:53 So basically, as I also said before, we're learning a lot, for example, from Cargo.

28:57 So we also have a single pixi.toml file that kind of defines all of your dependencies, a bit of metadata about your project, and then you can define these tasks.

29:06 And so like what we see on the screen is that we have a task that's called start and that just runs python main.py.

29:12 So that's pretty straightforward.

29:15 But obviously, like you can go further, like you can have tasks that depend on other tasks, and there we're learning a lot from others.

29:21 So there's a project called taskfile.dev.

29:24 And we also want to integrate caching into these tasks so that if you like one task might download something on your system, like some assets that you need, like images and stuff.

29:33 And if you already have them cached, then you don't need to re download them and these kind of things.

29:37 So we're really like wanting to build a simple but powerful task system in there.

29:41 And that benefits greatly from having these dependencies available.

29:44 Because like in this case, what we see on the screen, we have two dependencies and one of those is Python 3.11.

29:50 And that means the moment you run pixi run start, it will actually look at the lock file and look at what you have in your local environment installed.

29:59 And the environments are always local to the project, which is also a difference to Conda and Mamba.

30:03 So it will look into that environment and check if Python 3.11 is there.

30:07 And if the version that you have in your environment corresponds to the one that's listed in the lock file.

30:12 If not, it will download the version and install it into your environment and like make sure that you have all the stuff that's necessary or listed to run what you need.

30:21 Nice.
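The lock file check described above can be sketched roughly as follows. This is a minimal illustration, not Pixi's actual implementation: the function name `plan_install`, the dictionary shapes, and the version numbers are all assumptions made up for the example.

```python
# Hypothetical sketch: compare what the lock file pins against what is
# installed in the project-local environment, and work out what is missing.
# Names, data shapes, and versions are illustrative only.

def plan_install(locked: dict[str, str], installed: dict[str, str]) -> dict[str, str]:
    """Return name -> locked version for every package that is absent
    from the environment or installed at a different version."""
    return {
        name: version
        for name, version in locked.items()
        if installed.get(name) != version
    }


# Made-up lock file contents and environment state.
locked = {"python": "3.11.6", "numpy": "1.26.0"}
installed = {"python": "3.10.13"}

to_install = plan_install(locked, installed)
print(to_install)
```

When the environment already matches the lock file, the plan is empty and the task can run immediately.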

30:22 This portion of Talk Python To Me is brought to you by Python Tutor.

30:26 Are you learning Python or another language like JavaScript, Java, C or C++?

30:31 If so, check out Python Tutor.

30:33 This free website lets you write code, run it and visualize what happens line by line as your code executes.

30:40 No more messy print statements or fighting with the debugger to understand what code is doing.

30:45 Python Tutor automatically shows you exactly what's going on step by step in an intuitive visual way.

30:50 You'll see all the objects as they are represented in Python memory and how they are connected and potentially shared across variables over time.

30:59 It's a great free tool to complement what you're learning from books, YouTube videos and even online courses like the ones right here at Talk Python Training.

31:07 In fact, I even used Python Tutor when creating our Python Memory Management and Tips course.

31:12 It was excellent for showing just what's happening with references and containers in memory.

31:17 Python Tutor is super easy to check out.

31:19 Just visit talkpython.fm/python-tutor and click visualize code.

31:24 It comes preloaded with an example and you don't even need an account to use it.

31:29 Again, that's talkpython.fm/python-tutor to visualize your code for free.

31:33 The link is in your podcast player show notes.

31:35 Thank you to Python Tutor for sponsoring this episode.

31:38 So for example, you've got in your example Python 3.11, with some flexibility there on the very, very end.

31:47 Yeah.

31:48 Does that download a binary version or does it build from source or what happens when it needs that?

31:53 Yeah.

31:54 So typically, like Conda is a binary package manager.

31:57 So usually what you download is binary.

31:59 Yeah.

32:00 We are working on the source dependency capabilities where also rattler-build, what I mentioned before, is going to play a big role.

32:05 Because the idea is that you can also run pixi build at some point soon and that will build your Conda package out of your Pixi project.

32:12 But we would use the same capabilities to basically also allow you to get local dependencies and then build them ad hoc and put them into your environment.

32:21 Yeah.

32:21 So that comes back to the example you gave before with the problem that there's a huge security bug or something.

32:28 And you would want to use a non-support, yeah, a version that's not shared around the world yet.

32:35 So you need this GitHub link and that package you need to install.

32:40 And that's something we still want to support through this local or URL based dependency.

32:45 But for that, we first need to be able to build it.

32:47 Yeah.

32:48 Kind of like the git+ on pip install.

32:50 Yeah.

32:51 Yeah, exactly.

32:52 I found where this little section was, this "Pixi is made for collaboration" on your announcement

32:57 where it just says git clone some repo, pixi run start, build, whatever.

33:01 Yeah.

33:02 Maybe just talk through like what happens there.

33:04 Because if I don't even have Python, much less a virtual environment, much less the things installed.

33:08 You know, if I try this with Python, if they just say clone this, go here, Python run.

33:13 Like if you don't have Python, it'll just say Python, what is that?

33:16 If you do have Python, it'll say, you know, FastAPI, what is that?

33:20 Right?

33:21 Like there's a lot of steps.

33:22 Yeah.

33:23 So that really simplifies.

33:24 And that's kind of what I was talking about with the beginners as well.

33:27 Like, you know, maybe speak to what's happening here.

33:29 Yeah.

33:30 So when you do pixi run, and you have nothing on your system, right?

33:36 Except for Pixi and that repository.

33:38 Then it's going to create a hidden folder inside of your project.

33:42 That's called .pixi.

33:43 And in there, it will install all of these tools that are dependencies of the project.

33:47 So Python, NumPy, scikit-learn, whatever.

33:50 And then when you do pixi run, it will invoke...

33:54 Actually, there's a thing called deno_task_shell, which we're using.

33:57 And that's basically something like it looks like bash, but it also works on Windows, which

34:02 is like the key feature here.

34:03 Yeah.

34:04 So that will sort of run the task.

34:06 And in this case, like some task is probably defined inside of the pixi.toml and that

34:11 might run something like Python.

34:12 I don't know, start Flask or start Jupyter or, you know, whatever the developer desires

34:19 to do.

34:20 But the cool thing is that it will like in the background, activate the environment, like the

34:24 virtual environment and use it to run your software.

34:27 Yeah, that's really cool.

34:28 And that, yeah, most of that kind of happens behind the scenes.

34:31 So also with Conda, for example, or Mamba, it's usually multiple steps.

34:36 So usually what you would do is you do like Mamba create my environment and then the environment

34:42 will have some name and then you would need to do Mamba activate my environment.

34:45 And then, then only you would be able to run stuff.

34:48 And what you're running is also probably going to look more complicated than just typing pixi

34:53 run some task, which does all of that.

34:55 Right.

34:56 The some task is almost an alias for the actual run command, right?

34:59 Yeah.

35:00 Yeah.

35:01 It could be something very complicated and it could also be multiple tasks that actually

35:04 run in the background because they can depend on each other.

35:06 Excellent.
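The idea of tasks that depend on other tasks can be sketched with a small dependency graph. This is only an illustration under stated assumptions: the task names, commands, and the `execution_order` helper are hypothetical, and Pixi's real task runner may resolve dependencies differently. The standard-library `graphlib.TopologicalSorter` is used here to order dependencies before the tasks that need them.

```python
from graphlib import TopologicalSorter

# Hypothetical task table: task name -> (command, names of tasks it depends on).
tasks = {
    "download-assets": ("python fetch_assets.py", []),
    "build": ("python build.py", ["download-assets"]),
    "start": ("python main.py", ["build"]),
}


def execution_order(target: str) -> list[str]:
    """Return every task needed to run `target`, dependencies first."""
    needed: dict[str, list[str]] = {}
    stack = [target]
    while stack:
        name = stack.pop()
        if name not in needed:
            deps = tasks[name][1]
            needed[name] = deps
            stack.extend(deps)
    # TopologicalSorter takes a mapping of node -> predecessors and yields
    # predecessors before the nodes that depend on them.
    return list(TopologicalSorter(needed).static_order())


order = execution_order("start")
print(order)
```

Typing one task name then stands in for the whole chain, which is the "alias" effect mentioned in the conversation.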

35:07 I really like that the virtual environment or all the binary configuration stuff is a sub

35:14 directory of the project.

35:16 That's always bothered me about Conda.

35:18 If I go, I've got, I think I have about 260 GitHub repos on my, my GitHub profile and I check

35:25 out other people's stuff and check it out.

35:27 And so if I go just to my file system and I go in there, I'm like, I haven't messed with

35:32 this for a year.

35:33 Was that on the old computer? Is it on my laptop?

35:34 Is it on my mini?

35:35 Like what, what was that on?

35:36 I don't.

35:37 So it, it could be, I haven't set it up or maybe I have.

35:39 Right.

35:40 And if I go there and I see there's a venv folder or something along those lines, I'm

35:44 like, Oh yeah, it might be out of date, but I definitely have done something with this

35:47 here.

35:48 I probably can run it.

35:49 Whereas the Conda style, like you don't know.

35:51 What did you name it?

35:52 Yeah.

35:53 If you have 200 of them, what is the right one?

35:55 Do I activate it?

35:56 And then also if something kind of goes haywire, it's like, you know, I'm just gonna rm -rf that

36:02 that folder and it's, it's out.

36:04 Just, just recreate it on the new version of whatever.

36:07 Right.

36:08 But if it's somewhere else, you know, there's just like this, this disconnect.

36:10 I know there's like a command flag override or something to, like, get Conda to

36:14 put it locally, but defaults are powerful.

36:16 Right.

36:17 And I really like that.

36:18 It's, it's like there and you can just blast away the .pixi and, you know, start over

36:22 if you need to.

36:23 We're also using the same tricks that Conda uses and a bunch of other package managers.

36:27 So you can have these multiple environments, but they actually share the underlying files.

36:32 So if you use the same Python 3.11 version in multiple environments, you

36:37 don't duplicate those files.

36:38 You don't lose a lot of storage, for example.

36:40 That's nice.

36:41 And the other thing that's really cool.

36:42 I mean, Conda also gives you that, but you can have completely different Python versions

36:45 in all of these environments.

36:47 And it's, it's very like straightforward to use.

36:50 Like you don't need to run it through containers or stuff like that.

36:53 It's just like all in your system.

36:55 And yeah, very nice and isolated.

36:57 Yeah.

36:58 So one thing that I ran across here that was pretty interesting while just researching this

37:03 is you said Pixi and Conda, like Nix, are language agnostic.

37:07 And I'm like, what is this Nix thing?

37:08 Yeah.

37:09 And that brought me over to NixOS.

37:10 What is, what is this?

37:11 Nix basically is a functional package manager.

37:14 Okay.

37:15 It works with a functional programming language, which is kind of an interesting idea.

37:19 And a lot of people that know Nix really love it.

37:23 So we would like for Pixi to also be as loved as Nix is by Nix people.

37:27 And basically what's nice about the functional programming language is that you kind of know, from the input, the output.

37:35 So you can cache the function execution and you know, okay.

37:39 Like if the function didn't change and the inputs didn't change, then the output is also not going to change.

37:43 Right.

37:44 You can cache the heck out of it.

37:45 You can parallelize it so much and so on.

37:47 Yeah.

37:48 So that's kind of what, like, that's how I understand Nix is that basically you have a function that you execute to, let's say, get bash on your system or get Python on your system.

37:57 And once you have executed that function for that specific Python version, you know, that you have, you know, Python with that hash in your system somewhere.

38:06 And then Nix has some magic to kind of string things together so that you can also sort of do something like a Conda activate where it would put the right version of Python, NumPy and whatever you install through Nix onto your like system path and make it usable.

38:21 Mm hmm.

38:22 And so I think Nix and Pixi are competitors.

38:25 Anyway, the thing about the functional language is that it also makes it like way less beginner friendly, at least in my opinion.

38:32 Yeah, I agree.

38:33 The way Pixi kind of works is like really straightforward in a way that you just define your dependencies and ranges and stuff and you get the binaries. With Nix,

38:42 sometimes you need to like, usually you build things from source.

38:46 So that's also a difference.

38:47 I think they have like distributed caches that you could use and things like that.

38:51 But honestly, I'm not a user of Nix, so I'm not sure how widely these caches, like widely used these caches are.

38:57 But we definitely look at Nix as like also another source of like inspiration.

39:02 And I think they have something really good going for them because people that use Nix, they are like super evangelical about it.

39:09 Well, it also probably helps that it's functional programming, right?

39:12 People who do functional programming, like they love functional programming.

39:15 That's for sure.

39:16 The pureness of it is pretty nice.

39:18 And then Nix also goes like a step further where you can sort of manage your entire like configuration and everything through the same system.

39:26 And that's also pretty powerful.

39:27 And maybe maybe we can find some interesting ways of like supporting something similar.

39:31 But in a way, like if you look at Pixi, I think we don't actually care so much about Conda in a way, or like maybe that's also the wrong way to put it.

39:40 But basically what we're looking at is also like, how does Docker do things and how does Nix do things?

39:45 And like, how can we kind of like learn from those tools?

39:48 And yeah, we have a pretty well defined vision for ourselves.

39:52 And the main part is that we just want to make it easy to get started.

39:55 So you shouldn't have the hassle of learning a new thing to get started.

39:59 You should just know like the bare minimum of information on how to run something.

40:04 And Pixi is there to help you, instead of us doing something with a complete vision that's making it perfect.

40:10 And we're even doing it in the specific OS that you need to install.

40:14 We want this to be used on every OS and we want this to be used by everyone.

40:18 So you can share your code with anyone anywhere.

40:21 That's something we really focus on.

40:23 Sure. The clone and then just pixi run.

40:25 That's pretty easy.

40:26 It's pretty easy for people to do, right?

40:28 I would say so.

40:29 So that's the experience if someone's set up a project for you. On your announcement post,

40:34 you have a nice little example, not a terribly complicated example, of an app or a project you might set up.

40:42 But maybe just talk through, like, if I want to start with just, maybe I have a GitHub repo already, but I haven't set it up. Like, what's the process there?

40:49 If you already have a GitHub repository, for example, you would just do pixi init and then give it...

40:54 Yeah, basically you would just say dot because that's your current folder.

40:58 Or if you don't have anything, you would just do something like pixi init my-project.

41:01 And that will create the my-project folder for you with a pixi.toml file inside.

41:05 And then once you have that, you can do pixi add python and you can use like the specifiers from Conda.

41:12 So you could do something like python=3.11 and that would get you Python 3.11 into the dependencies of that project.

41:19 And it would also install it at that point.

41:22 And after it installs, it creates that lock file that you should also check into your repository so that you know what the latest versions were that were working for your project.

41:32 Okay, like the pinned, basically the pinned versions or constraints.

41:36 Yeah.

41:37 One other thing that happens when you do pixi add is that it actually goes and tries to figure out like what's the latest version that's available for that package and then already puts a pin into your dependencies.

41:46 So what we see on the screen is like we do pixi add cowpy and then it adds cowpy 1.1.5.*.

41:52 So that's a pretty specific version already.

41:54 Nice.
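The pinning behaviour described above (find the latest available version, then record a pin such as 1.1.5.*) could look something like the sketch below. The helper name `latest_pin` and the list of available versions are assumptions for illustration, and the comparison only handles purely numeric dotted versions, which real version schemes do not guarantee.

```python
def latest_pin(available: list[str]) -> str:
    """Pick the newest version from `available` and return it as a wildcard pin.

    Assumes every version is dotted and purely numeric (e.g. "1.1.5").
    """
    def as_tuple(version: str) -> tuple[int, ...]:
        return tuple(int(part) for part in version.split("."))

    newest = max(available, key=as_tuple)
    return f"{newest}.*"


# Made-up list of versions a package index might report.
pin = latest_pin(["1.0.3", "1.1.4", "1.1.5"])
print(pin)
```

Comparing as integer tuples rather than strings matters: as plain strings, "1.9.0" would sort after "1.10.0".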

41:55 And you haven't done it here.

41:56 But the example is pixi run cowpy and then the parameters hello blog reader and it like does the cow saying hello blog reader.

42:03 But when you talked earlier about the tasks or whatever, you could just say create a task called cow and it is Python cowpy hello blog reader.

42:12 Right.

42:12 Right.

42:13 And then you would just say pixi run cow and the same thing would happen.

42:15 Have I got that all put together right?

42:17 That's absolutely the case.

42:18 But basically any binary executable that you have in your environment, like in this case cowpy, you can also call with pixi run whatever.

42:26 Like so you could also do pixi run python and that would start Python 3.11 or whatever you have installed inside of that environment.

42:32 Yeah. And that would actually do the REPL and everything.

42:34 Yeah. Yeah.

42:35 Just like having it globally installed.

42:37 So one other feature of Pixi that we haven't mentioned before is that you can still do global install.

42:42 So sometimes you have that command line tool that you really love.

42:45 One of the things I usually install is bat, which is like cat with wings.

42:50 What you can do with Pixi is you can do a pixi global install bat and that will install bat and make it globally available.

42:56 So you can run it from wherever it's not tied to any like project environment.

43:01 It's just on your system in your home folder, essentially.

43:04 And you can just run that wherever you are.

43:07 And it works.

43:08 The one that comes to mind for me a lot is pipx.

43:12 That's exactly where we got this; we're using similar mechanisms to that.

43:16 So every tool that you installed this way is installed into its own virtual environment.

43:20 So they don't have any overlap.

43:22 You can install versions that are completely unrelated.

43:25 Even the different Pythons, right?

43:27 One thing that I also like a lot about this and, you know, pour one out for poor old PEP something, something, something about the __pypackages__ folder.

43:37 I can't remember what the PEP number is, but basically the idea that if I'm just in the right place, the run command should grab whatever local environment is the one I've set up rather than explicitly go and find the environment, activating the environment, etc.

43:52 So it looks like when you say pixi run, there's no pixi activate or any of those things, right?

43:58 How does that work?

43:59 The way Conda environments work is that you need to have some sort of like little activation thing where basically the PATH variable and other environment variables are changed and adjusted and some other activation scripts are run.

44:10 And with Pixi what we're doing is we run those in the background and then we extract all the environment variables that are necessary for the activation basically to work.

44:19 And then we just inject it right before we execute what you want to execute like cowpy in this case.
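The "implicit activation" described above can be approximated in a few lines: compute the environment variables an activated environment would have, then pass them to the child process instead of changing the user's shell. This is a rough sketch under assumptions: the helper names, the `bin` subdirectory layout, and the `DEMO_PREFIX` variable are invented, and real activation scripts can do considerably more.

```python
import os
import subprocess
import sys


def activated_env(env_prefix: str, extra: dict[str, str]) -> dict[str, str]:
    """Build the variables for a child process: the current environment,
    with the environment's bin directory put first on PATH, plus any
    extra variables that activation would have set."""
    env = dict(os.environ)
    bin_dir = os.path.join(env_prefix, "bin")  # assumed layout
    env["PATH"] = bin_dir + os.pathsep + env.get("PATH", "")
    env.update(extra)
    return env


def run_in_env(command: list[str], env_prefix: str, extra: dict[str, str]) -> str:
    """Run `command` with the injected variables and return its stdout."""
    result = subprocess.run(
        command,
        env=activated_env(env_prefix, extra),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


# The child process sees DEMO_PREFIX even though the parent shell never set it.
out = run_in_env(
    [sys.executable, "-c", "import os; print(os.environ['DEMO_PREFIX'])"],
    "/tmp/demo-env",
    {"DEMO_PREFIX": "/tmp/demo-env"},
)
print(out.strip())
```

Because the variables are only handed to the child process, nothing in the calling shell is modified, which is why no separate activate step is needed.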

44:27 Yeah. So there's like an implicit activate or you don't even have to say activate in Python.

44:31 You can just, if you just use that Python, you say the path to the virtual environment Python run that like that's sufficient.

44:39 Yeah.

44:40 That's more or less what happens.

44:41 Like, yeah, sometimes, you know, packages can have different requirements when it comes to activation.

44:45 So like Python doesn't have many requirements when it comes to activation, but some other packages, they might need some other like environment variables that are specific to the environment location where they are installed, etc.

44:55 Sure. Well, even Python virtual environments can get weird where like you can set environment variables that get set during the activation of the virtual environment, right?

45:04 Like, I don't think many people do that because it's transient, but it could.

45:08 We also have a pixi shell command. So if you want to have that experience of like an activated environment, you can use pixi shell and then it is like basically a shell that acts like an activated environment.

45:20 Like Poetry has the same and many others.

45:22 The example here shows like I'm in the top level of the project and I say pixi run. What if I'm like three directories down and I say pixi run? What happens then?

45:31 The exact same thing will happen because Pixi runs from the root of the project and all your tasks are by default running from the root of the project.

45:39 So you define them with the paths in your project as they always are, and then wherever you are, you can run those tasks as they are.

45:48 But if you want to run something in that directory, you can just use pixi run and then your own commands to do X on that directory.

45:56 And then there's this other way of using it, like Pixi itself will run down the path that you're in and will find the first Pixi project that it encounters.

46:05 And for instance, Pixi itself has some examples.

46:08 So if you move into the example directory and then into one of the examples, those are their own Pixi projects.

46:14 So if you run it there, pixi run start, it will start the example instead of the actual Pixi project of Pixi.
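The project lookup described above, where the nearest enclosing project wins, can be sketched as a walk from the current directory toward the filesystem root. The function name `find_project_root` and the demo directory layout are hypothetical; only the pixi.toml file name comes from the conversation, and Pixi's actual discovery logic may differ.

```python
import tempfile
from pathlib import Path
from typing import Optional


def find_project_root(start: Path, manifest: str = "pixi.toml") -> Optional[Path]:
    """Return the closest directory at or above `start` that contains
    `manifest`, or None if no such directory exists."""
    start = start.resolve()
    for directory in (start, *start.parents):
        if (directory / manifest).is_file():
            return directory
    return None


# Demo layout (made up): an outer project with a nested example project.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp).resolve()
    nested = root / "examples" / "demo"
    deep = root / "src" / "pkg"
    nested.mkdir(parents=True)
    deep.mkdir(parents=True)
    (root / "pixi.toml").touch()
    (nested / "pixi.toml").touch()

    # From a plain subdirectory, the outer project is found.
    outer_ok = find_project_root(deep) == root
    # From inside the nested example, the nested project wins.
    nested_ok = find_project_root(nested) == nested

print(outer_ok, nested_ok)
```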

46:21 Interesting. So you could have a nested one, like there's a main one, but then inside you could have little sub Pixi projects.

46:27 Yeah, a little bit like node and npm in that regard.

46:30 We have an issue that's open about monorepo support and Cargo does a pretty nice job.

46:36 Yeah.

46:37 Yeah.

46:38 That sounds like a really good idea for monorepo support.

46:40 There's a different problem: normally with a monorepo you have some shared dependencies.

46:46 So if you, for instance, have in the root of your repository a Python dependency defined, then you want that shared between all the packages.

46:59 Yeah.

47:00 Down in your repo tree.

47:01 So that's something we still have to support.

47:03 So right now they are like two separate projects and the pixi tool will just find the first project that it encounters.

47:10 But we need some kind of way to define a workspace or monorepo.

47:15 If you would say it like that.

47:16 And then you could like link those environments together.

47:19 And if you start a lower level one, you would start the main one with it or something like that.

47:26 That's still in the works.

47:27 Look at the dependencies of the top one.

47:29 And then you might add some more in your little sub project type of thing, something like that.

47:33 Yeah.

47:34 Well, even what you already have sounds pretty excellent for you.

47:36 Yeah.

47:37 So currently if you have like a system where you have a backend server that's completely Python or Rust or whatever, you could have that as a separate project and then have another project that is like the front end.

47:47 So you do some, you install npm there or whatever.

47:50 And those are completely separate within your repository.

47:53 And the main repository is just some tooling to, for instance, lint everything or, or something like that, or install your base dependencies that you want to use in the, in the complete repository.

48:05 But you could already set it up pretty nicely.

48:08 I'm sure if you have a truly large organization with a monorepo, which for people that don't know, that just means like all the code for the whole organization is in one huge repository instead of a bunch of projects.

48:19 But dependencies across projects, it's just within that kind of that file structure.

48:23 Like it's a lot.

48:24 I was complaining about having a dependency that had two things that wanted the same library, both lower than and greater than some version number.

48:32 Like that's for one project, you know what I mean?

48:34 You put it all together.

48:35 It's only going to get more challenging.

48:36 So tools like this, these sub projects and stuff, I think could help go like, all right, this part needs these things.

48:42 Cause that's the data science part.

48:43 This other part needs that thing.

48:45 Cause that's the microservice part.

48:47 So what else should people know about Pixi? Are you taking PRs and contributions?

48:54 Definitely.

48:55 Like, we're also still pretty early.

48:57 So we love people that test Pixi and give us feedback on like our Discord channel or on like GitHub.

49:05 I think we have discussions open as well and issues, any feedback is appreciated.

49:09 We're really trying to take package management to the next level.

49:12 That includes like building packages that includes like package signing stuff like this, security, et cetera.

49:18 There are so many things and issues to work on.

49:21 And I think it's going to be very fun.

49:23 Yeah.

49:24 I'm also actually organizing PackagingCon.

49:26 Okay.

49:27 That's happening.

49:28 And like a week from now, actually, and really looking forward to that.

49:31 So that's going to be fun to chat with a lot of package manager developers.

49:35 Does it have an online component?

49:37 Yeah.

49:38 So it's in Berlin, but it's also hybrid.

49:40 So you can join virtually if you want.

49:42 Will the videos be on something like YouTube later?

49:45 Yep.

49:46 Yep.

49:46 Okay, cool.

49:47 If the timing lines up, you'll have to give me the link to the videos and I'll put it into the show notes for people.

49:52 I guess we might somehow miss it, like the conference runs but the videos aren't up yet.

49:56 But if they are, you know, send me a link and we'll make it part of the show.

49:59 So people can check it out.

50:00 And one of the Prefix folks will also talk about these Rust crates that we've been building and how it all fits together.

50:07 If you want to learn more about that, and if you want to contribute, or also if you want to learn Rust, we're more than happy to help you and guide you, as time permits, obviously.

50:17 Yeah, we're trying to be really active on our channels.

50:20 So on GitHub, we have some good first issues.

50:23 And if you have some questions, just ask around.

50:26 And in our Discord, we're very active and really try to react as fast as possible to anything.

50:32 Right at the bottom of prefix.dev, you've got your little Discord icon down there.

50:37 So people can click on that to kind of be part of it.

50:40 Right.

50:41 I think it's also on the top.

50:42 Yeah.

50:43 Yeah.

50:44 I see you both are like me and have, like, not accepted that Twitter is called X.

50:51 Yeah.

50:52 I'm not changing mine.

50:53 They should come out with the final logo, right?

50:55 Like, that can't be it.

50:57 It can't be it.

50:58 It's like a child.

50:59 Like I'm just, this is what I got.

51:01 And it's there.

51:02 Maybe I should probably put an "ex-Twitter" in there, just for...

51:05 Yeah.

51:06 Yeah.

51:07 And then a quick question from Elliot: any meaning behind the name Pixi?

51:12 We thought very long about the name.

51:14 We had a bunch of different versions.

51:16 Like initially we thought PX, just P and X, but that was somehow like hard.

51:22 Have you considered X?

51:23 I can't even just use that for whatever.

51:25 Just kidding.

51:26 Sorry.

51:27 Back to Twitter.

51:28 Burnt.

51:29 It is burnt.

51:30 We also thought about pax, like P-A-X, but that's currently an executable that you already

51:36 have on your system if you're using Linux or Mac.

51:39 So that didn't work.

51:40 That'd be tricky.

51:41 Yeah.

51:43 We thought about P E X.

51:44 I don't know.

51:46 We wanted to derive it a little bit from the name Prefix because that's kind of the company

51:50 name, but Pixi seemed really cool because a pixie is, apparently, a magical fairy and we want to

51:55 make package management magic.

51:57 Yeah, exactly.

51:58 It's, I think the name is great.

51:59 It's short enough to type.

52:00 It's pretty unique.

52:01 You can, it's somewhat Google-able, right?

52:04 Yeah.

52:05 And you can pronounce it.

52:06 Yeah.

52:07 That was also important to us.

52:08 Yeah.

52:09 You don't have to debate.

52:10 Is it "pie-P-I" or is it "pie-pie"?

52:12 Like let's say, no, like, make it lowercase.

52:14 Make it lowercase.

52:15 It's not an acronym.

52:16 You don't say the letters.

52:17 We created this thing called micromamba, which I don't want to go into in too much detail,

52:21 but a lot of people complained about micromamba being too long to type.

52:24 So we had to stay under the five character limit.

52:27 Yeah.

52:28 I think there's, there's value in that.

52:30 Yeah.

52:31 There's definitely value in that.

52:32 So let's close out our conversation with where you all are headed.

52:35 What's next?

52:36 Yeah.

52:37 Like we are super excited about a bunch of upcoming features.

52:40 One is definitely what I already mentioned, Pixi build, so that you can build a package

52:44 right away from Pixi.

52:45 To prepare them for conda-forge, right?

52:47 Well, for conda-forge, or like maybe you also have some internal stuff or your own private

52:52 things and stuff.

52:53 We just want to make that easy because it is currently way too hard to make a Conda

52:58 package; it's like a bunch of steps.

53:00 And that's also kind of a prerequisite for using source and Git dependencies on other

53:06 Pixi projects.

53:07 Because basically what we do, what we will do in the background is like, if you depend on

53:12 a source dependency for another Pixi project, we will build it into a package on the

53:16 fly and then put it into your environment.

53:17 And then like integrating with the PyPI ecosystem.

53:20 That's what we're actually working on the most right now.

53:22 And that is the rip thing that I told you about.

53:25 Yeah, that's awesome.

53:26 Because we just see a lot of need in the community to have this.

53:29 A lot of projects in the wild are kind of mixing it.

53:32 Yeah.

53:33 If you're working with PyPI, I will switch my stuff over and give it a try and see how

53:37 it works.

53:38 That would be great.

53:39 Until then I can't, right?

53:40 I've just got, I've got like hundreds of packages and a lot of them I'm sure are just unique to

53:44 PyPI.

53:45 We are not far away.

53:47 Like, I think the hard bits are solved, and that was resolving, because it works quite

53:51 differently from Conda.

53:53 You need to like get the individual wheel files to get the metadata, etc.

53:57 And like that doesn't scale if you need all the metadata upfront, which is actually the case

54:02 in Conda.

54:03 You have all the metadata upfront, but with PyPI you don't.

54:05 And so we had to make the solver lazy.

54:07 We had to make the solver generic and we are through that process now.

54:10 And now it's basically just engineering work in that sense to integrate it with Pixi.

54:14 But it's going to happen and it's going to be nice.
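
The difference between having all metadata up front and fetching it lazily can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `LazyMetadataIndex`, `FAKE_INDEX`, and `collect` are hypothetical names, the "index" is an in-memory dictionary standing in for downloading wheel metadata, and the traversal ignores versions entirely. It is not the solver discussed in the episode.

```python
class LazyMetadataIndex:
    """Fetch a package's dependency list only when it is first asked for."""

    def __init__(self, fetch):
        self._fetch = fetch      # callable: package name -> list of dependency names
        self._cache = {}
        self.fetch_count = 0     # how many (simulated) metadata downloads happened

    def dependencies(self, name):
        if name not in self._cache:
            self.fetch_count += 1
            self._cache[name] = self._fetch(name)
        return self._cache[name]


# Hypothetical package index. Only part of it is reachable from "app".
FAKE_INDEX = {
    "app": ["web", "db"],
    "web": ["http"],
    "db": [],
    "http": [],
    "unused-a": ["unused-b"],
    "unused-b": [],
}


def collect(root, index):
    """Walk the dependency graph from root, asking for metadata on demand."""
    seen = []
    stack = [root]
    while stack:
        name = stack.pop()
        if name in seen:
            continue
        seen.append(name)
        stack.extend(index.dependencies(name))
    return seen


index = LazyMetadataIndex(FAKE_INDEX.__getitem__)
needed = collect("app", index)

print(sorted(needed))      # ['app', 'db', 'http', 'web']
print(index.fetch_count)   # 4, although the index holds 6 packages
```

Only the packages actually reached during the walk trigger a fetch, which is the property that matters when every lookup means retrieving a separate file.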

54:16 I'm sure.

54:17 Yeah.

54:18 We also have some ideas of, like, can we somehow merge pixi.toml into pyproject.toml so that

54:23 it's more natural to Python developers and you only need to manage one file.

54:28 And I think pyproject.toml gives us the flexibility that we would need to do that.

54:32 I think it does.

54:33 You've got things like Hatch and others that have kind of got a way to go in there.

54:38 Yeah.

54:39 And then we have some other ideas that are a bit more out there maybe, but, or not really,

54:43 but, like, we already have a setup-pixi action for GitHub.

54:46 That's really nice.

54:47 And then another idea is like, how can you go from a virtual environment to a Docker image

54:53 easily?

54:54 So that's also something that we're thinking about.

54:56 Okay.

54:57 These kind of things.

54:58 All very exciting.

54:59 Awesome.

55:00 How long has this been around?

55:01 I mean, your blog post is two months old.

55:02 It's announcing this stuff.

55:03 So.

55:04 Yeah.

55:05 I mean, I think we maybe made the repository public months earlier than the blog post or

55:09 so, but, like, Prefix as a company is just a little over a year old.

55:16 And that's when we really started to build the website, the platform, Pixi, Rattler,

55:22 and all of these things.

55:23 So I think Pixi, we started that maybe like five months ago.

55:26 So not too old, still very fresh.

55:29 Yeah.

55:30 Yeah.

55:31 I think it's a new software smell.

55:32 Yeah.

55:33 Exactly.

55:34 Definitely.

55:35 I hope you don't get the, yeah, like, but we also know how to, yeah.

55:40 You don't want that.

55:41 Personally, I'm very surprised how stable it is already.

55:44 And I think that's partly due to the use of Rust and the fact that we can very heavily

55:51 check some of the, you know, workings of the tool before we ship it.

55:55 Well, it looks like it's off to a really good start.

55:57 I like a lot of the ideas here.

55:58 So yeah, keep up the good work.

56:00 Before we wrap it up, we're basically out of time, but there's the, always the open source

56:05 dream of I'm going to build a project.

56:07 It's going to get super popular.

56:09 The dream used to be, I'm going to do some consulting around it, right?

56:13 I've created project X, project X is popular, so I can charge high consulting rates.

56:17 That's the dream of the nineties.

56:19 I think the new dream is I'm going to start a company around my project and, and have some

56:24 kind of open core model and something interesting there.

56:27 You guys have prefix.dev.

56:28 What's the dream for you?

56:29 Like how, what's your, how are you approaching this?

56:31 I think a ton of people would be interested to just hear like, how did you make that happen?

56:36 You know?

56:37 So you saved the hardest question for last.

56:40 You don't have to answer it, but I do think it's, it's interesting.

56:43 Yeah.

56:44 Package management is a hard problem.

56:45 And there are, there are lots of sort of sub problems that I would say enterprise customers

56:51 in a way are willing to pay for.

56:53 That includes, like, security, managed repositories, let's say. Like, basically Red Hat's, more

56:59 or less Red Hat's product is that they have this, like, I don't know, five or 10 years or something

57:04 like that of support for old versions of packages for enterprise customers.

57:09 And I think we have a pretty interesting approach to package management that is pretty easy to kind of grasp.

57:15 And, like, part of why we want to make Pixi build a thing is also because we want people to make more packages and then upload them to our website and kind of grow this entire thing in popularity and make it super useful, so that we hopefully end up with customers that are supporting our work.

57:32 Awesome.

57:33 Well, good luck to both of you.

57:34 And thanks for being on the show to share what you're up to.

57:37 Sure.

57:38 Thank you.

57:39 This has been another episode of Talk Python To Me.

57:42 Thank you to our sponsors.

57:43 Be sure to check out what they're offering.

57:45 It really helps support the show.

57:48 Python Tutor.

57:49 Visualize your Python code step by step to understand just what's happening with your code.

57:54 Try it for free and anonymously at talkpython.fm/python-tutor.

58:00 Want to level up your Python?

58:03 We have one of the largest catalogs of Python video courses over at Talk Python.

58:07 Our content ranges from true beginners to deeply advanced topics like memory and async.

58:12 And best of all, there's not a subscription in sight.

58:15 Check it out for yourself at training.talkpython.fm.

58:17 Be sure to subscribe to the show, open your favorite podcast app, and search for Python.

58:22 We should be right at the top.

58:24 You can also find the iTunes feed at /itunes, the Google Play feed at /play, and the direct

58:30 RSS feed at /rss on talkpython.fm.

58:33 We're live streaming most of our recordings these days.

58:36 If you want to be part of the show and have your comments featured on the air, be sure to

58:40 subscribe to our YouTube channel at talkpython.fm/youtube.

58:44 This is your host, Michael Kennedy.

58:46 Thanks so much for listening.

58:47 I really appreciate it.

58:48 Now get out there and write some Python code.

58:50 I'll see you next time.

58:51 Bye.
