Python Perf: Specializing, Adaptive Interpreter

This episode is about that new feature and a great tool called Specialist which lets you visualize how Python is speeding up your code and where it can't unless you make minor changes. Its creator, Brandt Bucher is here to tell us all about.

Episode Deep Dive

Guest Introduction and Background

Brandt Bucher is a Python core developer who joined the Faster CPython team at Microsoft. He studied computer engineering at UC Irvine, discovering his love for Python during a senior design project. Over time, he became a Python triager, then a core developer, and now focuses on performance improvements within CPython. In this episode, Brandt shares how his passion for open-source contributions and his background in both software and hardware led him to develop a tool called Specialist, which visualizes Python’s new adaptive interpreter optimizations introduced in Python 3.11.

What to Know If You're New to Python

If you're newer to Python and this performance discussion sounds advanced, don’t worry. You just need to be aware of a few basic concepts before diving in:

- Python’s language and runtime are extremely dynamic—variables (their types and locations) can change as the program runs.

- Python 3.11 introduces an optimization layer in CPython called the specializing adaptive interpreter (PEP 659).

- Tools like Specialist help show how Python’s optimizations apply in your code.

- Some math details might get specialized (optimized), but only if your code and data types line up in a way Python expects (like float + float, not float + int).

Key Points and Takeaways

Why Python 3.11 is Significantly Faster

Python 3.11 brings notable performance improvements—often quoted as a 25% speed boost or more for everyday code. Much of this jump is due to the specializing adaptive interpreter, which replaces general-purpose bytecode instructions with specialized ones at runtime.- Links and Tools:

The Specializing Adaptive Interpreter (PEP 659)

This interpreter detects the most common code paths and swaps out general bytecode instructions for specialized ones (e.g.,BINARY_ADDbecomesBINARY_ADD_INTif it consistently sees integers). It then adapts or “falls back” if those assumptions become invalid (e.g., you switch from integer to string addition).- Links and Tools:

Adaptive vs. Specialized Instructions

In Python 3.11, bytecode instructions can be in an “adaptive” state while the interpreter observes real-world data. Once stable patterns emerge (e.g., always floats), the adaptive instructions switch to specialized ones (e.g., float-specific math). If the pattern changes, the interpreter falls back automatically.- Links and Tools:

Transparent Speedups with No Code Changes

One of the best features of the new adaptive interpreter is that most Python users do not need to modify their code to benefit. Simply upgrading to Python 3.11 can result in impressive speed improvements, especially for tight computational loops or repeated function calls.Specialist: Visualizing Where Python Optimizes

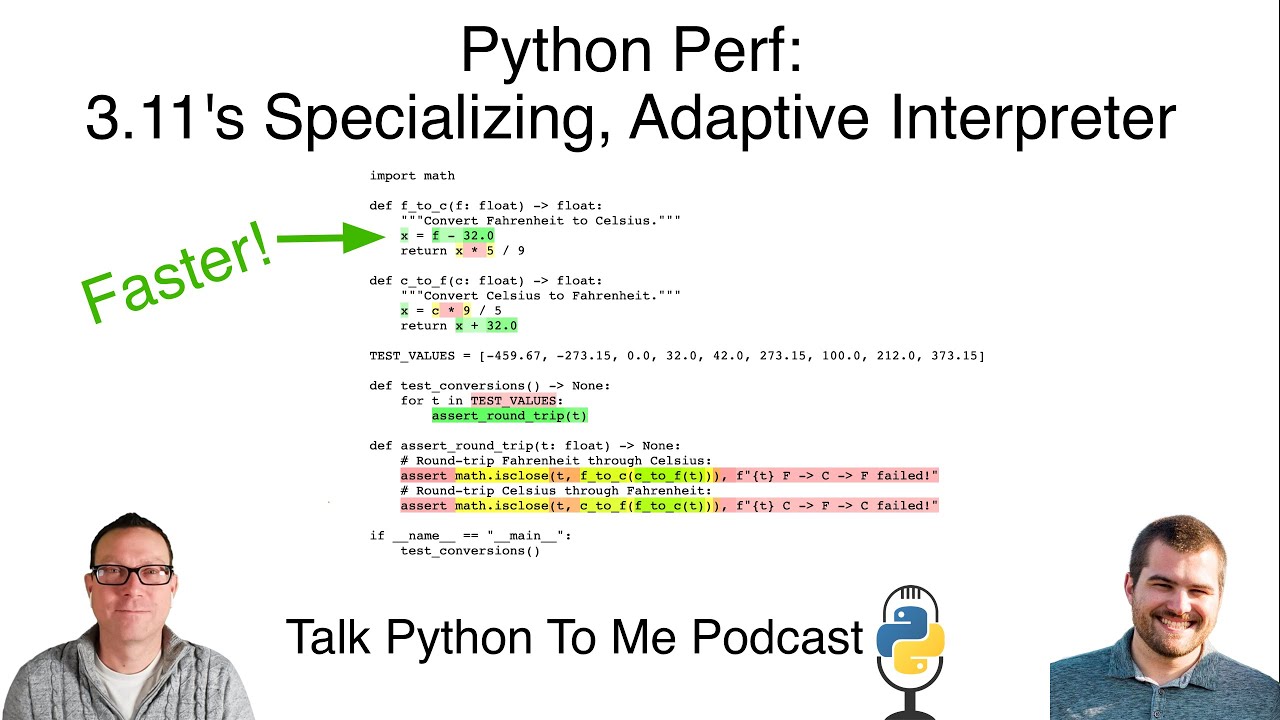

Brandt created Specialist to highlight where code is specialized (in green) or not specialized (in red or yellow). By running your code under Specialist, you get an annotated view showing which parts of your program Python speeds up and which parts remain generic.- Links and Tools:

- Specialist project on GitHub

dismodule for bytecode introspection: docs.python.org/3/library/dis.html

- Links and Tools:

Minor Tweaks for Major Gains

Brandt shared examples like changing32to32.0in a Fahrenheit-to-Celsius function. This small change—ensuring both operands are floats—increases the likelihood Python will specialize the math operations. Reordering or combining certain operations (e.g.,(5/9)*xvs.5*(x/9)) might also trigger compile-time optimizations.Falling Back from Specialized Modes

The interpreter’s fallback mechanism ensures correctness remains paramount. If Python sees unexpected types (e.g., switching from integer to string addition), it discards the specialized instructions and reverts to the slower, more general approach until it has stable assumptions again.- Tools and Concepts:

- Fallback to “adaptive” or “generic” opcodes

- Tools and Concepts:

Collaboration with Other Python Teams

The Faster CPython team regularly collaborates with other performance-focused groups, such as the Cinder folks at Meta (Facebook). While not all custom implementations (like JITs in Cinder) are upstreamed, knowledge sharing accelerates CPython’s performance improvements for everyone.- Links:

Potential Future Directions (3.12 and Beyond)

The team is exploring more specializations and possibly a JIT, but these efforts likely won’t land in 3.12. However, the adaptive interpreter infrastructure lays the groundwork for more advanced optimizations—meaning Python’s speed story will keep evolving.- Tools and Repos:

Practical Advice on Assessing Performance

Brandt recommends upgrading first, seeing if your code gets noticeably faster, and only then using Specialist to find any missed optimizations in truly performance-critical code. For large or production applications, building CPython with profiling stats (--with-pystats) can reveal deeper insights without exposing proprietary code.

- Tools:

Interesting Quotes and Stories

- Brandt on contributing to CPython: “I’d wake up, go to a coffee shop, and for a half hour just look at newly opened issues from first-time contributors … so they wouldn’t have the same slow experience I had.”

- On surprising small tweaks: “Just adding a decimal to an integer changed the math to float-plus-float, letting the interpreter actually speed up your code.”

Key Definitions and Terms

- Bytecode: A lower-level, platform-independent representation of your Python code, executed by the CPython virtual machine.

- Adaptive Instruction: A bytecode placeholder that observes the runtime types and patterns before deciding which specialized approach to use.

- Specialized Instruction: A faster bytecode operation optimized for specific data types (e.g.,

ADD_FLOAT,LOAD_SLOT). - Fallback: The mechanism by which Python discards specialized instructions if runtime conditions no longer match the initial assumptions.

- PEP 659: A Python Enhancement Proposal introducing the specialized adaptive interpreter in Python 3.11.

Learning Resources

If you want to deepen your Python foundations or explore Python 3.11 features in detail, here are some excellent courses from Talk Python Training:

- Python for Absolute Beginners: Covers fundamental Python concepts in a fun, project-based way.

- Python 3.11: A Guided Tour Through Code: Dive into the latest Python 3.11 features, including performance enhancements and new language syntax.

Overall Takeaway

Python 3.11 ushers in a transformative jump in performance by integrating the specializing adaptive interpreter. Brandt Bucher’s work on both CPython’s speedups and his Specialist tool showcases how seemingly small adjustments—like enforcing consistent data types—can significantly impact execution time. By upgrading to Python 3.11, most developers will see notable speed boosts with no code changes. For those wanting to push Python’s performance boundaries further, specialized visualization tools and deeper runtime introspection are now available. The overarching message is clear: Python is growing ever faster and more powerful, and the best is yet to come.

Links from the show

Specialist package: github.com

Faster CPython: github.com

Faster CPython Ideas: github.com

pymtl package: pypi.org

PeachPy: github.com

Watch this episode on YouTube: youtube.com

Episode #381 deep-dive: talkpython.fm/381

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript

Collapse transcript

00:00 We're on the edge of a major jump in Python performance.

00:03 With the work done by the FasterCpython team and Python 3.11 due out in around a month,

00:08 your existing Python code might see an increase of well over 25% in speed with no changes to your code.

00:15 One of the main reasons is its new specializing adaptive interpreter.

00:19 This episode is about that new feature and a great tool called Specialist,

00:23 which lets you visualize how Python is speeding up your code and where it can't unless you make minor changes.

00:29 Its creator, Brant Booker, is here to tell us all about it.

00:32 This is Talk Python To Me, episode 381, recorded September 15th, 2022.

00:37 Welcome to Talk Python To Me, a weekly podcast on Python.

00:53 This is your host, Michael Kennedy.

00:54 Follow me on Twitter where I'm @mkennedy and keep up with the show and listen to past episodes at talkpython.fm.

01:01 And follow the show on Twitter via at Talk Python.

01:04 We've started streaming most of our episodes live on YouTube.

01:07 Subscribe to our YouTube channel over at talkpython.fm/youtube to get notified about upcoming shows and be part of that episode.

01:14 This episode is sponsored by Microsoft for Startups Founders Hub.

01:18 Check them out at talkpython.fm/founders hub to get early support for your startup.

01:24 And it's brought to you by compiler from Red Hat.

01:27 Listen to an episode of their podcast as they demystify the tech industry over at talkpython.fm/compiler.

01:35 Brant, welcome to Talk Python To Me.

01:37 Thank you for having me.

01:38 I'm excited to talk about some of this stuff.

01:39 I am absolutely excited about it as well.

01:42 I feel there's a huge renaissance coming, happening right now, or has been happening for a little while now around Python performance.

01:49 It's exciting to see, especially in just the last couple of years, that you definitely see these different focuses,

01:55 whether it's, you know, improving single-threaded Python performance, multi-threaded Python performance, you know, Python in the browser.

02:02 There's a lot of really cool stuff happening, right?

02:04 Yeah.

02:04 Oh, if we could talk WebAssembly and PyScript and all that, that is a very exciting thing.

02:09 There's probably some performance side around it, maybe something we could touch on,

02:13 but that's not the main topic for today.

02:14 We're going to talk about just the core CPython and how it works, which is going to be awesome.

02:19 Some work you've done with the team there at Microsoft and your contributions there.

02:23 Before we get into all that stuff, though, let's start with your story.

02:26 How did you get into programming in Python?

02:27 Yeah.

02:27 So I originally studied computer engineering, so like hardware stuff at UC Irvine.

02:34 Around my, like, third or fourth year, so like 2017, I encountered Python really for the first time

02:41 in like a project setting.

02:42 Basically, it was a senior design project.

02:44 We get to kind of make whatever we want.

02:46 So we made this cool system that basically it's point cameras at a blackjack table and it's X card counters.

02:52 And if you want to do something like that in, you know, four months or whatever, Python is kind of the way to go.

02:57 Yeah, the CV stuff there is really good, right?

02:59 Yeah.

03:00 So it was NumPy and OpenCV, and that was kind of my first exposure to this stuff.

03:03 And so I learned that I like developing software a lot more than developing hardware.

03:08 So I kind of never looked back and just went full in.

03:13 About a year later, so like 2018, I opened my first PR to the CPython Red Belt,

03:19 and it was merged.

03:20 And you're up to that.

03:21 What was that PR?

03:22 Oh, there's this standard library module called Module Finder.

03:27 Basically, it's one of those ones that's kind of just a historical oddity,

03:32 and it's still in there.

03:33 Basically, you can run it over any Python script.

03:36 It detects all the imports, and so you can use it to build an import graph.

03:39 And I forget exactly what I was using it for.

03:41 I think it was for work.

03:42 And I ran into some bugs that had been just kind of lingering there for years.

03:46 And so I, you know, submitted patches for a bunch of them.

03:50 And so that was kind of my first experience contributing to open source in general.

03:54 That PR was actually open for a while.

03:57 It was open for like, I think it was like a month or two or something like that.

04:02 So in the meantime, I contributed other things to like my Pi type shed, but that's still my first open source contribution.

04:09 It counts the day it was open.

04:10 Yeah, it counts as a beginning or the end of the PR, right?

04:14 Yeah, exactly.

04:15 Because those can be very different things sometimes.

04:17 They can be very different.

04:18 You know, we have now in CPython, we have the, just in Python, I guess, we have the developer in residence with Lucas Schlinger.

04:25 He's there to sort of facilitate making that a lot faster.

04:28 And I feel like people's experience there might be picking up speed and improving.

04:32 Yeah, no, I think that's a great thing.

04:34 And in general, just seeing this kind of shift towards full-time Python core development,

04:38 you know, being funded by these big companies is really exciting to see.

04:43 I think it improves the end contributor experience, the user experience,

04:47 and just gets things done, which is nice.

04:49 I don't have any numbers, but I imagine there are fewer of those kind of lingering,

04:53 you know, months old PRs than there were back when I first started.

04:57 Yeah.

04:58 Way, way long ago, four years ago.

05:00 So far back in the past.

05:02 So long, so long, yeah.

05:04 So yeah, so that was like 2018, 2019, I became a member of the triage team,

05:10 which is basically a team for people who are kind of more involved in Python

05:16 than just your average drive-by contributor.

05:18 So while they're not full core developers, they basically can do anything a core developer can,

05:24 except vote and actually, you know, press the merge button.

05:26 So that was really nice because it made doing things like reviewing PRs easier.

05:30 Tagging issues, closing issues, that sort of thing.

05:34 A year later, I became a core developer.

05:36 How did you get the experience for that?

05:37 Like, how do you, you know, it's one thing to say, well, I've focused on this module and here's this fix.

05:41 And it's another to say, you know, give me anything from CPython and I'll assess it.

05:45 Well, it wasn't give me anything from CPython.

05:47 And it was, you know, made it part of my morning routine to, you know, wake up,

05:51 go to a coffee shop, order a coffee, and just for a half hour, look at newly opened issues.

05:57 And for newly opened PRs.

05:59 Yeah.

05:59 Yeah.

06:00 Well, I focus mostly on PR review for new contributors.

06:03 Basically, I had filters set up that said, you know, show me all the PRs open the last 48 hours from a new contributor.

06:09 I thought, okay, my first contribution experience wasn't that great.

06:12 It'd be great if these people who have never opened a PR to CPython before can get a response within 48 hours.

06:18 Whether that's telling them to sign the contributor license agreement or formatting fixes or pinging the relevant core dev or whatever.

06:24 So that was kind of how I dove into that.

06:27 All this terminology that you're using, PRs and stuff.

06:30 No, this is great.

06:31 What I was going to say is that this is new to Python, right?

06:35 It wasn't that long ago that Python was on Mercurial or before then Subversion.

06:40 It's, you know, it's coming over to GitHub is kind of a big deal.

06:43 And I feel like it's really opened the door for more people to be willing to contribute.

06:47 What do you think?

06:48 Oh, it absolutely lowered the barrier to entry for people like me.

06:51 Like using the old bugstoppython.org was tough at first, but I eventually kind of got used to it just in time for it to be replaced with GitHub issues, which I much prefer.

07:01 But I have a hard time seeing myself emailing patches around or, you know, I have a lot of respect for people where that was the development flow a number of years ago.

07:10 So I became a core developer in 2020.

07:14 And I guess it was about exactly a year ago, almost exactly a year ago, I joined the Python performance team here at Microsoft.

07:21 Were you at Microsoft before then?

07:23 No, I was not.

07:24 I worked for a company called Research Affiliates in Newport Beach.

07:28 And I think you actually had my old manager, Chris Ariza, on the show a couple years ago.

07:33 I have had Chris on the show.

07:34 That's fantastic.

07:34 Small world, huh?

07:36 Yep.

07:36 Small Python world.

07:37 Yeah, small Python world.

07:39 Just a couple million of us.

07:40 No, that's great.

07:42 So this brings us to our main topic.

07:45 And, you know, let's start at the top, I guess.

07:49 You know, there's the faster Python team, I guess.

07:52 I don't know.

07:53 How do you all refer to yourself?

07:55 Yeah, we refer to ourselves as the faster CPython team.

07:58 I think internally our distribution list is the CPython performance engineering team, which sounds a little gnarlier, but it's a lot wordier.

08:06 That's a cool title to have on a resume, isn't it?

08:09 Yeah, I think I'll use that one.

08:11 There you go.

08:12 There's been a ton of progress.

08:14 And some of this work was done in 3.10.

08:17 But, you know, there's this article here that I got pulled up on the screen.

08:21 It says, Python 3.11 performance benchmarks are looking fantastic.

08:25 That's got to make you feel good, huh?

08:27 I mean, they were looking fantastic back in, what, June?

08:29 Yeah.

08:30 They're a small bit more fantastic now.

08:33 So, yeah.

08:33 Yeah, exactly.

08:34 This article's from June.

08:35 So this is a, it's only better from here, you know, from there, right?

08:38 It's really exciting.

08:40 And like I said, it's, this is a performance jump that at least since I've been involved in Python, we haven't seen this in a point release.

08:47 You know, where we're seeing 25, 30%, sometimes more improvements for pure Python code rather than kind of the 5, 10, 15% range that might be more typical.

08:58 And again, I think that's kind of a product of this very conscious effort, whether it's my team or that a lot of people that we interact with.

09:07 So, for example, Pablo, release manager, strength council member at Bloomberg has been working a lot on this stuff with us.

09:14 Outside contributors, do they come to mind or Dennis Sweeney, Ken Jin.

09:19 There's definitely been a focus on this and it's paying off, which is really exciting, like I said.

09:24 Yeah.

09:24 And maybe a little bit more in parallel instead of a cooperative effort, but there's also the Cinder folks over at Meta Facebook.

09:32 That's absolutely a cooperative effort.

09:34 You know, even though Cinder isn't necessarily, we're not merging all of Cinder back into CPython, several of the changes are being upstreamed into CPython.

09:44 And in fact, just earlier this week on Tuesday, we had, I think, like a two-hour meeting where the Cinder team walked our team through how their JIT works.

09:52 Yeah.

09:53 So it's, even though, yeah, it can be seen as a parallel effort, I think it's very collaborative.

09:57 I think also, I, like you, am very much blown away at the step size of the performance improvements.

10:04 It's just super surprising to me that a 30-year-old language and a 30-year-old runtime can get that much better in that short of a time.

10:12 Yeah.

10:12 And I think, again, I feel like I'm going to keep coming back to this, but having, you know, full-time people dedicated to this, having teams of people dedicated to this, I think that's a big part of sort of unlocking this.

10:24 Because some of the things that are required for those big jumps are kind of larger architectural changes that, you know, a single volunteer who's, you know, doing this on their free time probably wouldn't have been able to do without coordinating with others and without throwing, you know, a significant amount of effort at it.

10:41 Yeah.

10:41 I mean, there are people out there, core devs, who have done amazing stuff.

10:44 Shout out to Victor Stinner.

10:46 I feel like a lot of the performance stuff that I've seen in the last couple of years, he is somehow associated with, you know, some major change.

10:53 But the changes that are being undertaken here, they're much more holistic and far-reaching.

10:59 And it really does take a team, I think, to make it reasonable, right?

11:03 What's cool about the 3.11 effort is it's a combination of kind of both sorts of changes.

11:07 So we have, you know, a bunch of kind of one-off, very targeted changes, probably five or six or ten of those.

11:15 And then we have, you know, one or two of these kind of larger changes that, you know, we can build upon in the future.

11:21 And they're never really done, which, you know, that's exciting because it means we get to keep making Python faster.

11:28 Yeah.

11:28 It is exciting.

11:29 I think another area to just highlight real quick before we get into too much detail is, correct me if I'm wrong, but none of this is particularly focused on multi-core parallelism, right?

11:41 No.

11:41 So one member of our team, Eric Snow, he's basically the sub-interpreter guy.

11:46 Exactly.

11:46 Yeah.

11:47 So he is focusing most of his time on, you know, a per-interpreter kill and, you know, all that sort of stuff.

11:55 But, I mean, all the numbers that you're looking at here and all of our benchmarks, it's all single-threaded, single process.

12:02 If you're running Python code, it will get faster without you changing your code, which is awesome.

12:07 Yeah, it's super awesome.

12:09 I want to highlight that because if Eric manages to unlock multi-core performance without much contention or trade-offs or, you know, if you've got an eight-core machine and you get 7x performance by using all the cores, like, that would be amazing.

12:23 But all of this work you're doing applies to everyone, even if they're trying to do that stuff, right?

12:28 So even if somehow we get this multi-core stuff, the computational multi-core stuff working super well, the work that you're doing and the team is doing is going to make it faster on every one of those cores, right?

12:38 So they're kind of multiplicative initiatives, right?

12:41 So if we could get a big improvement in the parallel stuff, it's only going to just multiply how much better this aspect of it's going to be for people who use that, right?

12:49 It's great to be kind of pursuing all these different avenues because they pay off in different timeframes, right?

12:54 Some of these are longer efforts.

12:56 And in Eric's case, the 7x program for very long effort.

12:59 I think he's done a great job sticking with it and seeing it through.

13:03 And some of these we're seeing in point releases.

13:05 And so they absolutely build on each other.

13:09 Like you said, you can get a 7x increase from sub-interpreter just to throw out a number.

13:13 But Python as a whole got 25% or 30% faster than you're seeing much more than a 7x increase.

13:18 Right.

13:19 Absolutely.

13:19 So very, very exciting times.

13:21 Two things before we get into the details of a particular interpreter and stuff.

13:26 Tell me a bit about this team.

13:27 You know, I interviewed Guido and Mark Shannon a while ago, about a year ago, I suppose, about this plan when they were kicking it off.

13:35 And we didn't have these numbers or anything, but we did talk about what we were doing.

13:39 And he talked about the team that he's working with there.

13:41 So he said, certainly it's just more than the two of us.

13:44 You know, tell us about the team.

13:45 Yeah.

13:46 So I think there are seven people.

13:49 So there's Guido and Mark, Eric and me, as we mentioned.

13:52 Another core developer, Urit.

13:54 One other engineer, El.

13:56 And a manager for the team who also does some engineering effort as well and is a member of the triage team, Mike Dronfum.

14:04 Yeah.

14:04 Nice.

14:05 Yeah.

14:05 Yeah.

14:05 He worked on Pyoxidizer.

14:08 Pyodide.

14:09 That's right.

14:09 Pyodide.

14:10 Yeah.

14:10 I don't know why.

14:11 It's a pie.

14:13 There's some kind of like a molecule on the end.

14:16 Exactly.

14:16 Exactly.

14:17 Pyoxidizer.

14:18 That's right.

14:18 That's the foundation for PyScript, actually, which is quite cool.

14:22 Py3 is cool too.

14:23 The other question.

14:24 Yeah.

14:24 Yeah.

14:25 Yeah.

14:25 Yeah.

14:25 Yeah.

14:25 The other thing that I want to ask you about is, so we have these numbers and visibility into Python 3.11 that's got a lot of conversations going.

14:35 And people are saying they're looking amazing and fantastic and other nice adjectives.

14:39 But this is in beta, maybe soon to BRC.

14:42 I'm not sure.

14:43 What is Python 3.11 status these days?

14:46 3.11, we just released our last release candidate, I think this week.

14:52 Right.

14:52 So this, if basically the idea is this, the final release candidate and the actual 3.11 release should be the exact same thing.

15:01 Unless we find any serious bugs that we're fixing before 3.11 won, the release candidate is going to be 3.11.

15:10 Awesome.

15:10 This is as close to stable as any of the releases.

15:13 Almost there, right?

15:14 Okay.

15:14 Yeah.

15:15 Cool.

15:15 So the reason I ask that is a lot of the work you've been doing recently is probably on 3.12, right?

15:20 Yes.

15:20 Yeah.

15:21 So beta freeze, which is basically when we go from alphas into betas, and there's no more basically new features allowed at that point.

15:29 That happens every May.

15:30 And so everything that we've been working on since May goes into 3.12.

15:35 Are you excited about the progress you've made?

15:36 Yeah.

15:37 Yeah.

15:37 Very excited.

15:38 It seems to be still coming along well.

15:39 Yes.

15:39 Yeah.

15:40 And it's nice.

15:41 We still have a lot of time before the next beta.

15:43 Let's talk really quickly about the faster-cpython thing put together by Mark Shannon.

15:48 Guido called it the Shannon plan.

15:50 And the idea is, how can we make Python five times faster?

15:54 If we could take Python and make it five times faster, that would be a really huge deal.

15:57 And again, none of that is multi-core.

16:00 If you could somehow unlock all the cores and you've got eight, that's 35 or 40 or something like that.

16:07 This is an ambitious plan.

16:09 It started out with a little bit of work with 3.10.

16:12 Is that when the adaptive specializing interpreter first appeared, or did it actually wait until 3.11 to show up?

16:18 No, I don't believe we.

16:20 We don't have it at 3.10.

16:21 Yeah, I didn't think so either.

16:22 Missing something.

16:23 Yeah.

16:23 Yeah.

16:24 So that's in 3.11.

16:25 The rest of it looks accurate, though.

16:26 Yeah.

16:27 So then basically that was stage one.

16:29 Stage two is 3.11, where there's a bunch of things, including kicking over the interpreter.

16:34 We're going to talk about the specializing interpreter.

16:37 A bunch of small changes here.

16:38 And then stage three for 3.12 is JIT.

16:42 Have you guys done any work on any of the JIT stuff?

16:45 Right now, it's not looking like 3.12 will ship with a JIT.

16:49 We think there are other changes that we can make that don't require a JIT that will still pay off.

16:56 We're probably planning on at least experimenting with what a JIT would look like.

17:01 Like I said, we already have gotten kind of a guide towards Cinder's JIT.

17:04 And so we're talking kind of high-level architecture and prototyping and that sort of thing.

17:08 But I would be surprised if 3.12 shipped with JIT.

17:13 But it's a long effort.

17:16 So starting now is a big part of that.

17:17 Research is being done, huh?

17:18 Yes.

17:19 Actively.

17:19 Yeah.

17:19 Yeah.

17:20 Cool.

17:20 All right.

17:21 Well, this was put together quite a while ago, back in 2020, as here's our plan.

17:26 And of course, plans are meant to evolve, right?

17:28 But still, looks like this plan is working out quite well because of the changes in performance that we saw already in 3.11 beta.

17:35 And pretty fantastic.

17:37 There are a bunch of changes here about things like zero overhead exception handling.

17:43 I believe there was an overhead for entering the try block.

17:47 Every time you went in or out of a try accept block.

17:51 So even if I did try pass, accept pass, there was overhead associated with that.

17:56 Right.

17:57 So basically, we would push a block that says, oh, if an exception happens, jump to this location.

18:03 Now what we do is we realize, oh, we actually have that information ahead of time when we're actually compiling the bytecode.

18:10 So since the common case is that an exception is raised, then we can store that data in a separate table and say, oh, if an exception is raised at this instruction, then jump here without actually having to do any of that management at runtime.

18:24 So it's a little more expensive.

18:27 I believe if an exception is raised.

18:28 But in the case of an exception, it's not raised.

18:30 I think it is basically as close to truly zero cost as possible.

18:36 This portion of talk, I think it's a little bit more.

18:38 This portion of talk, Python to me is brought to you by Microsoft for startups, founders hub.

18:41 Starting a business is hard.

18:43 By some estimates, over 90% of startups will go out of business in just their first year.

18:48 With that in mind, Microsoft for startups set out to understand what startups need to be successful and to create a digital platform to help them overcome those challenges.

18:57 Microsoft for startups founders hub was born.

18:59 Founders hub provides all founders at any stage with free resources to solve their startup challenges.

19:05 The platform provides technology benefits, access to expert guidance and skilled resources, mentorship and networking connections, and much more.

19:14 Unlike others in the industry, Microsoft for startups founders hub doesn't require startups to be investor backed or third party validated to participate.

19:23 Founders hub is truly open to all.

19:26 So what do you get if you join them?

19:27 You speed up your development with free access to GitHub and Microsoft cloud computing resources and the ability to unlock more credits over time.

19:35 To help your startup innovate, founders hub is partnering with innovative companies like open AI, a global leader in AI research and development to provide exclusive benefits and discounts.

19:45 Through Microsoft for startups founders hub, becoming a founder is no longer about who you know.

19:50 You'll have access to their mentorship network, giving you a pool of hundreds of mentors across a range of disciplines and areas like idea validation, fundraising, management and coaching sales and marketing, as well as specific technical stress points.

20:03 You'll be able to book a one-on-one meeting with the mentors, many of whom are former founders themselves.

20:08 Make your idea a reality today with a critical support you'll get from founders hub to join the program.

20:14 Just visit talkpython.fm/founders hub, all one word, no links in your show notes.

20:19 Thank you to Microsoft for supporting the show.

20:21 I think that's the way it should be.

20:23 You know, exceptions, as the word implies, is not the main thing that happens.

20:28 It's some unusual case that has happened, right?

20:31 So not always, but often means something has gone wrong.

20:34 So if something goes wrong, well, you kind of put performance to the side anyway, right?

20:39 Yeah.

20:39 Well, and, you know, a lot of the sort of optimizations that you want to see, especially in, for example, JIT code or whatever, exceptions are the sort of thing that mess that up.

20:49 Where, you know, if an exception is raised, okay, get out of the TUDIC code, go back to slow land where we know what's going on and have better control of context and everything.

20:57 But yeah, no, it's really exciting to see.

20:59 It's really cool design.

21:01 Yeah, it was Mark Shannon who did this.

21:03 Mark Shannon did a lot of this.

21:05 There's a bunch of improvements coming along, but what I want to really focus on here is the PEP 659, the specializing adaptive interpreter.

21:15 And in addition to being on the team, you've created a really cool project, which we're going to talk about as soon as we cover this one, about how you actually visualize this and maybe even change your code to make it faster.

21:27 Understanding how maybe opportunities might be missed for your code to be specialized or adapted.

21:32 No, PEP 659.

21:34 I mean, the concepts are not too difficult, but the implementation is really hairy.

21:39 So I think it definitely deserves to be gone over 70.

21:43 Because it's really cool when you get down to how it actually works and what it's doing.

21:47 Is this the biggest reason for performance boosts in 3.11?

21:51 I think it's the most important reason for performance boosts.

21:54 I mean, any performance boost kind of depends on what you're doing, right?

21:57 Like we did, for example, Pablo and Mark worked together on making Python to Python calls way faster and way more efficient.

22:04 So if you're doing lots of recursive stuff, that's going to be the game changer.

22:07 If you spend all your time writing loops that just do try, accept, try, accept.

22:12 Yeah.

22:13 That one's better.

22:14 Yeah.

22:15 If you're, yeah, got it.

22:17 Try accept in tight lubes or, you know, if you're, you do lots of regular expressions,

22:21 then our improvements in that area will probably matter more than this.

22:24 But this is cool because we can build upon it to kind of unlock additional performance improvements.

22:32 Sure.

22:33 We can kind of get to that once we have a better idea of how exactly.

22:35 When I look at PEPs, usually it'll say what its status is and what version of Python it applies to.

22:42 And I see this PEP, it doesn't say which version it applies to and its status is draft.

22:46 What's the story here?

22:48 I'm actually not sure what the story is behind the PEP itself.

22:52 I think it's a good informational document that explains, you know, the changes that we did get into Python 3.11.

22:58 But I don't think the PEP was ever formally accepted.

23:02 As you can see, it's just an informational PEP.

23:05 So it's kind of more the design of what exactly we're doing and how we plan to do it.

23:09 Right.

23:10 Because it's not really talking about the implementation so much as like, here's the roadmap and here's what we plan to do and stuff.

23:17 Right?

23:18 Yeah.

23:18 There's a lot of pros in there that says, here's how we might do it.

23:20 But, you know, no promises.

23:22 We could change this literally anytime.

23:24 And we have.

23:25 Yeah.

23:25 The design has changed fairly significantly since this PEP was first published.

23:30 But we've updated that so it can remain.

23:32 Yeah.

23:33 Cool.

23:33 Okay.

23:34 So the background is when we're running code, it's not compiled and it's not static types.

23:41 Right?

23:41 It's because it's Python.

23:43 It's dynamic.

23:43 And the types could change.

23:45 They could change because it just uses duck typing and we might just happen to pass different things over.

23:50 I mean, we do have type hints.

23:52 But as the word there is, it applies as a hint, not a requirement like C++ or C# or something.

23:58 You have to have the CPython runtime be as general as possible for many of its operations.

24:05 Right?

24:06 Yeah, absolutely.

24:06 And beyond just types, things like I could create a global variable at any time.

24:11 I could delete a global variable at any time.

24:13 I could add or remove arbitrary attributes.

24:16 All these sort of very Pythonic things about Python or un-Pythonic, depending on how you're looking at it.

24:22 These are all things that would never fly in pilot length.

24:25 Yeah, and they mean you can't be overly specific about how you work with operations.

24:31 So, for example, if you say, I want to work with call this function and pass it the value of X.

24:36 Well, where did X come from?

24:38 Is X a built-in?

24:39 Is it a global variable?

24:41 Is it at the module level?

24:43 Is it a parameter?

24:45 Is it a local variable?

24:46 All these things have to be discovered at runtime, right?

24:49 For the most part, yeah.

24:50 Yeah, for the most part.

24:51 Part of this adapting interpreter is it will run the code and it says, look, every single time they said load this variable called X, it came out of the built-ins, not out of a module.

25:04 And so we're going to replace that code, specialize it, or quicken it.

25:08 I've heard of quickening, so I'll have to work on the nomenclature a little bit there.

25:11 We can clear up the terms a bit, yeah.

25:14 Yeah, yeah, but you're going to take this code and you're going to replace it and don't say just load an attribute or load a value from somewhere and go look in all the places.

25:22 You're like, no, no, no, go look in the built-ins and just get it from there.

25:25 And that skips a lot of steps, right?

25:27 Yep, yeah.

25:28 Or maybe I'm doing math here and every single time it's been an integer.

25:31 So let's just do integer math and not arbitrary addition operator.

25:35 With the huge asterisk that if it does become a global variable or if it does start throwing floats at your addition operation, that we don't say false or even produce an incorrect result.

25:49 Right, because you could say, use x.

25:51 But before that, you might say, if some value is true, x equals this thing.

25:55 And it goes from being a built-in to a local variable or some weird thing like that, right?

26:00 And if it overly specialized and couldn't fall back, well, bad things happen, right?

26:05 Yeah, it would be surprising if you were getting len, the len function from the built-ins over and over and over.

26:10 And then you, for some reason, define len as a global.

26:14 Python, you know, the language specification says it's going to start loading the global now,

26:18 regardless of, you know, where it made it be able to pull.

26:21 And that's the same for, you know, like attribute accesses.

26:24 If you used to be getting an attribute off the class and then you shadow it on the instance,

26:28 you need to start getting it from the instance now.

26:30 You can't keep getting it from the class.

26:31 Is it correct?

26:32 Right.

26:32 And this is one of those problems that arises from this being a static dynamic language that can be changed as the code runs.

26:39 Because if this was compiled, wherever those things came from and what they were, they can't change.

26:44 Their type was set.

26:45 Their location was set.

26:47 And so then the compiler can say, well, it's better if we inline this.

26:51 Or it's better if we do this machine operation that works on integers better or some special thing like that.

26:59 Right.

26:59 It doesn't have to worry about it falling back.

27:01 And I feel like that ability to adapt and change and just be super dynamic is what's kind of held it back a lot.

27:07 Right.

27:07 Yep.

27:08 And I like that word they used, adapt, because that's kind of a big part of how the new interpreter works.

27:12 You can change your code and the interpreter adapts with it.

27:15 If X starts being an attribute on the instance rather than from the class, well, soon the interpreter will learn that sometime later after running your program.

27:24 And then, OK, start doing the fast path for instance access rather than class access.

27:29 Let's start there.

27:30 How does it know?

27:31 Right.

27:31 It doesn't compile.

27:32 So it has to figure this stuff out.

27:36 Right.

27:36 I run my code.

27:37 Why does it know that I can now treat these things X and Y's integers versus strings?

27:42 Yeah.

27:42 So stepping back a little bit, like how does this new interpreter work?

27:46 So the big change, kind of the headline is that the bytecode instructions can change themselves while they're running.

27:54 Something that used to be a generic ad operation can become something that is specialized, which is kind of the specialized instruction is the terminology we use for adding two integers or adding two floats.

28:07 And so this happens sort of in a couple of different phases.

28:10 After you've run your code for some amount of time, basically after we've determined it's worth optimizing because optimization is free.

28:18 So if something's only run once, you know, it's module level code or a class body.

28:22 There's no, no reason to optimize that at all for like leader runs.

28:26 Yeah.

28:26 Yeah.

28:27 We have heuristics for, okay, this, this code is warm.

28:30 And that's, you know, a term that you hear in JITs often because it's a higher order for specializing.

28:35 Basically after we've determined that a block of code is warm, we quicken it, which is that term that you used earlier.

28:43 And this basically means walking over the bytecode instructions and replacing many of them with what we call adaptive variant.

28:51 And, you know, you can, you can see an example in the pet, but you have to kind of walk through that example.

28:55 If you have an attribute load, once the code is quickened after we've determined it's warm, we walk over all the instructions.

29:01 All of the load attributes by code instructions become load adder adaptive.

29:06 And what those adaptive instructions do is when we hit them, when we're actually doing the attribute load, in addition to actually loading the attribute, they will kind of do some fact finding.

29:18 They'll gather some information and say, okay, I loaded the attribute, did it come from the class, did it come from the instance, did it come from the plot, did it come from a dict, did it come from a module?

29:26 You know, there's, there's a bunch of different possibilities.

29:28 Oh.

29:29 And so using that information, the adaptive instruction can turn itself into one of the specialized instructions.

29:36 So the example you have here on the screen can either be load adder instance value or module or slot.

29:41 And what the specialized instructions do is really cool.

29:44 Basically, they start with a couple of checks just to make sure if the assumptions are holding true.

29:49 So for a load adder instance value, we, you know, check and make sure that, for example, the class of the object is as we expect.

29:58 Then our attribute isn't shadowed by a descriptor or something weird like that, or that we're not now getting it off of a class object or whatever.

30:06 And then it has a very optimized form of getting the actual attribute.

30:11 There's a lot of expensive work that you can skip if you know that, you know, you have an attribute that is coming directly off the instance.

30:19 Or another one is load adder slot.

30:22 Slots are really interesting for Python performance.

30:25 And, you know, they kind of capture more than a lot of the stuff, the difference of the possible and the common.

30:31 And by that, I mean, it's possible that every time you access a field off of a class, that it was dynamically added and it came from somewhere else and it's totally new and it could be any type.

30:41 What's more likely, though, is it's, you know, the customer always has a name and the name is always a string.

30:46 Right.

30:47 And with slots, you can sort of say, I don't want this particular class to be dynamic.

30:52 And because of that, you can say, well, it doesn't need to have a dictionary that can evolve dynamically, which improves the access speed and the storage and all of that.

31:01 And here you all are pointing out that, well, we could actually have a special opcode that knows whenever I access X, X is a slot thing.

31:09 Skip all the other checks you might have to do before you get there.

31:12 Well, yeah.

31:13 And even accessing the slot is going to be faster.

31:16 So I'm not 100% brushed up on how load adder slot works, but the slots are implemented using descriptors.

31:23 So to get the slot from your class, you still, or from your instance, you still need to go to the class, look up the attribute in the class dict, find the descriptor, verify it's a descriptor, call the descriptor.

31:35 Into that list.

31:36 Yeah.

31:36 Exactly.

31:37 We can do it even faster than that.

31:39 So even if you do have slots, this happens really fast without even any dictionary lookups.

31:44 We say, has the class changed?

31:46 No.

31:46 Okay, cool.

31:47 We'll get to this later, but we remember what offset the slot was at last time and we just reach directly to the object.

31:55 Grab it.

31:55 There's no dictionary lookups.

31:57 We're not calling any descriptors, doing anything like that.

32:00 It's about as close to, you know, a compiled language as a dynamic language would be.

32:04 Yeah.

32:05 Just verify it.

32:06 Class has a change.

32:06 Okay, reach in.

32:07 I remember where it was.

32:08 You know, I tried to kind of align this up saying the possible and the common.

32:11 Most likely your code is not changing.

32:14 And when it's well written, it's probably using the same type.

32:17 You know, it's not like, well, sometimes it's a string and sometimes like that is the quote seven.

32:22 And sometimes it's the actual integer seven.

32:24 Like it should probably always be one.

32:26 You just don't express that in code unless you're using type hints, right?

32:30 And they're not enforced.

32:31 Yeah.

32:31 Yeah.

32:32 And getting back to the adaptive nature and making sure that we are correct.

32:36 You know, if we had something that was a slotted instance coming through a bunch of times and that suddenly you throw a module in or something with an instance dictionary or something else,

32:45 or maybe the attribute doesn't exist or those quick checks that I mentioned that happen before we actually do the fast implementation of loading a slot.

32:55 If any of those checks fail, then we basically fall back on the generic implementation.

33:00 We say, oh, our assumptions don't hold.

33:03 Go back.

33:03 And if that happens enough time, then we go back to the adaptive form and the whole cycle.

33:07 So if I'm throwing a bunch of integers into an add instruction and then later I stop and I start throwing a bunch of strings into an add instruction,

33:15 we'll do the generic version of add for a little bit while we're kind of switching over.

33:19 But then the interpreter will kind of get the hint and start, you know, specializing for string edition later.

33:25 And it's really cool to see that happen.

33:28 This portion of Talk Python To Me is brought to you by the Compiler Podcast from Red Hat.

33:32 Just like you, I'm a big fan of podcasts.

33:35 And I'm happy to share a new one from a highly respected and open source company.

33:40 Compiler, an original podcast from Red Hat.

33:43 With more and more of us working from home, it's important to keep our human connection with technology.

33:48 With Compiler, you'll do just that.

33:50 The Compiler Podcast unravels industry topics, trends, and things you've always wanted to know about tech through interviews with people who know it best.

33:58 These conversations include answering big questions like, what is technical debt?

34:01 What are hiring managers actually looking for?

34:04 And do you have to know how to code to get started in open source?

34:07 I was a guest on Red Hat's previous podcast, Command Line Heroes, and Compiler follows along in that excellent and polished style we came to expect from that show.

34:16 I just listened to episode 12 of Compiler, How Should We Handle Failure?

34:20 I really valued their conversation about making space for developers to fail

34:24 so that they can learn and grow without fear of making mistakes or taking down the production website.

34:29 It's a conversation we can all relate to, I'm sure.

34:32 Listen to an episode of Compiler by visiting talkpython.fm/compiler.

34:36 The link is in your podcast player's show notes.

34:38 You can listen to Compiler on Apple Podcasts, Overcast, Spotify, Pocket Cast, or anywhere you listen to your podcasts.

34:44 And yes, of course, you could subscribe by just searching for it in your podcast player,

34:49 but do so by following talkpython.fm/compiler so that they know that you came from Talk Python To Me.

34:55 My thanks to the Compiler Podcast for keeping this podcast going strong.

35:01 Right, so we shouldn't, we being Python developers that write code that just executes without thinking too very much about what that means,

35:09 we should not have to worry or maybe even know that this is happening, right?

35:14 If everything goes as it's supposed to, it should be completely transparent to us.

35:18 Yes.

35:19 The only way that you should know that anything is different about 3.11 is your CPU cycles.

35:25 The cloud hosting bill is the end of the month.

35:28 You should be able to upgrade and if behavior changes, that's a buck.

35:32 Tell us about that.

35:33 Going from this adaptive version, the adaptive instance sounds slightly more expensive than the,

35:39 just the old school load adder, for example, because it has to keep track and it does a little bit of inspecting to see what's going on.

35:46 But then the new ones, once it gets adapted and quickened, it should be much faster.

35:50 Is there a chance that it gets into some like weird loop where just about the time the adaptive one has decided to specialize it,

35:58 it hits a case where it falls back and it like it ends up being slower rather than faster before?

36:03 Well, yeah, the worst case scenario would be, you know, you send the same type through n number of times

36:10 and then right when it's going to try to specialize you, set it through a different type.

36:13 Exactly.

36:14 Whatever, if n is the limit, like if it goes to n plus one times and then trips it back.

36:18 Yeah.

36:19 Yeah.

36:19 Yeah.

36:20 So that would be sort of the worst case scenario.

36:22 But we have kind of ways of trying to avoid that if at all possible.

36:26 Okay.

36:27 So, for example, if we fail one of those checks, we don't immediately turn into the adaptive form.

36:33 We will do it after, you know, that check has failed a certain number of times.

36:38 And as just a concrete example, that number of times that we have hard coded is a prime number.

36:43 So it's less likely that you'll fall into these sort of patterns where it's like, oh, I send three ins through that string.

36:49 Three ins.

36:50 It'd be hard to, you know, get that worst case behavior without being intentionally malicious.

36:54 By the way, we got to keep in mind, like these are extremely small steps in our code, right?

37:01 We might have multiple ones of these happening on a single line of what looks like, oh, there's one line of code.

37:07 Like, well, there's five or however many instructions, bytecode instructions happening.

37:12 And some of them may be specialized and some of them not, right?

37:15 Yeah, exactly.

37:16 And so the overall effect definitely smooths out where, sure, you may have a worst case behavior at one or two or three individual bytecode instructions.

37:24 But your typical function is going to have much more than that, even a smallish function is going to have, you know, 20 or 30 instructions doing it, you know, real.

37:32 Yeah.

37:32 Yeah.

37:33 If you care about its performance, it'll be doing a lot there.

37:35 Exactly.

37:36 And so some will specialize successfully, some won't.

37:38 But in general, your code will get 25-ish percent faster.

37:42 Is there a way you could know?

37:44 I mean, is there a way that you might be able to know if it specializes or not?

37:46 We'll get to that.

37:47 But it looks like if I go and use the disk module, D-I-S, not for disrespect, but for disassembly.

37:57 Disassembly, yeah.

37:57 So you can say, you know, import disk and then maybe from disk, import disk.

38:02 You can say disk and give it a function or something.

38:04 It'll write out the bytecode of what's happening.

38:07 Does all of this mean that if I do this in 3.11, I might see additional bytecodes than before?

38:14 You know, instead of load adder, would I maybe see the load adder instance value?

38:17 Will I actually be able to observe these specializations?

38:20 If you pass a keyword argument to your disk utilities, then yes, you will be able to see.

38:26 Okay.

38:26 But if I don't, for compatibility reasons like load adder adaptive and load adder instance,

38:32 those are just going to return as load adder?

38:34 Load adder.

38:35 Exactly.

38:35 Okay.

38:36 Yeah.

38:36 So the idea is anyone that's currently consuming the bytecode, they shouldn't have to worry about specialization because the idea is they're totally transparent.

38:43 You know, so they should only see what the original bytecode was.

38:47 But if you want to get at it, then yeah, if you get any of the disk utilities,

38:51 you could pass it adaptive through and that will show you the adaptive.

38:56 And again, you'll only see them if it's actually gets quick at meaning if you run it,

39:00 you know, a dozen times or something.

39:02 Okay.

39:02 So if I wrote, say a function and so often what you're doing, if you're playing with disk is like you write the function and then you immediately write,

39:10 print out the disk output.

39:12 Maybe you've never called it.

39:13 Right.

39:14 And so that might actually give you a different, even if you said yes to the specialization output,

39:19 that still might not give you anything interesting.

39:22 It won't give you it.

39:23 Yeah.

39:23 It'll just give you as if you hadn't passed the keyboard argument.

39:25 Because again, this all happens at runtime.

39:27 So if the code isn't being run, nothing happens.

39:29 You know, we don't specialize code that is ever run.

39:33 What counts as warm, Mike?

39:35 How many times do I got to call it?

39:36 The official answer is that's an implementation detail of the interpreter subject to change at any time.

39:41 The actual answer is either eight calls or eight times through a loop in a function.

39:46 So if your function has a loop that executes more than eight times, or if you call it more than eight times.

39:52 So just calling your function eight times should be enough to quicken it.

39:55 Right.

39:56 Well, maybe that number changes in the future, but just having a sense, like, is it 10 or is it 10,000?

40:02 You know what I mean?

40:03 Like, where's the scale up before I see something?

40:07 Yeah, if you want to make sure it's quickened, but you don't want to take up too much time,

40:09 I'd say just run it a couple less at a time.

40:11 As short man, when we're writing tests and stuff, we do like 100 or 1,000.

40:15 Because that also gives it time to actually adapt to the actual data that you're sending.

40:19 Because it's not enough to just quicken it, then you'll just have a bunch of adaptive instruction.

40:23 They actually have to see something.

40:24 Yeah, well, now we're paying attention.

40:25 Like, great.

40:26 You need to have something to pay attention to, right?

40:29 Yeah, and you'll see that too.

40:30 Because if you have any sort of logic flow inside of your function, when you're looking at the bytecode, any paths that are hit will be specialized,

40:38 but any paths that aren't obviously won't because we don't specialize dead code.

40:42 So it has a bit of a code coverage aspect, right?

40:46 You can think about it like that.

40:47 If you look at it, you might see part of your code and it's just, it's unmodified because whatever you're doing to it didn't hit this else case ever.

40:55 Yeah, well, and that's what's really exciting about this too, is when you're, if you run your code a bunch of times and then you call this on it,

41:03 you see a lot of information that would potentially be useful if you were,

41:07 for example, JIT compiling that function.

41:09 You see at every addition site, you're adding floats or ints.

41:14 You see at every attribute load site, whether it's a slot or not, you see where the dent code is, you see where the hot code.

41:19 All that stuff is sort of getting back to what I said, how this sort of enables us to build upon in the future.

41:25 Not only can we add more specializations or specialize more op codes or,

41:29 you know, do that more efficiently, we can also use that information to kind of infer additional

41:35 properties about the code that are useful for, you know, other faster, lower representation.

41:40 Yeah, I can certainly see how that might be.

41:42 Might inform some kind of JIT engine in the future.

41:45 Yeah.

41:46 I think the PEP is interesting.

41:48 The PEP 659, people can check that out.

41:50 But like you said, it's informational.

41:52 So it's not really the implementation exactly.

41:54 Yeah.

41:55 So let's talk a bit about your project that you did.

41:59 In addition to just being on the team, the personal project that you did,

42:03 that basically takes all of these ideas we've been talking about and says, well, two things.

42:08 One, can I take code and look at that and get that answer?

42:12 Again, kind of back to my code coverage example, like coloring code lines to mean stuff.

42:16 And then what's interesting about this, and we'll talk to this example that you put up,

42:20 is there's actionable stuff you could do to make your code faster if you were in a super

42:25 tight loop section.

42:26 Like I feel like applying this visualization could help you allow Python to specialize more

42:32 rather than less.

42:33 Yeah.

42:34 I mean, in general, this tool is really useful for us as maintainers of the specialization

42:40 stuff because we get to see, you know, where we're failing to specialize.

42:44 Because ideally, you know, if we've done our job well, you should get past your Python

42:48 without changing your code at all.

42:49 First and foremost, this is a tool for, you know, us in our work so that we can see, okay,

42:54 what can we still work on here?

42:56 But that is sort of a cool knockout effect is that if you do run on your code, you also

43:01 know where it's not specializing.

43:02 And if you know enough about how specialization works, you may be able to fix that.

43:06 But I would say a word of caution against, you know, like getting two in the weeds and

43:12 trying to get every single bytecode to do what you want, because that's, there are much

43:16 better ways of making it faster, right?

43:18 There are places where you're like, these two functions, I know we got 20,000 lines

43:22 of Python, but these two, which are like 20 lines, they are so important.

43:27 And they happen so frequently.

43:28 Anything you can do to make them faster matters.

43:31 You know, people rewrite that kind of stuff in Rust or in Cython.

43:34 Before you go that far, maybe adding a dot zero on the end of a number is all it takes.

43:40 You know, something like that, right?

43:41 That's kind of what I'm getting at.

43:42 Not to go crazy or try to think you should mess with the whole program.

43:45 But there are these times where like five lines matter a lot.

43:49 Yeah.

43:50 Okay.

43:50 Well, tell us about your project specialist.

43:53 One really cool thing that our specializing adaptive interpreter does is we've worked really

43:59 hard to basically make it easy for us to get information about how well specializations

44:03 are working.

44:04 So you can actually compile Python.

44:06 It'll run a lot slower, but you can compile Python with this option with PyStats.

44:11 And that basically dumps all of the specialization stats.

44:14 Yeah.

44:15 And you actually pointed out that you can, in the faster CPython idea section, it like lists

44:21 out like a...

44:22 Yeah.

44:22 So there's tons of counters.

44:23 So you can see that when we run our benchmarks, you know, load adder instance value is run

44:28 what, 2 billion times, almost 3 billion times.

44:30 And it misses its assumptions, 1% of those.

44:34 And yeah.

44:35 And so there's tons of these counters that you can dump.

44:37 And that's really cool because we can run our performance benchmarks and see how those numbers

44:41 have changed.

44:41 And even cooler than that, it allows us kind of separately to, for example, there's been

44:47 at least one case where we've worked with a large company that has a big private internal

44:51 app and they can run it using Python 3.11 and we can get these stats without actually looking

44:56 at the source code, which is really cool.

44:57 And so we want to help you be faster and we're working on the runtime, but we don't want you

45:03 don't want to show us your code.

45:04 And so we're not going to look at it.

45:05 And so those stats are really useful for kind of knowing, okay, 90% of my attribute access

45:10 were able to be specialized.

45:11 What about the remaining 10%?

45:13 Where are they?

45:14 So, you know, an additional question, like why couldn't they be specialized?

45:17 And that's something that's a lot easier to tell when you're looking at the code.

45:21 And so this tool was basically my original intention for writing it is, you know, once we get beyond,

45:28 you know, seeing the stats for benchmark and we run something on it, that makes it easy to

45:32 tell at a glance where we're failing and how.

45:36 Right.

45:36 It's like saying you have 96% code coverage versus these two lines are the ones that are not

45:41 getting covered.

45:42 Exactly.

45:43 You get a lot more information from actually getting those line numbers than from the 97%.

45:47 And so basically the way it works, we already talked about how in the disk module, you can

45:54 see which instructions are adaptive or specialized.

45:57 And all that this tool does, it visualizes.

46:00 Literally the implementation of this tool is just a for loop over this, where we just kind

46:08 of categorize the different instructions and then map those to colors and all sorts of crazy

46:12 concepts.

46:12 Yeah.

46:12 Yeah.

46:12 And for people listening, you know, imagine some code and here you've got a for loop with

46:18 the tuple decomposition.

46:20 So you got for I comma T in enumerative text that are going to do some stuff.

46:24 And it has the I and the T turned green, but then some dictionary access turned yellow.

46:30 And it talks about, is it not at all specialized?

46:33 Is it, did it try but fail to get specialized?

46:37 And things like that, right?

46:38 Yep.

46:38 Yeah.

46:39 So green indicates successful specializations.

46:42 Red are those adaptive instructions that are slightly slower and represent missed specializations.

46:47 Yellow and orange are kind of a gradient.

46:50 As we talked about, you know, one line of Python code easily be 10 or 20 byte code instruction.

46:56 Right.

46:56 Yeah.

46:57 Yeah.

46:57 It's kind of a way of compressing that information.

46:59 It's really.

47:00 And one thing I want to point out about this too is, you know, you'll notice it's actually,

47:06 you see characters within a line.

47:08 And this is something that's really cool because this is piggybacking on a feature that's completely

47:12 unrelated to specialization.

47:13 Originally, when I was writing this, it highlighted each line.

47:18 So you could see this line was kind of green or this line was kind of yellow.

47:21 But then I remembered, maybe you're familiar, in Python 3.11, we have really, really formative

47:29 tracebacks where it will actually underline the part of the code that has a syntax error that

47:34 has an exception that was raised or something.

47:37 And so that's the PEP that's linked first in the description there.

47:41 It's, you know, called fine-grained error locate or fine-grained locations tracebacks.

47:46 And so what that means is that previously we just had line number information in the bytecode,

47:51 but now we have line number and end line number and start column and end column information,

47:56 which means that due to this completely unrelated feature, we can now also map it directly to which

48:01 characters in source file correspond to individual bytecode.

48:04 That's super cool.

48:05 Yeah.

48:05 So for example, here we've got a string and you're accessing it by element, by passing an index,

48:12 and you were able to highlight the square brackets as a separate classification on that array indexing

48:19 or that string indexing.

48:20 Exactly.

48:21 So it wouldn't have been as helpful to just see that that line was kind of yellow-orange-ish.

48:25 You know, we see that the fast variable store was really, really quick.

48:29 We see that, you know, the modulo operation and the indexing of string by int wasn't that fast.

48:37 We weren't able to specialize it, but, you know, we were able to specialize, you know, the lookup of the name TILEN.

48:46 We weren't able to specialize the function column.

48:47 So that sort of information, that granularity is really, really cool.

48:51 Yeah, it is super cool.

48:52 And I think a good way to go through this, you know, you've got some more background that you write about here,

48:56 but we've already talked a lot about specializing.

48:58 Yeah, this is just summarizing the pet piece.

48:59 Yeah, exactly.

49:00 People can check it out there if they want the TILEN, the too-long-didn't-read version.

49:04 But you've got this really nice example of, you know, what may be in the first few weeks of some kind of Python programming class.

49:11 Write a program that converts Fahrenheit to Celsius and Celsius to Fahrenheit,

49:16 and then just tests that, you know, round-tripping numbers gives you basically the same answer back within floating-point variations, right?

49:24 Yeah, I really like this example because it, you know, as we'll show, it's kind of presented through the lens of performance optimization,

49:31 but it also does a good job of showing you kind of how the interpreter works and how those little tweaks kind of cascade.

49:37 Mm-hmm, absolutely.

49:38 And it highlights certain things you can take advantage of that, you know, if you just slightly change the order,

49:44 it actually has a different runtime behavior, which we don't often think about in Python.

49:48 We're doing C++.

49:49 We would maybe debate, do you dereference that pointer first, or can you do it in the loop?

49:53 Yeah.

49:54 But not so much here.

49:56 So let's go through, I mean, I guess just to remind people, Fahrenheit to Celsius, you would say X equals F minus 32,

50:03 and then you multiply the result once you've shifted the zero by five divided by nine,

50:08 and the reverse is you multiply the Celsius by nine divided by five, and then you add the 32 to shift the zero again.

50:15 And basically that's all there is to it.

50:17 And then you go through and say, well, let's run the specialist on it to get its output.

50:21 And maybe talk about this first variation that we get here.

50:24 Yeah.

50:24 So as we were talking about, you know, the red indicates is adaptive instructions, and the green indicates the specialized instruction.

50:32 So we can see here that some things were specialized very well.

50:35 For instance, look at a cert roundtrip.

50:37 You know, that's great green.

50:38 Because it's in that hot loop, we got enough information about it to say, okay, a cert roundtrip is being loaded from the global namespace.

50:47 And it's a Python function.

50:48 So we can do that cool Python to Python call optimization.

50:53 And, you know, that's basically as fast as any function call in Python is going to be.

50:58 But some things aren't specialized.

51:01 So the things that jump out, you know, the things that we may want to actually take a closer look at would be the map, which is, you know, yellow and red.

51:08 So, for instance, we can, the loads of the local variable f in that first function and the load of the constant 32, those are yellow because the map that they're involved in, the subtraction operation, wasn't specialized.

51:23 But the individual loads of those instructions were specialized.

51:26 I see.

51:26 So half yes, half no for what they were involved in.

51:29 Yeah, yeah.

51:29 Green plus red equals yellow.

51:31 So, yeah.

51:33 So that subtraction wasn't specialized.

51:35 And the reason is, is in 3.11, just based on heuristics that we gathered, we determined it was worth it, at least for the time being, to optimize int plus int, float plus float, but not int plus float, float plus int.

51:48 And so what we're doing here is we're subtracting a float and an int.

51:52 And so we're able to see that that isn't specializable.

51:55 But if we were to somehow change that so that it was two floats, two ints, then it would be specializable.

52:01 Right.

52:01 Because when I look at it, it looks like it absolutely should have been specialized.

52:04 I have a float minus an int.

52:07 The int is even a constant.

52:08 Like you're, okay, well, this is standard math.

52:13 And it's always a float and it's always an int and it's always subtraction.

52:16 Right.

52:16 It seems like that should just, well, the math should be obvious and fast.

52:20 But because, as you pointed out, there's this peculiarity or maybe an implementation detail for the moment.

52:26 Absolutely an implementation detail.

52:27 Yeah, yeah, yeah.

52:28 Yeah.

52:28 If it's an int and a float, well, right now that problem is not solved.

52:33 Maybe it will be in the future, right?

52:34 It seems like pretty low hanging fruit, but you've got all the variations, right?

52:37 Yeah.

52:38 Specializations aren't free.

52:39 So for instance, like when we're running those adaptive instructions, we need to check for all of the different possible specializations.

52:46 So every time we add a new specialization that has some cost.

52:50 And basically we determined, at least for the time being, like I said, that we've tried to do int plus float and float plus int.

52:57 At least based on the benchmarks that we have and the code that we've seen, it just isn't worth it.

53:02 Sure.

53:02 Okay.

53:03 Yeah.

53:03 Yeah.

53:04 And it's something like int plus int is very easy to do quickly.

53:08 Float plus float is very easy to do quickly.

53:10 Int plus float, there's some coercion that needs to happen there.

53:13 Right.

53:14 So it's already constant anyway.

53:15 Yeah.

53:16 Yeah.

53:16 So it's not something that, it's something that caught some time to check, but we don't have a significantly faster wave.

53:23 It doesn't happen on a register on the CPU or something.

53:26 Yeah, exactly.

53:27 Okay.

53:28 So then you say, well, look what the problem is.

53:31 It's float in int where we have f minus 32, which seems again, completely straightforward.

53:36 What if they're both floats?

53:38 Well, what if like, you know, it's going to end up a float in the end anyway.

53:41 How about make it 32.0 instead of 32?

53:44 Yep.

53:44 And then bam, that whole line turns green, right?

53:47 Yep.

53:47 Yeah.

53:47 So now you're basically doing that entire line using fast local variables and look like native floating point operation.

53:55 You're just adding literally two C doubles together, which is.

53:59 Yeah.

53:59 And this is what I was talking about where you could look at that line and go, oh, well, I just wrote the integer 32, but I'm doing floating point math.

54:07 It's not like I'm doing integer math.

54:08 So if you just put, you know, write it as a constant with a zero, you know, dot zero on it, that's a pretty low effort change.

54:15 And here you go.

54:16 Python can help you more and go faster, right?

54:18 Yeah.

54:19 And that's not a transformation that we can do for you.

54:21 Because if F is an instance of some user class that defines dunder, so that would be a visible change if it started receiving a float as a right hand argument.

54:30 So those are things that we can't do while making the language still.

54:34 Right.

54:35 But your specialist tool can show you.

54:38 And again, figure out where your code is slow and then consider whether they're just like, well, we only got 100,000 lines.

54:44 Let's who's assigned to specializing today.

54:47 Yeah.

54:47 And it also requires, this is a simpler example, but it does require, you know, a somewhat deep knowledge of how the specialization work.

54:55 Because for things other than binary operation, it's not going to become clear what the fix is.

55:01 You just see kind of where the, I hesitate to even call it a problem, but you see where there's the potential for improvement, but not necessarily how to improve.

55:08 Yeah.

55:08 And then you have another one in here that's, I think, really interesting because it, so often when we're talking about math and at least commutative operations, it doesn't matter which order you do them in.

55:19 Like five times seven times three.

55:21 If it's the first two and then the result or multiply the last two.

55:25 And, you know, unless there's some weird floating point edge case that, you know, the IEEE representation goes haywire.

55:31 It doesn't matter.

55:31 Right.

55:32 And so, for example, here you've got, you know, to finish off the Fahrenheit to Celsius conversion, it's the X times five divided by nine.

55:40 And that one is busted too for the same reason, right?

55:43 Because it's a five.

55:43 Yeah.

55:44 Because, so this is kind of for two reasons.