Robust Python

Episode Deep Dive

Guest introduction and background

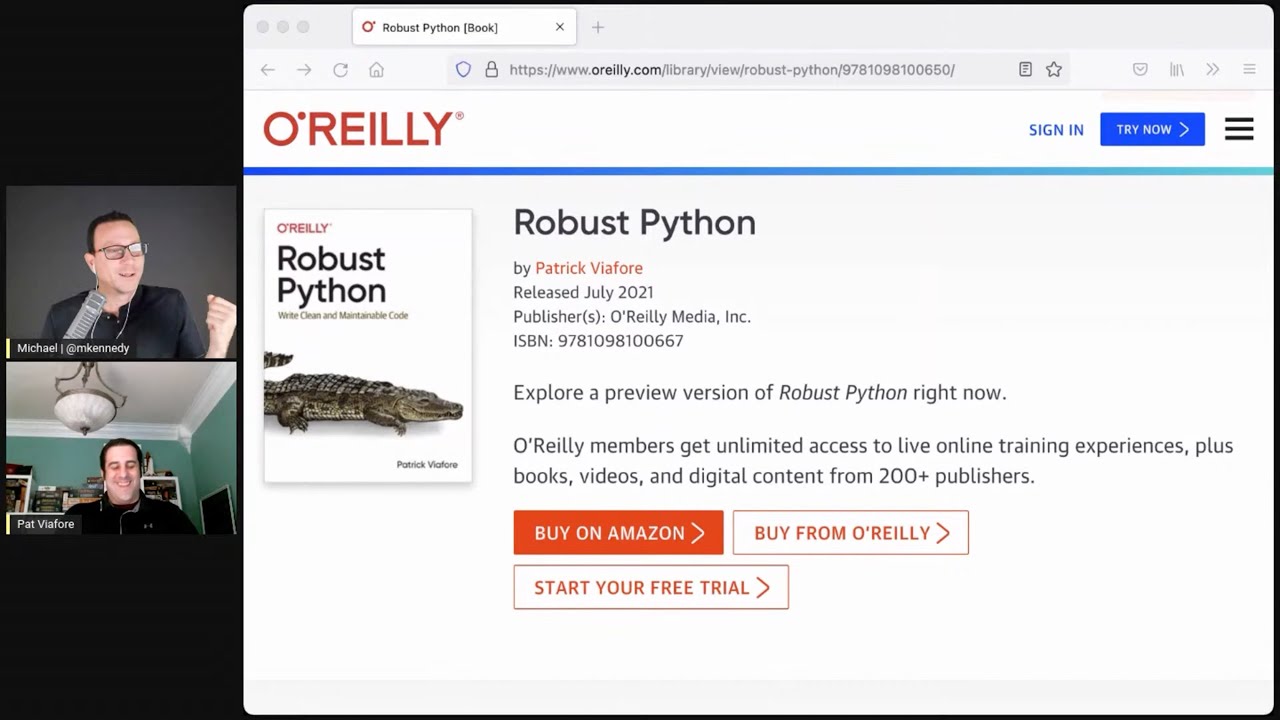

Patrick (Pat) Viafore is a seasoned software developer currently working at Canonical (the company behind Ubuntu) on its public cloud team. He has a background in C++ but fell in love with Python for its simplicity and flexibility. Patrick authored the book Robust Python (published by O’Reilly), inspired by his passion for writing maintainable, sustainable, and expressive Python code. In the episode, he shares insights on how to keep Python codebases healthy over time, integrate typing practices in a balanced way, and communicate effectively with future developers of your software.

What to Know If You're New to Python

If this episode is your first deep dive into Python code quality and maintainability, here are some helpful pointers before you jump in:

- Dynamic vs. Typed: Understand that Python is primarily a dynamic language but supports optional static typing (type hints, checked by tools such as mypy) for clarity and tooling.

- Data Structures: Familiarity with dictionaries, sets, lists, and data classes will help you follow the examples about “choosing the right container” and structuring data.

- Code as Communication: Python places a strong emphasis on readability (a core reason for its popularity), so good naming and minimal “surprises” are highly valued.

- Incremental Refactoring: You don't have to fix everything at once. Often, improving just the critical sections of your code can yield big benefits.
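To make the “Dynamic vs. Typed” point concrete, here is a minimal sketch of optional typing in practice. The USERS table and both functions are hypothetical. The Optional[str] return type documents that a lookup can fail, and a checker such as mypy would report an error if a caller used the result without first handling None. At runtime, Python ignores the annotations entirely.

```python
from typing import Optional

# Hypothetical in-memory lookup table, for illustration only.
USERS = {1: "alice", 2: "bob"}


def find_username(user_id: int) -> Optional[str]:
    """Return the username, or None if the id is unknown.

    Optional[str] tells readers (and mypy) that None is a legitimate
    result the caller must handle.
    """
    return USERS.get(user_id)


def greeting(user_id: int) -> str:
    name = find_username(user_id)
    # Without this check, mypy flags name.title() because name may be None.
    if name is None:
        return "Hello, stranger!"
    return f"Hello, {name.title()}!"
```

Because the hints are optional, you can add them to one function at a time, which is the gradual approach Pat recommends.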

Key points and takeaways

- Sustaining Code for the Long Run

A central theme of the episode is writing code that remains maintainable and adaptable over time. Pat emphasizes that software engineering is more than short-term programming—it’s “programming integrated over time.” Sustainable code involves balancing immediate feature delivery with the need to keep things extensible and readable.


- Types as a Communication Tool

Python’s optional static typing can help avoid many common errors (like NoneType issues) and enhance editor tooling for code completion. But it’s not all-or-nothing: Pat recommends using types where you see recurring bugs or where clarity is most critical. Gradual typing lets you keep Python’s speed of development while reducing error-prone sections.

- The Principle of Least Surprise

One of Pat’s best practices is keeping your function, class, and variable names clear and consistent with what they do. If something is called a “getter,” it shouldn’t change state or have hidden side effects. Violating these expectations forces every future maintainer to spend extra time investigating code they can’t trust at face value.

- Links & Tools:

- Python Enhancement Proposals (peps.python.org) (See code style references)
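The “getter that sets” bug Pat describes in the episode can be sketched in a few lines. EventTracker and its methods are hypothetical names chosen for illustration: get_event reads like a harmless getter but silently advances the queue, while peek_event and advance_event let the name announce whether state changes.

```python
from typing import Optional


class EventTracker:
    """Hypothetical class contrasting a surprising getter with clearer names."""

    def __init__(self, events: list[str]) -> None:
        self._queue = list(events)
        self.current_event: Optional[str] = None

    def get_event(self) -> Optional[str]:
        # Surprising: reads like a plain getter, but it also pops the queue
        # and overwrites current_event (a hidden side effect).
        if self._queue:
            self.current_event = self._queue.pop(0)
        return self.current_event

    def peek_event(self) -> Optional[str]:
        # Unsurprising: a pure read with no side effects.
        return self.current_event

    def advance_event(self) -> Optional[str]:
        # Same state change as get_event, but the name now says so.
        if self._queue:
            self.current_event = self._queue.pop(0)
        return self.current_event
```

Calling peek_event twice always returns the same value; calling get_event twice does not, which is exactly the kind of trap that cost Pat two days of debugging.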


- Moving Beyond Dictionaries for Heterogeneous Data

Using a dict to represent many different fields (e.g., 'name', 'age', 'date_of_birth') can create confusion. Converting these to a data class or a Pydantic model makes your intent explicit and easier to type-check. Distinguish between dictionaries for “lookup by key” and data classes for “grouped data with fixed fields.”

- Incremental Refactoring and the Boy Scout Rule

Rather than striving for “perfect code” in a single pass, a more realistic approach is to leave the codebase “cleaner than you found it.” Each time you modify or review code, fix small inconsistencies, add a couple of type annotations, or simplify logic. Over time, these tiny improvements can significantly enhance maintainability and reduce technical debt.

- Links & Tools:

- Working Effectively with Legacy Code (amazon.com) (Mentioned in conversation)


- Data-Backed Conversations with Management

Technical debt and code quality can seem abstract to non-developers. Pat discussed using concrete data, like the number of NoneType errors in production or how many times a bug reappears, to demonstrate the value of spending time on better testing, type hints, or refactoring. Show the cost in real terms (e.g., lost customers, extra support hours).

- Links & Tools:

- cProfile (docs.python.org) for performance measurement
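One way to gather the kind of numbers Pat mentions is to tally error types from production logs. This is a minimal sketch under loud assumptions: the log format, the sample LOG_LINES, and the count_errors helper are all hypothetical, and a real system would more likely pull these counts from an error tracker or log aggregator.

```python
import re
from collections import Counter
from typing import Iterable

# Hypothetical log lines; the format is an assumption for illustration.
LOG_LINES = [
    "2021-08-30 10:01:02 ERROR AttributeError: 'NoneType' object has no attribute 'id'",
    "2021-08-30 10:05:13 INFO request ok",
    "2021-08-30 11:17:45 ERROR KeyError: 'date_of_birth'",
    "2021-08-30 12:40:09 ERROR AttributeError: 'NoneType' object has no attribute 'name'",
]

# Captures the exception type and the message that follows it.
ERROR_PATTERN = re.compile(r"ERROR (\w+): (.*)")


def count_errors(lines: Iterable[str]) -> Counter[str]:
    """Tally errors by type, bucketing NoneType failures separately."""
    counts: Counter[str] = Counter()
    for line in lines:
        match = ERROR_PATTERN.search(line)
        if not match:
            continue  # not an error line
        exc_type, message = match.groups()
        # NoneType failures are the class of bug type hints plus mypy
        # are meant to catch before release.
        if "'NoneType'" in message:
            counts["NoneType"] += 1
        else:
            counts[exc_type] += 1
    return counts
```

For the sample lines, this reports two NoneType errors and one KeyError, a number you can track week over week as type hints are added.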


- Don’t Gold Plate Early

While robust code matters, Pat cautions that you shouldn’t hinder your minimum viable product (MVP) or initial release by over-engineering. Strike a balance: shipping features is still a priority. Many robust practices, like adding tests or type hints, can be phased in once you’ve validated that the feature itself is valuable.

- Links & Tools:

- pytest (pytest.org) for incremental testing
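Phasing tests in can be as small as one file with a couple of checks once a feature has proven its worth. In this sketch, apply_discount and the file name test_pricing.py are hypothetical. pytest discovers functions whose names start with test_ and reports plain assert failures with helpful detail, so no extra boilerplate is needed.

```python
# test_pricing.py (hypothetical file name). Run with: pytest test_pricing.py


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical feature code: return price reduced by percent, to cents."""
    return round(price * (1 - percent / 100), 2)


def test_apply_discount_basic() -> None:
    assert apply_discount(100.0, 15) == 85.0


def test_apply_discount_zero_percent_is_identity() -> None:
    assert apply_discount(19.99, 0) == 19.99
```

More cases (invalid percentages, rounding edges) can be added later, each time the code is touched.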


- Performance vs. Maintainability

Optimizing the wrong part of your code often leads to tangled, hard-to-read solutions. Always measure before you optimize, whether that’s using built-in Python profiling tools or logging-based metrics. If a code path is rarely called or can run asynchronously, it may not be a pressing place to introduce complexity.

- Links & Tools:

- cProfile (docs.python.org)

- SnakeViz (jiffyclub.github.io/snakeviz) for visualizing profile results
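“Measure before you optimize” can look like the sketch below, which uses the standard library’s cProfile and pstats modules. The handle_request and slow_sum_of_squares functions are hypothetical stand-ins for a real code path. Sorting by cumulative time shows which call actually dominates before you decide what, if anything, to rewrite.

```python
import cProfile
import io
import pstats
from typing import Callable


def slow_sum_of_squares(n: int) -> int:
    # Deliberately naive loop so it stands out in the profile.
    total = 0
    for i in range(n):
        total += i * i
    return total


def handle_request() -> int:
    # Hypothetical handler: only one of its calls is expensive.
    return slow_sum_of_squares(200_000) + len("cheap work")


def profile_top(func: Callable[[], object], limit: int = 5) -> str:
    """Run func under cProfile; return the top entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    func()
    profiler.disable()
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(limit)
    return buffer.getvalue()


if __name__ == "__main__":
    print(profile_top(handle_request))
```

To inspect the same data visually, you could call profiler.dump_stats("out.prof") and open the file with snakeviz out.prof.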


- Extensibility via Plugins

When multiple features have similar mechanics but different “policies,” a plugin system can simplify how new logic gets added over time. Pat mentioned Stevedore (docs.openstack.org) for plugin development in Python. This pattern helps break code into smaller, easier-to-understand components.


- APIs, Duck Typing, and Liskov

Python’s “duck typing” means an object’s suitability is determined by what methods it supports rather than its class. Concepts like the Liskov Substitution Principle (from the SOLID guidelines) still apply: if a function claims to work with any “file-like object,” your “substitute” class should behave like one. Embracing Python’s dynamic nature is easier when the code states its behavior clearly.
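typing.Protocol lets you state a duck-typed contract explicitly, so a checker can verify that substitutes really “behave like a file.” In this sketch, SupportsRead, count_lines, and UpperCaseReader are hypothetical names. UpperCaseReader never inherits from anything, yet it satisfies the protocol because it has a compatible read method.

```python
import io
from typing import Protocol


class SupportsRead(Protocol):
    """Structural type: any object with a compatible read() qualifies."""

    def read(self, size: int = -1, /) -> str: ...


def count_lines(source: SupportsRead) -> int:
    """Count newline-terminated lines in anything that reads like a text file."""
    return source.read().count("\n")


class UpperCaseReader:
    """Hypothetical substitute that keeps read()'s contract: it returns str
    and honors size, so count_lines cannot tell it apart from a real file."""

    def __init__(self, text: str) -> None:
        self._buffer = io.StringIO(text.upper())

    def read(self, size: int = -1) -> str:
        return self._buffer.read(size)
```

A substitute that returned bytes, or ignored size, might still “quack,” but it would break callers that rely on the contract, which is the Liskov point in practical terms.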


Interesting quotes and stories

- “Software engineering is programming integrated over time.” – Quoted from Titus Winters, highlighted by Pat to emphasize the importance of maintainability.

- “I thought I was writing a technical book, but I ended up writing a book about empathy.” – Pat on how maintainable code really focuses on making life easier for other (future) developers.

- “The more trust you build into your code, the faster you can work.” – On how principle of least surprise and consistent data structures help teams add features quickly.

Key definitions and terms

- Duck Typing: A style of dynamic typing where the object’s current set of methods and properties determines its valid usage, rather than its class or type name.

- Mypy: A static type checker for Python. It analyzes your code’s type hints to catch errors before runtime.

- Data Class: A Python 3.7+ feature that automatically generates special methods (like __init__ and __repr__) based on class attributes, making it easier to store structured data.

- Technical Debt: The implied cost of additional rework created by choosing a quick or easy solution now, instead of using a better approach that might take longer initially.
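The Data Class definition, and the earlier takeaway about moving beyond dictionaries for heterogeneous data, can be illustrated side by side. Person and the sample values are hypothetical. The dataclass turns implicit dictionary keys into named, typed fields, while a plain dict remains the right choice for lookup by key.

```python
from dataclasses import dataclass
from datetime import date

# Heterogeneous dict: keys and value types are implicit, so a typo such as
# person_dict["dob"] only fails at runtime with a KeyError.
person_dict = {"name": "Ada", "age": 36, "date_of_birth": date(1815, 12, 10)}


@dataclass(frozen=True)
class Person:
    # Fixed, named fields whose types mypy and editors can check.
    name: str
    age: int
    date_of_birth: date


# __init__, __repr__, and __eq__ are generated from the fields above.
person = Person(name="Ada", age=36, date_of_birth=date(1815, 12, 10))

# A dict is still the right container for "lookup by key" across many items.
people_by_name: dict[str, Person] = {person.name: person}
```

frozen=True is an optional design choice here: it makes instances immutable, which removes one more source of surprise for future readers.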

Learning resources

If you want to dive deeper into some of the themes from this episode, here are a few curated resources:

- Python for Absolute Beginners: A comprehensive introduction for learning Python language fundamentals and best practices.

- Rock Solid Python with Python Typing: For a deeper dive into how type hints can make your code more reliable and maintainable.

- Build An Audio AI App: This course covers real-world application design with Python, using frameworks like FastAPI and Pydantic, both closely related to topics from the show.

Overall takeaway

Maintaining a robust Python codebase goes far beyond just writing code that works today. It’s about empathy for your future self and teammates, choosing data structures that convey intent, and adopting incremental improvements like selective type hints. When you use Python’s power carefully—from dictionaries for fast lookups to data classes for clearer models—you’ll deliver features without sacrificing speed or adaptability. Ultimately, robust Python is about creating a positive experience for everyone who encounters your code, now and in the years to come.

Links from the show

Robust Python Book: oreilly.com

Typing in Python: docs.python.org

mypy: mypy-lang.org

SQLModel: sqlmodel.tiangolo.com

CUPID principles @ relevant time: overcast.fm

Stevedore package: docs.openstack.org

Watch this episode on YouTube: youtube.com

Episode #332 deep-dive: talkpython.fm/332

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript


00:00 Does it seem like your Python projects are getting bigger and bigger?

00:02 Are you feeling the pain as your code base expands and gets tougher to debug and maintain?

00:07 Patrick Viafore is here to help us write more maintainable, longer-lived, and more enjoyable

00:12 Python code.

00:13 This is Talk Python To Me, episode 332, recorded August 30th, 2021.

00:19 Welcome to Talk Python To Me, a weekly podcast on Python.

00:35 This is your host, Michael Kennedy.

00:37 Follow me on Twitter, where I'm @mkennedy, and keep up with the show and listen to past

00:41 episodes at talkpython.fm.

00:43 And follow the show on Twitter via @talkpython.

00:46 We've started streaming most of our episodes live on YouTube.

00:50 Subscribe to our YouTube channel over at talkpython.fm/youtube to get notified about upcoming

00:55 shows and be part of that episode.

00:57 This episode is brought to you by Clubhouse, soon to be known as Shortcut, by masterworks.io,

01:03 and the transcripts are brought to you by assembly.ai.

01:07 Please check out what they're offering during their segments.

01:09 It really helps support the show.

01:10 Pat, welcome to Talk Python To Me.

01:13 Thank you.

01:14 I'm so honored to be here.

01:15 Long-time listener.

01:16 Oh, that's fantastic.

01:17 I really, really appreciate that.

01:18 It's an honor to have you here.

01:20 We're talking about one of these subjects that I really enjoy.

01:24 I feel like it's one of these more evergreen type of topics.

01:28 You know, it's super fun to talk about the new features in Flask 2.0, but that's only relevant

01:34 for so long and for so many people.

01:35 But writing software that's reliable, that can be changed over time, doing so in a Pythonic

01:41 way, that's good stuff to learn no matter where you are in your career.

01:45 Oh, I absolutely agree.

01:47 You've written a book called Robust Python, which caught my interest.

01:51 I, as you can imagine, get a couple of books and ideas sent to me every day.

01:57 And so they don't usually appeal to me.

02:00 But this one really does, for exactly the reasons I stated in the opening.

02:04 And we're just going to sort of riff on this more broad idea of what is robust Python?

02:09 What is clean code?

02:11 Like, how do you do this in Python and make it maintainable for both you in the future

02:15 and other people and so on?

02:16 Yeah, sounds good.

02:17 Before we get to that, though, let's start with you.

02:20 What's your story?

02:21 So I got into programming like many males my age.

02:25 I loved video games as a kid.

02:26 And I was 12 or 13.

02:29 And some ad in one of my video game magazines caught my eye for video game creator studio.

02:34 Oh, nice.

02:35 And it was this C++, just C++ engine, but it provided easy ways to do sprites and animations

02:42 and particle effects.

02:43 And they gave you step-by-step instructions.

02:46 Here's how you build Pong.

02:47 Here's how you build Frogger.

02:49 And here are the assets.

02:50 I started riffing on it.

02:51 I really came to love it.

02:52 I then took a programming class in high school, True BASIC, which I don't remember much of other

02:59 than I don't think I'd ever go back to it now.

03:01 But it interested me enough that I decided to study computer science in college.

03:06 I learned more about the video game industry, especially some of the hours they work and said,

03:10 you know what, maybe the video game industry is not for me, but I still loved programming

03:15 at its heart.

03:15 So I continued on and just found a few jobs after college and just went from there.

03:20 As far as Python went, I worked in telecom for a little bit.

03:24 And early 2010s, we had some test software.

03:27 It was all written in the Tcl programming language.

03:30 And that is an interesting programming language.

03:33 As far as types go, everything's treated as a string.

03:36 And it led to some very unmaintainable code.

03:39 So a few sharp engineers and myself, we decided, you know, what would it look like if we wrote

03:43 this in Python?

03:43 And a whole test automation system was born from there.

03:46 And we just went, wow, Python makes everything nicer.

03:50 Like, this is so much more pleasurable to work with.

03:52 And I've done like C++ and Java before then.

03:55 So Python was this breath of fresh air.

03:57 And I just fell in love with the language.

03:59 Yeah.

03:59 Those are both very syntax symbol heavy languages.

04:03 I've done a ton of C++.

04:05 I did professional C++ development for a while.

04:07 I have never done much Java.

04:09 But yeah, they're really interesting.

04:12 I remember when I came to Python, I came from C#, but also from C++.

04:16 And just in my mind, those symbols, all the parentheses on the if statements and the semicolons

04:23 at the end of the line and all that business, it was just required.

04:26 That's just what languages needed to be.

04:28 And then they weren't there.

04:30 And I'm like, I just, I'm having a hard time with this.

04:32 Right.

04:33 And I felt it was weird.

04:34 But then when I went back just to work on like a project that was still ongoing, it seemed

04:39 more weird.

04:39 I'm like, wait a minute.

04:40 My eye, you know, like the wool has been taken off my eyes.

04:43 I know now these are not required and still here I am continuing to write them.

04:48 And it just drove me crazy once I realized I don't have to put these things here.

04:52 It's just this language that makes me.

04:53 And so, yeah, it was really nice.

04:55 Yeah.

04:55 Yeah.

04:56 Python kind of captured that magic for me.

04:58 And it's funny because going back to C++ every once in a while, my C++ got a lot better

05:04 because of Python.

05:04 You start focusing on the simple.

05:06 That's nice.

05:07 Yeah.

05:07 Yeah, exactly.

05:08 You're not trying to flex with your double pointers and all that kind of stuff.

05:11 It's like, we don't need this.

05:13 Let's not do this.

05:14 Come on.

05:14 Very cool.

05:15 So how about now?

05:15 What are you doing?

05:16 So right now I am a software engineer at Canonical.

05:18 I'm on their public cloud team.

05:20 So we're building the Ubuntu operating system for public clouds, AWS, Azure, GCE, a couple others.

05:27 And so we customize the images for clouds, work with a lot of other Canonical teams to make sure that their changes are reflected in our software.

05:36 We maintain the CI/CD pipelines to deliver those as often as we can.

05:41 Most of the tooling is in Python, so I get to use Python day to day, which makes me happy.

05:45 Oh, that's really cool.

05:46 What kind of features are we talking about here?

05:49 Or aspects?

05:50 Yeah, general hardware enablement.

05:52 Clouds may have new instances, new processor types.

05:56 Working to make sure that that's enabled.

05:58 Do a lot of close work with our kernel team.

06:02 Features that the cloud themselves might request.

06:05 Maybe, you know.

06:06 Like something the cloud needs to set up and maintain the VM aspect.

06:10 Yeah.

06:11 Okay.

06:11 More on the infrastructure side.

06:13 Maybe how do they hibernate their VMs?

06:16 Okay.

06:16 How do they improve boot speed?

06:18 Those sort of changes.

06:19 Yeah.

06:19 Or how do they improve the security profile?

06:21 That's something I'm working on right now with some of our clouds.

06:23 Yeah.

06:24 Super, super important.

06:25 Yeah.

06:26 That's part of the promise to go into the cloud is often there's better security and better durability.

06:31 But if it fails, it fails for potentially all of some big chunk of the internet, right?

06:36 Like, the consequence of failure is also higher.

06:39 So.

06:39 Yeah.

06:39 Yeah.

06:39 You have to be very aware of how many people are using your software when you deploy to the cloud.

06:44 Does it make you nervous to work on that kind of stuff?

06:46 Oh, absolutely.

06:48 Yeah.

06:48 I wouldn't, I'd be lying if I said it didn't, but you, you learn to be careful.

06:52 You learn to really focus on making everyone, you know, having good communication between your teams, making sure there's no surprises for anyone, that sort of thing.

07:01 I remember that as well, just working on certain things.

07:04 Like the first e-commerce system I wrote, I'm like, this thing's going to be charging thousands of dollars per transaction.

07:09 And I might screw this up.

07:11 And I'm really worried for deploying it for the whole company.

07:14 There's like big purchases people were doing.

07:16 And it made me really nervous.

07:18 But at the same time, one of the things I've come to learn over my career programming is it's one of the things that will really put a sad, sad look on your face is if you spend a lot of time and create beautiful software and no one uses it.

07:30 That's right.

07:31 Yeah.

07:32 So the, even though it may be stressful that it's getting used a lot and it's really central, that's also amazing, right?

07:37 Yeah.

07:37 But it's also made me a better programmer because you just start thinking about explicit error cases more.

07:42 At my last job, I mentioned I did telecom, and 911 calls get routed through your equipment.

07:47 You don't want to fail that.

07:49 And yeah.

07:50 Wow.

07:51 It is scary at first, but you learn to develop disciplines over time of, okay, how do we make sure we're not making mistakes?

07:57 You know, speaking of that, I think that's maybe a good segue into talking about this idea of robust Python.

08:04 First of all, like, let's just talk about your book for just a minute and then we'll just get into the general idea of it.

08:08 Like what motivated you to write this book?

08:11 So I mentioned I've done C++ development in the past.

08:13 I started diving heavily into modern C++ and how it uses a lot more type safety features than earlier versions of C++.

08:23 Did a quick dabble into Haskell just from a learning perspective and really started to love static types.

08:29 But my day-to-day language was Python.

08:31 So I really, really dove deep on, you know, how do I make my Python safer moving forward?

08:36 So I pitched to a few publishers.

08:38 I want to write a book about, you know, type system best practices in Python.

08:42 Let's talk type checkers.

08:43 Let's talk about design types and so on and so forth.

08:46 O'Reilly, who published my book, they bit and they said, oh, that sounds interesting.

08:51 Can we expand the scope a little bit more?

08:53 And I thought, okay, well, why do I write types in Python?

08:56 Why do I, you know, advocate type systems?

08:59 It's to make things more maintainable, make things live longer, to make things clearer.

09:04 And the idea of robust Python came out of that.

09:06 Well, I think it's really a pretty interesting idea.

09:09 I do think when you come from these languages like C++ or Java or something, there is something to be said for code that is literally verified to click together.

09:21 Just like a perfect jigsaw puzzle.

09:23 Yeah.

09:23 Right.

09:23 It won't catch every error, but it can catch more errors.

09:27 And that's a good thing.

09:28 Yeah.

09:28 Well, I remember how excited I was when I got my first non-trivial C++ thing to compile.

09:33 And I'm like, yes, I've done it.

09:35 Little did I know, like I was in for it.

09:37 I mean, it was out of compiler errors and into like real errors.

09:41 Yeah.

09:41 At that point.

09:42 Right.

09:42 Yeah.

09:43 The fun errors.

09:44 Right.

09:45 Yeah.

09:45 Exactly.

09:46 The why, what is it?

09:47 What is it?

09:48 Seg fault?

09:49 Whatever.

09:49 I don't know.

09:51 We're going to work on that.

09:52 So anyway, that was, that was really interesting.

09:55 I do think that it's not required, right?

09:59 I do think that there is really interesting code being written in Python.

10:02 And you can see that how it's, it's being adopted in all these ways.

10:06 The biggest, maybe example I have in mind of these two things side by side is, you know,

10:11 YouTube versus Google Video and how YouTube was in Python with 20 engineers.

10:16 Google Video was a hundred C++ engineers.

10:19 And eventually Google just said, all right, we're going to put that project aside and buy

10:22 YouTube because they just keep outrunning us in features.

10:25 Yeah.

10:25 I think that's the beauty of Python is that you can build things fast.

10:29 You can see them working faster.

10:31 And you don't have to fight the compiler as much of the way.

10:34 So it's almost like these two things are at odds.

10:36 And the answer is just Python's gradual typing.

10:38 You just type as you need to.

10:40 You add typing where it makes sense.

10:42 And I won't be prescriptive and say you need types everywhere, but there are certain places

10:47 where it makes a lot of sense and saves you money and time.

10:49 I do find it pretty interesting how they've implemented types in Python.

10:53 And to be clear, this idea of robust Python that you're focused on, types is just one part

10:58 of it, but it sounds like it was the genesis of it.

11:00 It was absolutely the genesis.

11:02 Yeah.

11:02 Yeah.

11:03 So we have sort of two other realities that we might look at.

11:08 We could look at C++ or C# or Java.

11:10 They're all the same in that regard.

11:12 They're like static compiled languages.

11:14 And then we've got something that's much closer to Python with TypeScript, where they said,

11:19 we have the same problem.

11:20 We have JavaScript, which is even less typed because the runtime types are all just weird

11:26 dictionary prototype things anyway.

11:28 And we want to make that reliable for larger scale software and integrations and stuff.

11:33 So we create TypeScript.

11:34 But the real fork in the road, I think that was interesting is the TypeScript people said,

11:39 we're going to apply the ideas of absolute strict typing.

11:43 Once you start down that path, it's all down that path.

11:46 And they have to match up.

11:47 Whereas in Python, it's like, let's help you down this path, but not give up the zen of Python,

11:54 where you can easily put things together and you're not restricted by this type system.

11:59 But if you run the right tools, be that PyCharm, VS Code, or mypy or something like that,

12:05 it'll be there to help you make it better in lots of ways.

12:08 Oh, absolutely.

12:09 I think that's a really creative and interesting aspect of it.

12:12 Yeah.

12:12 Yeah.

12:13 I absolutely agree with that.

12:15 A lot of things that I discuss throughout the book is the idea that these are tools in your toolbox.

12:22 And so I talk about types.

12:24 I talk about API design.

12:25 I talk about extensibility.

12:26 They're all tools and they shouldn't be applied everywhere.

12:30 I really want to start focusing on first principles.

12:33 Why do we do the things we do in software engineering?

12:35 And where are they most appropriate?

12:38 You had a really interesting quote in your book about software engineering.

12:42 And I've always sort of struggled to have this conversation with people like, oh, are you a programmer?

12:48 Are you a coder?

12:49 Are you an engineer?

12:50 Like, where are you on that?

12:52 Like, is that even a meaningful distinction?

12:54 And to a large degree, I've always felt like it was just sort of whatever sort of culture you're in.

12:59 You know, if you're in a startup, it's one thing.

13:01 If you're in like a giant enterprise, they may value a different title in a different way.

13:05 It's all kind of the same.

13:06 But you had this cool quote that said something like software engineering is programming integrated over time.

13:11 Yes.

13:12 And I wish I could claim credit for that, but that actually came from Titus Winters of Google in a CppCon talk.

13:19 And it's just really resonated with me.

13:22 We program, but software engineering is the efforts of that programming over years or decades.

13:29 Your code will live decades.

13:31 The more valuable it is, the longer-lived it probably will be.

13:34 I've worked on code that's been 15 years old when I started on it.

13:38 And there's code that I wrote 12 years ago that's still running operationally.

13:42 And that scares me to some degree.

13:44 Because like, oh, did I know what I was doing back then?

13:48 But it just reemphasized why it's so important to think about your future audience.

13:55 You're going to have maintainers that come after you.

13:57 And do you want them to curse your name?

14:00 Or do you want them to be like, oh, thank goodness, this person wrote something that I can use.

14:03 It's really easy.

14:04 And I always prefer the latter on that.

14:06 I also like to say.

14:08 And the nicer code that you write and the more durable and long-lived code that you write,

14:13 the more it can continue to have a life even after you've stepped away from the project, right?

14:19 It's like, this thing is still working well.

14:22 We can keep growing it rather than it's turned into a complete pile of junk that we've got to

14:26 throw away and start over, right?

14:27 So that's a goal that you might want.

14:29 And then also that long-term maintainer might be you.

14:32 Yep.

14:32 Yep.

14:33 You might be working on it five years from now and you go, why did I make the decisions that I did?

14:37 I don't remember this.

14:38 Maybe you've jumped to a different project and came back.

14:41 It absolutely can be you.

14:44 This portion of Talk Python To Me is brought to you by Clubhouse.io.

14:48 Happy with your project management tool?

14:50 Most tools are either too simple for a growing engineering team to manage everything or way

14:55 too complex for anyone to want to use them without constant prodding.

14:58 Clubhouse.io, which soon will be changing their name to Shortcut, is different though, because

15:03 it's worse.

15:03 No, wait.

15:04 No, I mean, it's better.

15:05 Clubhouse is project management built specifically for software teams.

15:09 It's fast, intuitive, flexible, powerful, and many other nice positive adjectives.

15:13 Key features include team-based workflows.

15:16 Individual teams can use Clubhouse's default workflows or customize them to match the way

15:21 they work.

15:22 Org-wide goals and roadmaps.

15:24 The work in these workflows is automatically tied into larger company goals.

15:28 It takes one click to move from a roadmap to a team's work to individual updates and back.

15:33 Tight version control integration.

15:35 Whether you use GitHub, GitLab, or Bitbucket, Clubhouse ties directly into them so you can

15:40 update progress from the command line.

15:42 Keyboard-friendly interface.

15:44 The rest of Clubhouse is just as friendly as their power bar, allowing you to do virtually

15:49 anything without touching your mouse.

15:51 Throw that thing in the trash.

15:52 Iteration planning.

15:54 Set weekly priorities and then let Clubhouse run the schedule for you with accompanying burndown

15:59 charts and other reporting.

16:00 So give it a try over at talkpython.fm/clubhouse.

16:05 Again, that's talkpython.fm/clubhouse.

16:08 Choose Clubhouse.

16:09 Again, soon to be known as Shortcut, because you shouldn't have to project manage your project

16:14 management.

16:17 Let's talk about some of the core ideas that you have about making software maintainable,

16:23 reliable.

16:24 One of the things you talk about is the separation of time and how code has to communicate or the

16:30 artifacts that we all produce around code has to communicate with people both maybe almost

16:37 immediately.

16:37 Right?

16:38 Like, I'm working on this project with a couple of other developers and we need to,

16:42 like, keep it going forward.

16:43 The other one is, like, way down in the future, someone comes back and they're like, oh, I'm

16:47 new here.

16:48 The person who created it left.

16:49 Maybe talk about some of those ideas that you've covered.

16:52 Yeah.

16:53 And so it feels a little weird because what we're talking about is asynchronous communication.

16:57 And it's weird to talk about that on a Python podcast and not talk about async and await.

17:01 Yeah, exactly.

17:02 But it's asynchronous communication in real life, which I actually think is much harder.

17:06 You have to think about it as time traveling to some degree.

17:09 You have to think about the future and you have to communicate to them.

17:12 You probably will never meet them.

17:13 You'll never talk to them.

17:15 The only thing that lives on are the artifacts you create.

17:17 So your code, your documentation, your commit messages, that's what people in the future are

17:23 going to construct this mental model of your work from.

17:25 They're going to be doing archaeology when things go wrong.

17:27 Why is this code the way it is?

17:29 Is it safe to change?

17:31 What were the original intentions?

17:33 And so the more you can embed that into code you write and the surrounding commit messages,

17:37 documentation, the more robust your code base is going to be, the more you're communicating

17:43 intent to the future.

17:44 That's one of the things that was so important for 2020, 2021 for all of us, right?

17:49 Like, yeah, we didn't know how much we were going to need it because there's always been

17:53 this kind of tension.

17:54 Well, there's the open source world and these other projects.

17:58 And there's those weird remote teams, but we come to our cubicles and we all sit down

18:02 and we have our stand up in the morning and we write our software together.

18:05 And like that's, and we use, you know, Perforce or something internal where we lock the file.

18:11 No one else can edit the file till I unlock the file, right?

18:13 Like there's these, these different ways.

18:15 And we've been moving more and more towards this sort of everything.

18:19 Even if the person is right next to you, the way we work is as if they were across the

18:23 world.

18:23 Yeah.

18:24 And, you know, it's, it's been really, I guess, lucky for us as an industry that that

18:28 was mostly in place.

18:30 Yes, absolutely.

18:31 That actually became true.

18:32 Yep.

18:32 And so I think you touched on an interesting point of a lot of developers think, oh, if

18:38 we're close in space, we can collaborate.

18:40 You know, I don't have to worry about my remote teams and I don't have to worry about, you

18:44 know, someone global.

18:46 But by thinking of your collaboration in those terms, it sets you up to think about

18:51 the future as well, because you could be asynchronous in space or asynchronous in time.

18:55 Right.

18:56 And basically the same tools are there to, to address it.

19:00 The same strategies help with both.

19:01 Yeah.

19:02 So one of the things that you talked about was this principle of least surprise.

19:08 Yeah.

19:09 Tell us about that principle.

19:10 Yeah.

19:11 The principle of least surprise, also known as the principle of least astonishment.

19:13 I feel like it's safe to say most developers have gone through a code base and been legitimately

19:19 surprised and went, that function does that?

19:22 Oh my goodness.

19:23 I would never have thought that.

19:24 I once worked on a nasty bug where the get event function was setting an event.

19:30 And I kept overlooking it because I'm like, what's a get event?

19:33 It's just a getter.

19:34 I can ignore this.

19:35 And like two days later.

19:36 This will have no side effects.

19:37 This will be fine.

19:38 Two days later.

19:38 I'm like, let me go step through this.

19:40 And I was floored.

19:41 I'm like, of course this explains my bug.

19:43 So your goal when developing software is you don't want to surprise your future readers.

19:48 I mean, I think this is why people say avoid clever code, favor clear code over clever code.

19:53 You don't want to surprise readers.

19:56 Many, many people may not be as well versed in the languages.

20:00 Maybe they're coming from a different language, maybe from TypeScript to Python.

20:03 The more you rely on clever tricks or poor naming or just the wrong patterns, the more you're throwing people for a loop.

20:11 There's an added cognitive burden that they must then carry to understand what you've written.

20:16 One of the beautiful things about abstractions is: here's a function.

20:20 I read the function name.

20:21 That's all I need to know.

20:22 Here's a class.

20:24 I understand what the class does.

20:25 I don't need to go and look into the details.

20:28 And it lets you build these larger building blocks of conceptual models.

20:33 But if that's not true, right?

20:35 Like if a getter is a setter, well, then all of a sudden those things are all out the window and that's bad.

20:40 Yeah.

20:41 I mean, we work by building mental models and you need trust to build those mental models.

20:45 And as soon as that trust is violated, it just starts taking time to do everyday tasks.

20:50 You say, I want to implement a single API endpoint.

20:54 Well, if I have to go dig through 10 different files just to make sure I'm doing everything right, that's going to slow me down.

21:00 If I can trust that my mental model is correct and that it's been shown to be correct time and time again, I can put more faith in that code and I can feel safer to start changing it.

21:09 Yeah, for sure.

21:10 Another thing that I really liked about some of your ideas was that you talked about people having good intentions, even if they write bad code.

21:19 Like, for the most part, they're trying their best.

21:22 I mean, there might be people who are just lazy or whatever, but for the most part, like they tried to write this well.

21:27 Even if it came out bad, they probably tried to write it well and it just didn't turn out as good as they hoped.

21:32 Or even they wrote it well and it's just changed over time and those original assumptions got lost.

21:38 Right, right.

21:38 It made sense in the early days and the assumptions or the context changed.

21:42 Now it's no longer accurate.

21:44 Yeah.

21:44 Or it's inflexible and it's really hard to extend to your current use case.

21:49 I see that all the time.

21:50 One of the ideas that I thought was interesting is this idea of legacy code.

21:54 And I've always been kind of fascinated with what does legacy code mean?

21:57 Yeah.

21:57 Because legacy code for one person could be COBOL.

22:01 Legacy code for another person could be Python 2.6.

22:05 That's pretty old.

22:06 It could even just be Python 3.6 that's been around for a while, right?

22:12 And there's different people who have different definitions.

22:14 Michael Feathers has a cool book called Working Effectively or Effectively Working with Legacy Code.

22:18 Something like that.

22:19 That's an interesting book.

22:20 I believe it comes from maybe a slightly different time, but still some of the ideas will make you think.

22:25 And I was going to quote that book, actually, because his definition is that a legacy code base is a code base that doesn't have tests.

22:30 And I used to think that for a long time.

22:33 I love tests, but I've come to evolve my understanding of legacy code.

22:39 And here's the definition I've settled on.

22:41 A legacy code base

22:43 is a code base in which you can no longer communicate with the original authors or maintainers.

22:47 So the length of time doesn't matter as much.

22:50 If you've lost contact with the original authors, all you have is the code base and its surrounding documentation to understand why it's doing the things it does.

22:59 So that's become my favorite definition for legacy code as of late.

23:02 Yeah, I like that one too.

23:03 I don't really like the tests one.

23:05 I see where it comes from, but I feel like it's judging a world from too strict of a place.

23:13 Because not every piece of code is written in a way that it has to be absolutely correct.

23:20 Yeah.

23:21 Right.

23:21 So I think this is also a good way to sort of scope this conversation.

23:26 Because a lot of times people hear, oh, I have to use protocols and I have to use mypy and I have to use X, Y, and Z.

23:34 And I have to do all these fancy things because Pat and Michael said so because they were awesome.

23:38 And it's a big hassle.

23:39 I don't think I need it.

23:40 But here I'm trying to be a good software developer, right?

23:43 So I'll give you an example.

23:45 Years ago, I switched all the Talk Python stuff, especially the Talk Python training stuff, from relational databases and SQLAlchemy over to MongoDB and MongoEngine.

23:55 And so I had to write a whole ton of code that would take a couple of tables and then put them into a structure and then put them in Mongo.

24:02 And that was all fine and good.

24:04 But here's the thing.

24:05 The moment that code ran successfully, I never wanted to run it again.

24:09 It only had to run once.

24:10 It had to move the data one time.

24:12 When it was done, there was no scenario where I cared about its typing or I cared about its continuous integration.

24:18 I could have deleted it.

24:20 I just kept it because, hey, source control.

24:21 But there's these scenarios, right?

24:24 On the other hand, you talk about if you're an online reservation system, like the reservation booking engine, that part needs an entirely different bit of attention and mindset than my little migration script, right?

24:38 Yeah.

24:38 And what I see is what value are the things delivering?

24:42 Some things have one-off value, and that's perfectly okay.

24:45 Your migration script served its value, but there won't be much value derived from it in the future.

24:51 Maybe from an archaeology standpoint of how did I go do this?

24:54 Yes, exactly.

24:56 Maybe.

24:56 With something that's core to a business, a reservation booking engine, it delivers value when you built it, but you want it to keep delivering value throughout its lifetime.

25:07 And furthermore, the people who are working on it want to deliver value just as fast as you did in the beginning.

25:12 So you don't want to slow down the future.

25:15 You start getting into where you get product managers saying, why is this taking so long?

25:19 This is super easy.

25:21 Why do you have to spend three weeks just adding this one little field?

25:24 And the answer is often, oh, you know, we didn't think about how to enable value faster when we built it.

25:31 And there's a tricky line there, because you can't just go plan for everything and say, I'm going to make everything super flexible.

25:36 That often has the reverse effect.

25:38 It makes things too flexible and that becomes unmaintainable.

25:41 But there's a fine line between saying, okay, I'm going to think for the future and deliver value now.

25:46 Yeah.

25:46 If every dependency can be replaced and everything can be configured from a file, some settings file and like you never, you know, eventually that becomes a nightmare.

25:55 It sounds cool.

25:56 It's not cool.

25:57 I worked on some of those.

25:58 Yeah.

25:59 It's just painful.

26:00 And so here's the advice I give to people who want to think about how to make their code base more maintainable.

26:05 Target your moneymakers, the things that produce value, because those are the things you want to protect.

26:09 And whatever value means to you.

26:11 Target things with high churn.

26:13 So you can look in your Git history.

26:15 You can see what files change the most.

26:16 Chances are those are the files that are being read the most.

26:19 They're the files that people are working in the most.

26:21 Putting more safeguards in those files, making them more extensible will pay off just because more people are using them.
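One way to find those high-churn files, sketched under the assumption that `git` is on your PATH: `git log --name-only --pretty=format:` prints only the changed paths for each commit, and a `Counter` tallies them. The helper names `count_churn` and `churn_for_repo` are hypothetical; the parsing is split out so it can be checked without a repository.

```python
import subprocess
from collections import Counter


def count_churn(name_only_log: str) -> Counter[str]:
    """Count how often each path appears in `git log --name-only` output."""
    # With --pretty=format: the commit headers are empty, so the output is
    # just file paths, with blank lines separating commits.
    paths = (line.strip() for line in name_only_log.splitlines())
    return Counter(path for path in paths if path)


def churn_for_repo(repo_dir: str = ".") -> Counter[str]:
    """Run git in `repo_dir` and count changes per file (requires git on PATH)."""
    output = subprocess.run(
        ["git", "log", "--name-only", "--pretty=format:"],
        cwd=repo_dir,
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return count_churn(output)
```

Usage might look like `for path, n in churn_for_repo().most_common(10): print(n, path)`, giving a rough shortlist of files worth extra safeguards.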

26:28 Look for areas where you do large swaths of changes.

26:32 It's called shotgun surgery, where if you want to add a single thing, you have to touch 20 files.

26:37 The same 20 files keep getting changed again and again in a grouping.

26:41 That tells you that, you know, if I were someone coming in new to the project, how do I know it's 20 and not 19 or 21?

26:47 That's a place we can simplify.

26:48 Those are the places where it's super easy to forget a case.

26:52 Oh, yeah.

26:52 Like, oh, we added this feature and every if statement had an else if that covered the new thing, except for that one where we did the auditing or except for that one where we checked if they were an admin.

27:03 Oh, that one.

27:04 Now everyone's an admin.

27:05 Whoops.

27:06 Yep.

27:06 And so those are the sorts of places where I think type hinting and other strategies are super useful, because you can start encoding those ideas of, I want to catch this when I miss a case.

27:18 You can start encoding that into your checks, into linters, type checkers, static analysis, and so on and so forth.
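A minimal sketch of "catch this when I miss a case" as a type-checker rule, using a hypothetical `Role` enum and `can_edit` function (not from the episode). The `assert_never` helper is defined locally for portability; Python 3.11+ also ships `typing.assert_never` for the same purpose.

```python
from enum import Enum, auto
from typing import NoReturn


class Role(Enum):
    VIEWER = auto()
    EDITOR = auto()
    ADMIN = auto()


def assert_never(value: NoReturn) -> NoReturn:
    # A type checker such as mypy reports an error at any call site where
    # `value` could still be a real Role member, i.e. where a case was missed.
    raise AssertionError(f"Unhandled value: {value!r}")


def can_edit(role: Role) -> bool:
    if role is Role.VIEWER:
        return False
    elif role is Role.EDITOR:
        return True
    elif role is Role.ADMIN:
        return True
    else:
        # Every member is handled above, so the checker narrows `role` to
        # "no possible value" here and accepts the call.
        assert_never(role)
```

If someone later adds a member such as a hypothetical `Role.AUDITOR` without updating `can_edit`, mypy flags the `assert_never(role)` line instead of the new role silently falling through, which is the "now everyone's an admin" bug caught before it ships.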

27:25 Important.

27:25 I think people are pretty familiar with the typing system these days.

27:31 I think it's really cool.

27:32 The type system keeps coming along with more things; like, 3.9 now lets you write lowercase set[int] or whatever, rather than from typing import capital Set.

27:43 And then in 3.10, right?

27:45 That's really nice.

27:46 With the pipe union definition, you can write, like, None pipe a thing instead of Optional.

27:52 Yeah.

27:53 All of those are really nice and so on.

27:56 I suspect that a lot of people are using types for their editor but are not going any further than that with anything like mypy or continuous integration or any of those.

28:06 Do you want to maybe speak to like the use case of both of those?

28:10 Yeah.

28:10 So the use of editors alone is valuable.

28:13 You get autocomplete.

28:15 I mean, autocomplete alone.

28:17 You get squiggly if you do it wrong.

28:18 Yeah.

28:18 I mean, that's right.

28:19 Autocomplete alone is so good.

28:21 And I was just thinking when you were talking about that getter that was actually a setter, there's a really good chance that whoever wrote that code knew that was bad.

28:30 And yet their tooling was such that it was so error prone for them to change the name that they were willing to live with a getter that changed values.

28:40 Because they're like, I could break so much stuff in ways I don't understand if I don't have like a proper set of tools, like a proper editor that'll do multifile refactoring and continuous integration and all of those things.

28:54 Right.

28:54 And so this is sort of like in that vein of your tools now do more for you.

28:59 Yeah.

28:59 And so if you take a look at how costly errors are, an error that reaches the customer is incredibly costly when you factor in support and testing and, you know, field engineering, whatever you need to resolve that customer's problem, not to mention loss of customer faith.

29:14 Yeah, that's a big one.

29:15 It's expensive for, you know, tests in the later stages of QA to catch an error too, because our developers haven't been planning to go fix this code.

29:25 We have to stop what we're doing and go back and fix code that we maybe worked on three weeks ago.

29:29 The best time to catch an error is immediately after you write it.

29:32 And that's where that tooling comes in with your editors.

29:35 As you're typing, if you can find an error, great.

29:38 That is the least amount of cost you could have spent.

29:41 And then the second least amount, in my opinion, is letting some sort of static analysis tool catch it.

29:46 Something like mypy.

29:47 So using types, you can say, you know, I want to encode some assumptions into my code base.

29:52 This value will never be none.

29:54 This value may be none.

29:56 This value may be an int or a string.

29:59 If you're right that it's never none, you never have to check it for none.

30:03 Right.

30:03 But if you're wrong, you always have to check it for none.

30:06 Right.

30:06 Which is it?

30:07 Yeah.

30:07 We don't want to do defensive programming of checking is none on

30:10 every single variable we create in every function invocation.

30:14 That would just, it wouldn't be fun.

30:16 But your alternative, if you don't have that tooling is, all right, does this function return

30:20 none?

30:20 Let me go look at its source code.

30:22 Oh, it calls five other functions.

30:23 Let me go look at their source code.

30:25 Oh, this calls something to the database.

30:27 Is that a nullable field?

30:28 And anytime you're making someone trawl through your code base to try to answer a question of,

30:34 can this value be none?

30:35 You're wasting their time.

30:37 And they're either going to delay delivering features.

30:41 That just adds up over time.

30:43 Or they're going to make some incorrect assumptions.

30:45 And that's going to cause mistakes, which will waste time and money.

30:48 You've already talked about focusing your attention to put things like type annotations on the

30:53 parts that matter and not necessarily stress about the parts that don't, especially for

30:56 code that's being retrofitted.

30:57 Oh, absolutely.

30:58 I think that's so right.

30:59 Like the thing that logs, you know, it's going to turn whatever to a string.

31:03 And if it comes out as like, you know, some object at some address, like we'll catch it

31:07 later and figure it out.

31:08 But it's the core things that you want to be right.

31:12 But one of the things that can be challenging is the interest and the buy-in and the love for

31:18 this idea might not be uniform across your team.

31:21 No, no.

31:22 You know, and I've seen the same thing for testing and I've seen the same thing for

31:26 continuous integration, not necessarily the same person in the same thing, but you know,

31:30 it's like if there's a person on your team that just doesn't care about the continuous

31:34 integration and it turns off all the notifications that the continuous integration fails and then

31:40 they keep checking in stuff and failing the build, you know, like not again, I got to

31:44 go fix this because this person doesn't bother to check their thing and it just gets super

31:49 frustrating.

31:49 And I feel like probably typing has a similar analogy to that.

31:52 It does.

31:53 And that's why if you're in a legacy code base or even a maintained code base that doesn't

31:59 have a whole lot of typing in it, there are alternatives beyond just being strategic about where

32:04 you pick.

32:04 There's some fantastic tooling like MonkeyType, which can annotate your code base for you.

32:09 There's Google's type checker, pytype.

32:12 It can do type checking without type annotations.

32:15 And it does it with a little bit different philosophy than mypy, which I think is cool.

32:19 It tries to infer, based on just the values throughout your function bodies, what the types should be without type

32:25 annotations.

32:25 So there might be ways to get the benefits without actually making the full commitment to type checkers or

32:35 type annotations in general.

32:36 There's also, I mean, tie it to value.

32:39 Look through your bug reports.

32:41 If you find out that, hey, you know, we've had 12 dereferences of none in the past, you know,

32:49 month.

32:49 'NoneType' object has no attribute, whatever.

32:52 Right.

32:53 And it's cost us X amount of dollars.

32:55 I can now go to someone and say, look, this is costing us real money and time.

33:00 Sometimes data speaks volumes.

33:02 If you're having a tough time convincing people, I often say, find the data to back it up.

33:08 Look through your bug reports.

33:09 What would we have caught?

33:10 How much faster could we go?

33:12 Do just even an informal survey around your developer base.

33:17 How much more confident do you feel working in your code?

33:20 Then use that data to decide, yes, this is working, or no, we need to look at alternative

33:25 strategies.

33:26 How much autocomplete do you get?

33:28 I mean, that might be a winner, right?

33:31 That would for me, actually.

33:32 This portion of Talk Python is brought to you by masterworks.io.

33:37 Have an investment portfolio worth more than a hundred thousand dollars?

33:40 Then this message is for you.

33:42 There's a $6 trillion asset class that's in almost every billionaire's portfolio.

33:47 In fact, on average, they allocate more than 10% of their overall portfolios to it.

33:52 It's outperformed the S&P, gold, and real estate by nearly twofold over the last 25 years.

33:58 And no, it's not cryptocurrency, which many experts don't believe is a real asset class.

34:03 We're talking about contemporary art.

34:06 Thanks to a startup revolutionizing fine art investing, rather than shelling out $20 million

34:11 to buy an entire Picasso painting yourself, you can now invest in a fraction of it.

34:16 Few realize just how lucrative it can be.

34:18 Contemporary art pieces returned 14% on average per year between 1995 and 2020, beating the S&P

34:25 by 174%.

34:28 Masterworks was founded by a serial tech entrepreneur and top 100 art collector.

34:33 After he made millions on art investing personally, he set out to democratize the asset class for

34:38 everyone, including you.

34:40 Masterworks has been featured in places like the Wall Street Journal, the New York Times,

34:44 and Bloomberg.

34:45 With more than 200,000 members, demand is exploding.

34:49 But lucky for you, Masterworks has hooked me up with 23 passes to skip their extensive waitlist.

34:55 Just head over to our link and secure your spot.

34:57 Visit talkpython.fm/masterworks or just click the link in your podcast player's show notes.

35:03 And be sure to check out their important disclosures at masterworks.io slash disclaimer.

35:07 I do want to move on to some other ideas because it's not all about typing, but I think typing

35:14 unlocks a lot of these sort of durability ideas that you're covering there.

35:20 So another one that you talked a lot about, I think is really interesting in this context,

35:25 has to do with collection types and knowing the right data type.

35:30 Yes.

35:30 And that matters so much, right?

35:33 Like somebody might use a dictionary where they should have used a set or something.

35:38 And you're like, well, you used a dictionary, so you meant for me to look this up by key.

35:41 Like, no, no, I just want to have one of everything.

35:43 Okay, but why did you use the dictionary?

35:46 But people, when they're new, they don't necessarily know that.

35:48 They find the first thing that works.

35:50 They're like, oh, a dictionary worked for this.

35:51 We're using dictionaries.

35:52 But beyond that, it means something for certain container types and other things, right?

35:57 Yeah.

35:57 I think if you look at the Zen of Python, you know, there should only be one way to do

36:01 it.

36:01 Most people say, but there's multiple ways to do that.

36:03 Like, I can use a dictionary.

36:04 I can use a set.

36:05 I can use a list and just search for unique values.

36:08 Or encode it in a string and parse it every time.

36:11 Yeah.

36:11 The choices you make, the abstractions you choose, communicate a certain intent.

36:17 When you choose to use a set, that tells me I can iterate over it.

36:21 There won't be any duplicates.

36:22 And I won't be looking things up by key.

36:27 When I think of a dictionary, I think of a mapping from key to value.

36:31 The keys must be unique.

36:32 But if all I cared about was the keys and no values, there's added cognitive

36:37 dissonance: why do I have values for this dictionary?

36:40 They're all zeros.

36:41 It doesn't make sense.

36:42 Yeah.

36:42 It goes back to the principle of least astonishment.

36:44 You get surprised like, oh, this dictionary is being used as a set.

36:49 I get it now.

36:50 And if you're not addressing that as you find it, you're just kicking the can onto a future

36:54 maintainer who then has to add that to the 20 other things they're trying to keep track of

36:59 throughout their maintenance of the program.
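A small illustration of the container-choice point, with made-up data: both versions deduplicate, but only the set says "unique members, no values" without the reader having to work it out.

```python
names = ["ada", "grace", "ada", "guido"]

# Surprising: a dict whose values carry no meaning, used only for uniqueness.
seen_dict = {name: 0 for name in names}

# Clearer: a set says "unique members, no lookups by key, no values".
seen_set = set(names)

print("grace" in seen_set)  # True
```

One caveat worth knowing: a set does not preserve insertion order, so if the order of first appearance matters, a dict such as `dict.fromkeys(names)` can be the honest choice, ideally with a comment saying why.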

37:02 yeah.

37:03 Yeah.

37:03 You mentioned dictionaries, and that's one of my big pet peeves. So a dictionary, I'd

37:08 say, is a mapping from key to value.

37:10 That's typically a homogeneous mapping.

37:12 Every key is the same type and every value is the same type.

37:14 But we really, really, really love dictionaries for things like JSON responses or relationships

37:21 of data.

37:21 And that can be so detrimental to the maintainability of code bases

37:25 if you're not careful.

37:26 The problem is a type checker

37:29 isn't the greatest at saying, oh, this is a dict.

37:32 Some of the keys are strings.

37:34 Others are ints.

37:35 Others are decimals.

37:37 And the values are all over the place.

37:39 You can use a TypedDict to try to get around that.

37:42 But really what you're talking about is a relationship of data.

37:45 When you talk about dictionaries, you're getting into the, well, let's

37:50 say I'm code reviewing.

37:51 Let me put this in concrete terms.

37:54 I'm code reviewing some code.

37:55 I see someone accessing a dictionary and the key is foo.

37:59 I have to go look at all of where that dictionary was created and modified to make sure that

38:05 foo is actually a valid field in that dictionary.

38:08 I have no guarantees.

38:09 Not if I just see dict in a type checker, I'm sorry, a type annotation.

38:13 So again, it's that trawling through the code base.

38:16 Oh, this is actually coming from an API.

38:18 Now I have to go read the API and I can't effectively code review code or maintain code.

38:24 If I'm just reading that through without doing that every time to make sure something hasn't

38:28 changed.

38:29 So in this case, I'd say use a data class.

38:32 You know, you have explicit fields.

38:34 You can lean on your tooling

38:36 if you mess up the field. And you aren't expecting to see new fields get created, probably 95,

38:43 99% of the time.

38:44 I will prefer a data class to a dictionary

38:48 if I have heterogeneous data, almost all the time.
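A minimal sketch contrasting the options discussed here, using a hypothetical order record: a bare `dict`, a `TypedDict` that documents the keys for a type checker but is still a plain dict at runtime, and a dataclass that fixes the relationship of fields.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import TypedDict

# A heterogeneous dict: nothing in the `dict` annotation tells a reviewer "price" exists.
raw_order: dict = {"id": 7, "item": "book", "price": Decimal("12.50")}


# Option 1: TypedDict documents the keys for the type checker; still a dict at runtime.
class OrderDict(TypedDict):
    id: int
    item: str
    price: Decimal


# Option 2: a dataclass makes the fields explicit and gives attribute access.
@dataclass
class Order:
    id: int
    item: str
    price: Decimal


typed: OrderDict = {"id": 7, "item": "book", "price": Decimal("12.50")}
order = Order(id=7, item="book", price=Decimal("12.50"))
print(order.price)  # 12.50
```

A typo such as `order.prise` raises `AttributeError` at runtime and is flagged by type checkers; `typed["prise"]` is flagged by mypy too, but at runtime it is only a `KeyError` on an ordinary dict.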

38:51 Yeah.

38:51 And maybe get it back from, like, a Flask API call, call .json(),

38:56 and then just jam that, star star that thing, into the data class.

38:59 Something like that.
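The "star star" approach can be sketched with the standard library alone. The `Repo` class and the JSON body are made-up stand-ins for a real API response.

```python
import json
from dataclasses import dataclass


@dataclass
class Repo:
    name: str
    stars: int


# Stand-in for a response body; in practice this would come from an HTTP client.
body = '{"name": "example", "stars": 42}'

repo = Repo(**json.loads(body))
print(repo)  # Repo(name='example', stars=42)
```

Two limits of this shortcut: an unexpected key raises `TypeError` at construction time, and nothing checks the value types, so `"stars": "42"` would be stored as a string. That gap is what a validating library addresses.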

39:00 Yep.

39:00 Yep.

39:01 And I know you've talked about Pydantic on the show.

39:03 Oh, a lot.

39:04 I love Pydantic.

39:05 Just, you know, define a model, let it parse that JSON response and just build that data

39:11 class for me.

39:11 Throw an error if it's invalid.

39:13 And I really liked that model of attacking problems like that.

39:17 I love Pydantic.

39:18 I love how it, it tries to sort of be flexible.

39:21 It's like, if we, if I think we can fix this, if you had a thing that is a string, but in

39:26 the string, it really is parsable to a number and it's supposed to be a number.

39:29 I'll just go ahead and do that type conversion for you.

39:32 If not, give you a decent error message.

39:34 It's really, really lovely.
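Pydantic is a third-party library, so rather than guess at its API, here is a stdlib-only sketch of the same idea using a dataclass `__post_init__`. This is a swapped-in technique, not how Pydantic works internally: coerce a numeric string such as `"42"` into an `int`, otherwise fail with a clear message. `Measurement` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    sensor: str
    value: int

    def __post_init__(self) -> None:
        # Lenient coercion: accept "42" and store 42.
        if isinstance(self.value, str):
            try:
                self.value = int(self.value)
            except ValueError:
                raise ValueError(
                    f"value must be an int or numeric string, got {self.value!r}"
                ) from None
        elif not isinstance(self.value, int):
            # Anything else is rejected with a message naming the bad type.
            raise TypeError(f"value must be an int, got {type(self.value).__name__}")


print(Measurement("t1", "42").value)  # 42
```

Writing this by hand for every field gets tedious quickly, which is much of the appeal of letting a model library do it.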

39:36 Yep.

39:36 So if I'm, I'm working with APIs, I love Pydantic for that, that reason that you just outlined,

39:40 but I'll often convert it to a data class or Pydantic data class so that I can say, this

39:45 is a relationship of data and I can kind of shape how a user uses that relationship of

39:49 data throughout the lifetime of the code.

39:51 Yeah.

39:52 It's an interesting tension on how much those models get used throughout all the tiers of

39:57 your app and how much you want to keep them separate.

39:59 Have you seen SQLModel?

40:01 I believe it's called.

40:02 Oh, I have not.

40:03 I have not.

40:04 Okay.

40:05 here we go.

40:06 You've probably heard of FastAPI, right?

40:08 Yeah, of course.

40:08 Obviously that's where Pydantic got, I think, its big boost.

40:12 So Sebastian Ramirez came out a few days ago with this thing called SQLModel, and

40:18 already, I think it's less than a week old, it has 4,000 GitHub stars,

40:21 which is amazing, but basically it's a merging of Pydantic and SQLAlchemy.

40:26 Oh, fantastic.

40:26 It has a SQLAlchemy unit-of-work model, and underneath it has all the SQLAlchemy

40:32 stuff, but its models are actually Pydantic models.

40:35 Yeah.

40:36 And I think this is just another illustration of why thinking about what types things are,

40:41 even if you're not doing type annotations, why that's so important.

40:43 It goes back to how do we build mental models?

40:46 How do we build these, these abstractions in our brain that we can rely upon as we

40:51 work through our code base?

40:52 I do think this is interesting in that you could use the same model at the data level

40:56 internal to your app, and then you could even use it at the API level, but there are also people

41:00 saying, but maybe that's not a good idea.

41:02 Maybe you want to separate those so that one can change and the other doesn't

41:08 have to change.

41:09 But yeah, I've, I see a lot of value to this thing.

41:11 It's, it looks exciting.

41:12 And I mean, the answer to that's going to probably be, it depends if your application needs them

41:17 to be the same.

41:18 By all means, make them the same.

41:20 If they have different reasons to change.

41:22 This is something I see a lot too.

41:23 We all get the DRY principle,

41:26 don't repeat yourself, ingrained in our heads.

41:28 And we think, oh, source code looks the same.

41:30 We must deduplicate it.

41:32 But if that source code can change for different reasons, you're going to add more headaches

41:37 by deduplicating it.

41:38 You're going to start adding special cases.

41:39 Well, this thing needs to change, but the other thing doesn't.

41:43 How do I reconcile that?

41:44 Special cases.

41:45 And soon you, you've become the very thing you've sworn to destroy as you build that out.

41:50 It's just littered with special cases as you're trying to tie together two things that have

41:56 two separate contexts.

41:58 So, you know, if you want to use something like SQLModel for an API level and your internal

42:03 data model, ask yourself, do these have different reasons for change?

42:06 Maybe, maybe in the future.

42:09 And like a lot of this is just empathy for the future.

42:11 When I talk about maintainable code, robust code, you have to have empathy for those future

42:16 maintainers, put yourself in their shoes.

42:18 Are they going to want to migrate sometime and maybe change the API that they present to

42:24 customers or users or other developers?

42:25 Do you want to change your database at the same time?

42:28 If so, keep them together.

42:30 If you want to keep that separate so that you have certain migration paths, maybe you keep

42:34 them separate.

42:35 And so it's going to depend case by case.

42:36 But again, everything comes down to those first principles of what are you communicating

42:41 with your intent when you make that decision in your code?
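A minimal sketch of keeping the two contexts separate when they have different reasons to change. `UserRecord`, `UserResponse`, and `to_response` are hypothetical names: one model tracks the database schema, the other tracks the public contract, and a single function translates between them.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class UserRecord:
    """Database-layer model: changes when the schema changes."""
    id: int
    email: str
    password_hash: str
    created_at: datetime


@dataclass
class UserResponse:
    """API-layer model: changes when the public contract changes."""
    id: int
    email: str
    member_since: str


def to_response(record: UserRecord) -> UserResponse:
    # The one place that translates between contexts. A schema change such as
    # renaming a column touches UserRecord and this function, not API clients.
    return UserResponse(
        id=record.id,
        email=record.email,
        member_since=record.created_at.date().isoformat(),
    )
```

The cost is one extra class and a mapping function; for a short-lived internal tool, a single shared model may well be the better trade.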

42:44 Like we've touched on, you're building different things at different times under different

42:48 constraints.

42:49 Are you building Instagram's API, which millions of people and apps are using?

42:53 Or are you building something really quick so you can get that app to work for marketing

42:58 for the next thing for the week?

42:59 Right?

43:00 Yeah.

43:01 If it's the second one, then you don't want to worry about too much abstraction

43:05 and you just want to go like, I just need these to be in sync.

43:07 The data goes here.

43:08 We're going to send over the API.

43:09 We're going to be good.

43:10 Right?

43:10 This is going to fly.

43:11 On the other hand, if you're building something heavily consumed and long-lived, then maybe

43:16 you have different design patterns and care about it.

43:18 And I think it's important to think about organizational boundaries. For the users, the consumers,

43:24 the actors in your use cases, is there an organizational boundary separating you? In the Instagram

43:30 case,

43:30 The people who are going to be using my API, I have no control over in my organization.

43:35 It's general public as far as I'm concerned.

43:38 If it's just someone on my team using it, we can work through, okay, you know, I'm changing

43:43 this API.

43:43 Let me help you through that.

43:45 But if it's someone outside your team or outside your organization, you're going to have backwards

43:50 compatibility to think about.

43:51 You're going to have a vast number of things that people are going to complain about.

43:56 And you need to think about that and say, okay, can I make these changes?

43:59 And is it good for my user base?

44:01 Even though I can't control them, the best I can do is entice them.

44:04 You'll never be able to force someone to use something they don't want to.

44:07 If you don't have that control over them, that's why we have open source forks.

44:10 One of the ideas that I think comes up in this, this whole story, and you talk a lot about

44:16 like inheritance, both inheritance in terms of class hierarchies and even multiple inheritance,

44:22 but also maybe the more traditional interface style with protocols, just so that you can express

44:28 type stuff separately, right?

44:30 In interesting ways without coming up with inheritance beasts and whatnot.

44:35 Yeah.

44:35 So one thing I did want to give people a quick shout out.

44:38 So we've all heard of the SOLID principles, right?

44:41 And I really enjoyed the SOLID principles and I thought they were super interesting.

44:45 I still do.

44:46 I think they're pretty great.

44:48 I recently came across a presentation, I caught it over on Richard Campbell's

44:54 show .NET Rocks, which I know people probably don't care about that now, but they actually

44:59 talked about this thing called CUPID, which is an alternative to SOLID.

45:03 I'm going to go check that out.

45:04 And it was super, it has nothing to do with .NET.

45:07 It's just like software in general patterns.

45:09 Like, what do we know now, 20 years later, that doesn't necessarily make so much sense

45:13 for SOLID?

45:14 And what is the alternative?

45:16 And he has this really cute name.

45:17 So as people are thinking about maybe those kinds of levels, that's pretty interesting

45:22 to check out.

45:22 Yeah.

45:23 And I like thinking of SOLID, again going back to first principles. There are things that

45:27 I like about SOLID.

45:29 There are things I also think get a bad rep because they're associated with heavy, heavy

45:35 OO code bases that have class hierarchies that are just unmanageable.

45:40 Probably multiple templates in there somehow.

45:42 Yeah.

45:43 And we've kind of grouped these things together.

45:46 So an example, one of the most misunderstood ones, in my opinion, is the Liskov substitution

45:51 principle of the SOLID principles.

45:53 And it applies when you're doing duck typing in Python, which has nothing to do with

45:57 class hierarchies.

45:58 It doesn't have anything to do with interfaces or things like that.

46:02 It's all about substitutability.

46:04 And so when we talk about duck typing in Python, it's, well, multiple types can represent some

46:09 behavior.

46:10 Maybe they support an addition method and a subtraction method.

46:13 Can they be substituted into this function that's expecting addition and subtraction?

46:18 And so when we think about-

46:19 One might do addition and subtraction on dates and times.

46:21 Another might do it on like imaginary numbers.

46:23 But the concept of adding and subtracting things, you can reuse that, right?

46:27 Something like that.

46:28 And so things like Liskov substitutability, excuse me, the Liskov substitution principle

46:33 talks about how do you think about substitutability from your requirements and from your behaviors?

46:38 And you can get value from that without ever touching a class.

46:42 So I think all too often we lump the SOLID principles in with the strict OO of the '90s or the 2000s and think that they aren't as useful.
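To make the substitutability point concrete, here is a sketch of a duck-typed helper (the name `shift_and_measure` is hypothetical) that relies only on `+` and `-`. Integers, complex numbers, and `datetime.date` paired with `timedelta` can all be substituted into it, even though they share no class hierarchy.

```python
from datetime import date, timedelta


def shift_and_measure(start, offset):
    """Return (start + offset, distance from start to the new value).

    Duck-typed on purpose: any types whose + and - behave consistently
    can be substituted in; no shared base class is required.
    """
    end = start + offset
    return end, end - start


if __name__ == "__main__":
    print(shift_and_measure(10, 5))           # (15, 5)
    print(shift_and_measure(1 + 2j, 3 - 1j))  # ((4+1j), (3-1j))
    print(shift_and_measure(date(2024, 1, 30), timedelta(days=3)))
```

A `str` is not substitutable here: it supports `+` but not `-`, so the second step raises `TypeError`. That behavioral requirement, rather than any class relationship, is what the substitution principle asks you to think about.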

46:51 I'm going to have to check out CUPID and see how that's changed as well.

46:54 I would expect that a lot of the same first principles are true, but they've kind of reframed it in a way that is more applicable to how we program today.

47:03 I don't remember all the details exactly.

47:05 But yeah, something roughly along those lines.

47:09 I think that makes sense.

47:10 So one thing I also want to get your thoughts on before we leave it completely in the dust: you talked about how a lot of times you've got a dictionary and it's supposed to represent some stuff.

47:20 Maybe it's a response from an API where it says, you know, here was what you requested.

47:25 Here was the status code.

47:26 Here's what the cloud cost of that is.

47:28 And so on. Something that looks like that maybe doesn't make sense as a dictionary.

47:33 Maybe it gets moved into like a Pydantic model or something like that.

47:36 Right.

47:37 But the other one is I have 10,000 users and I want to put their email address as the key and their user object as the value.

47:44 And given an email address, I want to know super fast, which user is that?

47:48 Or do I have that user at all?

47:49 In that case, the dictionary makes a lot of sense.

47:51 Absolutely.

47:52 But there's a super big difference between the keys representing the same kind of thing across different results,

47:58 versus the same data structure, a dictionary, representing something heterogeneous: not really a class, but kind of a class.

48:06 How do you position those so that beginners understand that those are completely unrelated things that need to be thought about separately, but they kind of appear the same in code?

48:16 Because when I'm talking to people about dictionaries and I'm teaching, they're like,

48:19 Oh, well, why would you use a dictionary here?

48:21 Because over here you were doing it this other way, but now it's like a database.

48:24 Like, what are you doing with it?

48:25 This is weird.

48:26 Why are these not, why are they the same but different?
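A sketch of the two shapes being contrasted, using the standard library's `dataclasses` in place of Pydantic so it has no third-party dependencies (Python 3.9+ for the built-in generic). The field names (`requested`, `status_code`, `cloud_cost`) and the sample users are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ApiResponse:
    """Heterogeneous record: each field means something different (hypothetical fields)."""

    requested: str
    status_code: int
    cloud_cost: float


@dataclass
class User:
    email: str
    name: str


# Homogeneous mapping: every key is an email, every value is a User.
users = [User("ada@example.com", "Ada"), User("alan@example.com", "Alan")]
users_by_email: dict[str, User] = {user.email: user for user in users}


def find_user(email: str) -> Optional[User]:
    """Average O(1) lookup by a key that arrives at runtime; None if unknown."""
    return users_by_email.get(email)
```

`response.status_code` is always accessed by a fixed field name, while `find_user(email)` is called with whatever address shows up at runtime; the two look similar as raw dictionaries but communicate very different intent.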

48:28 So here's how I find it.

48:29 So for the key-to-value mapping, I would say there are a few use cases you're going to have.

48:35 You're going to either be iterating over the dictionary to do something on every element, or you're going to be looking up a specific element.

48:41 And that lookup is dynamic; you're typically passing in some value.

48:46 Maybe it's a variable that contains the email address in your example.

48:50 For something like an API response, something that might be more heterogeneous, something that should be a data class or a Pydantic model, what have you.

48:57 You typically aren't iterating over every key.

49:00 You're looking up specific keys, but the index is static, meaning you're passing in string literals between the square brackets.

49:07 This is the key I want on this circumstance.

49:10 You know, the name, the age, the date of birth.

49:13 Right.

49:14 And so you're looking up specific fields and you're building a relationship between different key-value pairs.

49:20 A name, age, and date of birth form a relationship called a person.

49:23 With your mapping, your key-value mapping, there's no relationship between email one to user object one and email two to user object two.

49:33 The relationship is just from key to value.

49:35 And that's typically what I explain to people trying to discern the difference.
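Pat's heuristic (static literal keys suggest a record; dynamic keys or iteration suggest a mapping) can be sketched with `typing.TypedDict`, which lets a type checker verify literal keys such as `person["name"]`. The `Person` schema and both helper functions are hypothetical, and Python 3.9+ is assumed for the built-in generics.

```python
from datetime import date
from typing import TypedDict


class Person(TypedDict):
    """A record: fixed keys that together describe one person (hypothetical schema)."""

    name: str
    age: int
    date_of_birth: date


def describe(person: Person) -> str:
    # Static, literal keys: a type checker can flag a misspelled key here.
    return f"{person['name']} ({person['age']}), born {person['date_of_birth']:%Y-%m-%d}"


def age_by_name(people: list[Person]) -> dict[str, int]:
    # A mapping: built by iterating, later queried with keys only known at runtime.
    return {person["name"]: person["age"] for person in people}
```

`TypedDict` adds no runtime validation; it only tells tools which literal keys a record has, which is exactly the "static to the index" access pattern described above.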

49:39 That's a really interesting way to think about it because, yeah, you will almost always use static literal strings when it's the heterogeneous API response style.

49:48 And you will almost never do that with the email-to-user mapping.

49:50 Why would you say, quote, type in the email address?

49:53 You would just have that object.

49:55 You wouldn't need to type in, like, you would just never create that structure in the first place, which I think is really interesting.

50:01 So, yeah, if you're dynamically passing in the keys, it's probably all the same object, but different ones of them as opposed to an API response.

50:10 And I think that's the heart of why I really wanted to write Robust Python.

50:13 So I've been programming for a while now, and I make a lot of decisions as a senior engineer.

50:18 I really started to step back and ask myself, why do I make these decisions?

50:22 Why do I choose a dictionary over a data class?

50:25 Why do I choose a class over a data class?

50:27 Why do I choose an acceptance test over a unit test?

50:30 Why do I choose to do a plug-in here, but a dependency injection somewhere else?

50:35 And I just wanted to start documenting.

50:37 Here's why I make the decisions I did.

50:40 Here's the intent I'm trying to communicate, in the hopes that we start normalizing that conversation more in our field.

50:47 Why are we doing the things we do?

50:49 As a beginner, it's frustrating.

50:51 Oh, wow.

50:51 You use a dictionary here.

50:53 Why?

50:53 How did you ever know to do that?

50:55 Yeah.

50:56 Yeah.

50:56 And so I wanted to really capture, again, I've said it a lot.

51:00 First principles: why am I doing the things I'm doing?

51:02 What are the things I often give as advice to junior programmers, intermediate programmers, too, and senior programmers?

51:10 It's just, it was really enlightening for me to really step back and try to dissect why I do the things I do in Python and come up with, here are the valid engineering reasons behind it and try to frame that in terms of maintainability.

51:24 Yeah.

51:24 I think that's very valuable.

51:25 I think it'll help people learn.

51:26 Because it's not enough to see it by example.

51:28 You're like, well, these both kind of work.

51:29 It's like, yeah, but they mean something different.

51:32 They totally communicate something different.

51:34 A couple of thoughts from the live stream I want to share.

51:37 Kim Van Wick out there says: fantastic advice about having too much configurability.

51:44 Far too often, I prematurely de-dupe two similar blocks of code and then find myself adding special cases

51:50 I wouldn't otherwise need. Yeah.

51:51 That sounds like what you were saying as well.

51:53 Yeah.

51:53 And so what I would say is that there's a difference between policies and mechanisms.

51:57 The policies are your business logic and the mechanisms are how you go to do something.

52:01 The logging module in Python is fantastic.

52:02 You're logging.

52:04 The logging module doesn't care what you're logging, how you're logging it, when you're logging it.

52:09 It's just the mechanism.

52:10 To /dev/null, who cares?

52:12 Yeah.

52:12 It's all the same.

52:13 But the what you're logging is your policy.

52:15 And so very often I try to find a way to say, okay, how do I de-duplicate my mechanisms?

52:21 Because those are what I have to reuse.

52:23 My business rules are going to change.

52:25 They're going to change for different reasons.

52:26 I want to make it simple to define business rules, but keep the mechanisms reusable.

52:30 You want to be able to compose those mechanisms together.
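A minimal sketch of the policy/mechanism split using the standard `logging` module: `build_logger` is the reusable mechanism (where and how messages are written), while `record_refund` is a hypothetical business policy (what gets logged and at which level). The refund rule and the 100.0 limit are made up for illustration.

```python
import io
import logging
from typing import TextIO


def build_logger(name: str, stream: TextIO) -> logging.Logger:
    """Mechanism: where and how messages are written. Knows nothing about the business."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # avoid stacking handlers if called twice with the same name
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    logger.addHandler(handler)
    logger.propagate = False  # keep these records out of the root logger
    return logger


def record_refund(logger: logging.Logger, amount: float, limit: float = 100.0) -> None:
    """Policy (hypothetical business rule): what gets logged, and at which level."""
    if amount > limit:
        logger.warning("large refund: %.2f", amount)
    else:
        logger.info("refund: %.2f", amount)


if __name__ == "__main__":
    buffer = io.StringIO()
    log = build_logger("refunds-demo", buffer)
    record_refund(log, 20)
    record_refund(log, 250)
    print(buffer.getvalue(), end="")
```

Swapping the `StreamHandler` for `logging.NullHandler()` would discard everything, the /dev/null case, without touching the policy function; changing the refund rule likewise never touches the mechanism.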

52:33 Also on the live stream, Mr. Hypermagnetic says, is it faster to look up a user or whatever

52:39 from a dictionary rather than iterate over a list?

52:43 And I think, boy, oh boy, if you've been down that path, you know there's a difference.

52:48 This is advice coming from a C++ programmer who really cared about performance.

52:52 Measure it.

52:52 Use cases can be surprising.

52:54 There are times when a contiguous block of memory with a binary search is faster than

53:01 a dictionary lookup.

53:02 But you will not know until you measure it.
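In the spirit of "measure it," here is a sketch that uses `timeit` to compare three ways of answering "do I have this user?": a linear scan of a list, a binary search with `bisect` over a sorted list, and a dictionary lookup. The 10,000 email addresses are synthetic, and real timings depend on your data, machine, and Python version, so no numbers are claimed here.

```python
import bisect
import timeit

# Synthetic data: 10,000 made-up email addresses.
emails = [f"user{i}@example.com" for i in range(10_000)]
sorted_emails = sorted(emails)  # binary search requires sorted input
email_to_index = {email: i for i, email in enumerate(emails)}


def linear_contains(target: str) -> bool:
    # O(n): compares element by element until a match is found.
    return target in emails


def bisect_contains(target: str) -> bool:
    # O(log n): binary search over a contiguous, sorted list.
    i = bisect.bisect_left(sorted_emails, target)
    return i < len(sorted_emails) and sorted_emails[i] == target


def dict_contains(target: str) -> bool:
    # Average O(1): hash-based lookup.
    return target in email_to_index


if __name__ == "__main__":
    target = "user9999@example.com"  # last list element: worst case for the linear scan
    for contains in (linear_contains, bisect_contains, dict_contains):
        seconds = timeit.timeit(lambda: contains(target), number=1_000)
        print(f"{contains.__name__}: {seconds:.4f}s for 1,000 lookups")
```

Big-O only hints at the answer; constant factors, data size, and how often each path runs decide which one matters, which is why the timing harness is the part worth keeping.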

53:04 There are also things that need to be fast in your program.

53:08 And there are many things that don't need to be fast in your program.

53:10 I think it's important to be aware of which ones those are.

53:13 Not to sound cliché, but it's the whole "premature optimization is the root of all

53:19 evil.

53:19 Breaking that down a bit, you don't want to just optimize everything because it takes time to