Measuring your ML impact with CodeCarbon

Panelists

In this episode, you'll meet Victor Schmidt, Jonathan Wilson, and Boris Feld. They work on the CodeCarbon project together. This project offers a Python package and dashboarding tool to help you understand and minimize your ML model's environmental impact.

Episode Deep Dive

Guests Introduction and Background

Jonathan Wilson is an associate professor of environmental studies at Haverford College. He brings a unique perspective to the CodeCarbon project, combining environmental science expertise with a background in computer science.

Boris Feld works at Comet ML, focusing on experiment management for machine learning workloads. He has helped create and shape CodeCarbon’s open-source development efforts, contributing coding expertise and solutions for measuring emissions during model training.

Victor Schmidt is a PhD student at Mila (Quebec’s AI Institute) in Montreal. He specializes in machine learning and co-founded the CodeCarbon project to better understand the carbon footprint of ML training.

Their collective mission is to empower software developers and data scientists to measure and minimize the environmental impact of machine learning models through CodeCarbon.

What to Know If You're New to Python

Are you new to Python but eager to follow along with measuring ML impact? Here are a few essentials:

- Use a popular code editor: Tools like VS Code or Sublime Text allow quick Python setup.

- Learn the basics of pip install: Installing packages like codecarbon requires comfort with pip or other dependency managers.

- Basic machine learning concepts: Understanding model training, hyperparameters, and inference will help you follow CodeCarbon’s workflow.

- Practice Python fundamentals: If you’re just starting out, see the “Learning Resources” section below for a beginner-friendly course.

Key Points and Takeaways

Measuring ML Carbon Footprint with CodeCarbon

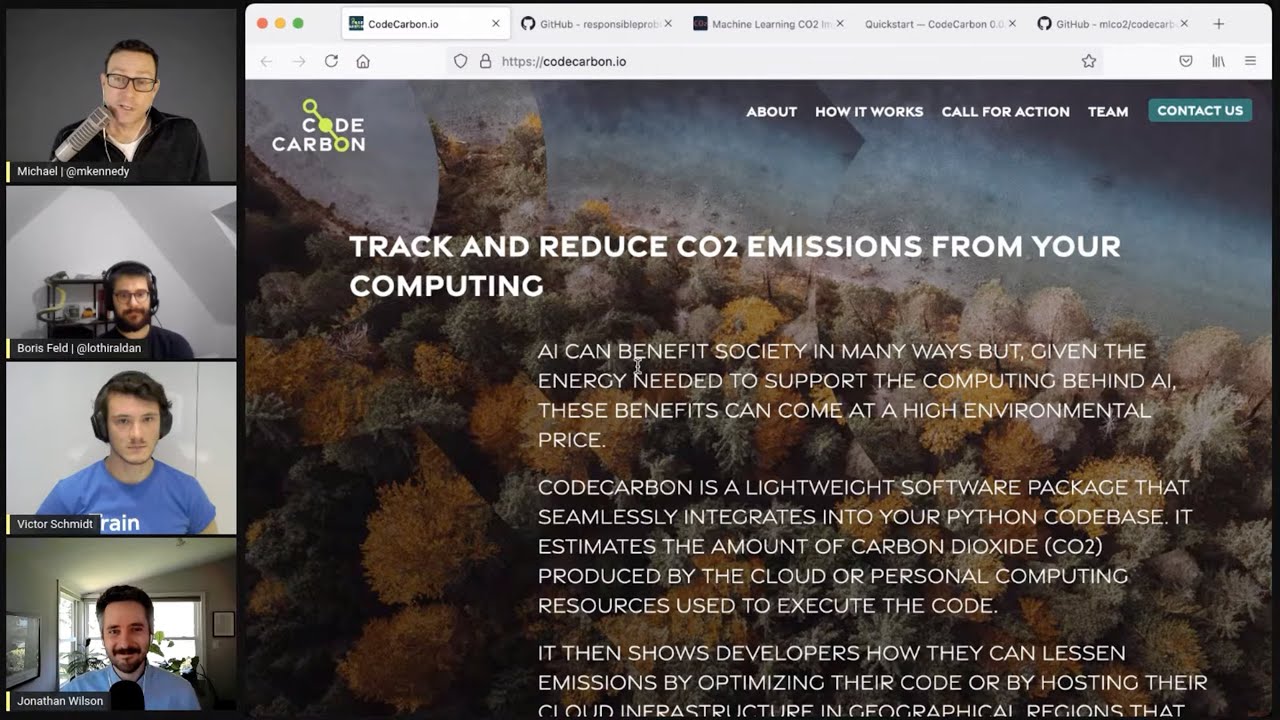

CodeCarbon is a Python package and dashboard that estimates the energy consumption and carbon emissions of ML workloads. By integrating a few lines of code (pip install codecarbon and a simple start-stop tracker), data scientists can automatically capture energy metrics, map them to local or cloud energy grids, and see an approximate carbon footprint.
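
As a rough illustration of the start-stop pattern described above, here is a minimal sketch. It assumes the codecarbon package is installed and exposes an `EmissionsTracker` class with `start()` and `stop()` methods. The `run_tracked` helper and its `make_tracker` parameter are hypothetical names introduced here, not part of CodeCarbon; the parameter exists only so the pattern can be tried without the real tracker.

```python
def run_tracked(work, make_tracker=None):
    """Run work() between tracker.start() and tracker.stop().

    Returns (result_of_work, emissions), where emissions is whatever
    tracker.stop() returns (for CodeCarbon, an estimate in kg CO2-equivalent).
    """
    if make_tracker is None:
        # Imported lazily so the helper can be exercised without the package.
        from codecarbon import EmissionsTracker  # pip install codecarbon
        make_tracker = EmissionsTracker

    tracker = make_tracker()
    tracker.start()
    try:
        result = work()
    finally:
        # Always stop the tracker, even if the workload raises.
        emissions = tracker.stop()
    return result, emissions
```

A call might look like `model, kg = run_tracked(lambda: train(model))`, where `train` stands in for your own training function.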

Why Estimating Emissions Matters

The rise of large-scale ML models has sparked concern about their environmental cost. Even medium-scale GPU training can be energy intensive, yet many organizations don’t track these impacts. CodeCarbon’s team underscores that you must measure usage before you can optimize or reduce it.

- Links & Tools:

- NVIDIA SMI (for GPU power usage)

- Intel Power Gadget (for CPU monitoring on some setups)
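
To make "measure usage" concrete, the sketch below samples GPU power through the `nvidia-smi` command line and integrates the samples into kilowatt hours. It is an illustration of the general idea, not a description of CodeCarbon's internals. The function names are hypothetical, and `read_gpu_watts` only works on a machine where `nvidia-smi` is available.

```python
import subprocess


def parse_power_draw(output):
    """Parse `nvidia-smi --query-gpu=power.draw` output: one wattage per GPU per line."""
    watts = []
    for line in output.strip().splitlines():
        try:
            watts.append(float(line.strip()))
        except ValueError:
            # Some GPUs report a placeholder such as "[N/A]"; skip those lines.
            continue
    return watts


def read_gpu_watts():
    """Query the current power draw of every visible GPU (requires nvidia-smi)."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=power.draw", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_power_draw(out)


def energy_kwh(samples):
    """Integrate (timestamp_seconds, watts) samples with the trapezoidal rule.

    samples must be sorted by timestamp.
    """
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        joules += 0.5 * (p0 + p1) * (t1 - t0)
    return joules / 3.6e6  # 1 kWh = 3.6 million joules
```

In practice you would call `read_gpu_watts()` at a fixed interval during training, record `(time.time(), sum(watts))` pairs, and pass the list to `energy_kwh` at the end.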

Grid Power Intensity and Location-Aware Emissions

CodeCarbon can use local grid emissions data to map kilowatt hours consumed to CO2 output. Where you train your model matters: some regions rely on coal, others on hydro or nuclear. A model that emits a certain amount of CO2 in one area could be “cleaner” elsewhere simply because of the greener energy mix.

- Links & Tools:

- ElectricityMap / CO2 Signal (tracking real-time grid mixes)
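
The mapping from energy to emissions is a single multiplication: energy used (kWh) times the grid's carbon intensity (grams of CO2-equivalent per kWh). The sketch below shows how the same workload compares across two grids. The region names and intensity values are invented placeholders, not real grid data, and the function names are hypothetical.

```python
# Placeholder carbon intensities in grams of CO2-equivalent per kWh.
# These values are invented for illustration; real ones come from grid data providers.
EXAMPLE_INTENSITY_G_PER_KWH = {
    "hydro_heavy_region": 30.0,
    "coal_heavy_region": 800.0,
}


def emissions_kg(energy_kwh, intensity_g_per_kwh):
    """Convert energy use into kg of CO2-equivalent for a given grid intensity."""
    return energy_kwh * intensity_g_per_kwh / 1000.0


def compare_regions(energy_kwh, intensities):
    """Estimate the emissions of the same workload on each grid."""
    return {
        region: emissions_kg(energy_kwh, grams)
        for region, grams in intensities.items()
    }
```

With these placeholder numbers, a 100 kWh training run would be estimated at very different footprints depending only on where it runs, which is the point the guests make about location.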

Cloud Providers and Data Transparency

Estimating emissions on the cloud can be trickier, since actual data center efficiency and energy mix may differ from local averages. CodeCarbon currently relies on published region-level data or assumptions about the local grid. The guests encourage cloud providers to share more detailed stats so that estimates can be more accurate.

- Cloud Provider Regions: AWS, GCP, Azure all have varying degrees of published sustainability data.

Practical Ways to Reduce ML’s Carbon Footprint

Tactics like switching to low-carbon data center regions, employing early stopping, pruning large models, or using Bayesian search instead of brute-force grid searches all reduce emissions, and most of them save compute time as well. These optimizations bring both environmental benefits and lower compute costs.

- Links & Tools:

- Comet ML (Experiment management and hyperparameter tracking)
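
Two of these tactics are easy to sketch. The first function shows patience-based early stopping, which ends training once validation scores stop improving by a meaningful margin. The second counts how many training runs a brute-force grid search implies, which is the cost that smarter search strategies try to avoid. Both are generic illustrations with hypothetical names and default values, not code from CodeCarbon or Comet ML.

```python
from math import prod


def early_stopping_epochs(validation_scores, patience=3, min_delta=0.001):
    """Consume per-epoch validation scores (higher is better) until they plateau.

    Returns (epochs_run, best_score). Training stops after `patience`
    consecutive epochs that fail to beat the best score by `min_delta`.
    """
    best = float("-inf")
    stale = 0
    epochs_run = 0
    for score in validation_scores:
        epochs_run += 1
        if score > best + min_delta:
            best = score
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                break
    return epochs_run, best


def grid_search_runs(search_space):
    """Number of training runs a full grid search over search_space requires."""
    return prod(len(values) for values in search_space.values())
```

For example, a grid of 3 learning rates, 4 batch sizes, and 5 depths already means 60 full training runs, each with its own energy cost.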

Balancing Accuracy vs. Emissions

More compute time doesn’t always mean better results. Diminishing returns in model accuracy often set in well before resource usage skyrockets. CodeCarbon helps you identify when to stop training, or when further compute yields little performance gain relative to its environmental cost.

Open-Source Collaboration and Volunteer Contributions

CodeCarbon is an open-source project sustained by volunteers and a few sponsor companies. The team welcomes help in areas like collecting energy grid data, refining CPU measurements, improving data visualizations, and building a central API for large organizations to consolidate their runs.

- GitHub Issues: CodeCarbon Issues
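
Until a central API exists, consolidating runs could start from the per-run CSV logs that CodeCarbon writes. The sketch below totals emissions and energy per project. The column names (`project_name`, `emissions`, `energy_consumed`) are assumptions about the CSV layout and should be checked against your own output file; the function name is hypothetical.

```python
import csv
import io
from collections import defaultdict


def totals_by_project(csv_text):
    """Sum runs, emissions (kg), and energy (kWh) per project from CSV text.

    Assumes columns named project_name, emissions, and energy_consumed.
    """
    totals = defaultdict(lambda: {"runs": 0, "emissions_kg": 0.0, "energy_kwh": 0.0})
    for row in csv.DictReader(io.StringIO(csv_text)):
        entry = totals[row["project_name"]]
        entry["runs"] += 1
        entry["emissions_kg"] += float(row["emissions"])
        entry["energy_kwh"] += float(row["energy_consumed"])
    return dict(totals)
```

You could feed it the contents of one log file, or concatenate the rows from several machines first, to get a team-level view.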

Going Beyond CSV: Future Reporting and Dashboards

Currently, each training run logs to a CSV file. In the future, CodeCarbon aims to provide an API and dashboards for entire teams or departments, showing aggregated footprints across multiple runs and experiments. This centralized tracking could drive data-driven environmental initiatives company-wide.

Transparent Offsets vs. Actual Reductions

Many companies claim “carbon neutrality” through offsets, but that doesn’t mean zero carbon was emitted upfront. The CodeCarbon discussion stresses direct reduction—through better hardware choices, code efficiency, or greener power—rather than relying entirely on offset programs.

Industry Impact and Collective Responsibility

While single developers can choose better coding practices or region selection, structural change from hardware manufacturers, cloud providers, and open-data policies is also needed. Projects like CodeCarbon hope to drive broader awareness and accountability in the ML industry.

Interesting Quotes and Stories

"If you want to improve it, you must measure it before." — Boris Feld

"It's not just because a company claims carbon neutral... it doesn't mean no carbon was emitted." — Victor Schmidt

"CO2 offset is good, but not emitting in the first place is even better." — Boris Feld

Key Definitions and Terms

- CodeCarbon: A Python library to measure the estimated power usage and resulting carbon emissions during ML training or similar compute-intensive tasks.

- Grid Search: A brute-force hyperparameter search method that can lead to excessive training runs and higher emissions.

- Early Stopping: A technique that halts training once the model stops improving significantly, saving time and energy.

- RAPL: (Running Average Power Limit) – An Intel interface for reading estimated CPU power usage.

- ElectricityMap / CO2 Signal: Services that provide real-time or historical carbon intensity data for different geographic regions.
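
To make the RAPL entry more concrete: on Linux, RAPL energy counters are typically exposed through the powercap sysfs tree as a running total in microjoules. The sketch below turns two readings into average power, allowing for the counter wrapping around. The path shown is the usual location for CPU package 0 but is an assumption about your system (it may be absent or need elevated permissions), and the function names are hypothetical.

```python
from pathlib import Path

# Usual powercap location for CPU package 0 on Linux; an assumption, not guaranteed.
RAPL_DIR = Path("/sys/class/powercap/intel-rapl:0")


def read_counter(name, rapl_dir=RAPL_DIR):
    """Read an integer counter such as energy_uj or max_energy_range_uj."""
    return int((rapl_dir / name).read_text())


def average_watts(start_uj, end_uj, seconds, max_range_uj):
    """Average power between two energy_uj readings taken `seconds` apart."""
    delta_uj = end_uj - start_uj
    if delta_uj < 0:
        # The counter wrapped around between the two readings.
        delta_uj += max_range_uj
    return delta_uj / 1e6 / seconds  # microjoules -> joules -> watts
```

A measurement would read `energy_uj`, wait a known interval, read it again, and pass both values along with `max_energy_range_uj` to `average_watts`.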

Learning Resources

If you are completely new to Python, consider starting with Python for Absolute Beginners. It covers foundational skills needed to follow along with the Python-based CodeCarbon library.

For those looking to strengthen ML and data science practices in Python, check out Data Science Jumpstart with 10 Projects. You’ll build practical skills that align well with applying CodeCarbon to real-world data science pipelines.

Overall Takeaway

CodeCarbon is a vital tool for uncovering and reducing machine learning’s hidden carbon footprint. By integrating straightforward Python tracking code, developers and researchers can make more informed decisions, optimize training loops, and hopefully drive an industry-wide shift toward greener AI. The team’s commitment to open-source collaboration, combined with forward-thinking practices like location-aware training and refined hyperparameter searches, demonstrates that sustainability in machine learning is an achievable goal—one measured line of code at a time.

Links from the show

Victor Schmidt: @vict0rsch

Jonathan Wilson: haverford.edu

Boris Feld: @Lothiraldan

CodeCarbon project: codecarbon.io

MIT "5 Cars" Article: technologyreview.com

Green Future Choice Renewable Power in Portland: portlandgeneral.com

YouTube Live Stream: youtube.com

Episode #318 deep-dive: talkpython.fm/318

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript

00:00 Machine learning has made huge advancements in the past couple of years.

00:03 We now have ML models helping doctors catch disease early.

00:06 Google is using machine learning to suggest routes in their Maps app that will lessen the amount of gasoline used in a trip, and many more examples.

00:13 But there's also a heavy cost for training these machine learning models.

00:17 In this episode, you'll meet Victor Schmidt, Jonathan Wilson, and Boris Feld.

00:21 They work on the Code Carbon project together.

00:24 This project offers a Python package and dashboarding tool that will help you understand and minimize your ML model's environmental impact.

00:32 This is Talk Python To Me, episode 318, recorded May 19th, 2021.

00:50 Welcome to Talk Python To Me, a weekly podcast on Python, the language, the libraries, the ecosystem, and the personalities.

00:56 This is your host, Michael Kennedy.

00:58 Follow me on Twitter where I'm @mkennedy.

01:00 And keep up with the show and listen to past episodes at talkpython.fm.

01:04 And follow the show on Twitter via @talkpython.

01:07 This episode is brought to you by Square and us over at Talk Python Training.

01:12 Please check out what we're offering during our segments.

01:14 It really helps support the show.

01:16 When you need to learn something new, whether it's foundational Python, advanced topics like async, or web apps and web APIs,

01:23 be sure to check out our over 200 hours of courses at Talk Python.

01:27 And if your company's considering how they'll get up to speed on Python, please recommend they give our content a look.

01:33 Thanks.

01:34 Boris, Victor, Jonathan, welcome to Talk Python To Me.

01:38 Thanks for having us.

01:39 Glad to be here.

01:40 It's great to be here with you.

01:41 Welcome, everyone.

01:42 You all are doing really important work, and I'm super excited to talk to you about it.

01:45 So we're going to talk about machine learning, how much carbon is being used for training machine learning models, and things like that.

01:54 And some cool tools you built over at CodeCarbon.io and that collaboration you got going on there.

01:59 But before we get into all those sides of the stories, let's just start with yours.

02:04 Jonathan, you want to go first?

02:05 How did you get into Python?

02:06 Sure.

02:06 Yeah, thanks for having us, Michael.

02:07 My name is John Wilson.

02:08 I'm an associate professor of environmental studies at Haverford College.

02:11 I'm actually an environmental scientist.

02:13 So I was brought in to kind of consult from the environmental side of this project.

02:17 But I have a secret history as a computer science undergraduate back in the dark ages, learning to code on C, C++, and Java.

02:25 And yeah, so I was brought in to kind of like provide that environmental perspective on the project.

02:30 And, you know, having a little bit of a coding background, you know, despite how rusty it is, has been pretty helpful at thinking some of these connections through between computational issues and environmental issues.

02:41 Yeah, I can imagine.

02:41 Did you find Python to be pretty welcoming, given like a C background and stuff?

02:46 Oh, gosh, yeah.

02:46 I mean, you know, I learned back in the bad old days of, not bad old days, I shouldn't say that, scheme, things like that, you know, a little bit more challenging, you know, where it derives.

02:54 Yeah, that's one of the first languages I had to learn for like a few CS classes I took.

02:58 I was like, we're going to start with scheme, like anything with this.

03:01 Give me something mainstream, please.

03:02 Yeah, sometimes I feel like, you know, the sort of like older person, you know, telling the kids these days about how, you know, we had to walk uphill both ways in the snow to learn programming.

03:11 And it's much, much easier.

03:12 And it's one of the things I like about Python is it's really accessible to people from different fields.

03:17 You know, you get into it from aspects of natural sciences, but even people who are like in the digital humanities are using it to, you know, for language processing and things like that.

03:25 It's super flexible, which is really neat.

03:27 Yeah, it's really impressive what people in those different fields are doing, how they can bring that in.

03:31 Boris, how about yourself?

03:32 How did you get into Python?

03:33 I actually discovered Python during my master's degree.

03:36 And I got a math teacher that introduced us to Python because they use Python for his own thesis.

03:43 And I had one stuff to do, which was implementing RSA encryption.

03:47 And I didn't want to do it because math was not my forte.

03:51 So instead, I did some encryption inside images in Python.

03:55 And I fell in love with Python.

03:57 Oh, fantastic.

03:58 Victor?

03:58 I discovered this taking a data science class in, when was that?

04:04 2014, I think it was.

04:06 That's really, I really entered Python through the data science perspective.

04:11 And then I took a course in general web development.

04:15 And we used Django.

04:16 Oh, right.

04:16 Nice.

04:17 And so it's been, yeah, so it's been really like the main language I've been using.

04:21 I was taught CS with Java and so on.

04:24 So I was never a computer science fan.

04:28 Like, I liked math.

04:30 And I found Python to be really flexible in that regard.

04:33 Like, you can do math super easily without, like, getting lost in translation.

04:36 Yeah.

04:37 One of the things that just came out is one of the new Texas Instruments, the TI-84 calculator.

04:42 They have a, you can now program it with Python.

04:44 So that's kind of interesting now that it's one of the old calculators that everyone's probably used going through math and whatnot.

04:53 Is now sort of, you know, more in the modern space.

04:56 I think my first program was on my TI because I was bored in math course.

05:01 And I cannot follow.

05:02 So instead, I wrote some programs.

05:04 Yeah.

05:04 That's not a terrible way to spend your time.

05:07 All right.

05:07 So let's go ahead and talk about the main, you know, get to the main subject here.

05:12 So let's start by just setting the stage.

05:15 There's an interesting article here that came out.

05:18 This is not even that new.

05:19 It's, you know, 2019.

05:20 And it's in the MIT Technology Review.

05:23 So, you know, that gives it probably a little more than just some random blog says this.

05:28 And it's got a picture, a big picture of a data center.

05:30 And the title of the article by Karen Hao is, Training a Single AI Model Can Emit As Much Carbon As Five Cars Throughout Their Lifetime.

05:40 So that sounds pretty horrible.

05:42 But we also know that machine learning has a lot of value to society, a lot of important things that it can do.

05:48 So here's where we are.

05:50 And this seems like a good place to start the conversation for what you all are doing.

05:55 I have a mixed feeling about this article.

05:57 I think one of the great things it did is raise a lot of attention and awareness about this topic.

06:03 I think a lot of approximations were made.

06:06 And the goal here is not to criticize authors, but rather to say that in the meantime,

06:12 things have evolved and our understanding has, I think, been a little more precise.

06:16 Well, maybe because people are building tools that measure it instead of estimate.

06:20 So there's that, definitely.

06:22 And we hope that helps.

06:23 But it's also one of the things you need to put in perspective is that the kind of model they're looking at is not necessarily your everyday model.

06:31 Someone can just train on their local computer or even in academia.

06:36 It's hard to get your hands on such large clusters and the number of GPUs used and so on.

06:43 So like, I just want to put that in perspective that even if those numbers were accurate,

06:48 and they are not, but I mean, it's a ballpark.

06:51 It's not like every data scientist you'll meet and every AI researcher you'll meet is going to have something in that level of complexity that they train every day.

07:01 Right.

07:02 So I have over here a sim racing setup for some sim racing I do, and it has a GeForce 2070 in it.

07:10 It would have to run a very long time to emit this much carbon, right?

07:14 Like, you got to have the necessary money and compute resources to, you know, even get there, right?

07:21 Yeah.

07:21 Like the number, I don't remember exactly the setup that they're looking at in this paper,

07:26 but like typically the modern language models that you hear about, like, oh,

07:32 OpenAI has a new NLP model and like GPT-3, and it's that number of billion of parameters.

07:38 Like, they train those things on hundreds and hundreds of machines for a very long time.

07:43 This is not something you can do easily.

07:44 It costs millions of dollars in investment upfront, and then just using those things is super expensive.

07:52 So while I think we should be careful, it's not like the whole field is like that.

07:56 Yeah, that's a very good point.

07:57 I recall there was some cancer research that needed to answer some big problem,

08:02 and there was an article where they spun up something like 6,000 virtual machines across AWS clusters for an hour

08:10 and had it go crazy to answer, you know, some protein folding question or something like that.

08:15 That would use a lot of energy, but it's extremely, extremely rare as well.

08:18 On the other hand, you know, if you created that model and it solved cancer, well, you know,

08:23 people drive cars all the time for less valuable reasons than, you know, curing cancer.

08:27 Yeah, and I think just to build on what Victor said, you know, I think there was something really valuable about this article coming out.

08:32 You know, I think for a long time, there's been attention that's been paid to the sort of environmental toll of supply chains in computing.

08:39 You know, people have talked a lot about where minerals come from.

08:42 Yeah, and one of the things that was really interesting about this article was, you know, with the approximations,

08:48 it got people thinking about the question that kind of animates our collaboration,

08:52 which is when you're doing any kind of energy-intensive computational issue,

08:56 you might want to think about where your electrons come from.

08:59 You know, what's actually powering the hardware that you're using to do this?

09:04 And I think this article did a really nice job of, like, focusing attention that there are some really energy-intensive projects

09:09 that in particular ways, particularly if they're located in particular locations,

09:14 they can have a really large environmental cost that isn't really transparent to the user or the person training the model.

09:20 Yeah, yeah.

09:21 Well, I don't want to go down this road just yet.

09:23 I want to keep talking about the more high-level a little bit.

09:26 But, you know, if the people who did this very expensive model, if they just said, I'm going to pick the closest AWS data center to me,

09:32 that rather than, let me find the data center by just flipping a switch and say,

09:39 no, no, maybe the one up in Prineville, Oregon, by the dam, would be better than the one by the coal factory, for example, right?

09:47 Like, that's something they could easily do, and it maybe doesn't change anything for them, right?

09:51 Yeah, it's not always the case, because when you have, for example, health data and so on,

09:54 there are legislations, but definitely, like, if you can, and there's more than just the money at stake here,

10:02 and it's probably going to be a marginal change, because the prices tend to be not equal, but kind of uniform still.

10:09 I think it's another decision item.

10:12 Yeah.

10:12 Boris, you got any thoughts on this article before we move on?

10:15 Yeah, I think it came with a good, to highlight a serious issue, and if people want to improve it, as most of my manager told me,

10:26 if you want to improve it, you must measure it before.

10:28 So we are there to give a start of an answer, and give them actions to improve the carbon emissions of training models.

10:39 Hopefully to not train less models, but train better models.

10:44 Yeah.

10:44 I think, for example, this is part of what the recent Google paper, led by David Patterson,

10:50 on training neural networks and their environmental impact.

10:54 This is one of the things they say, and it's quite a dense paper, and a lot of metrics and so on.

11:00 But one of the things they say is, if you don't want people caricaturing your numbers and putting approximations out there,

11:06 well, you'd better publish those numbers yourself, right?

11:09 And you need tools to do that.

11:11 So if you're Google or if you're close to the infrastructure that you use, it's easier.

11:17 It's even better if you have access to the plugs.

11:20 But that's not the case of everyone, right?

11:22 Right.

11:22 So you're saying if you could put something to actually measure the electricity going through the wire,

11:27 instead of some approximation, you're in a better place to know the answer.

11:30 Yeah, definitely.

11:32 That's where I think we might be a little ahead of your schedule, but we might go there still.

11:38 Which is like, that's where Code Carbon comes into play, right?

11:41 This is why we want to create this tool.

11:43 This is a user-facing product, right?

11:46 And I think it's very important to highlight that.

11:48 It is not intended to be the solution for a data center.

11:53 This is not something that we think should be deployed as a cloud provider,

11:58 or if you own your own infrastructure, if you want to have centralized numbers, there are alternatives out there.

12:04 Yeah.

12:04 Things like Scaphandre.

12:06 I don't know how you say that.

12:08 It's a French word.

12:08 Anyway, it's out there on GitHub.

12:10 You can find it by just looking this way.

12:11 But the goal here is, as a user, what can you do if you don't have those numbers?

12:15 Do you do nothing, or do you try to at least have the start of an estimation,

12:22 and maybe start the conversation with your organization or your provider?

12:26 Yeah, fantastic.

12:27 And you guys are putting some really concrete things out there for Python developers.

12:31 Two quick high-level comments.

12:33 One, Corey Adkins from the live stream says, would it be the same or worse for quantum computers?

12:39 Okay, I'm going to talk out of my depth here.

12:41 So the first, the best answer I can use, I don't know.

12:45 And then to go beyond that, my understanding of quantum computers is that they do very different

12:51 things.

12:51 And you can't just compare the computations made on classical computers with the things

12:57 quantum computers are intended for.

12:59 I think intrinsically, because of the state of that technology, it is extremely energy intensive,

13:05 just because you usually have to pull things down to a few millikelvins or something like that.

13:10 So that may be transitory.

13:13 I'm not sure.

13:14 I don't know about that either.

13:15 I was thinking the same thing.

13:16 You know, just yesterday, Google had Google I/O.

13:19 And they talked about building clusters of qubit sort of supercomputer type things.

13:25 And apparently, they've got to cool it down so much that it's some of the coldest places in the

13:31 universe inside those.

13:32 So on one hand, if quantum computers can do the math super quick, it doesn't take a lot of time

13:37 to run them to get the answer.

13:38 But on the other, if you've got to keep them that cold, that can't be free.

13:41 But it's a very particular kind of math, right?

13:44 And not all problems are translatable from the classical formulation to a quantum compatible

13:51 formulation.

13:52 I'm not sure.

13:53 I think there are problems that we can solve easily on our classical computers that would be

13:57 very hard, if not theoretically impossible to run on quantum computers.

14:01 And it's like, it's a different tool and it's not intended for the same problems.

14:06 So I think it's hard to compare.

14:08 Yeah.

14:08 You will still have some parts of the model training that won't run on quantum computers

14:14 because that doesn't make sense.

14:15 Like pre-processing data, getting data from your different data source, mapping them to a

14:21 common format, exporting your model, creating Docker images, servers.

14:25 There will still be part of the model training process that won't run on those quantum computers.

14:33 This portion of Talk Python To Me is brought to you by Square.

14:37 Payment acceptance can be one of the most painful parts of building a web app for a business.

14:42 When implementing checkout, you want it to be simple to build, secure, and slick to use.

14:47 Square's new web payment SDK raises the bar in the payment acceptance developer experience and provides a best-in-class interface for merchants and buyers.

14:57 With it, you can build a customized, branded payment experience and never miss a sale.

15:02 Deliver a highly responsive payments flow across web and mobile that integrates with credit cards and debit cards,

15:08 digital wallets like Apple Pay and Google, ACH bank payments, and even gift cards.

15:13 For more complex transactions, follow-up actions by the customer can include completing a payment authentication step,

15:20 filling in a credit line application form, or doing background risk checks on the buyer's device.

15:26 And developers don't even need to know if the payment method requires validation.

15:29 Square hides the complexity from the seller and guides the buyer through the necessary steps.

15:34 Getting started with a new web payment SDK is easy.

15:37 Simply include the web payment SDK JavaScript, flag an element on the page where you want the payment form to appear,

15:43 and then attach hooks for your custom behavior.

15:45 Learn more about integrating with Square's web payments SDK at talkpython.fm/square,

15:51 or just click the link in your podcast player's show notes.

15:54 That's talkpython.fm/square.

15:56 Another thing I think it's worth pointing out is it's the training of the models that is expensive,

16:04 but to use them to get an answer, it's pretty quick, right?

16:07 That's pretty low cost.

16:09 It depends on what you're using it for, right?

16:11 So if you have a user-facing model that's going to serve thousands of requests per second,

16:16 then deploying it for a year might be more energy intensive than training it for three days.

16:24 We all know the machine learning lifecycle is not just like you train one model and you succeed and well,

16:28 hooray, right?

16:30 You usually have a lot of iterations, building the models, looking for hyperparameters and so on.

16:35 But even if that takes six months and your model stays online for months or years and serving thousands of people,

16:42 it's not obvious.

16:43 It might even be worse, not worse, more energy intensive.

16:48 Yeah, I guess it depends how many times you run it.

16:51 Another thought, you know, there's a lot of places creating models, like you talked about,

16:55 was it GP3, GPT-3, and whatnot, that are training the models and letting people use them.

17:01 Do you see that as a thing that might be useful and helpful is having these pre-created, pre-trained models?

17:07 Like I know Microsoft has a bunch of pre-trained models with their cognitive services,

17:11 and Apple has their ML stuff like baked into their devices that you don't have to train, you can just use.

17:16 Are the problems being solved and the data being understood usually too general,

17:20 or is that something we can make use of?

17:22 I think pre-trained models are the advantage to keep, as you are training a model once,

17:29 the cost emission of the model during training is amortized for each usage.

17:35 So the more user you have, the more part of the training emission is low.

17:40 But usually you still have to tune the model a bit, so you still have to train it,

17:47 and you're using energy even for prediction.

17:50 So yes and no.

17:51 I'm going to also do the transition to throw the ball to Jonathan for something I think we shouldn't forget

17:57 when we talk about these gains in efficiency, which is like Jevons paradox,

18:01 and the fact that if you create something that is cheaper to use, and more people use it,

18:07 then the overall impact is lower.

18:10 And I think this is something we tend to forget when we talk about massive improvements,

18:15 or not even massive, just like this is something that is, I think, hard to grasp and anticipate

18:21 when you think about technological advances under the constraint of climate change.

18:25 But this rebound effect is something we should plan for and not just think, well, if you have cheaper models,

18:32 but more people can use them, I think it's not that obvious that it's an overall gain in terms of energy.

18:38 Like then you can talk about all the societal consequences and the advances in cancer research.

18:44 It's really hard to have a definitive answer.

18:46 Yeah, just to build on what Victor said, it really is a difficult, I mean, this is a classic environmental conundrum, right?

18:52 When, you know, the classic example of the Jevons paradox is, you know, adding more roads leads to more traffic

18:59 because more people believe that there's more space for them to drive.

19:02 And so you've seen this over and over again in all sorts of different contexts, that when you build these tools,

19:06 more people will use them and that can end up costing more than not building them in the first place.

19:12 So I think this is something to really be aware of, you know, as we're democratizing these kinds of tools.

19:16 There's a real pro here.

19:18 There are some real strengths of having these tools, you know, easily accessible and that can be used.

19:23 But one has to worry about the potential costs of, you know, having all these tools being employed,

19:30 in particular being employed in all sorts of different kind of sub-energy grids around the world.

19:34 Not all grids are, you know, connected up to solar panels.

19:37 You know, many are connected to coal-fired power plants and that can outweigh the cost.

19:42 Yeah, we can hope, but it's not today, is it?

19:45 Yeah, not yet, not yet.

19:46 One would hope, but maybe soon.

19:48 One last thought about pre-trained model is usually they are trained for more diverse usage.

19:54 So I would think that they tend to be larger than model trained, especially for a single usage inside a single company with a single type of data.

20:04 So I would say they are likely bigger, so they use more energy to train and to use, but by how much, I couldn't say.

20:11 Yeah.

20:12 Well, I think the paradox that you all are speaking about, you know, one of the ways we could see that is just the ability to use machine learning to solve problems is so much easier now.

20:21 That what used to be a simple if-else runs in a microsecond is now a much more complicated part of your program.

20:28 And so, yeah, there's got to be just a raising of the cost there.

20:32 Now, before we make it all sound like machine learning bad for the environment, 100%, there are good things, you know.

20:39 Google, like I said, Google I/O was yesterday, and they were talking about doing the navigation so that taking into account things like topography, speed, and whatnot, to actually try to optimize, minimize gas consumption with the directions they give you, right?

20:56 And if they could do that with a little bit of computer code to save a ton of CO2 out of cars, like that's a really big win.

21:04 More ML.

21:04 Definitely.

21:05 And I think the reason why we're like, we have started with the online carbon emissions on our side at Mila in Montreal, and Jonathan and colleagues at Haverford with energy usage, and then we came together for Code Carbon.

21:19 It's not to say that machine learning is bad, like just as most technologies, it's technology, and it depends on how you use it.

21:27 But where we're going as societies under the constraint of climate change can't leave any field out of questioning themselves of how they use their resources.

21:37 So, yeah, it's something you can't leave out of the picture, which doesn't mean that you can't use it.

21:43 It's like you have to think about it, and we can't have a single rule for everyone.

21:48 It's just you have to take that into account, and you can very well make the decision that it is worth it.

21:53 In many cases, it will be.

21:54 Sometimes, maybe not.

21:55 But be conscious of it.

21:56 Yeah, for sure.

21:57 All right.

21:58 So, I think that brings us to your project, Code Carbon.

22:01 You mentioned it a couple of times.

22:03 So, looking in from the outside, it seems to me like the primary thing you guys have done is you've built some Python libraries, a Python package that lets you answer these questions and track these things, right?

22:16 And then a dashboard and data that will help you improve it.

22:19 Is that a good elevator pitch?

22:21 Very good.

22:21 Fantastic.

22:22 All right.

22:23 So, tell us about Code Carbon.

22:24 Do you want to work for us?

22:25 Yeah, sure.

22:26 I'm not busy enough yet.

22:28 We don't have any money, though.

22:29 Oh, good.

22:30 No, but I think it's a great cause.

22:31 And so, tell everyone about it.

22:33 Thank you for the opportunity.

22:34 I think one of the reasons we came together was like, we all know that in the machine learning lifecycle, a lot of the computations you just forget about because there are so many experiments that you run.

22:46 Like, say you have a project and you're going to work on it for like 3, 6, 12 months.

22:50 How many experiments are you going to run?

22:52 How many hyperparameter searches are you going to run?

22:54 It's a very important problem.

22:57 I think this is also something that is central to Comet.ml, which is the company where Boris works.

23:03 They manage experiments.

23:05 And I use that in my daily work.

23:09 And I thought, well, we need something similar to track the carbon.

23:11 It can't just be about the metrics.

23:14 It can't just be about the images you generate because you're training a GAN, for instance.

23:19 So, how do we go about this?

23:21 And, well, because Python was, I think, the go-to language for AI research and development also.

23:29 Although, in very optimized settings, you might want to go away from that.

23:32 But we thought, well, we need to do something that is going to be plug and play.

23:36 So, it has to be Python.

23:37 It has to run in the background.

23:39 It has to be light.

23:41 And it has to be also something that is versatile in that it is not only about you're just getting yet another metric, but it's also about understanding what it means.

23:52 It's about also education.

23:55 It's about education for yourself, but also maybe for other members of your organization.

23:59 The people who might say, like, say you work in a company and you're thinking, well, I have hundreds of data scientists.

24:06 Like, this is not marginal.

24:07 I want to have an estimation.

24:09 And if estimation is not good enough for you, well, contact your provider and maybe have a wattmeter plugged in somewhere where it matters.

24:16 It's not my expertise.

24:17 But that's basically the idea.

24:19 Yeah, yeah.

24:19 Well, I think plugging in a wattmeter somewhere, that used to be a thing that you could do.

24:24 But now it's Amazon or Azure or Linode or whoever.

24:29 They're not going to let you go plug it into their data center.

24:33 And if you did, there's probably a bunch of other things happening there, right?

24:35 Direct access to the compute resources is just hard to come by.

24:39 Yeah, exactly.

24:40 This is a very big constraint for us.

24:42 I think I expect we'll get into a little more details about that.

24:45 But this is why you need to understand carbon as a tool to estimate things and have approximations.

24:50 And we use heuristics.

24:52 And basically, if a consultant is having you pay for carbon offsets based on those kinds of numbers, you shouldn't pay.

24:59 Because that's not the point.

25:00 Yeah, it's really about giving you the information.

25:02 One of the things I like about what you're doing is you can recommend other areas.

25:07 Like we talked about, like you could switch to this data center.

25:09 And then it would have this impact, right?

25:11 Yeah, I think it's part of the educational mission.

25:13 It's like we all know, or at least I wish we all knew or we want everyone to know.

25:18 I don't know how to put that.

25:19 But that climate change is a very serious threat.

25:23 And being conscious about your energy usage and your consumption of resources in general is one thing.

25:32 It's very important.

25:32 But then I think you can't just leave people out there with that feeling of guilt.

25:36 And there has to be actionable items.

25:39 Changing your region is probably the easiest thing you can do, especially in the age of the cloud.

25:44 And at a time when basically moving your data across continents is about picking a few checkboxes on a web interface.

25:54 Yeah.

25:54 I think just to pick up a little bit on what Victor has said here is that the educational part of this is a very important part of the Code Carbon project.

26:01 Because we know as we have been involved in this, that answering this question, like what's the CO2 footprint of my computational work, is actually a very, very difficult question to answer.

26:11 And it's opaque for a variety of reasons.

26:14 It's opaque because the way that the energy industry deals with CO2 emissions is pretty opaque, unless you know the language of how they express this.

26:23 And it's also difficult to understand when you are able to make the calculation, what does that mean?

26:29 What's one gram of CO2 emitted relative to, say, everyday activities?

26:34 So one of the things that we've tried to do as part of this dashboard is simplify those two steps for people.

26:39 Because we've been approached by people via email, via Slack, and we know we're not the only people concerned about this.

26:45 And so this is just a way to help make these approximations both visible, but also kind of comprehensible and put it in the context of human activities.

26:56 You're right.

26:56 There's a lot of layers and, you know, companies that run the clouds, they are trying to be more responsible with their energy.

27:03 But you don't know.

27:04 A lot of times you don't know if this data center, us-east-1 in AWS, how much energy from different sources is that actually using?

27:14 How much have they actually built their own solar and wind?

27:18 We don't know, right?

27:19 But you get a better sense using your tool.

27:21 You've got better data than the random person who just kind of estimates, well, they're doing some stuff, so it must be fine.

27:27 Another important thing I think is, and Jonathan is much more an expert in that than I am, but not emitting is very different from offsetting in whatever way, RECs or whatever.

27:39 Yeah.

27:40 Your emissions and our atmosphere, our climate has inertia.

27:44 And the expected compensation in 5, 10, 20 years of your current emissions.

27:51 Those are two very different things, right?

27:54 And it's much easier to put carbon in the atmosphere than taking it away from it.

28:00 And so I think it's not just because you read Google and others, Microsoft are carbon neutral, which comes from compensations of many forms.

28:10 It doesn't mean no carbon was emitted, right?

28:13 Yeah, there's just to build on Victor's point again, you know, there's decades of research in sort of what you'd call, you know, environmental psychology, explaining to people the consequences of inaction or, you know, of diffuse environmental costs to a particular action causes long-term behavior change.

28:29 And I think, you know, one of the things that's been really exciting about seeing the, you know, the machine learning and AI communities kind of like grapple with this question in a very public way is we've started to see articles of, you know, pressure being put on organizations to why don't we have more green energy infrastructure, you know, undergirding our work.

28:48 And so, you know, the speed at which this has become, you know, a public conversation is really heartening, you know, as somebody who's been working in the environment for quite a bit of time.

28:59 Yeah, I would say it does seem to be getting a lot of attention, which is good.

29:02 It's a big problem.

29:03 But attention instead of just head in the sand is a really big deal.

29:06 Like we've been driving cars for a long time.

29:08 We've been flying planes for a long time.

29:10 There's a lot of like raised trucks with super big wheels with, you know, dual coal stack pipes on them, right?

29:17 Like that's, I can't speak for everyone that gets a truck like that.

29:20 But I feel there's a lot of times when we have these conversations, people are just like, well, this is so horrible and so vague that I'm just going to live my life and enjoy it.

29:29 Because I seem to not be able to do anything anyway.

29:31 So I might as well have fun instead of not have fun while things are going wrong, right?

29:36 Like that's kind of that psychology, right?

29:37 And so I don't know.

29:38 How do you all deal with that?

29:39 It's also something that like when it's not before your eyes, it's much more difficult to answer, to understand and to respond to.

29:47 And I think this is part of what we're seeing today with decades of activism is like facts, hard facts are not enough to convince humans.

30:00 And then to some extent, it's also a good thing.

30:02 And it has, I think, value in our recognition.

30:06 But it also has this downside that it's just because you say a number to someone, if they don't understand it, if they don't see it for themselves in their everyday life, it's going to be very hard to understand.

30:18 So until you have used something like Code Carbon, like I code every day, I train models every day, and I train a model for five days on a GPU in Quebec.

30:29 That's my daily life, basically.

30:31 And some might find it dull.

30:33 I like it.

30:34 Anyway, that's not the point.

30:36 I mean, until you have that and you're like, oh, this is what I do.

30:39 Those numbers start to make sense.

30:41 Even if you have numbers for your day to day, that doesn't seem that much, like thousands of grams or kilograms.

30:48 But once you sum all the emissions for all the models trained, for all the machine learning teams, for all the machine learning data scientists, for a company for a year or even for academia, that starts to get, depending on your size of the company, of course, that starts to get sizable.

31:06 And you might want to take a serious look at it.

31:09 Yeah.

31:09 I want to dive into the code and talk about this.

31:13 But I guess maybe speak really quickly to, I work at a company.

31:17 We make shoes.

31:18 They want me to use ML to figure out how to get better behaviors out of the materials for track runners or whatever.

31:26 So I do that.

31:26 That company, how do I get that company to say, yes, we should measure our scientific data science work and we should offset it?

31:35 There's a lot of layers between the people who care about shoes and sales and people who care about machine learning carbon offsets.

31:43 My personal understanding of this situation is that empowering individuals with tools and numbers to convince organizations is part of our mission.

31:53 So if the person in charge, whatever their role in the organization, thinks that in order to have an estimation of their carbon impact, they have to find a consulting firm, pay people for five weeks.

32:08 If they think this is the process, they're going to be reluctant.

32:11 And I can understand why.

32:14 If you have a plug and play tool that even you as the evangelizer, if that's a word, you get the idea.

32:22 Yeah.

32:23 It doesn't cost you much to try.

32:24 The way we want to build this thing is just like one import and four lines.

32:29 Yeah.

32:29 So, yeah.

32:30 So let's talk about the code.

32:31 I think maybe the answer, maybe an approach that you could have there is we'll run something like this on all of the training that we do.

32:38 And then we're going to report up: our division in this company generates this much carbon.

32:43 So if you care about carbon, you need to take that into account.

32:45 I think that's a good selling point.

32:47 I think as we can see today together, I mean, those conversations are hard and long and it's not easy to understand all that matters.

32:56 And you may need that consulting firm in the end to help you understand what's at stake in your whole value chain.

33:02 You got to start somewhere.

33:04 Right.

33:04 And if you're an individual and you want to change your organization, well, I think if you want to have an impact, those kinds of tools should be easy to start with.

33:13 And then, as we've said, it's not enough and it's not precise enough.

33:16 And then there are other steps you can take.

33:18 But you need to get the conversations going and started.

33:22 Yeah.

33:22 And to start that, you got to start measuring.

33:24 So in order to do that, let's talk about the code.

33:26 It's literally four lines of code.

33:28 It's all you got to do.

33:29 So you pip install codecarbon and then from codecarbon import EmissionsTracker.

33:34 Create one.

33:35 tracker.start().

33:36 Do your training.

33:36 tracker.stop().

33:37 And that's it, right?

33:38 Right.

33:39 And with the decorator solution, it's even two lines of code.

33:42 Yeah.

33:44 With the decorator, you can just put a decorator on a function.

33:46 And then basically any training that happens during that will be measured and then saved to a CSV file.

33:53 Right?

33:53 That's correct.
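
The start/stop and decorator usage described above can be sketched roughly as follows. This is a minimal sketch, assuming the `codecarbon` package is installed and that `EmissionsTracker` and `track_emissions` behave as in its public documentation; `train_with_tracking` and `tracked` are hypothetical helper names introduced only for illustration.

```python
def train_with_tracking(train_fn):
    """Run train_fn() between tracker.start() and tracker.stop()."""
    # Imported lazily so this module still loads where codecarbon is absent.
    from codecarbon import EmissionsTracker  # pip install codecarbon

    tracker = EmissionsTracker()
    tracker.start()
    try:
        train_fn()
    finally:
        # stop() returns the estimated emissions in kg of CO2-equivalent;
        # with default settings the run is also logged to a CSV file.
        emissions_kg = tracker.stop()
    return emissions_kg


def tracked(train_fn):
    """Decorator form: every call to the returned function is measured."""
    from codecarbon import track_emissions

    return track_emissions(train_fn)
```

Calling `train_with_tracking(my_training_function)` returns the estimate for that one run, while `tracked(my_training_function)` gives back a wrapped function that is measured each time it is called.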

33:54 And if you think a context manager should be implemented, well, you're welcome to create a PR.

33:59 It's going to be super easy.

34:00 Everything is already there.

34:01 Yeah, exactly.

34:02 I can already see it in my mind: with EmissionsTracker(), you know, as tracker.
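
The context-manager idea mentioned here could look something like the sketch below. It is written generically for any tracker object exposing `start()` and `stop()`, and it is an illustration rather than CodeCarbon's own implementation; the name `tracking` and the `result` dictionary are assumptions.

```python
from contextlib import contextmanager


@contextmanager
def tracking(tracker):
    """Start the tracker on entry and always stop it on exit."""
    result = {"emissions_kg": None}
    tracker.start()
    try:
        # The with-body (the training code) runs here.
        yield result
    finally:
        # Runs even if training raises, so the measurement is not lost.
        result["emissions_kg"] = tracker.stop()
```

Usage would then read `with tracking(EmissionsTracker()) as result: train()`, with the estimate available in `result["emissions_kg"]` afterwards.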

34:07 So it creates one of these CSV files and then what?

34:10 So there are a bunch of things that happen.

34:12 If you want to, like, the two big steps are, one, you look for the hardware you understand,

34:19 like Code Carbon understands, then you track those, you measure the energy consumed, right?

34:26 And so you have that, basically, you measure the energy.

34:29 And then the next step is, well, how much carbon has that energy emitted?

34:35 How was that energy produced?

34:36 And so you need to map the code to your location.

34:39 Right.

34:39 And do you do that by just, like, a get location from IP address type of thing or something like that?

34:45 So you can either do that or provide the country ISO code. For a couple of countries, Canada, the US,

34:53 we have regions below the national level.

34:55 Another thing that you can actually do is, well, it's not going to help you with the location,

34:59 but it's going to help you with the carbon impact, is we can ping CO2 Signal,

35:05 which is an API that is being developed by the electricityMap initiative, group, organization, company,

35:12 whatever their status.

35:13 And so that's going to give you an exact estimation at that moment in time,

35:17 depending on what data you have of those computations.

35:20 Otherwise, we need your country code and we're going to map that to historical data.
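
The two steps described above (measure energy, then map it to a location's carbon intensity) boil down to a multiplication. Here is a minimal sketch, assuming a lookup table keyed by ISO country code; the intensity values are round placeholder numbers invented for illustration, not real grid data and not CodeCarbon's data, and `emissions_kg` is a hypothetical function name.

```python
# PLACEHOLDER carbon intensities in grams of CO2-equivalent per kWh.
# These are invented round numbers for illustration only.
HISTORICAL_INTENSITY_G_PER_KWH = {"CAN": 100.0, "USA": 500.0}


def emissions_kg(energy_kwh, country_iso, live_intensity_g_per_kwh=None):
    """Estimate emissions as energy multiplied by carbon intensity.

    A live intensity (for example from a real-time API) takes priority;
    otherwise the historical value for the country is used.
    """
    if live_intensity_g_per_kwh is not None:
        intensity = live_intensity_g_per_kwh
    else:
        intensity = HISTORICAL_INTENSITY_G_PER_KWH[country_iso]
    # Grams to kilograms.
    return energy_kwh * intensity / 1000.0
```

With these placeholder numbers, the same 10 kWh run would be estimated at 1 kg in one country and 5 kg in the other, which is the whole reason the location matters.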

35:24 This CO2 signal, this is new to me.

35:26 What is this?

35:26 I think you'd better look at them.

35:28 Exactly.

35:29 The electricity map.

35:31 Look at the map.

35:31 Exactly.

35:32 Yeah.

35:32 So they have products, they have predictions of carbon emissions and so on.

35:38 But that's basically, it's an initiative.

35:40 The organization is called Tomorrow.

35:42 The goal here, at least with the electricity map, is to gather data about carbon intensity

35:48 and the energy mix of countries through the forest of APIs and standards that countries and companies have for that kind of thing.

36:00 So you can see that in Canada, not all or in the US, not all regions are going to work similarly.

36:07 And some regions might not even provide that kind of data in the open.

36:11 Yeah, that's too bad.

36:12 Yeah, but it's really different depending on where you are and just even the US, right?

36:18 Like in the Pacific Northwest, I think it's like very high levels of hydro.

36:22 Southeast, a lot of coal still.

36:25 Like it's not just what country.

36:27 It's even like maybe a little more granular than that, right?

36:29 No.

36:30 At least for large places.

36:31 And also, as you can see, I think this is also a very interesting map because you can see that energy grids are very different from the grid that you know, like nation, state, whatever it is after that, right?

36:44 Yeah, county, city.

36:45 County, city, all of that.

36:46 Like you can see, for example, the one that spans something like Iowa.

36:50 Italy, for example.

36:51 I was thinking, there's already like this thing that goes from Montana to Texas or something.

36:56 Yeah, that doesn't look like any state I learned in school.

36:59 No, but it's probably a unified grid for some reason, because providers got together under some constraint.

37:05 Yeah, exactly.

37:06 So when I'm looking at this code here, I run that and then I get this map and it can give you recommendations on the cloud regions, right?

37:14 And where you might go.

37:15 So for example, CA Central 1.

37:18 This is something that we might want to change, right?

37:20 The UI of this thing might not be obvious, but you're on the left.

37:23 I just want to add a clarification because even I sometimes forget.

37:29 I'm like, where did this, where was this thing run?

37:32 That's actually, yeah.

37:33 You run on the left and we show you how it could have been different.

37:36 Interesting.

37:36 Somewhere else.

37:37 Yeah.

37:37 So if I pick say US West 1 for AWS versus EU West 3, you can see the relative carbon offset or production.

37:46 Yes.

37:46 How bad that was or how good it was.

37:48 And this all comes from those reports generated out of that CSV file.

37:52 Exactly.

37:52 That's correct.
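
The "where you ran versus where you could have run" comparison can be sketched as below. The region names and intensities passed in are the caller's responsibility; `compare_regions` is a hypothetical helper, and like the dashboard it assumes each data center draws from its local grid.

```python
def compare_regions(energy_kwh, run_region, intensity_g_per_kwh_by_region):
    """Return (region, estimated_kg, difference_vs_run_region) rows,
    sorted from lowest to highest estimated emissions."""
    baseline_kg = energy_kwh * intensity_g_per_kwh_by_region[run_region] / 1000.0
    rows = []
    for region, intensity in intensity_g_per_kwh_by_region.items():
        kg = energy_kwh * intensity / 1000.0
        # Negative difference: running there would have emitted less.
        rows.append((region, kg, kg - baseline_kg))
    return sorted(rows, key=lambda row: row[1])
```

The first row of the result is the cleanest candidate region for the same workload, and the third field of each row shows how much better or worse it would have been than the region actually used.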

37:53 I just want to be clear about how the data was gathered.

37:55 That's a very important topic.

37:58 So we still need to update the data for GCP, Google Cloud Platform, because they recently released those numbers.

38:06 But for most of those locations, we had to make an assumption.

38:10 The assumption was that the data center was plugged to the local grid.

38:15 So if a data center is in Boston, we assume the data center uses the same energy as Boston's grid, which might not be the case.

38:24 Right.

38:24 Many providers would now have their own solar panels and whatnot.

38:30 So that might not be the case.

38:31 And so, but unless they release those numbers and I can find the link, I'll share it with you.

38:37 Unless we have those numbers publicized by the providers, I mean, there's only so much we can do.

38:42 Yeah.

38:42 So, yeah.

38:43 Well, here's a call to action for those who haven't released it.

38:46 Get on it, right?

38:47 I think it was part of Jonathan's message earlier, which is like, there are so many layers and so many of them are opaque.

38:54 That's part of the, I think, our responsibility as users.

38:58 Yeah.

38:59 I don't like to put too much weight on individuals' shoulders.

39:01 And I think structural changes have a much wider, well, much more potential.

39:06 But I think it's still interconnected.

39:09 And if you can do something about it, well, you should.

39:11 Yeah.

39:12 Let's talk a little bit about running it.

39:14 So when I go over here and I say start and then stop, like, how do you know how much energy I've used?

39:20 I mean, I know once it leaves the computer, like, there's a lot of assumptions and various things like we talked about.

39:26 But how do you estimate how much that code has taken?

39:29 That's a very good question.

39:31 And Victor, can I answer this one?

39:33 Oh, yes.

39:33 Sure.

39:34 Okay.

39:35 When you're training and you're running a machine learning program, you are mostly using a GPU.

39:41 And you are mostly using an NVIDIA GPU.

39:43 And thankfully, NVIDIA has a nice SDK to get, at a given time, the current estimated plus or minus 5% energy usage of the GPU.

39:55 So we get...

39:56 Oh, really?

39:57 Okay.

39:57 So it's not like you're saying, oh, it's a 3070 super and it must be pinned CPU-wise or GPU-wise.

40:04 So let's just assume this much time times this kind of computer, it gives you more narrow, exact measurements.

40:11 Okay.

40:11 Fantastic.

40:12 We still get the energy consumption from all GPUs.

40:16 So if you are training multiple models, we might get higher or lower energy estimation.

40:22 I'm not sure there.

40:22 So that's for GPU.
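
Turning periodic GPU power readings into an energy figure is a matter of integrating power over time. The sketch below is one way to do it, not necessarily how CodeCarbon does it: `energy_kwh_from_samples` is a hypothetical helper, and `read_gpu_power_watts` assumes the `pynvml` bindings and an NVIDIA GPU are available.

```python
def energy_kwh_from_samples(samples):
    """Integrate (timestamp_seconds, power_watts) samples, given in time
    order, with the trapezoidal rule and return kilowatt-hours."""
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        joules += (p0 + p1) / 2.0 * (t1 - t0)
    return joules / 3.6e6  # 1 kWh = 3.6 million joules


def read_gpu_power_watts(index=0):
    """Read the current power draw of one NVIDIA GPU through NVML."""
    import pynvml  # assumed installed; requires an NVIDIA driver

    pynvml.nvmlInit()
    try:
        handle = pynvml.nvmlDeviceGetHandleByIndex(index)
        # NVML reports power in milliwatts.
        return pynvml.nvmlDeviceGetPowerUsage(handle) / 1000.0
    finally:
        pynvml.nvmlShutdown()
```

A GPU drawing a steady 100 W for one hour comes out at 0.1 kWh. Note that the reading is for the whole device, so other jobs sharing the GPU are included.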

40:24 For CPU, we are supporting Intel.

40:27 And we have several ways of doing that as we get a measurement at the beginning of the training and at the end.

40:34 And we get the total energy usage between the two.

40:37 So we can get the difference.

40:38 Or we can also regularly get the immediate usage, I think.

40:43 We are working to add memory usage because even if GPU and CPU are the topmost resources that you use, for multi-gigabyte workloads,

40:54 memory tends to be not negligible.

40:57 And once you get...

40:58 Probably disk as well.

41:00 Yeah.

41:00 Everything takes energy.

41:01 The goal is to focus on what takes most of the energy and how easy it is to get that consumption.

41:09 So you get all of that either during the training frequently or at the beginning and the end.

41:15 In addition, we get the duration.

41:17 And we detect if you are running in a data center or not.

41:22 So in case we don't have access to anything, like you are running on AMD GPU, on an AMD CPU, on Windows,

41:33 we can still give you an estimation based on the duration, on your estimated location.

41:39 Or if you are running inside a data center or a specific location, we can also get a more precise estimation for you.

41:46 And that is how we are measuring energy usage.

41:49 And then we can use our data to estimate again, like estimation of estimation, the CO2 emitted for that usage.
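
When no hardware counter is readable, the duration-based fallback described above amounts to assuming an average power draw. This is a minimal sketch under that assumption; the function name and the default of 100 W are hypothetical placeholders, not values taken from CodeCarbon.

```python
def estimate_energy_kwh(duration_s, measured_kwh=None, assumed_power_w=100.0):
    """Prefer a real measurement; otherwise fall back to
    assumed average power multiplied by run duration."""
    if measured_kwh is not None:
        return measured_kwh
    # watts * seconds = joules; 3.6e6 joules per kWh.
    return assumed_power_w * duration_s / 3.6e6
```

The fallback is an estimate of an estimate: its error is dominated by how well the assumed power matches the actual machine.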

41:58 I don't know how deep you want to go into how it works, but I do want to point out that...

42:04 Give us a little look inside.

42:05 Yeah.

42:05 Yeah.

42:06 Given that it's one of the most difficult areas, I think I also want to use your platform to call for help, which is...

42:12 It's actually the low-level inner workings of CPUs are hard to understand.

42:18 At least for me, I have a background and I'm a researcher, right?

42:22 So it's an area where we need help.

42:25 Not necessarily a hardware specialist, right?

42:27 And writing on hardware, yeah.

42:29 Exactly.

42:29 And so, for example, the way we read the energy consumption of Intel CPUs, I mean, the GPUs, as Boris said, have, at least NVIDIA's GPUs, have this driver that we can ping.

42:43 And nvidia-smi, or the NVML package, is very useful because we can just ping this and not care about how it's done and trust NVIDIA and use that number.

42:54 But for the CPUs, it's much more complicated.

42:57 The reason is that what happens under the hood is modern Intel CPUs, under the right settings, actually write their energy usage, in millijoules, to a text file.

43:09 It's the RAPL interface, and they write to a text file the number of millijoules they have consumed since, I don't know when, since they were turned on or the 1st of January 1970 or whatever other random date.

43:23 Yeah.

43:23 What matters is that we look at the difference in it.

43:26 But those numbers are written per CPU socket.
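
Reading the counter before and after a run and taking the difference can be sketched as follows. This assumes the Linux powercap sysfs layout for RAPL (Running Average Power Limit), where, as far as I know, each package directory exposes `energy_uj` (a cumulative counter in microjoules) and `max_energy_range_uj` (the value at which it wraps); the exact path varies by machine and usually needs elevated permissions.

```python
from pathlib import Path

# Assumed location of the first CPU package (socket) on a Linux host.
RAPL_PACKAGE_DIR = Path("/sys/class/powercap/intel-rapl/intel-rapl:0")


def read_counter_uj(package_dir=RAPL_PACKAGE_DIR):
    """Read the cumulative energy counter for one socket, in microjoules."""
    return int((package_dir / "energy_uj").read_text())


def energy_delta_joules(start_uj, end_uj, max_range_uj):
    """Energy used between two readings, allowing for one counter wrap."""
    delta = end_uj - start_uj
    if delta < 0:
        # The counter wrapped around between the two readings.
        delta += max_range_uj
    return delta / 1e6
```

The result covers everything that ran on that socket during the interval, which is exactly the granularity problem described next.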

43:30 So let me give you an example.

43:32 In the academic setting where I work, we have shared clusters.

43:37 I can request part of a node and I'm going to request one GPU and 20 CPUs to do my computations.

43:45 But what I saw looking at the RAPL files is that there are two sockets of 40 CPUs.

43:53 Like we have 80 CPUs per node and two sockets of 40.

43:56 So there's no way to read from the RAPL files.

43:59 Your granularity is 40.

44:00 Yeah.

44:00 Yeah.

44:01 Like the CPUs that are allocated to me might change over time.

44:04 Maybe not.

44:05 It depends on the resource manager.

44:07 You use Slurm and those CPUs will be split across those two sockets.

44:12 And so we have that level of problems too.

44:16 So at a high level, if you're working in a dedicated environment and it's only your program, then

44:22 RAPL is perfect and we couldn't have dreamt of something better.

44:25 But it does not allow us to go to the core, let alone the process granularity of power consumption.

44:33 So I just want to put a big warning here.

44:37 And one of the things that we need to look into, and it's very hard and there are no good numbers.

44:43 Maybe even what I'm going to say won't make sense.

44:46 But the only solution we have left is, is there some kind of heuristic to map the CPU utilization

44:54 to the energy consumption, basically.

44:57 Because otherwise, we're never going to be able to attribute your processes and sub-processes'

45:04 CPU usage to watts, basically.

45:07 Because of this RAPL setup that is written per socket.
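
One naive version of the heuristic being discussed is to split a socket's measured energy in proportion to CPU time. The sketch below is only an illustration of that idea; as noted in the conversation, utilization is a rough proxy for power, and `attribute_energy_joules` is a hypothetical name.

```python
def attribute_energy_joules(socket_energy_j, process_cpu_seconds, total_cpu_seconds):
    """Attribute a share of socket-level energy to one process,
    proportional to its share of CPU time on that socket."""
    if total_cpu_seconds <= 0:
        return 0.0
    # Clamp in case of measurement noise in the CPU-time figures.
    share = min(process_cpu_seconds / total_cpu_seconds, 1.0)
    return socket_energy_j * share
```

This ignores idle power, frequency scaling, and how the math libraries were compiled, which is why the people consulted were pessimistic about its accuracy.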

45:11 And I've talked a little to people who understand this way better than I do.

45:17 And they thought this endeavor was very risky.

45:20 And they were pessimistic.

45:22 But I mean...

45:23 And you're like, what else do we have to work with?

45:25 Yeah.

45:25 And so I think next thing is like, we're going to need to get our hands on hardware, have

45:31 it run and see how bad it is.

45:32 And it's going to be one setup with one mode for the compilation of the math libraries I'm

45:40 going to use to benchmark and whatnot.

45:41 Like, there's only so much we can do if the hardware providers don't give, don't tell

45:46 us more.

45:46 It would be nice to see operating systems and then the hardware providers as well allow

45:53 you to access that information, right?

45:54 Like, how much, you know, how much voltage am I, how much am I currently consuming with

46:00 just this process, right?

46:01 And you don't want to profile it.

46:02 Because if you profile it, you'll be like 50% of the problem, right?

46:06 And you'll slow it down and people won't want to touch it.

46:08 And I talked to people at the PowerAPI and pyJoules initiatives.

46:13 And even they, from what I remember, explained that even if you had total control on the hardware,

46:21 on the software, or the hardware, both of those things are going to be so dependent on the way

46:26 you compile the libraries you use.

46:28 And a number of other factors, so that even the very definition of those things that we're

46:35 looking for is not obvious.

46:36 Or whether it's Linux versus macOS versus Windows, it's got to make a difference.

46:40 It all matters.

46:41 And the reason why I think it's still worth looking for an approximation through CPU utilization,

46:48 even if it's a bad proxy, is it's all bad proxies.

46:52 So why is it like, it doesn't matter if you're precise to the millijoule on your CPU,

46:59 if your uncertainty around carbon emissions is huge, right?

47:04 Otherwise, you end up with something as much carbon as five cars, right?

47:07 And so...

47:09 We can get it down to 2.1 or 2.2 cars.

47:11 Come on, let's go with that.

47:12 Yeah.

47:12 So it's really a very complex endeavor.

47:15 Yeah.

47:16 Yeah, absolutely.

47:17 So it sounds like it runs on multiple platforms.

47:19 We'll try to, at least.

47:21 Yeah, yeah.

47:22 So Windows, Linux, macOS.

47:24 Exactly.

47:25 I'm sitting here recording on my Mac Mini M1, Apple Silicon.

47:29 Can I run it here?

47:30 Yeah.

47:30 You would need to install the Intel Power Gadget, restart your computer, allow specific security

47:37 permissions.

47:37 Not an Intel CPU.

47:38 So I'm guessing you will be back to the simple heuristic based on the duration.

47:43 We realized, actually, the Intel Power Gadget also tracks some AMD CPUs.

47:47 Like, we had a user say, like, you don't seem to support AMD.

47:51 And then they still installed the Intel Power Gadget.

47:53 I don't know why, but they did.

47:54 And then it worked.

47:55 So I'm like, I'm not sure how this thing works.

47:58 So Intel, AMD, but maybe not Apple Silicon.

48:00 I don't think so.

48:01 Okay.

48:02 Well, probably most people won't be doing training on that.

48:05 The Apple Silicon platform has dedicated cores for machine learning, you know?

48:09 It does have, I think, 16 ML cores.

48:11 Yeah.

48:11 So yeah, maybe.

48:12 Maybe they are.

48:13 They're coming out with the Mac Pro, which is supposed to have many, many more.

48:17 So maybe that'll be where people do it more.

48:20 So if Apple is hearing you.

48:21 Yeah, pretty.

48:22 Is hearing us.

48:23 Send us a Mac Mini and we'll work on improving the tracking of.

48:28 And one Mac Mini is for all three of you.

48:30 The whole team.

48:30 Come on.

48:31 Let's send it along.

48:32 Make it happen.

48:32 You have my Twitter handle.

48:33 Send me a message.

48:34 Fantastic.

48:35 All right.

48:35 Let's see.

48:36 Before we move on, quick question from Brian in the live stream.

48:39 Other than moving to different data centers, what are some of the highest impact

48:42 changes people can make?

48:44 Different training methods and so on.

48:46 And by the way, that also leads exactly into where I was going.

48:49 Thank you for that.

48:50 A very timely question.

48:51 Like patterns and things you can do.

48:53 Let's talk about that.

48:54 One of the things that we wrote in the carbon emissions of machine learning paper on the

49:00 website is, well, there's hyperparameter searches.

49:02 Like one of the worst things you can do, both in terms of pure ML performance and carbon emissions

49:09 is grid search, for instance.

49:10 So maybe just don't do that.

49:12 There are, if you're lazy, just do a random hyperparameter search.

49:16 Or if you don't have a good metric.

49:18 Or use Bayesian optimizers and so on to look for those hyperparameters.
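
Random search with a fixed trial budget is short to write. Here is a minimal standard-library sketch; `random_search`, `objective`, and the `space` format (a name mapped to a low/high range) are assumptions for illustration, and a real project would more likely use an existing tuning library.

```python
import random


def random_search(objective, space, n_trials, seed=0):
    """Sample n_trials random configurations and keep the best one.

    space maps each hyperparameter name to a (low, high) range;
    objective takes a dict of parameters and returns a score to maximize.
    """
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(low, high) for name, (low, high) in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

The number of training runs, and therefore the energy spent, is set directly by `n_trials` instead of growing multiplicatively with every hyperparameter added, as it does in a grid.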

49:22 Another thing that is not mentioned in that paper, but I think is still very important.

49:27 And that cycles back to one of your first questions about inference versus training is,

49:32 there are many methods out there.

49:33 Pruning, distillation, quantization, all of like all that zoo of tools and techniques

49:41 and algorithms to optimize your model.

49:45 And if you're happy with your current model, chances are there are many techniques out there

49:50 that can reduce its size and computational complexity by multiple factors.

49:56 So if you're going to put a product out there with hundreds, thousands, millions,

50:02 of inferences, maybe just think about that.

50:05 I expect people who deploy such tools do think about that.

50:09 If you're deploying a tool for millions, I mean, it's in your interest to think about it

50:14 because it's also going to be cheaper.

50:15 They probably think more about it in terms of just time, time to train, time to get an answer.

50:22 But that also is exactly lining up with energy consumed.

50:25 So, you know, CO2 reduction comes along for the ride.

50:29 Yeah.

50:29 It's often the case that if you invest in ecological solutions, they are going to end up being economical.

50:34 Jonathan, it's more of your area.

50:38 Maybe that's another ball thrown to you.

50:41 Oh, I couldn't underscore that more.

50:43 I think, you know, something that I think that has come out of our results that we've seen is that

50:47 there's not a strictly linear trade-off between energy usage and accuracy, for example.

50:53 There's often, there's a shoulder usually there and finding that shoulder using CodeCarbon to figure

50:58 out, you know, if I throw this fraction of a kilogram of CO2 at this problem, I'm actually

51:03 going to get a lower accuracy than if I had stopped beforehand.

51:06 So using a tool to figure out where that is, I think is very helpful.

51:10 And so just being aware of the impact of it and trying to maximize for accuracy and not just energy usage.
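One way to picture the "shoulder" described above is to log cumulative emissions and accuracy at each checkpoint and stop where the extra accuracy per kilogram of CO2 falls below a chosen threshold. This is a minimal sketch under that assumption. The checkpoint numbers and the threshold are invented for illustration and are not results from the project.

```python
def find_shoulder(points, min_gain_per_kg):
    """Return the index of the last checkpoint that was still worth its emissions.

    points: list of (cumulative_kg_co2, accuracy) tuples ordered by emissions.
    min_gain_per_kg: smallest accuracy gain per extra kg of CO2 we accept.
    """
    best = 0
    for i in range(1, len(points)):
        extra_kg = points[i][0] - points[i - 1][0]
        extra_accuracy = points[i][1] - points[i - 1][1]
        # Stop at the first step whose marginal gain is too small (or negative).
        if extra_kg <= 0 or extra_accuracy / extra_kg < min_gain_per_kg:
            break
        best = i
    return best


# Hypothetical checkpoints: (cumulative kg CO2, validation accuracy).
checkpoints = [
    (0.1, 0.70),
    (0.2, 0.80),
    (0.3, 0.86),
    (0.4, 0.88),
    (0.5, 0.885),
    (0.6, 0.88),  # accuracy drops even though more energy was spent
]

shoulder = find_shoulder(checkpoints, min_gain_per_kg=0.1)
print("stop at checkpoint", shoulder, checkpoints[shoulder])
```

The threshold is a judgment call; the point is only that the curve flattens, so the last increments of energy buy very little accuracy.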

51:17 Yeah.

51:17 One of the things you called out is more energy, which means more emissions, is not necessarily

51:22 more accuracy.

51:22 Yeah.

51:23 Adding one more practical solution.

51:24 For example, when you're doing hyperparameter search, which is basically, are you doing the

51:30 combination of numbers of variables and try to find the best combination to get the best results,

51:36 the best, more precise model or whatever metrics you're optimizing for?

51:41 You will likely, most of the machine learning libraries have an option to do early stopping.

51:45 Like instead of doing training new model for four days for all hundreds of thousands of combinations,

51:53 you train 10 of them for one day and then you see how it evolved.

51:59 And if you take only the two best of them and try again, you can reduce your training time

52:05 and emission by a lot of percentage.
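The "train a few briefly, keep the best, try again" idea above resembles what is often called successive halving. The sketch below is one hedged way to express it. The `train_step` callback, the toy scoring function, and the candidate learning rates are hypothetical stand-ins rather than any particular library's API.

```python
def successive_halving(configs, train_step, rounds=3, keep_fraction=0.5):
    """Train all candidates briefly, keep the best fraction, and repeat.

    train_step(config, state) -> (new_state, score) is a hypothetical callback
    that continues training one candidate for one short round.
    Returns the surviving config and the number of training rounds spent.
    """
    candidates = [(cfg, None, float("-inf")) for cfg in configs]
    spent = 0
    for _ in range(rounds):
        trained = []
        for cfg, state, _ in candidates:
            state, score = train_step(cfg, state)
            spent += 1
            trained.append((cfg, state, score))
        # Rank by score and drop the weaker candidates before the next round.
        trained.sort(key=lambda item: item[2], reverse=True)
        keep = max(1, int(len(trained) * keep_fraction))
        candidates = trained[:keep]
    return candidates[0][0], spent


def toy_train_step(cfg, state):
    """Toy stand-in for training: score improves per round, best near lr=0.01."""
    steps = (state or 0) + 1
    score = 0.1 * steps - abs(cfg["lr"] - 0.01)
    return steps, score


candidates = [{"lr": lr} for lr in (0.0001, 0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1.0)]
best, spent = successive_halving(candidates, toy_train_step)
full_cost = len(candidates) * 3  # every candidate trained for all three rounds
print(best, spent, "rounds instead of", full_cost)
```

In this toy setup the search spends 14 short training rounds instead of 24, and the saving grows with the number of candidates and rounds.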

52:08 And on another topic, you can also move all non-, all the code that doesn't need GPU to

52:15 run something somewhere else, like for CPU, then you start on disk, it's still emitting less

52:20 emission than not using the GPU on your server.

52:24 And try to use your GPU better, even by training more model on the same GPU or changing your model

52:31 to be more efficient to train in less time.

52:35 One of the things that we've also advocated for, and it can sound a little naive, but as

52:39 Jonathan said earlier, like this field has been moving fast is to publish and be transparent

52:45 about those things.

52:46 And I think if the community shows interest and shows that it is one of the broader impact

52:53 features that they look for when they think about the systems they create and deploy, I

52:59 think it's also something that can spread in other areas than just your very specific niche

53:04 of research.

53:05 For instance, I'm thinking about the research community here, but that's my environment.

53:10 But I think it's also the case in the industry.

53:13 Another thing that you all talked about is if you're computing locally, so maybe at your

53:18 university or in your house these days, that's probably where you are.

53:21 The local energy infrastructure matters, right?

53:23 It does.

53:24 It does.

53:25 Like, for example, Quebec has an average of like 20 grams of CO2 per kilowatt hour or something,

53:32 which is probably 40 times lower than some other regions.
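To make the regional comparison concrete, emissions can be estimated as energy used multiplied by the grid's carbon intensity. The sketch below uses the roughly 20 g CO2 per kWh figure quoted above for Quebec and a 40 times higher value for an unnamed region. The 100 kWh training run is a made-up number for illustration.

```python
def emissions_kg(energy_kwh, intensity_g_per_kwh):
    """Estimated emissions in kg CO2: energy (kWh) times grid intensity (g/kWh)."""
    return energy_kwh * intensity_g_per_kwh / 1000.0


ENERGY_KWH = 100.0  # hypothetical energy used by one training run

LOW_INTENSITY = 20    # approximate Quebec figure quoted in the conversation
HIGH_INTENSITY = 800  # 40 times higher, standing in for a carbon-heavy grid

low = emissions_kg(ENERGY_KWH, LOW_INTENSITY)
high = emissions_kg(ENERGY_KWH, HIGH_INTENSITY)
print(f"{low} kg vs {high} kg for the same run")
```

The same code and the same hardware produce very different footprints depending only on where the electricity comes from.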

53:36 You can check the, well, no, because Quebec doesn't share the data with electricityMap.

53:41 It's a shame.

53:41 But you can see other, like if you just compare the results in Europe, for instance, and you

53:47 look for France, which is...

53:48 Like France versus Germany.

53:50 Yeah.

53:51 It has a nuclear electricity grid, mostly France, right?

53:54 So if you compare France to Germany, it's going to be very different.

53:58 Look at that.

53:58 95% low carbon.

54:00 That's well done, France.

54:02 Good job, Boris.

54:03 I think if you click on Germany, what you'll see is you might have a time series somewhere

54:09 for the last 24 hours.

54:10 At least have this nice breakdown over here and you can move it.

54:13 Yeah, there's your time series.

54:15 Right.

54:15 So you can even see that during the day.

54:18 It's not the same.

54:19 And just like your electricity provider will charge you differently for different times of

54:25 usage, like high demand or lower demand times of the day.

54:29 And like carbon emissions are also going to have that kind of variation that you could

54:33 care for.
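If hourly carbon intensity for a grid is available, one simple way to act on this daily variation is to start a job in the window with the lowest average intensity. The sketch below assumes a list of 24 hourly values. The numbers are invented and `best_start_hour` is a hypothetical helper, not part of CodeCarbon.

```python
def best_start_hour(hourly_intensity, job_hours):
    """Return (start_hour, average_intensity) for the cleanest contiguous window."""
    n = len(hourly_intensity)
    if not 0 < job_hours <= n:
        raise ValueError("job_hours must be between 1 and the number of hours")
    best_start, best_total = 0, float("inf")
    for start in range(n - job_hours + 1):
        total = sum(hourly_intensity[start:start + job_hours])
        if total < best_total:  # strict comparison keeps the earliest best window
            best_start, best_total = start, total
    return best_start, best_total / job_hours


# Hypothetical g CO2 per kWh for each hour of one day (index 0 is midnight).
HOURLY = [300] * 6 + [400] * 4 + [200] * 4 + [350] * 6 + [320] * 4

start, average = best_start_hour(HOURLY, job_hours=3)
print(f"start at {start}:00, average {average} g/kWh")
```

This only looks within a single day and ignores jobs that wrap past midnight; a real scheduler would also need a forecast rather than past values.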

54:34 One thing I wanted to give a quick shout out to, I don't know about different locations.

54:39 Here in Portland, one of the options we have is to choose a slightly different energy choice.

54:45 If we pay $6 more per month or $11 as a small business, it will basically be wind and solar.

54:52 And if your local grid offers something where you literally pay $6 and it can dramatically

54:57 change it, like, you know, do the world a favor.

54:59 Opt in.

55:00 We have the equivalent in France also.

55:02 Same in the United States.

55:03 There's a lot of, there's a patchwork of different state laws that mandate that these options are

55:07 made available to people.

55:09 So yeah, definitely take advantage of it.

55:11 Yeah.

55:11 Yeah.

55:12 It's, I mean, it literally is a checkbox.

55:13 Do you want to have this yes or no and a small fee?

55:16 And they probably, you know, honestly, probably what's happening when you check that box,

55:19 like some of that energy would have just gone to the general grid and now it's, it's promised

55:23 to you.

55:23 But as soon as enough people check that box to go beyond the capacity, then that's going to

55:27 be an economic driver to make more of it happen.

55:30 Right.

55:30 So hopefully, hopefully we can get there.

55:33 Although I suspect data centers are where the majority of the computation happens.

55:37 It doesn't, I mean, I'm not backing this by any knowledge here.

55:41 I'm just, it's just my personal perception, but I feel it's like, it's too little.

55:46 Like, this is too cheap.

55:47 How come, like, it's so cheap, right?

55:51 So, so many things in our daily lives should actually be more expensive if we knew how much energy

55:57 and resources and how much they cost the environment.

55:59 And so it feels like it's a no brainer when it's so easy and it's so cheap in this case,

56:04 but like how many other areas of our daily lives and consumption have those, those biases?

56:10 Would you pay three times as much to fly, right?

56:13 Would you pay $3,000 to go from France to Portland rather than a thousand or whatever, right?

56:17 That's a harder thing than checking a $6 box.

56:19 Yeah.

56:20 And probably a harder problem to solve.

56:22 But luckily we're, we're talking about computers and ML and not air transportation.

56:26 So don't have to solve it here.

56:28 We'll do that next time.

56:29 Speaking of solving it here, you know, what's next?

56:30 Where are things going for you all in the future?

56:32 So I'm a PhD student at Mila in Montreal.

56:35 So it's Quebec's AI Institute.

56:38 So I feel like I'm going to stay there for at least two or three more years until I PhD

56:43 and then we'll see.

56:44 But the other two, where are you going with this project?

56:46 Yeah, I think that we've got some things on the horizon.

56:49 One is that the other part sort of under the hood that's kind of complicated is deriving

56:54 the energy mix and getting the CO2 intensity of the energy grid from the energy mix.

56:58 So figuring out, you know, okay, if you know, you have X percent natural gas, X percent coal

57:03 and X percent oil, you know, how does that translate into CO2 emissions?

57:06 That's actually an extremely complicated problem to answer because we have different chemical

57:12 compositions of coal around the world.

57:14 For example, you know, coal that comes out of Kentucky has a different CO2 impact per,

57:19 you know, joule from combustion than coal that comes out of Wyoming, for example.

57:22 So we've got all these different layers to figure out.

57:25 For oil, you've got like the oil sands of Canada versus...

57:28 Exactly.

57:28 ...Saudi Arabia or whatever, right?

57:30 Exactly.

57:30 Yeah.

57:31 And all of these sort of chemical differences, you know, matter and they reflect different

57:35 efficiencies.

57:36 And that's not even getting into the difference in hardware in different power plants.

57:40 So what we want to do is we want to actually dive in a bit deeper and get at some of these

57:45 regional differences in carbon intensity and plug them into the data set here so that,

57:51 you know, we can refine our estimates as much as possible.
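The energy-mix calculation described above can be sketched as a weighted average: each source's share of the mix multiplied by an emission factor for that source. The factors below are round placeholder numbers chosen for illustration, not measured values, and the two "regions" are hypothetical. They only show how a different coal factor changes the result for the same mix.

```python
def grid_intensity(mix, factors):
    """Weighted-average carbon intensity (g CO2 per kWh) of an energy mix.

    mix: mapping of source name to its share of generation (shares sum to 1).
    factors: mapping of source name to an emission factor in g CO2 per kWh.
    """
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("mix shares must sum to 1")
    return sum(share * factors[source] for source, share in mix.items())


# Hypothetical mix: 50% natural gas, 30% coal, 20% oil.
MIX = {"natural_gas": 0.5, "coal": 0.3, "oil": 0.2}

# Placeholder emission factors (g CO2 per kWh); NOT authoritative data.
FACTORS_REGION_A = {"natural_gas": 500, "coal": 1000, "oil": 700}
# Same sources, but this region's coal is assumed to emit less per unit energy.
FACTORS_REGION_B = {"natural_gas": 500, "coal": 900, "oil": 700}

intensity_a = grid_intensity(MIX, FACTORS_REGION_A)
intensity_b = grid_intensity(MIX, FACTORS_REGION_B)
print(intensity_a, intensity_b)
```

Swapping in region-specific factors is the kind of refinement the speakers describe; the arithmetic stays the same while the inputs become more precise.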

57:54 Yeah.

57:54 Yeah.

57:54 And shout out to the cloud providers, provide more data.

57:58 Yeah.

57:58 To the CPU providers, provide more hooks, things like that.

58:02 My hope for the project is that in a few years, we don't need this project anymore because we are doing

58:08 estimation of estimation of estimation.

58:11 There's better people in the industry, cloud providers and hardware vendors that are suited

58:17 to get more precise data.

58:19 But until then, I hope that the project can...

58:23 Companies be aware of their emissions, take action on that and allow the project to be more precise

58:29 and give some estimation range for everything we are measuring.

58:33 I feel like there are five to 10 companies in the world that can control all of the information

58:38 you have.

58:38 So we've got Intel, AMD, Apple for the chips.

58:42 We've got AMD and NVIDIA for the cards and Azure, AWS, GCP.

58:49 If they all provided more information, then this would be not much of an estimate, more of a measure.

58:54 A couple of comments from the live stream.