Automate your data exchange with Pydantic

We welcome Samuel Colvin, creator of Pydantic, to the show. We'll dive into the history of Pydantic and its many uses and benefits.

Episode Deep Dive

Guest introduction and background

Samuel Colvin is the creator and maintainer of Pydantic, a highly popular Python library for data validation and settings management. He has a background in engineering and spent time working on oil rigs in Indonesia, where he discovered his passion for programming. Over the years, Samuel has grown Pydantic from a small project into a widespread tool used by many top tech companies and organizations such as Microsoft, Uber, and financial institutions. His unique journey, from drilling operations to open-source development, shapes his pragmatic approach to building tools that emphasize both performance and simplicity.

What to Know If You're New to Python

If you’re just getting started with Python and want to better understand how Pydantic integrates with Python’s type hints, here are a few points:

- Make sure you’re comfortable with Python classes, dictionaries, and functions, since Pydantic heavily leverages those.

- Familiarize yourself with type hints (e.g., `int`, `str`, `float`), because Pydantic automatically interprets them.

- Having a basic sense of JSON data and Python’s `dict` usage will help you follow the discussion on serialization.

- Consider brushing up your foundational skills with Python for Absolute Beginners if you need a gentle introduction to the language.

Key points and takeaways

- Pydantic’s Core Mission: Data Validation and Conversion

Pydantic aims to simplify data intake by automatically converting and validating data types. For example, if you define a field as an `int`, Pydantic will coerce `"123"` (a string) into `123` (an integer) if possible. It also allows deeper checks, such as validating that something is a correct email address or file path. This makes it an excellent fit for modern API development, and also for any situation where external or user data must be safely processed.
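A minimal sketch of that coercion, assuming Pydantic is installed (the code sticks to calls available in both V1 and V2); the `Item` model is a hypothetical example:

```python
from pydantic import BaseModel, ValidationError


class Item(BaseModel):
    # The type hint alone tells Pydantic what to coerce the input into
    quantity: int


item = Item(quantity="123")  # a numeric string is coerced to an int
print(item.quantity, type(item.quantity))  # 123 <class 'int'>

try:
    Item(quantity="not a number")  # cannot be coerced, so validation fails
except ValidationError as exc:
    errors = exc.errors()
    print("rejected field:", errors[0]["loc"])  # rejected field: ('quantity',)
```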

- Why Type Hints Matter

One of Pydantic’s defining features is that it uses type annotations found in modern Python for validation logic. Instead of learning a new schema language, you rely on Python's typing style, which also means better IDE support, static analysis with tools like mypy, and consistent code. This synergy between Python’s built-in type hints and runtime validation is a core reason for Pydantic’s rapid adoption.


- Simple, Declarative Usage with Model Classes

Pydantic centers around its `BaseModel` class. Developers define attributes with type hints, and Pydantic automatically enforces them at instantiation. Whether you’re pulling data from a REST API, a web form, or a local file, calling your model with `User(**data)` triggers type coercion and validation. This minimal-boilerplate approach helps reduce hard-to-track bugs caused by unexpected data types.
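A sketch of required fields versus defaults at instantiation, assuming Pydantic is installed; `User` and its fields are hypothetical:

```python
from pydantic import BaseModel, ValidationError


class User(BaseModel):
    id: int  # required: there is no default
    name: str = "Jane Doe"  # optional: the default is used when omitted


data = {"id": "42"}  # e.g. parsed from a web form or a JSON body
user = User(**data)  # coercion and validation run here
print(user.id, user.name)  # 42 Jane Doe

try:
    User(**{"name": "Sam"})  # "id" is missing
except ValidationError as exc:
    missing = [error["loc"] for error in exc.errors()]
    print(missing)  # [('id',)]
```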

- Leniency vs. Strictness in Data Parsing

A critical design choice in Pydantic is its generally “optimistic” style of conversion. It will do its best to turn a string into an integer, parse a timestamp, or interpret a float as an `int` if it makes sense. Users can tighten this default behavior by configuring stricter rules or writing custom validators. This flexible approach offers a friendlier developer experience, especially for web apps and pipelines that handle user-generated data, but you can dial it up when you need stricter guarantees.
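One way to tighten a single field is Pydantic's `StrictInt` type, which exists in both V1 and V2. A sketch with hypothetical models contrasting the lenient and strict versions, assuming Pydantic is installed:

```python
from pydantic import BaseModel, StrictInt, ValidationError


class LenientOrder(BaseModel):
    quantity: int  # default behavior: "5" is coerced to 5


class StrictOrder(BaseModel):
    quantity: StrictInt  # only genuine ints are accepted


print(LenientOrder(quantity="5").quantity)  # 5

try:
    StrictOrder(quantity="5")
    strict_accepts_str = True
except ValidationError:
    strict_accepts_str = False
print(strict_accepts_str)  # False
```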

- Serialization and JSON Support

Pydantic doesn’t just validate: it simplifies exporting data too. The `.dict()` and `.json()` methods convert a model instance back into a dictionary or JSON string, making it easy to pass clean data to other systems or log valid states of your application. This is particularly beneficial for teams building APIs or microservices where consistent data interchange is essential.
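A sketch of the round trip, assuming Pydantic is installed; `Invoice` is a hypothetical model. Pydantic V2 renamed these methods to `model_dump()` and `model_dump_json()` (the old names remain as deprecated aliases), so the code checks which API it is running against:

```python
import json
from datetime import date
from typing import List

from pydantic import BaseModel


class Invoice(BaseModel):
    number: int
    issued: date
    lines: List[str]


invoice = Invoice(number="7", issued="2021-04-14", lines=["widget", "gadget"])

# Pydantic V2 names: model_dump()/model_dump_json(); V1 names: dict()/json()
if hasattr(invoice, "model_dump"):
    as_dict, as_json = invoice.model_dump(), invoice.model_dump_json()
else:
    as_dict, as_json = invoice.dict(), invoice.json()

print(as_dict["issued"])  # 2021-04-14 (a datetime.date object)
print(json.loads(as_json))  # {'number': 7, 'issued': '2021-04-14', 'lines': ['widget', 'gadget']}
```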

- Comparing Pydantic to Other Validation Libraries

Many developers historically used tools like Marshmallow or plain data classes. However, Marshmallow doesn’t tightly integrate with Python 3 type hints, and plain data classes offer no runtime validation. Pydantic merges type hints with an engine for runtime type coercion and enforcement, typically with better performance than Marshmallow (Samuel puts it at roughly two and a half times faster in Pydantic’s benchmarks) and more straightforward usage than rolling your own solution.
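To make the data class point concrete, a stdlib-only sketch (the `Person` class is hypothetical): the annotation documents the intent, but nothing enforces it at runtime.

```python
from dataclasses import dataclass


@dataclass
class Person:
    name: str
    age: int  # only a hint: nothing checks it when the object is created


p = Person(name="Ada", age="36")  # no error is raised
print(repr(p.age), type(p.age).__name__)  # '36' str
```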


- Performance and Cython Optimization

Under the hood, Pydantic benefits from optional Cython compilation, speeding up certain data validation paths significantly. While Pydantic is already efficient in pure Python, those who demand high throughput can install the Cython-based build to get an extra performance edge. This has helped Pydantic stand out in production-grade, high-performance web services.


- Broad Usage Beyond FastAPI

Although closely associated with FastAPI for building modern Python APIs, Pydantic is in no way limited to that ecosystem. It can handle command-line input, form submissions in Flask, advanced data pipelines, or even function-level validation with the `@validate_arguments` decorator. If you have inbound data you do not fully trust, there’s probably a place for Pydantic.
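A sketch of function-level validation, assuming Pydantic is installed. Pydantic V2 renamed `@validate_arguments` to `@validate_call`, so the import falls back to the V1 name; `repeat` is a hypothetical function:

```python
try:
    from pydantic import validate_call  # Pydantic V2 name
except ImportError:
    from pydantic import validate_arguments as validate_call  # Pydantic V1 name


@validate_call
def repeat(text: str, times: int) -> str:
    # Arguments are validated against the hints before the body runs
    return text * times


print(repeat("ab", "3"))  # ababab ("3" is coerced to 3)
```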

- Future of Pydantic: V2 and Python Typing Changes

Samuel discussed possible challenges posed by upcoming Python releases, notably PEP 563’s stringified annotations, which at the time were slated to become the default in Python 3.10. There’s an ongoing PEP discussion (PEP 649) about ensuring libraries like Pydantic can still access the actual type objects at runtime. The team is also focused on more customizable serialization, an improved plugin system, and further performance improvements in V2.
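A stdlib-only sketch of why this matters to Pydantic. Under PEP 563 every annotation is stored as a string, which is what writing the quotes by hand does below; a library then has to evaluate those strings back into real types, and that fails for a type defined inside a function. The function names are hypothetical:

```python
from typing import get_type_hints


def greet(name: "str") -> "str":
    # Quoted annotations mimic what PEP 563 stores for every annotation
    return f"Hello, {name}"


print(greet.__annotations__)  # {'name': 'str', 'return': 'str'}
print(get_type_hints(greet))  # {'name': <class 'str'>, 'return': <class 'str'>}


def make_handler():
    class Local:  # a type that only exists inside this function
        pass

    def handler(value: "Local") -> None:
        pass

    return handler


handler = make_handler()
try:
    get_type_hints(handler)  # looks up "Local" in module globals and fails
    resolved = True
except NameError as exc:
    resolved = False
    print("cannot resolve:", exc)
```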


- Data Model Code Generator and JSON Schema

JSON Schema support is built into Pydantic, making it easy to generate or consume schemas for your models. Tools like the Data Model Code Generator can even produce Pydantic models directly from an existing OpenAPI or JSON Schema specification, saving developers significant time. This tight integration with standard schemas streamlines creating and documenting an API that shares its structure with front-end and other service teams.
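A sketch of generating a schema from a model, assuming Pydantic is installed. The classmethod is `schema()` in Pydantic V1 and `model_json_schema()` in V2, so the code picks whichever exists; `User` is a hypothetical model:

```python
from typing import Optional

from pydantic import BaseModel


class User(BaseModel):
    id: int
    email: Optional[str] = None


# V2 names this classmethod model_json_schema(); V1 called it schema()
make_schema = getattr(User, "model_json_schema", None) or User.schema
schema = make_schema()

print(schema["title"])  # User
print(schema["properties"]["id"]["type"])  # integer
print(schema["required"])  # ['id']
```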


Interesting quotes and stories

- Samuel’s Oil Rig Anecdote: One of the more notable stories is how Samuel started coding “seriously” while stationed on remote oil rigs. He spoke about the peculiar combination of stressful, 14-hour night shifts and downtime that allowed him to explore programming on the job.

- Analogy to Aerospace: Samuel drew an interesting comparison between engineering constraints in drilling versus aerospace: “It's kind of like aerospace, but no one minds crashing. So you can innovate more quickly.”

- Community Contributions: Samuel highlighted the “tragedy of the commons” problem around open-source, where new feature requests can pile up, but addressing the backlog of PRs and issues can be slow without more help from the community.

Key definitions and terms

- Type Hints: Python’s optional annotations for variables and function parameters, e.g. `def greet(name: str) -> str:`

- JSON Schema: A vocabulary that allows you to annotate and validate JSON documents, crucial for describing and enforcing data shapes in APIs.

- Validators: Functions or decorators in Pydantic that run custom checks and transformations for individual fields.

- BaseModel: The core class in Pydantic from which user-defined models inherit, enabling validation, JSON conversion, etc.

- PEP 649: A proposed change to Python that could affect how type annotations are stored and accessed, with implications for Pydantic.
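A sketch of a field validator, assuming Pydantic is installed. The decorator is `@validator` in V1 and `@field_validator` in V2, so the import picks whichever is available; the `Signup` model and its rule are hypothetical:

```python
from pydantic import BaseModel, ValidationError

try:
    from pydantic import field_validator as field_check  # Pydantic V2
except ImportError:
    from pydantic import validator as field_check  # Pydantic V1


class Signup(BaseModel):
    username: str

    @field_check("username")
    @classmethod
    def normalise_username(cls, value: str) -> str:
        # Runs after the str check: transform the value, then enforce a rule
        value = value.strip().lower()
        if not value.isalnum():
            raise ValueError("username must be alphanumeric")
        return value


print(Signup(username="  Samuel ").username)  # samuel

try:
    Signup(username="not valid!")
    rejected = False
except ValidationError:
    rejected = True
print(rejected)  # True
```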

Learning resources

- Python for Absolute Beginners: Ideal for anyone brand-new to Python who wants a more robust foundation before diving into frameworks like Pydantic.

- Modern APIs with FastAPI: Learn how Pydantic pairs with FastAPI to quickly build performant, type-safe APIs.

- MongoDB with Async Python: Explores deeper usage of Pydantic for data modeling alongside MongoDB and async frameworks.

- Build An Audio AI App: Showcases real-world usage of Pydantic and FastAPI in a project-based setting.

Overall takeaway

Pydantic has emerged as a cornerstone for modern Python projects thanks to its seamless integration of type hints, rich validation features, and excellent performance. Whether you’re validating complex data in microservices, orchestrating CLI input, or converting user-generated data for machine learning pipelines, it can bring safety, clarity, and speed to your code. Through Samuel’s journey and the community’s collective innovation, it continues to evolve, setting the standard for data validation in Python. If you work with external or untrusted data at any scale, Pydantic is a powerful ally to have in your Python toolkit.

Links from the show

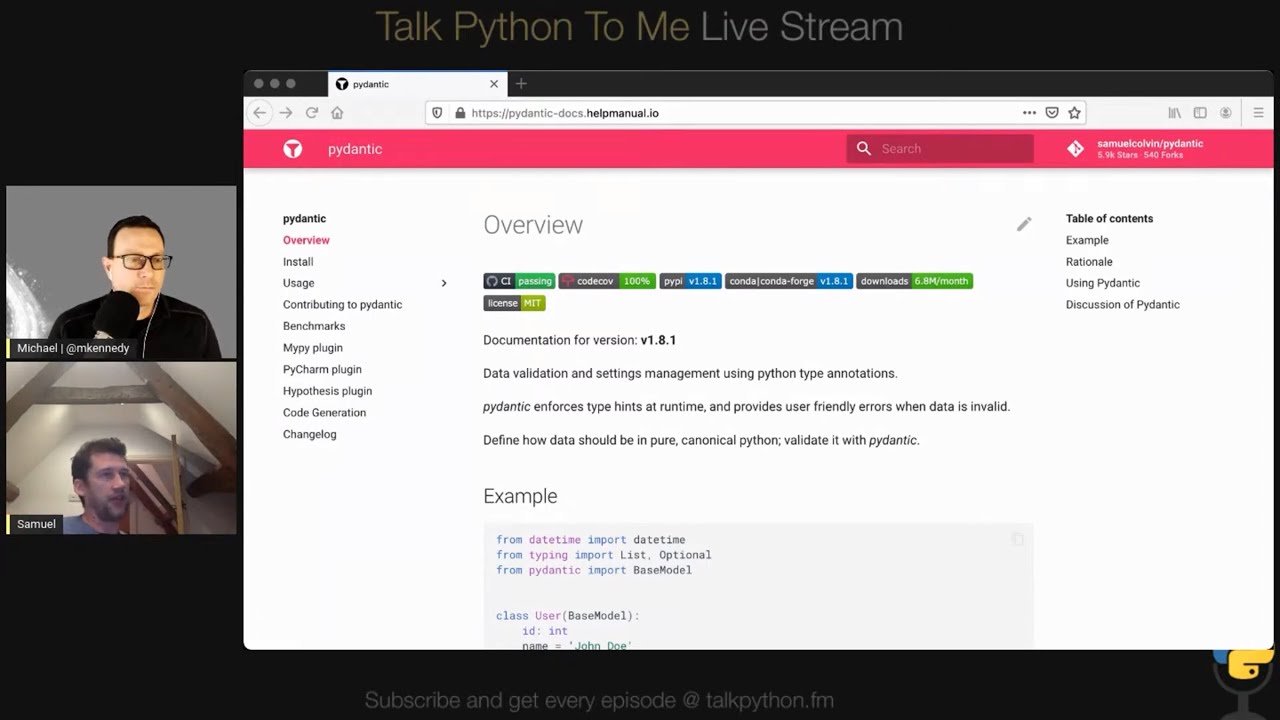

pydantic: pydantic-docs.helpmanual.io

Contributing / help wanted @ pydantic: github.com

python-devtools package: python-devtools.helpmanual.io

IMPORTANT: PEP 563, PEP 649 and the future of pydantic #2678

GitHub issue on Typing: github.com

YouTube live stream video: youtube.com

Episode #313 deep-dive: talkpython.fm/313

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript


00:00 Data validation and conversion is one of the truly tricky parts of getting external data into your app.

00:05 This might come from a REST API or a file on disk or somewhere else.

00:09 It includes checking for required fields, the correct data types, converting from potentially compatible types,

00:16 for example, from strings to numbers if you have quote 7 but not the value 7, and much more.

00:21 Pydantic is one of the best ways to do this in modern Python, using data class-like constructs and type annotations to make it all seamless and automatic.

00:30 We welcome Samuel Colvin, creator of Pydantic, to the show.

00:34 We'll dive into the history of Pydantic and its many uses and benefits.

00:37 This is Talk Python To Me, episode 313, recorded April 14th, 2021.

00:43 Welcome to Talk Python To Me, a weekly podcast on Python, the language, the libraries, the ecosystem, and the personalities.

01:02 This is your host, Michael Kennedy.

01:04 Follow me on Twitter where I'm @mkennedy, and keep up with the show and listen to past episodes at talkpython.fm,

01:10 and follow the show on Twitter via @talkpython.

01:13 This episode is brought to you by 45 Drives and us over at Talk Python Training.

01:18 Please check out what we're offering during those segments.

01:21 It really helps support the show.

01:22 Hey, Samuel.

01:23 Hi, Mike. Great to meet you. Very excited to be here.

01:25 Yeah, I'm really excited to have you here.

01:27 You've been working on one of my favorite projects these days that is just super, super neat,

01:31 solving a lot of problems, Pydantic, and I'm really excited to talk to you about it.

01:36 Yeah, as I say, it's great to be here.

01:37 I've been doing Pydantic on and off now since 2017, but I guess it was when Sebastian Ramirez started using it on FastAPI a couple of years ago.

01:46 A couple of years ago, I don't know when, that it really went crazy.

01:49 And so now it's a lot of work, but it's exciting to see something this popular.

01:53 I'm sure it's a lot of work, but it really seems to have caught the imagination of people

01:58 and really excited about it.

01:59 So fantastic work.

02:01 Before we get into the details there, let's start with just your background.

02:06 How did you get into programming Python?

02:07 I did a bit of programming at university, a lot of, a bit of MATLAB and a bit of C++.

02:11 And then my first job after university, I worked on oil rigs in Indonesia, of all strange things.

02:17 There's a lot of time on an oil rig when you're flat out, and there's a lot of other time when

02:22 you're doing absolutely nothing, don't have much to do.

02:24 And so I programmed quite a lot in that time.

02:26 And I suppose that was when I really got into loving it rather than just doing it when I had to.

02:29 And then I guess Python from there, a bit of JavaScript, a bit of Python ever since.

02:33 Yeah, really cool.

02:34 I think things like MATLAB often do sort of pull people in and they have to learn a little bit

02:39 of programming because it's a pain to just keep typing it into whatever the equivalent of the

02:43 REPL is that MATLAB has.

02:45 You do the .m file and you kind of get going and you start to combine them.

02:49 And then all of a sudden, it sort of sucks you into the programming world when you maybe

02:52 didn't plan to go that way.

02:54 I worry maybe other people learn it the right way and sit down and read a book and understand

02:58 how to do stuff.

02:58 But there's a lot of things that I wish I had known back then that I learned only through

03:02 reading other code or through banging my head against the wall that would have been really

03:06 easy to learn if someone had showed me them.

03:08 But hey, we got here.

03:09 Yeah, I think many, I'd probably say most people got into programming that way.

03:13 And I think it's all fine.

03:14 Yeah, it's all good.

03:15 So what was living on an oil rig like?

03:18 That must have been insane.

03:19 It was pretty peculiar.

03:21 So half the time I was on land rigs and half the time I was offshore.

03:24 Offshore, the conditions were a lot better.

03:26 Land rigs, the food was pretty horrific.

03:28 And a lot of they were all, I always did like a 14 hour night shift and then occasionally

03:33 had to do the day shift as well and then go on to the night shift.

03:35 So sleep was a problem and the heat was a problem.

03:38 Yeah, it was hard baptism of the real working world after university, I must say.

03:43 Yeah, I guess so.

03:43 It sounds really interesting.

03:46 Not an interesting like, wow, I really want to necessarily go out there and do it.

03:49 But you just must have come away with some like some wild stories and different perspective.

03:54 Yeah, lots of stories that I won't retell now.

03:56 I don't think that's the kind of subject matter you want on your podcast.

03:59 But yeah, I mean, how oil rigs work, well, whatever you think about it and however much

04:03 we all want to get away from them is crazy what you can do.

04:06 And so being like up against the at the coalface of that was really fascinating.

04:10 It's kind of like aerospace, but no one minds crashing.

04:12 So you can innovate.

04:14 You can try a new thing.

04:15 You just the faster you can drill, the better.

04:17 It's all that anyone cares about.

04:18 Yeah.

04:18 Well, most people what they care about.

04:20 Yeah, it must have been a cool adventure.

04:21 Awesome.

04:22 So I thought it'd be fun to start our conversation, not exactly about Pydantic, but just about this

04:27 larger story that larger space that Pydantic lives in.

04:33 But maybe to set the stage, give us like a real quick overview of what problem does Pydantic

04:38 solve for the world?

04:38 What is it and what does it solve for the world?

04:40 So the most simplest way of thinking about it is you've got some user somewhere.

04:44 They might be on the other end of a web connection.

04:46 They might be using a GUI.

04:48 They might be using a CLI.

04:50 It doesn't really matter.

04:51 And they're entering some data.

04:52 And you need to trust the data that you that they put in is correct.

04:57 So with Pydantic, I don't really care what someone enters.

05:00 All I care about is that you can validate it to get what you want.

05:04 So if I need an integer and a string and a list of bytes, all I care about is that I can

05:10 get to that integer, string, and list of bytes.

05:12 I don't care if someone entered bytes for the string field as long as I can decode it.

05:16 Or if they entered a tuple instead of a list.

05:18 But yeah, the fundamental problem is people, I suppose in theory, like intentionally trying

05:23 to do it wrong, but mostly innocently getting something wrong, trying to coerce data into

05:27 the shape that you want it to be in.

05:29 Yeah, it's super easy to say like, well, I read this value from a file and the value

05:34 in the file is a number.

05:36 It says one, but you read it as strings.

05:39 So the thing you sent over is quote one, not the number one.

05:42 And in programming, computers hate that.

05:45 They don't think those things are the same unless maybe you're in JavaScript and you do it in

05:49 the right order, maybe.

05:50 But excluding that odd case, right?

05:53 A lot of times it's just crash wrong data format or whatever.

05:56 We expected an integer and gave us a string.

05:58 But, you know, Pydantic just looks at it and says, it could be an integer if you want it

06:02 to be.

06:02 So we'll just do that.

06:04 Yeah.

06:04 And I think it's something that there's been a lot of discussion about on Pydantic's issue

06:09 tracker.

06:09 There's an entire label strict about the discussions about how strict we should be.

06:13 And I definitely, I think it's fair to say Pydantic compared to its, the other libraries

06:17 is more lenient, more keen on if we can try and coerce it into that type, we will.

06:22 That really started for me trying to make it simple and performant.

06:25 And I just called the, I decided if something was an int by calling int on it and seeing what

06:29 happened.

06:29 That had some kind of strange side cases because I called list on something to see if it was

06:35 a list.

06:35 And that meant you could put a string of integers, a string into a list of ints field.

06:40 And it would first of all, call list on it, turn it into a list and then call int on each

06:44 member.

06:45 And that was completely crazy.

06:46 So we've got stricter over the years.

06:48 And I think that in the future, Pydantic will have to get a bit stricter in particular stuff

06:52 like coercing from a float to an int quite often doesn't make sense or isn't what people

06:57 want.

06:57 But most of the time, just working is really powerful, really helpful.
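A stdlib-only sketch of the early behavior described above, assuming it simply chained `list()` and `int()`; `naive_list_of_ints` and `stricter_list_of_ints` are hypothetical helpers, not Pydantic's actual code:

```python
def naive_list_of_ints(value):
    # Hypothetical re-creation: "is it a list?" answered by calling list(),
    # then int() applied to every member
    return [int(member) for member in list(value)]


def stricter_list_of_ints(value):
    # Hypothetical stricter variant: reject strings before calling list()
    if isinstance(value, (str, bytes)):
        raise TypeError("expected a sequence of ints, not a string")
    return [int(member) for member in list(value)]


print(naive_list_of_ints("123"))  # [1, 2, 3], a surprising result
print(stricter_list_of_ints((1, "2")))  # [1, 2]

try:
    stricter_list_of_ints("123")
except TypeError as exc:
    print("rejected:", exc)  # rejected: expected a sequence of ints, not a string
```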

07:01 If there's going to be some kind of data loss, right?

07:03 If it's 1.0000, coercing that to one's fine.

07:06 If it's 1.52, maybe not.

07:09 Yeah, but it's difficult because we're in the Python world where Python is pretty lenient.

07:13 So it doesn't mind you adding two floats together.

07:15 If you call the int function on a float, it's fine.

07:20 So it's also trying to be Pythonic at the same time as being strict enough for people, but

07:24 without doing stuff that obviously, without not doing stuff that's obvious, because lots

07:29 of people do just want coercion to work.
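A stdlib-only sketch of the "no data loss" rule discussed here: accept `1.0` as an int but refuse `1.52`, unlike Python's built-in `int()`. The helper name is hypothetical and not part of Pydantic:

```python
def to_int_without_loss(value: float) -> int:
    # Hypothetical helper: accept a float only if converting it loses nothing
    if not float(value).is_integer():
        raise ValueError(f"{value!r} has a fractional part")
    return int(value)


print(int(1.52))  # 1: Python's built-in int() silently truncates
print(to_int_without_loss(1.0))  # 1

try:
    to_int_without_loss(1.52)
except ValueError as exc:
    print(exc)  # 1.52 has a fractional part
```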

07:31 Right.

07:31 We'll get into it.

07:32 There's lots of places to plug in and write your own code and do those kinds of checks if

07:36 you really need to.

07:36 Yeah.

07:37 All right.

07:37 Carlos has a good question out there in the live stream, but I'm going to get to that later

07:42 when we get a little bit more into it.

07:43 First, let's talk about some of the other things.

07:45 So Pydantic is surprisingly popular these days, but there's plenty of people, I'm sure, who

07:51 haven't heard of it, who are hearing about it the first time here.

07:54 And there's other libraries that try to solve these same type of problems, right?

07:59 Problem is I've got data in often a dictionary list mix, right?

08:04 Like a list of dictionaries or a dictionary, which contains lists, which contains like that

08:08 kind of object graph type of thing.

08:11 And I want to turn that into probably a class, probably a Python class of some sort or understand

08:17 it in some way.

08:18 So, you know, we've got some of the really simple ways of doing this where I just have

08:23 those.

08:23 I want to stash that into a binary form, which is pickle.

08:26 Pickle has been bad in certain ways because it can run arbitrary Python code.

08:31 That's not ideal.

08:31 There's another related library called quickle, which is like pickle, but doesn't run arbitrary

08:37 code.

08:37 And that's pretty nice.

08:38 We have data classes that look very much like what you're doing with Pydantic.

08:42 We have Marshmallow, which I hear often being used with like Flask and SQLAlchemy and Marshmallow.

08:48 You may want to just sort of give your perspective on like what the choices are out there and where

08:53 things are learning from other libraries and growing from.

08:55 Yeah, I put serialization, as in pickle, JSON, YAML, TOML, MessagePack, all of them, into a different category,

09:01 as a slightly different thing from taking Python objects and trying to turn

09:06 them into classes.

09:07 So putting them to one side, because I think that's a kind of different problem that Pydantic

09:12 and Marshmallow and people aren't trying to solve exactly.

09:14 Then there's data classes, which are the kind of canonical standard library way of doing this.

09:18 They're great, but they don't provide any validation.

09:20 So you can add a type in that says age is an integer, but data classes don't care.

09:27 Whatever you put in will end up in that field.

09:29 And so that's useful if you have a fully type-hinted system where you know already that something's

09:34 an integer before you pass it to a data class.

09:36 But if you're loading it from a foreign source, you often don't have that certainty.

09:40 And so that's where libraries like Pydantic and Marshmallow come in.

09:43 Actually, Pydantic has a wrapper for data classes.

09:47 So you basically import the Pydantic version of data classes instead of normal data classes.

09:52 And from there on, Pydantic will do all the validation, give you back a completely standard

09:56 data class.

09:56 Having done the validation.
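A sketch of the Pydantic data class wrapper described here, assuming Pydantic is installed; the `Person` class is hypothetical. The only change from a stdlib data class is the import:

```python
import dataclasses

from pydantic.dataclasses import dataclass  # drop-in for the stdlib decorator


@dataclass
class Person:
    name: str
    age: int


p = Person(name="Ada", age="36")  # validated and coerced by Pydantic
print(p.age, type(p.age).__name__)  # 36 int
print(dataclasses.is_dataclass(p))  # True: still a standard data class
```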

09:58 Marshmallow is probably, well, it is undoubtedly the biggest, most obvious competitor to Pydantic.

10:04 And it's great.

10:05 I'm not going to sit here and bad mouth it.

10:07 It's been around for longer and it does a lot of things really well.

10:10 Pydantic has just overtaken a few months ago Marshmallow in terms of popularity, in terms

10:16 of GitHub stars.

10:17 Whether you care about that or not is another matter.

10:19 There's also attrs, which kind of predates data classes and is closer to data classes.

10:24 But the big difference between Pydantic and Marshmallow and most of the other competitors

10:29 is Pydantic uses type hints.

10:31 So that one means you don't have to learn a whole new kind of micro language to define

10:36 types.

10:36 You just write your classes and it works.

10:39 It works with mypy and with your static type analysis.

10:43 It works with your IDE, like, well, PyCharm now, because there's an amazing extension.

10:48 I forgot the name of the guy who wrote it, but there's an amazing extension that I used the

10:52 whole time with PyCharm that means it works seamlessly with Pydantic.

10:56 And there's some exciting stuff happening.

10:58 Microsoft, they emailed me actually two days ago.

11:00 One of their technical fellows about extending their language server or their front-end for

11:06 language server, Pyright, to work with Pydantic and other such libraries.

11:10 So because you're using standard type hints, all the other stuff, including your brain, should,

11:14 in theory, click into place.

11:16 Yeah, that's really neat.

11:17 I do think it makes sense to separate sort of the serialization file, save me a file,

11:21 load a file type of thing out.

11:23 I really love the way that the type hints work in there because you can almost immediately

11:28 understand what's happening.

11:29 It's not like, oh, this is the way in which you describe the schema and the way you describe

11:34 the transformations.

11:35 It's just, here's a class.

11:37 It has some fields.

11:38 Those fields have types.

11:39 That's all you need to know.

11:40 And Pydantic will make the magic happen.

11:42 What would you say the big difference between Pydantic and Marshmallow is?

11:46 And I haven't used Marshmallow that frequently, so I don't know it super well.

11:50 I would first give the same proviso.

11:52 I haven't used it that much either.

11:53 I probably, if I was more disciplined, I'd have sat down and used it for some time before

11:58 building Pydantic.

11:58 But that's not always the way things work.

12:00 The main difference is it doesn't use type hints or it doesn't primarily use type hints

12:04 as its source of data about what type something is.

12:07 Pydantic is around, from my memory, I can check, but significantly more performant than Marshmallow.

12:14 Yeah, you actually have some benchmarks on the site and we can talk about that in a little

12:17 bit and compare those.

12:18 Yeah.

12:19 Yeah.

12:19 So just briefly, it's about two and a half times faster.

12:22 The advantage of Marshmallow at the moment is it has more logic around customizing how

12:29 you serialize types.

12:30 So when you're going back from a class to a dictionary or list of dictionaries and then

12:35 out to JSON or whatever, Marshmallow has some really cool tools there, which Pydantic doesn't

12:42 have yet.

12:42 And I'm hoping to build into V2 some more powerful ways of customizing serialization.

12:47 Okay.

12:47 Fantastic.

12:48 This portion of Talk Python is brought to you by 45 Drives.

12:53 45 Drives offers the only enterprise data storage servers powered by open source.

12:59 They build their solutions with off-the-shelf hardware and use software-defined open source

13:04 designs that are unmatched in price and flexibility.

13:06 The open source solutions 45 Drives uses are powerful, robust, and completely different.

13:12 And best of all, they come with zero software licensing fees and no vendor lock-in.

13:18 45 Drives offers servers ranging from four to 60 bays and can guide your organization through

13:23 any sized data storage challenge.

13:26 Check out what they have to offer over at talkpython.fm/45drives.

13:31 If you get in touch with them and say you heard about their offer from us, you'll get a chance

13:35 to win a custom front plate.

13:36 So visit talkpython.fm/45drives or just click the link in your podcast player.

13:44 Let's dive into it.

13:46 And I want to talk about some of the core features here.

13:50 Maybe we could start with just you walking us through a simple example of creating a class

13:56 and then taking some data and parsing over.

13:59 And you've got this nice example right here on the homepage.

14:01 I think this is so good to just sort of look.

14:03 There's a bunch of little nuances to cool things that happen here that I think people will benefit from.

14:08 Yeah, so you're obviously defining your class user here.

14:11 Very simple inheritance from base model, no decorator.

14:16 I thought about the beginning that like this should work for people who haven't been writing

14:20 Python for the last 10 years and where decorators look like strange magic.

14:24 I think using inheritance is the obvious way to do it.

14:27 And then obviously we define our fields.

14:30 The key thing really is that the type int in the case of ID is used to define what type that

14:36 field is going to be.

14:37 And then if we do give it a value as we do with name, that means that the field is not required.

14:42 It has a default value.

14:43 And obviously we can infer the type there from the default, which is a string.

14:46 Then sign up timestamp is obviously an optional date time.

14:50 So it can be none.

14:51 And critically here, you could either enter none or leave it blank.

14:55 And it would again be none.

14:58 And then we have friends, which is a more complex type.

15:00 That's a list of integers.

15:02 And the cool stuff is because we're just using Python type hints, we can burrow down into lists

15:06 of dicts, of lists, of sets, of whatever you like within reason.

15:10 And it will all continue to work.

15:12 And then looking at the external data, again, we see a few things like we were talking about

15:15 the coercion.

15:16 Right.

15:16 This external data is just a dictionary that you probably have gotten from an API call,

15:21 but it could have come from anywhere.

15:22 It doesn't have to come from there.

15:23 Right.

15:23 Exactly.

15:24 Anywhere outside.

15:25 But right now we've got it as far as being a dictionary.

15:27 So the point here is we're doing a bit of coercion.

15:31 So trivial converting from a string 123 to the number 123, but then a bit more complex,

15:39 parsing the date and converting that into a date object.

15:43 Right.

15:43 So you have the, in here, the data that's passed in, you've got a quote, 2019-06-01 and

15:50 a time.

15:51 And this is notoriously tricky because things like JSON don't even support dates.

15:56 Like, they'll freak out if you try to send one over.

15:58 So you just got this string, but it'll be turned into a date.

16:01 Yeah.

16:02 And we do a whole bunch of different things to try and do all of the sensible date formats.

16:06 There's obviously a limit as to how far to go, because one of the things Pydantic can do is

16:11 it can interpret integers as dates using Unix timestamps.

16:15 And if they're over some threshold in about two centuries from now, it assumes they are

16:20 milliseconds.

16:20 So it works with Unix milliseconds, which are often used, but it does also lead to confusions

16:26 when someone puts in one, two, three as a date and it's three seconds after 1970.

16:31 There are like, there's an ongoing debate about exactly like what you should try and coerce

16:35 and when it gets magic.

16:36 But for me, it's, there's a number of times I've just found it incredibly useful to, that

16:41 it just works.

16:42 So for example, the string format that Postgres uses when you use to_json just works with Pydantic.

16:49 So you don't even have to think about whether that's come through as a date or as a string

16:52 until you're worried about a limit of performance.

16:54 Most of that stuff just works.
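A sketch of the integer-to-datetime behavior, assuming Pydantic is installed: an integer such as 123 is read as 123 seconds after the Unix epoch, and a very large integer as milliseconds. The exact cutoff is an implementation detail, so the example uses values far from it; `Event` is a hypothetical model:

```python
from datetime import datetime, timezone

from pydantic import BaseModel


class Event(BaseModel):
    at: datetime


from_seconds = Event(at=123).at  # 123 seconds after 1970-01-01 UTC
from_millis = Event(at=1_600_000_000_000).at  # too big for seconds, read as ms

print(from_seconds == datetime(1970, 1, 1, 0, 2, 3, tzinfo=timezone.utc))  # True
print(from_millis == datetime.fromtimestamp(1_600_000_000, tz=timezone.utc))  # True
```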

16:56 Yeah.

16:56 Then one of the things that I think is super interesting is you have these friend IDs that

17:01 you're passing over.

17:02 And you said in Pydantic, it gets a list of integers.

17:05 And in the external data, it is a list of sometimes integers and sometimes strings.

17:11 But once it gets parsed across, it not just looks at the immediate fields, but it looks at,

17:17 say, things inside of a list and says, oh, you wanted a list of integers here.

17:20 This, this list, it has a string in it, but it's like quote three.

17:23 So it's fine.

17:24 Yeah.

17:24 And this is where, where it gets cool because we can go recursively down into the rabbit hole

17:29 and it will continue to validate.

17:31 One of the tricky things that I think is what most people want, but where our language fails us is about the word validation.

17:38 Because quite often validation sounds like I'm checking the input data is in the form that I said.

17:43 That's kind of not what Pydantic is doing.

17:45 It's optimistically trying to parse.

17:47 It doesn't care what the list contains in a sense, as long as it can find a way to make that into an int.

17:52 So this wouldn't be a good library to use for, for like unit testing and checking that something is the way that it should be,

17:58 because it's going to accept a million different things.

18:01 It's going to be as lenient as possible in what it will take in.

18:04 But that's by design.

18:05 Yeah.

18:05 The way to take this external dictionary and then get Pydantic to parse it is you just pass it as keyword arguments to the class constructor.

18:16 So you say User(**dictionary).

18:20 So that'll, you know, explode that out to keyword arguments.

18:23 And that's it.

18:24 That runs the entire parsing, right?

18:26 That's super simple.

18:27 Yeah.

18:27 And that's, again, by design to make it just the simplest way of doing it.

18:30 If you want to do crazy complex stuff like constructing models without doing validation,

18:35 because you know it's already been validated, that's all possible.

18:38 But the simplest interface, just calling init on the class, is designed to work and do the validation.
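Both paths Samuel mentions, sketched for Pydantic v2 with a hypothetical `User` model: unpacking a dict into the constructor runs full validation, while `model_construct()` (v1: `construct()`) builds an instance from already-trusted data without validating it. For a dict, `User.model_validate(data)` is the v2 equivalent of `User(**data)`.

```python
from pydantic import BaseModel


class User(BaseModel):
    id: int
    name: str


data = {"id": "42", "name": "Jane"}

# The simple interface: explode the dict into keyword arguments; __init__ validates.
user = User(**data)
print(user.id)  # 42 (the string "42" was coerced to int)

# Trusted data, e.g. rows you validated earlier: skip validation entirely.
trusted = User.model_construct(id=7, name="Sam")

# Because nothing is checked, a wrong type is stored as-is.
unchecked = User.model_construct(id="not an int", name="Sam")
print(repr(unchecked.id))  # 'not an int'
```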

18:44 Yeah, cool.

18:45 One thing I think is really neat and not obvious right away is that you can nest these things as well, right?

18:51 Like I could have a shopping cart and the shopping cart could have a list of orders and each order in that list could be a Pydantic model itself, right?

18:58 Exactly.

18:59 And it's probably an open question as to how complex we should make this first example.

19:03 Maybe it's already too complicated.

19:04 Maybe it doesn't demonstrate all the power.

19:06 But yeah, I think it's probably about right.

19:08 But yeah, you can go recursive.

19:10 You can even do some crazy things like the root type of a model can actually not itself

19:15 be a sequence of fields.

19:16 It can be a list itself.

19:17 So there is a long tail of complex stuff you can do.

19:21 But you're right.

19:21 Nesting different models is a really powerful way of defining types.

19:26 Because in reality, our models are never just a nice set of keys and values of simple types.

19:31 They always have complex items deeper down.
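Nesting, sketched with a hypothetical shopping cart in Pydantic v2: each dictionary in `orders` is validated into an `Order`. The second model shows a root that is a list rather than named fields, which v2 spells `RootModel` (v1 used a `__root__` field).

```python
from pydantic import BaseModel, RootModel


class Order(BaseModel):
    sku: str
    quantity: int


class ShoppingCart(BaseModel):
    owner: str
    orders: list[Order]  # every item is validated as an Order


cart = ShoppingCart(
    owner="Jane",
    orders=[{"sku": "A1", "quantity": "2"}, {"sku": "B7", "quantity": 1}],
)
print(type(cart.orders[0]).__name__, cart.orders[0].quantity)  # Order 2


class OrderList(RootModel[list[Order]]):
    """A model whose root value is a list rather than a set of named fields."""


orders = OrderList([{"sku": "C3", "quantity": 5}])
print(orders.root[0].sku)  # C3
```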

19:34 Right.

19:34 That's really cool.

19:35 And on top of these, I guess we can add validation.

19:38 Like you have the, you said, the very optimistic validation.

19:41 Like if the friends list said 1, 2, Jane.

19:46 Well, it's probably going to crash when it tries to convert Jane into an integer and say, no, no, no,

19:51 this is wrong.

19:52 And it gives you a really good error message.

19:53 It says something like the third item in this list is Jane.

19:57 And that's not an integer, right?

19:58 It doesn't just go, well, data is bad.

20:00 You know, too bad for you.
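What the error for `Jane` looks like, in a sketch assuming Pydantic v2: each error carries a `loc` path (the field name, then the zero-based list index) and a human-readable `msg`. The exact message wording varies between versions.

```python
from pydantic import BaseModel, ValidationError


class User(BaseModel):
    friends: list[int]


try:
    User(friends=[1, 2, "Jane"])
    errors = []
except ValidationError as exc:
    errors = exc.errors()

for err in errors:
    # loc is the path into the input: the field name, then the list index.
    print(err["loc"], "->", err["msg"])
```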

20:02 Yeah.

20:02 But you could decide, perhaps Jane's a strange case.

20:05 But if you wanted the word one, O-N-E, to somehow work, you could add your own logic relatively

20:10 trivially via a decorator, which said, okay, cool.

20:13 If we get the word one or the word two or the word three, then we can convert them to the equivalent

20:18 integers.

20:19 And that's relatively easy to add.
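The "accept the word one" idea, sketched for Pydantic v2 with a hypothetical `WORDS` lookup. A `mode="before"` validator (v1: `@validator(..., pre=True)`) sees the raw list before the standard `list[int]` parsing runs:

```python
from typing import Any

from pydantic import BaseModel, field_validator

# Hypothetical lookup of spelled-out numbers to accept.
WORDS = {"one": 1, "two": 2, "three": 3}


class User(BaseModel):
    friends: list[int]

    @field_validator("friends", mode="before")
    @classmethod
    def words_to_numbers(cls, value: Any) -> Any:
        # Runs before list[int] parsing: replace known words, leave the rest
        # (including strings like "3") for Pydantic's normal coercion.
        if isinstance(value, list):
            return [WORDS.get(v.lower(), v) if isinstance(v, str) else v for v in value]
        return value


print(User(friends=["one", 2, "3"]).friends)  # [1, 2, 3]
```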

20:21 Yeah.

20:21 Cool.

20:22 Now, I guess the scenario where people most often run into working

20:27 with Pydantic is as the exchange layer for FastAPI, which is one of the more

20:32 popular API frameworks these days.

20:34 And you just say your API function takes some Pydantic model and it returns some Pydantic model

20:40 and, you know, magic happens.

20:42 But if you're not working in that world, if you're not in FastAPI and most people doing

20:47 web development these days are not, because, you know, it's not the most popular framework and

20:51 it's also certainly not the most popular legacy framework.

20:54 So you can do things like create these models directly in your code, let it do the validation

21:01 there.

21:02 And then if you need to return the whole object graph as a dictionary, you can just say model.dict,

21:07 right?

21:07 Yeah.

21:08 And also model.json and that will take it right out to JSON.

21:11 As a string.

21:11 Yeah.

21:12 So it will return a string of that JSON.

21:13 Yeah.
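A minimal sketch of exporting a nested model, assuming Pydantic v2, where v1's `.dict()` and `.json()` are spelled `model_dump()` and `model_dump_json()` (the old names still exist but emit deprecation warnings). The models are hypothetical.

```python
import json

from pydantic import BaseModel


class Order(BaseModel):
    sku: str
    quantity: int


class Cart(BaseModel):
    owner: str
    orders: list[Order]


cart = Cart(owner="Jane", orders=[{"sku": "A1", "quantity": 2}])

as_dict = cart.model_dump()       # nested models become plain dicts
as_json = cart.model_dump_json()  # a JSON string

print(as_dict)  # {'owner': 'Jane', 'orders': [{'sku': 'A1', 'quantity': 2}]}
print(as_json)

# Round trip: the JSON string decodes to the same structure as the dict.
round_trip = json.loads(as_json)
```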

21:14 And you can even swap in more performant JSON encoders and decoders.

21:18 And this is why I was talking about like there's already some power in what you can do here and

21:22 which fields you can exclude.

21:24 And it's pretty powerful.

21:25 But like, as I say, something in V2 that we might look at to make even more powerful is customizing

21:32 how you do this.

21:32 Nice.

21:33 You might expect to just call .dict and it converts it to a dictionary and reverses it,

21:38 if you will.

21:38 But there's actually a lot of parameters and arguments you can pass in to have more control.

21:43 Do you want to talk about maybe just some of the use cases there?

21:47 Yeah.

21:47 As we see here, you can choose to include specific fields and the rest will be excluded

21:52 by default.

21:52 You can say exclude, which will exclude certain fields and the rest will be included by default.

21:57 Those include and exclude fields can do some pretty ridiculously crazy logic going recursively

22:03 into the models that you're looking at and excluding specific fields from those models or specific

22:10 items from lists, even some of the code.

22:12 I forget who wrote that, but like I wrote the first version of it.

22:14 I wrote the second or third version of it.

22:16 And I was looking through about the 10th version of it the other day.

22:19 And it is some of the most complex bits of Pydantic.

22:22 But that's amazingly powerful.
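The include/exclude arguments accept sets for simple cases and nested dicts that reach into sub-models or pick list items by index. A sketch assuming Pydantic v2 and hypothetical `User`/`Address` models:

```python
from pydantic import BaseModel


class Address(BaseModel):
    street: str
    city: str


class User(BaseModel):
    name: str
    password: str
    address: Address
    tags: list[str]


user = User(
    name="Jane",
    password="hunter2",
    address={"street": "1 Main St", "city": "London"},
    tags=["admin", "beta", "staff"],
)

# Exclude one top-level field; everything else is kept.
public = user.model_dump(exclude={"password"})

# Include only some fields; a nested set reaches into the sub-model.
summary = user.model_dump(include={"name": True, "address": {"city"}})

# Nested exclusion: drop the password and the first item of the tags list.
trimmed = user.model_dump(exclude={"password": True, "tags": {0}})

print(summary)          # {'name': 'Jane', 'address': {'city': 'London'}}
print(trimmed["tags"])  # ['beta', 'staff']
```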

22:24 And then we didn't talk about aliases, but you can imagine if you were interacting with the

22:28 JavaScript framework, you might be using camel case on the front end, like userName

22:33 with a capital N, but have user_name in the Python world, where we're using underscores.

22:39 We can manage that by setting aliases on each field.

22:42 So saying that the user_name field has the alias userName.

22:47 And then we can obviously export to a dictionary using those aliases if we wish.

22:52 Oh, that's cool.

22:52 So you can write Pythonic-style classes, even if you're consuming, say, a Java or C#

23:00 API that clearly uses a different format or style for its naming.

23:04 Exactly.

23:04 And you can then export back to those aliases when you want to go on and pipe

23:12 it on down your data processing flow or however you're going to do it.

23:15 And in fact, one of the things that will come up in future will be different aliases

23:19 for the way in and the way out.

23:21 But so far, there's just one, but that's powerful.
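The `user_name`/`userName` alias case, sketched for Pydantic v2 with a hypothetical `User` model. By default the model is populated using the alias, and `by_alias=True` uses it again on export. (Pydantic v2 also added separate `validation_alias` and `serialization_alias` parameters on `Field`, which covers the in/out split mentioned here.)

```python
from pydantic import BaseModel, Field


class User(BaseModel):
    # Python-style attribute name; JavaScript-style key on the wire.
    user_name: str = Field(alias="userName")


user = User(**{"userName": "jane_doe"})  # input is read via the alias

print(user.user_name)                  # jane_doe
print(user.model_dump())               # {'user_name': 'jane_doe'}
print(user.model_dump(by_alias=True))  # {'userName': 'jane_doe'}
```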

23:24 And then you can exclude fields that have defaults.

23:28 So if you're trying to save that data to a database and you don't want to save more than

23:32 you need to, you can exclude stuff where it has the default value.

23:35 And you can exclude fields which are None, which again is often the same thing, but there

23:40 are subtle cases where those are different requirements.

23:42 Yeah.

23:42 And that also might really just, even if you're not saving it to a database, you know, that

23:46 will lower the size of the JSON traffic between say microservices or something like that.

23:51 If you don't need to send the defaults over, especially the nones, because they're probably

23:55 going to go to the thing they get and go, give me the value or none in whatever language

24:00 they're using, right?

24:01 Something to that effect.

24:02 It'll mean the same.

24:03 Yeah.

24:04 So we have complex stuff here.

24:05 So we have exclude unset and exclude defaults.

24:09 So you can decide exactly how you want to exclude it to get just the fields that are actually live,

24:14 as it were, that have custom values.
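The three exclusion flags differ in subtle ways. In this sketch (Pydantic v2, hypothetical `Settings` model), `retries` is explicitly set to its default value and `tag` is explicitly set to `None`, so each flag drops a different set of fields:

```python
from typing import Optional

from pydantic import BaseModel


class Settings(BaseModel):
    name: str
    retries: int = 3
    timeout: Optional[float] = None
    tag: Optional[str] = None


# retries is set explicitly to its default; tag is set explicitly to None.
s = Settings(name="svc", retries=3, tag=None)

unset = s.model_dump(exclude_unset=True)        # drop fields never passed in
defaults = s.model_dump(exclude_defaults=True)  # drop fields equal to their default
nones = s.model_dump(exclude_none=True)         # drop fields whose value is None

print(unset)     # {'name': 'svc', 'retries': 3, 'tag': None}
print(defaults)  # {'name': 'svc'}
print(nones)     # {'name': 'svc', 'retries': 3}
```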

24:15 Yeah.

24:16 So you have this dict thing, which will return a dictionary and then you have the JSON one,

24:20 which is interesting.

24:22 We talked about that one.

24:23 You also have copy.

24:24 Is that like a shallow copy if you want to clone it off or something?

24:29 It can be a deep copy, but you can update some fields on the way through.

24:33 So there are multiple different contexts where you might want to do that, where you want

24:36 different models where you can go and edit one of them and not damage the other one,

24:40 or you want to modify a model as you're copying it.

24:43 We also have a setting on config that makes fields pseudo-immutable.

24:49 So it doesn't allow you to change that value.

24:52 It doesn't stop you changing stuff within that value because there's no way of doing that in Python.

24:55 But that's another case where you might want to use copy because you've set your model up to be

24:58 effectively static and you want to create a new one.

25:01 If you were being super careful and strict, you would say, I'm never going to modify my model.

25:05 I'm just going to copy it when I want a new one.

25:07 Yeah.

25:07 Perfect.

25:08 Yeah.

25:08 Certain things you could enforce that they don't change like strings and numbers.

25:11 But if it's a list, like the list can't point somewhere else, but what's in the list,

25:15 you can't really do anything about that, right?
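Freezing a model, and the loophole Michael points out, in a sketch assuming Pydantic v2 (`ConfigDict(frozen=True)` and `model_copy()`; v1 used `allow_mutation = False` and `.copy()`). The `Cart` model is hypothetical.

```python
from pydantic import BaseModel, ConfigDict, ValidationError


class Cart(BaseModel):
    # Pydantic v2 spelling; v1 used an inner `class Config` with allow_mutation = False.
    model_config = ConfigDict(frozen=True)

    owner: str
    items: list[str]


cart = Cart(owner="Jane", items=["apple"])

frozen_error = None
try:
    cart.owner = "Sam"  # reassigning a field is rejected
except ValidationError as exc:
    frozen_error = exc.errors()[0]["type"]

cart.items.append("pear")  # ...but the list object itself can still be mutated

# Make a modified copy instead of mutating (v1: cart.copy(update=..., deep=True)).
sams_cart = cart.model_copy(update={"owner": "Sam"}, deep=True)

print(frozen_error)                 # frozen_instance
print(cart.items)                   # ['apple', 'pear']
print(cart.owner, sams_cart.owner)  # Jane Sam
```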

25:16 Yeah.

25:17 So another thing that I think is worth touching on is the range of types

25:21 that can be set for fields.

25:26 There are the obvious data exchange conversion types: bools, integers, floats, strings, and so on.

25:31 But then it gets further, it gets more specialized down there as you go.

25:36 So for example, you can have unions, you can have frozen sets, you can have iterables,

25:42 callables, network addresses, all kinds of stuff, right?

25:46 Yeah.

25:46 We try and support almost anything you can think of from the Python standard library.

25:50 And then if you go on down, we get to a whole bunch of things that aren't supported

25:54 or don't have an obvious equivalent within the standard library, but where you go on

25:59 down to Pydantic types on the right.

26:02 So where you're looking is in the example, but Pydantic types, then we get into things

26:07 that don't exist, or where there isn't an obvious type for them, in Python.

26:13 There's a kind of subtle point here that like often these types are used to enforce more

26:19 validation.

26:19 So what's returned is an existing Python type.

26:23 So here we have FilePath, which returns a path, but it just guarantees that that file

26:28 exists.

26:28 And DirectoryPath, similarly, is just a path, but it will confirm that it is a directory.

26:32 And EmailStr just returns you a string, but it's validated that it's a legitimate email

26:37 address.

26:38 But then some do more complex stuff.

26:40 So NameEmail will allow you to split out names and email addresses and more

26:45 complex things.

26:46 Got credit cards, colors, URLs, all sorts of good stuff there.
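A few of the specialised types in one hypothetical model, assuming Pydantic v2. `EmailStr` is left out because it needs the optional `email-validator` package; `DirectoryPath` checks that the path exists and is a directory, and `IPvAnyAddress` returns an `ipaddress` object:

```python
import tempfile
from ipaddress import IPv4Address
from pathlib import Path

from pydantic import BaseModel, DirectoryPath, IPvAnyAddress, ValidationError


class Deployment(BaseModel):
    # Hypothetical model mixing standard-library and Pydantic-specific types.
    workdir: DirectoryPath  # a Path that must exist and be a directory
    host: IPvAnyAddress     # parsed into an ipaddress.IPv4Address / IPv6Address
    ports: frozenset[int]   # stdlib container; items coerced to int


with tempfile.TemporaryDirectory() as tmp:
    deployment = Deployment(workdir=tmp, host="192.168.0.1", ports=["80", 443, "443"])
    print(deployment.host, sorted(deployment.ports))  # 192.168.0.1 [80, 443]

    # A path that doesn't exist is rejected rather than passed through.
    try:
        Deployment(workdir=Path(tmp) / "missing", host="::1", ports=[])
        missing_rejected = False
    except ValidationError:
        missing_rejected = True
```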

26:51 Yeah.

26:51 Yes.

26:51 So there's a Mexican bank called, excuse my pronunciation, Cuenca, who use the credit card

26:57 fields for all of their validation of credit card numbers.

27:00 Yeah.

27:00 It's super interesting.

27:01 And you think about how do you do this validation?

27:03 How do you do these conversions yourself?

27:04 And not only do you have to figure that out, but then you've got to do it in the context of

27:09 like a larger data conversion.

27:10 And here you just say, this field is a type email and it either is an email or it's going

27:16 to tell you that it's invalid.
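For a sense of what a specialised type saves you, here is a plain-Python sketch of the Luhn checksum that card-number validation builds on (Pydantic's payment card type runs this check, among others). The `luhn_valid` name and the rough 12 to 19 digit length bound are assumptions for illustration, not Pydantic's code.

```python
def luhn_valid(number: str) -> bool:
    """Return True if `number` passes the Luhn checksum used by card numbers."""
    digits = number.replace(" ", "")
    # Assumed rough length bound for card numbers; real validators check per brand.
    if not digits.isdigit() or not 12 <= len(digits) <= 19:
        return False
    total = 0
    # Walk right to left, doubling every second digit; a doubled value over 9
    # contributes its digit sum, which equals value - 9.
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if position % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


print(luhn_valid("4242 4242 4242 4242"))  # True
print(luhn_valid("4242 4242 4242 4241"))  # False
```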

27:17 Right.

27:17 Yeah.

27:17 I like this a lot.

27:18 You know, one thing that we've been talking about that's pretty straightforward is using

27:22 this for APIs, right?

27:24 Like somebody is doing a JSON post and you get that as a dictionary in your web framework,

27:29 and then you kind of validate it and convert it and so on.

27:31 But it seems like you could even use this for like web forms and other type of things,

27:37 right?

27:37 Somebody's submitting some kind of HTTP POST of a form and it comes over, and you want to say

27:42 these values are required, this one's an email and so on.

27:45 Yeah.

27:46 So all of the form validation for multiple different projects I've built, some open source, lots

27:51 proprietary, use Pydantic for form validation and send the error messages directly back to the

27:56 user.

27:57 I've got quite a lot of JavaScript that basically works with Pydantic to build forms and do validation

28:03 and return that validation to the user.

28:05 I've never quite gone far enough to really open source that and push it as a React plugin

28:10 or something, but it wouldn't be so hard to do.

28:12 So let me ask you this.

28:13 And maybe when you say React, maybe that's enough of an answer already.

28:16 But if it's a server side processing form and it's not a single page sort of front-end framework

28:22 style of form submission, often what you need to do is return back to them the wrong data,

28:28 right?

28:29 If they type in something that's not an email and over there it's not a number, you still

28:33 want to leave the form filled with that bad email and that thing that's not a number.

28:38 So you can say, those are wrong.

28:39 Keep on typing them.

28:41 Is there a way to make that kind of round tripping work with Pydantic?

28:45 I've tried and haven't been able to do it.

28:47 But if it's a front end framework, it's easy to say, submit this form, catch the error and

28:52 show the error because you're not actually leaving the page or clearing the form with a

28:56 reload.

28:57 Yeah, the short answer is no.

28:58 And there's an issue about it for V2, to always return the wrong value

29:03 in the context of each error.

29:04 Often you kind of need it to make the error make sense.

29:07 But at the moment, it's generally not available and we will add it in V2.

29:11 What I've done in React is, obviously, you've still got the values that went into the

29:15 form.

29:15 So you don't clear any of the inputs.

29:18 You just add the errors to those fields and set the whatever it is, invalid header.

29:23 So they're nice and red.

29:24 Yeah, exactly.

29:24 So if it was a front end framework like React or Vue, I can see this working perfectly as

29:29 you try to submit it for a form.

29:30 But if you got to round trip it with like a Flask, a Pyramid or Django form or something

29:35 that doesn't have it, then the fact that it doesn't capture the data.

29:39 I mean, I guess you could return back just the original posted dictionary, but yeah.

29:42 Yeah.

29:42 So you have the original.

29:43 Yeah.

29:44 Like you say, you've normally got it there.

29:46 It's not too many different levels, and it's relatively simple to combine the dictionary

29:50 you got straight off the form submission with the errors.
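Pairing the original posted dictionary with the errors, as discussed here, can be sketched like this, assuming Pydantic v2 and a hypothetical `SignupForm`. The `validate_form` helper is an illustration, not a Pydantic API. (In v2, each entry from `exc.errors()` also carries an `input` key holding the offending value.)

```python
from pydantic import BaseModel, ValidationError


class SignupForm(BaseModel):
    # Hypothetical form fields.
    name: str
    age: int


def validate_form(posted: dict):
    """Return (model, None) on success, or (None, context) to redisplay the form."""
    try:
        return SignupForm(**posted), None
    except ValidationError as exc:
        # Keep the first error message for each top-level field.
        errors = {}
        for err in exc.errors():
            errors.setdefault(str(err["loc"][0]), err["msg"])
        # Pair each field's original (possibly bad) input with its error, so the
        # form can be re-rendered with the user's values still filled in.
        context = {
            field: {"value": posted.get(field, ""), "error": errors.get(field)}
            for field in SignupForm.model_fields
        }
        return None, context


model, context = validate_form({"name": "Jane", "age": "thirty"})
print(context["age"]["value"], "->", context["age"]["error"])

ok_model, ok_context = validate_form({"name": "Sam", "age": "40"})
print(ok_model.age, ok_context)  # 40 None
```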

29:53 But yeah, it's you're right.

29:54 It's something we should improve.

29:55 Yeah.

29:56 I'm not sure that you necessarily need to improve it because its mission is being fulfilled

30:01 exactly how it is.

30:03 And if it has this time, but well, sometimes it throws errors and sometimes it doesn't.

30:06 And then like just report, I don't know, it seems like you could overly complicate it as

30:10 well.

30:11 I think that there's a really difficult challenge, a kind of tragedy of the commons, where

30:15 someone wants a niche feature.

30:17 Someone else wants that feature.

30:19 You get 10 people wanting that feature.

30:20 You feel under overwhelming pressure to implement that feature.

30:23 But you forget that there's, I forget exactly, like 6,000 people who starred it,

30:27 but it's like 10 or 12,000 projects that use Pydantic.

30:31 Those people haven't asked for that.

30:33 Do they want it or would they actively prefer that Pydantic was simpler, faster, smaller?

30:38 Exactly.

30:39 Right.

30:40 Part of the beauty of it is it's so simple, right?

30:42 I do.

30:43 I get the value.

30:43 I define the class.

30:44 I star, star, take the data.

30:46 And it's either good or it's crashed, right?

30:49 If it gets past that line, you should be happy.

30:51 Yeah.

30:52 And I think that like I'm pretty determined to keep that stuff that simple.

30:55 There are those who want to change it, who say initializing the class shouldn't do the

30:59 validation, then you should call a validate method.

31:01 I'm not into that at all.

31:03 There's stuff where I'm definitely going to keep it simple and there's stuff where I'm

31:06 really happy to add more things.

31:07 So we were talking about custom types before.

31:09 I'm really happy to add virtually, not virtually any, but like a lot of custom types when someone

31:14 wants it, because if you don't need it, it just sits there and mostly doesn't affect

31:19 people.

31:19 Right.

31:19 If you don't specify a column or a field of that type, it doesn't matter.

31:23 You'll never know it or care.

31:24 Yeah.

31:25 Yeah.

31:25 So there's a couple of comments in the live stream.

31:28 And I think maybe we can go ahead and touch on them about sort of future plans.

31:33 So you did mention that there's going to be kind of a major upgrade of V2.

31:37 And Carlos out there asks, are there any plans for Pydantic to support PySpark DataFrame

31:43 schema validation?

31:44 Or, you know, let me ask more broadly, like any of the data science world integration with

31:50 like Pandas or other, you know, NumPy or other things like that?

31:53 There's been a lot of issues on NumPy arrays and like validating them using list types without

32:00 going all the way to a like Python list because that can have performance problems.

32:05 I can't remember because it was a long time ago, but people, whoever it was, found a solution.

32:10 And like Pydantic is used a lot now in data science.

32:13 If you look at the projects it's used in by Uber and by Facebook, they're like big machine

32:18 learning projects.

32:19 Facebook's fastMRI library uses it.

32:23 Like it's used a reasonable amount in like big data validation pipelines.

32:27 So I don't know about PySpark.

32:30 So I'm not going to be able to give a definitive answer for that.

32:33 If you create an issue, I'll endeavor to remember to look at it and have a look and give an answer.

32:38 But you'll start your PySpark research project.

32:40 Yeah.

32:41 Nice.

32:41 Also from Carlos: what's the timeframe for V2?

32:45 Someone joked to me the other day that the release date was originally put down as the end of March

32:50 2020 and that didn't get reached.

32:52 And it's still, the short answer is that like, I need to, there are two problems.

32:56 One is I need to set some time aside to sit there and build quite a lot of code.

33:01 Second problem is the number of open PRs and the number of issues.

33:04 I find it hard sometimes to bring myself to go and work on Pydantic when I have time off,

33:09 because a lot of it is like the trawl of going through issues and reviewing pull requests.

33:13 And when I'm not doing my day job of writing code, I want to like write code on something fun and not have to review other people's code.

33:20 Because I do that for a day job quite a lot.

33:22 So I've had a bit of trouble getting my back end in gear to go and work on Pydantic because I feel like there's 20 hours of reviewing other people's code before I can do anything fun.

33:34 And I think one of the solutions to that is I'm just going to start building V2 and ignore some pull requests and might have to break some eggs to make an omelet.

33:42 But I think that that's better.

33:43 Okay.

33:43 Yeah.

33:44 Well, and also in your defense, a lot of things were planned for March 2020.

33:49 Yeah.

33:49 And didn't happen.

33:50 That is true.

33:51 That is true.

33:51 I have sat at my desk in my office for a total of about eight hours since then.

33:55 Yeah.

33:56 So I haven't been back to the office in London at all.

33:58 So, so yeah, I would hope this year.

34:01 Yeah.

34:01 Cool.

34:01 Talk Python To Me is partially supported by our training courses.

34:06 Do you want to learn Python, but you can't bear to subscribe to yet another service?

34:11 At Talk Python Training, we hate subscriptions too.

34:14 That's why our course bundle gives you full access to the entire library of courses for one fair price.

34:20 That's right.

34:21 With the course bundle, you save 70% off the full price of our courses and you own them all forever.

34:28 That includes courses published at the time of the purchase, as well as courses released within about a year of the bundle.

34:33 So stop subscribing and start learning at talkpython.fm/everything.

34:39 And then related to that, risky chance asks, where should people who want to contribute to Pydantic start?

34:47 And I would help you kick off this conversation by just pointing out that you have tagged a bunch of issues as help wanted.

34:55 And then also maybe reviewing PRs, but what else would you add to that?

34:58 I think the first thing I would say is, and I know this isn't the most fun thing to do, but if people could help with reviewing discussions and issues.

35:06 Like triage type stuff?

35:07 Yeah, but if you go on to discussions, we use the GitHub discussions, which maybe people don't even see.

35:14 But these are all questions you can go in and answer if someone has a problem.

35:18 Lots of them aren't that complicated.

35:19 I know that's perhaps not what risky chance meant in terms of writing code.

35:24 And that's obviously, for some of us, where the fun lives.

35:26 But these questions would be enormously helpful if people could help.

35:29 You can see some of them are answered, and that's great.

35:31 But there are others that aren't.

35:33 And then, yeah, reviewing pull requests would be the second most useful thing that people could do.

35:38 And then if there are help wanted issues, just checking that we're still on for it and it's the right time to do it.

35:44 And then I do love submissions.

35:46 I noticed today there were 200 and something people who've contributed to Pydantic.

35:50 So I do do my best to support anyone who comes along, however inexperienced or experienced building features or fixing bugs.

35:57 Yeah, fantastic.

35:57 Another thing I want to talk to you about, is this the right one?

36:00 I believe.

36:01 No, the validating decorator.

36:03 Well, let's talk about validators first.

36:04 We touched on this a little bit.

36:06 So one thing you can do is you can write functions that you decorate with this validator decorator.

36:13 And it says, this is the function whose job is to do a deeper check on a field, right?

36:18 So you can say this is a validator for name, this is a validator for username or validator for email or whatever.

36:24 And those functions are ways in which you can take better control over what is a valid value and stuff like that, right?

36:31 Yeah, but you can do more.

36:33 You can not only be stricter, as in raising an error if it's not how you want it.

36:37 You can also change the value that's come in.

36:42 So you can see in the first case, where the name must contain a space, we check that it contains a space as a dummy example, but we also return the title-cased value.

36:51 So that capitalizes the first letter of each word.

36:53 So you can also change the value you're going to put in.
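A validator that both checks and rewrites the value might look like this in Pydantic v2, where `@field_validator` replaces v1's `@validator`. The model and function names are illustrative.

```python
from pydantic import BaseModel, ValidationError, field_validator


class UserModel(BaseModel):
    name: str

    @field_validator("name")
    @classmethod
    def name_must_contain_space(cls, value: str) -> str:
        # Stricter: reject names without a space.
        if " " not in value:
            raise ValueError("must contain a space")
        # Also transform: whatever is returned becomes the field's value.
        return value.title()


print(UserModel(name="samuel colvin").name)  # Samuel Colvin

try:
    UserModel(name="samuel")
    rejected = False
except ValidationError:
    rejected = True
```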

36:56 So coming back to the date case we were hearing about earlier, if you knew your users were going to use some very specific date format of day of the week as a string, followed by day of the month, followed by year in Roman numerals, you could spot that with a regex, have your own logic to do the validation.

37:13 And then if it's any other date, pass it through to the normal Pydantic logic, which will carry on and do its normal stuff on strings.
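A sketch of the "handle my special format, otherwise fall back" pattern, assuming Pydantic v2. To keep it short, a hypothetical `DD.MM.YYYY` format stands in for the weekday-plus-Roman-numerals format described above. A `mode="before"` validator runs ahead of Pydantic's own parsing, so returning the input unchanged hands it to the normal date logic.

```python
import re
from datetime import date
from typing import Any

from pydantic import BaseModel, field_validator

# Hypothetical house format: "DD.MM.YYYY".
HOUSE_FORMAT = re.compile(r"^(\d{2})\.(\d{2})\.(\d{4})$")


class Booking(BaseModel):
    day: date

    @field_validator("day", mode="before")
    @classmethod
    def parse_house_format(cls, value: Any) -> Any:
        if isinstance(value, str):
            match = HOUSE_FORMAT.match(value)
            if match:
                dd, mm, yyyy = (int(g) for g in match.groups())
                return date(yyyy, mm, dd)
        # Anything else falls through to Pydantic's normal date parsing.
        return value


print(Booking(day="04.03.2021").day)  # 2021-03-04
print(Booking(day="2021-03-04").day)  # 2021-03-04
```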

37:20 Cool. Now this stuff is pretty advanced, but you can also do simple stuff like set an inner class, which is a config, and just set things like stripping the whitespace off any string or lowercasing all the strings, right?

37:34 Yeah. And there's allow_mutation, which you've got to there, which is super helpful.

37:38 That's where we can stop fields from being modified.

37:40 There's extra there, which is something people often want, which is what do we do with extra fields that we haven't defined on our model?

37:47 Do we, is that an error? Do we just ignore them or do we allow them and just like bung them on the class and we won't have any type hints for them, but they are there if we want them.
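The config options mentioned here look roughly like this in Pydantic v2, which replaced the inner `class Config` with a `model_config = ConfigDict(...)` attribute and renamed some keys (v1's `anystr_strip_whitespace` became `str_strip_whitespace`). The `extra` setting takes `"ignore"` (the default), `"forbid"`, or `"allow"`. Model names are hypothetical.

```python
from pydantic import BaseModel, ConfigDict, ValidationError


class Tag(BaseModel):
    # Strip and lowercase every str field; reject unknown keys.
    model_config = ConfigDict(str_strip_whitespace=True, str_to_lower=True, extra="forbid")

    label: str


tag = Tag(label="  PyThOn  ")
print(repr(tag.label))  # 'python'

try:
    Tag(label="x", colour="red")  # "colour" is not a declared field
    extra_rejected = False
except ValidationError:
    extra_rejected = True


class LooseTag(BaseModel):
    # Keep unknown keys; they are stored without type hints or validation.
    model_config = ConfigDict(extra="allow")

    label: str


loose = LooseTag(label="x", colour="red")
print(loose.colour, loose.model_extra)  # red {'colour': 'red'}
```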

37:55 Yeah, very cool. Okay. So the other thing I wanted to ask you about is really interesting because part of what I think makes pydantic really interesting is its deep leveraging of type hints, right?

38:04 And in Python, type hints are a suggestion. They're things that make our editors light up. They are things that if you really, really tried, I don't think most people do this, but you could run something like mypy against it and it would tell you if it's accurate or not.

38:18 I think most people just put it as there's extra information, you know, maybe PyCharm or VS Code tells you you're doing it right or gives you better autocomplete.

38:26 But under no circumstance, or almost no circumstance, does having a function called add that says x: int, y: int only work if you pass integers, right?

38:37 You could pass strings to it and probably get a concatenated string out of that Python function because there's no, it's not like C++ or something where it compiles down and checks the thing right.

38:47 But you also have this validate_arguments decorator, which it seems to me will actually add that runtime check for the types. Is that correct?

38:57 That's exactly what it's designed to do. It's always been a kind of interest to me, almost a kind of experiment to see whether this is possible, whether we could have semi-strictly typed logic in Python.

39:09 I should say before we go any further, this isn't to be used on every function. It's not like Rust where doing that validation actually makes it faster. This is going to make calling your function way, way slower because inside validate_arguments, we're going to go off and do a whole bunch of logic to validate every field.

39:26 But there are situations where it can be really useful and where creating that Pydantic model was a bit onerous, but where we can just bang on the decorator and get some validation kind of for free.

39:36 Right. Because the decorator basically does the same thing. I mean, sorry, the classes do the same thing as this decorator might, but instead of having a class, you have arguments.

39:44 And under the hood, what validate_arguments is doing is inspecting that function, taking out the arguments, building them into a Pydantic model, then running the input through that Pydantic model and using the result to call the function.
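A sketch of the contrast drawn here, assuming Pydantic v2, where `validate_arguments` was renamed `validate_call`. The undecorated `add` ignores its hints; the decorated version coerces or rejects arguments before the body runs. Function names are illustrative.

```python
from pydantic import ValidationError, validate_call


def add(x: int, y: int) -> int:
    # Plain Python: the hints are documentation only.
    return x + y


@validate_call  # Pydantic v1 name: validate_arguments
def checked_add(x: int, y: int) -> int:
    return x + y


print(add("a", "b"))        # ab  (hints ignored, strings concatenated)
print(checked_add("1", 2))  # 3   ("1" coerced to int before the call)

try:
    checked_add("a", 2)
    rejected = False
except ValidationError:
    rejected = True
```

Because the wrapper builds and runs a validation model on every call, this fits entry points such as a library's public API better than hot inner functions.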

40:00 Yeah. And that sounds like more work than just calling the function for sure. It depends on how much it does, right?

40:05 Does it cache that kind of, does it like cache the class that it creates when it decorates a function?

40:09 It caches the model.

40:10 Yeah.

40:11 Same as we do in other places, but yes, it's still a lot more work. Pydantic is fast for data validation, but it's data validation, not a compiler.

40:19 Yeah. So maybe this would make sense if I'm writing a data science library and at the very outer shell, I pass in a whole bunch of data, then it goes off to all sorts of places.

40:28 Maybe it might make sense to put this on the boundary entry point type of thing, but nowhere else.

40:33 Yeah, exactly. Where someone's going to find it much easier to see a Pydantic error saying these fields were wrong rather than seeing some strange matrix that comes out the wrong shape because they passed in something as a, as a string, not an int.

40:45 Or 'NoneType' object has no attribute such and such.

40:48 Yeah.

40:49 Whatever.

40:49 Right.

40:50 That standard error they always run into.

40:51 Okay.

40:52 That's pretty interesting.

40:53 Let's talk a little bit about speed.

40:56 You have talked about this a couple of times, but maybe it's just worth throwing up a simple example here to put them together.

41:03 So we've got Pydantic, we've got attrs, we've got valideer, which I've never heard of, but very cool.

41:08 Marshmallow and a couple of others like Django REST Framework and Cerberus.

41:13 So it has the, all of these in relative time to some benchmark code that you have, but it basically gives it as a percentage or a factor of performance, right?

41:23 Yeah.

41:24 And the first thing I'll say is that there are lies, damned lies, and benchmarks.

41:28 Like you'll get, you might well get different results, but my impression from what I've seen is that Pydantic is as fast, if not faster than the other ways of doing it in Python.

41:38 Short of writing your own custom code in each place to be like, yeah, to do manual validation, which is a massive pain.

41:44 And if you're doing that, you probably want to go and write it in a proper compiled language anyway.

41:48 Right.

41:48 Right.

41:49 Or maybe just use Cython on some little section, something like that, right?

41:53 So all of Pydantic is compiled with Cython and is about twice as fast.

41:58 If you install it with pip, you will get mostly the compiled version.

42:02 There are binaries available for Windows, Mac and Linux, Windows 64-bit, not 32, and maybe some other extreme, and it will compile for other operating systems.

42:12 So it's already faster than just calling Python.

42:16 Well, I don't know about whether the validation with Pydantic that's compiled is faster than raw Python, but like it'll be of the same order of magnitude.

42:23 Yeah.

42:23 Yeah.

42:24 Fantastic.

42:24 Okay.

42:24 I didn't realize it was compiled with Cython.

42:26 That's great.

42:27 Yeah.

42:27 It's part of the magic, huh?

42:28 Making it faster.

42:29 Yeah.

42:29 So that was David Montague a year and a half ago, put an enormous amount of effort into it and, yeah, about doubled the performance.

42:36 It's Python compiled with Cython rather than real Cython code.

42:40 So it's not at C speed, but it's faster than just calling Python.

42:44 Yeah, absolutely.

42:45 And Cython, taking the type hint information and working so well with it these days, it probably was easier than it used to be or didn't require as many changes as it might.

42:54 Otherwise, I think it's an open question.

42:56 I think it's an open question whether Cython is faster with type hints.

42:58 It does.

42:58 In places, actually adding type hints makes it slower, because it does its own checks that a value is a string when you've said it's a string.

43:05 But yeah, I think it does use it in places.

43:07 Yeah.

43:07 I was thinking more like you don't have to rewrite it in Cython.

43:10 I don't have to convert Python code to Cython code where it has its own sort of descriptor language.

43:15 But like if you have Python code that's type annotated, it'll take that and run with it these days.

43:21 I think it isn't any faster or any better because of the type hints much.

43:25 Although someone out there is an expert and I don't want to say that.

43:27 So I'm not sure.

43:28 Yeah, I hear you.

43:29 All right.

43:30 Another thing I want to touch on is the data model code generator.

43:33 You want to tell us about this thing?

43:34 What is this?

43:34 I haven't used it much.

43:36 But what we haven't talked about here

43:38 is JSON Schema, which is what Sebastián Ramírez implemented a couple of years ago when he was first starting out on FastAPI.

43:45 And one of the coolest features of FastAPI

43:47 and Pydantic is that once you've created your model, you don't just get the model and model validation.

43:54 You also get a schema generated for your model.

43:56 And in FastAPI, that's automatically turned into really smart documentation with ReDoc.

44:01 So you don't even have to think about documentation most of the time if it's an internal or not widely used API.

44:08 And even if it's widely used, add some docstrings and you've got yourself amazing API documentation, straight from your model.
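To see the schema Pydantic generates, here is a minimal sketch assuming an abbreviated version of the `User` model from Pydantic's docs index page. Pydantic v1 exposes the schema as `Model.schema()`, and v2 renamed it `Model.model_json_schema()`; the `getattr` fallback is only there so the sketch runs on either version.

```python
from typing import List

from pydantic import BaseModel


class User(BaseModel):
    id: int
    name: str = "John Doe"
    friends: List[int] = []


# Pydantic v2 calls this model_json_schema(); v1 calls it schema().
schema_method = getattr(User, "model_json_schema", None) or User.schema
schema = schema_method()

print(schema["required"])                        # ['id']
print(schema["properties"]["friends"]["items"])  # {'type': 'integer'}
```

Only `id` is listed as required, because the other fields have defaults; this dictionary is what FastAPI feeds into its OpenAPI document and documentation pages.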

44:16 And data model code generation, as I understand it, is generating those JSON Schemas for models.

44:23 Is that right?

44:24 Yeah, I think so.

44:24 It feels to me like it's the reverse of what you described from what Sebastian has created, right?

44:29 Like, given one of these OpenAPI definitions, it will generate the Pydantic model for you.

44:36 So if I was going to consume an API and I'm like, well, I've got to write some Pydantic models to match it,

44:42 you could run this thing to say, well, give me a good shot at getting pretty close to what it's going to be.

44:47 Yeah.

44:47 Yeah.

44:48 Yeah.

44:48 I had it around the wrong way.

44:49 But yeah, my instinct, though I haven't used it, is that it does 90% of the work for you.

44:55 And then there's a bit of manual tinkering around the edges to change some of the types, I suspect.

44:59 But like, yeah, really useful.
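datamodel-code-generator writes Pydantic model source code from an OpenAPI or JSON Schema document. The sketch below is not how that tool works: it swaps in Pydantic's `create_model` to build a model in memory from a small, flat JSON Schema, only to illustrate the schema-to-model direction. The `model_from_schema` helper, its type mapping, and the weather fields are all hypothetical, and nested objects, arrays, and `$ref` are ignored.

```python
from typing import Any, Dict, Optional, Tuple, Type

from pydantic import BaseModel, ValidationError, create_model

# Hypothetical mapping from JSON Schema primitive types to Python types.
JSON_TO_PY: Dict[str, Any] = {
    "integer": int,
    "number": float,
    "string": str,
    "boolean": bool,
}


def model_from_schema(schema: Dict[str, Any]) -> Type[BaseModel]:
    """Hypothetical helper: build a Pydantic model from a flat JSON Schema object."""
    required = set(schema.get("required", []))
    fields: Dict[str, Tuple[Any, Any]] = {}
    for name, prop in schema.get("properties", {}).items():
        py_type = JSON_TO_PY.get(prop.get("type"), Any)
        if name in required:
            fields[name] = (py_type, ...)  # Ellipsis marks the field as required
        else:
            fields[name] = (Optional[py_type], prop.get("default"))
    return create_model(schema.get("title", "GeneratedModel"), **fields)


# Hypothetical schema, loosely in the spirit of a weather API.
Weather = model_from_schema({
    "title": "Weather",
    "type": "object",
    "properties": {
        "city": {"type": "string"},
        "temperature": {"type": "number"},
        "units": {"type": "string", "default": "metric"},
    },
    "required": ["city", "temperature"],
})

report = Weather(city="Portland", temperature="18.5")
print(report.temperature)  # 18.5, coerced from the string
```

A code generator has the advantage of producing source you can read, commit, and hand-tune, which is the "manual tinkering around the edges" mentioned above.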

45:01 Yeah.

45:01 And it supports different frameworks and stuff.

45:03 And I haven't used it either, but it just seemed like a cool thing to quickly get people started if they've got something complex to do with Pydantic.

45:11 So for example, I built this live weather data service for one of my classes over at weather.talkpython.fm.

45:19 I built that in FastAPI and it exchanges Pydantic models.

45:23 And all you've got to do to see the documentation is go to /docs.

45:28 And then it gives you the JSON schema.

45:31 So presumably I could point that thing at this and then it would generate.

45:36 And go back to the model.

45:37 Exactly.

45:38 And get a fairly complicated Pydantic model prebuilt for me, which I think is pretty excellent.

45:42 Yeah.

45:43 It's worth saying, and maybe you disagree, but I think the ReDoc version of the documentation, the other autodocs, is even smarter than that one.

45:51 I don't know if you've got it.

45:52 Yeah, that one I think is even smarter.

45:53 Oh yeah, this is a really nice one.

45:54 I like this one a lot.

45:55 Yeah, it even gives you the responses there.

45:57 It could be a 200 or a 422. I did build that in there, but I didn't expect it to actually know.

46:02 That's pretty interesting.

46:03 Yeah, it's cool.

46:03 Yeah, it's very cool.

46:04 So they're both there, at either /docs or /redoc.

46:08 FastAPI will serve them.

46:10 You can switch one off or change the endpoints, but yeah.

46:12 Yeah.

46:13 And by the way, if you're putting out a FastAPI app and you don't want public documentation,

46:18 make sure that you set the docs URL and the ReDoc URL to None

46:22 when you're creating your app or your API instance.

46:25 So yeah, that's always on unless you take action.

46:28 So you better be sure you want to.

46:29 Or you can do what I've done, which is protect it with authentication so the front end developers can use it, but it's not publicly available.

46:36 So if you're building like a React app, it's really useful to have your front end engineers be able to go and see that stuff and understand what the fields are.

46:43 But it's a bit of a weird thing to make public, even if there's nothing particularly sensitive.

46:47 So yeah, you can put it behind authentication.

46:49 Yeah.

46:49 Yeah, very good.

46:50 All right.

46:51 You already talked about the PyCharm plugin, but maybe give us a sense for why do we need a PyCharm plugin?

46:57 I have PyCharm.

46:59 And if it has the type information, a lot of times it seems like it's already good to go.

47:04 So what do I get from this PyCharm plugin?

47:06 Like, why should I go put this in?

47:07 So once you've created your model, if we think about the example on the index page again, accessing .friends or .id or .name will work.

47:18 And PyCharm will correctly give us information about it; it'll say, okay, first name exists, but foobar name doesn't exist.

47:28 It's a string, so it makes sense to add it to another string.

47:31 But when we initialize a model, it doesn't know how. The __init__ function of Pydantic just looks like: take all of the things and pass them to some magic function that will then do the validation.

47:40 I see.

47:41 It looks like **kwargs, good luck, go read the docs.

47:45 Yeah, exactly that.

47:46 But this is where the PyCharm plugin comes in because it gives you documentation on the arguments.

47:51 Okay, so it looks at the fields and their types and says, well, these are actually keyword arguments to the constructor, the initializer.

48:00 Yeah.

48:00 Okay.

48:00 Yeah, got it.

48:01 That's very cool.

48:02 And it will also, I don't even know what it does.

48:06 I just use it the whole time and it works.

48:07 You know, one of those things you don't even think about.

48:09 Yeah, cool.

48:10 So it gives you auto-completion and type checking, which is cool for the initializer, right?

48:14 So if you were to try to pass in something wrong, it lets you know.
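Pydantic's own `BaseModel.__init__` accepts only `**data`, which is all an editor sees without help. At runtime, Pydantic does compute a keyword-only signature from the fields, which is roughly the information the PyCharm plugin surfaces statically. A sketch, again assuming an abbreviated `User` model:

```python
import inspect
from typing import List

from pydantic import BaseModel


class User(BaseModel):
    id: int
    name: str = "John Doe"
    friends: List[int] = []


# Keyword-only parameters derived from the fields: id is required,
# name and friends carry their defaults.
print(inspect.signature(User))
```

Tools that call `inspect.signature` at runtime (IPython, for instance) can use this, but a static analyzer never runs the class body, which is the gap the plugin fills.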

48:17 Also, it says it supports refactoring.

48:19 If you refactor the keyword.

48:21 One of the really useful things it does relates to validators, which we talked about: they're defined with a decorator, and they are class methods.

48:28 That matters specifically because you might think that they're instance methods and that you have access to self.

48:33 You don't because they're called before the model itself is initialized.

48:37 So the first argument to them should be cls.

48:40 It will automatically give you an error if you put self, which is really helpful when you're creating those validators, because without it, PyCharm assumes it's an instance method, gives you self, and then you get yourself into hot water when you access self.userid and it breaks.

48:55 Oh, interesting.

48:56 Okay.

48:56 Yeah, that makes a lot of sense.

48:57 Because it's converting and checking all the values and then it creates the object and assigns the fields, right?

49:04 Yeah, so we can access other values during validation from the values keyword argument to the validator, but not via like self.userid or whatever.
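A minimal sketch of the validator behavior described here, using the v1-style `@validator` decorator from the episode's era. Pydantic v2 still accepts it with a deprecation warning; its replacement there is `@field_validator`, with earlier fields on `info.data`. The `SignupForm` model and its fields are hypothetical.

```python
from pydantic import BaseModel, ValidationError, validator


class SignupForm(BaseModel):  # hypothetical model for illustration
    username: str
    password: str
    password_confirm: str

    @validator("password_confirm")
    def passwords_match(cls, v, values):
        # Pydantic treats validators as class methods: the instance doesn't
        # exist yet, so there is no `self`. Fields validated earlier (in
        # definition order) are available through the `values` dict instead.
        if "password" in values and v != values["password"]:
            raise ValueError("passwords do not match")
        return v


form = SignupForm(username="samuel", password="s3cret", password_confirm="s3cret")
print(form.password_confirm)

try:
    SignupForm(username="samuel", password="s3cret", password_confirm="typo")
except ValidationError as exc:
    print(exc)  # the ValueError surfaces as a ValidationError on password_confirm
```

The `"password" in values` guard matters: if `password` itself failed validation, it won't be in `values`, and the check should not crash.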

49:13 Yeah, cool.

49:14 And Risky Chance loves that it works with aliases too, which is pretty cool.

49:18 Oh, yeah.

49:19 It does.

49:19 It does lots of cool things.

49:21 I'm really impressed by it.

49:22 It's one of the coolest things that come out of Pydantic.
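For readers unfamiliar with aliases: an alias lets the external data use a different key than the Python attribute name. A sketch with a hypothetical `Account` model, written to run on Pydantic v1 or v2:

```python
from pydantic import BaseModel, Field


class Account(BaseModel):  # hypothetical model for illustration
    # snake_case attribute in Python, camelCase key in the external data.
    user_id: int = Field(..., alias="userId")


account = Account(userId="42")  # populated via the alias; "42" is coerced to int
print(account.user_id)  # 42

# v2 calls the export method model_dump(); v1 calls it dict().
dump = getattr(account, "model_dump", None) or account.dict
print(dump(by_alias=True))  # {'userId': 42}
```

By default the initializer expects the alias rather than the field name, which is exactly the kind of detail an editor can only get right if it understands Pydantic.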

49:24 Awesome.

49:25 Yeah, I've installed it and I'm like, I'm sure my Pydantic experience is better, but I just don't know what is built in and what is coming from this thing.

49:32 So, yeah, that's...

49:33 We're also used to PyCharm just working on so many things that you don't even notice.

49:38 Like, yeah, you only notice when it doesn't work, so...

49:40 Yeah, absolutely.

49:41 So, we're getting a little short on time, but I did want to ask you about Python DevTools because you talked about having Pydantic work well with DevTools as well.

49:53 Yeah.

49:53 What are these?

49:54 You are also the author of Python DevTools, yeah?

49:56 Yeah, what is this?

49:57 For me, it's just a debug print command that prints stuff prettily, gives it color, and tells me what line it was printed on.

50:03 And I use it the whole time in development instead of print.

50:05 And obviously, I wanted it to show me my Pydantic models in a pretty way, so it has integration.

50:11 There are some hooks in DevTools that allow it to customize the way stuff's printed.

50:16 And the author of Rich, slightly frustratingly, has used a different system all over again, but he's also supported Pydantic.

50:23 So, Pydantic will also print pretty with Rich as well as with DevTools.
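A sketch of printing a model with DevTools' `debug()`, assuming the `devtools` package is installed; if it isn't, the sketch falls back to `repr()` so it still runs. Pydantic models implement a `__pretty__` hook, which is what DevTools looks for when formatting an object.

```python
from typing import List

from pydantic import BaseModel

try:
    # Assumes python-devtools is installed (pip install devtools).
    from devtools import debug
except ImportError:
    debug = None


class User(BaseModel):
    id: int
    name: str = "John Doe"
    friends: List[int] = []


user = User(id=123, friends=[1, 2, 3])

if debug is not None:
    # Prints the file and line, the expression, and the model expanded
    # over several lines.
    debug(user)
else:
    print(repr(user))
```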

50:27 Yeah, cool.

50:28 Okay, really nice.

50:29 Yeah, Rich is a great TUI (terminal user interface) library for Python.

50:34 Yeah, it's cool.

50:35 It's different from DevTools.

50:36 I wouldn't say they compete.

50:37 DevTools is, for me, it's just...

50:38 It does have some other things, some timing tools and some formatting, but for me, it's just the debug print command that Python never had.

50:45 Nice.

50:45 So, what's the Pydantic plugin, or rather, connection here?

50:50 So, if I debug a model with DevTools, I get just a really nice representation?

50:55 Yeah, exactly that.

50:56 It's not showing it...

50:58 It's because you're in the DevTools docs.

50:59 There are some other docs in Pydantic that give you an example.

51:02 But it'll give you a nice representation, expanded out rather than squashed into one line.

51:07 So, usage with DevTools is the last...

51:09 There you go.

51:09 Got it.

51:09 Yeah, so you see that.

51:11 The user printed out nicely instead of, yeah, done like that.

51:15 I suppose that's kind of...

51:16 That demonstrates its usage for me.

51:18 Yeah, perfect.

51:19 That looks really good.

51:20 It's nice to be able to just print out these sorts of things and see them really quickly.

51:25 What's the just basic string representation of a Pydantic model?

51:29 For example, if I'm in PyCharm and I hit a breakpoint, or I'm just curious what something is and I just print it, PyCharm will put a little grayed out...

51:37 String, str representation.

51:39 It's right there.

51:40 I think that's the string representation you're looking at right there.

51:42 Yeah, perfect.

51:43 So, you get a really rich sort of view of it embedded in the editor or if you print it...

51:48 And if you use repr, then you get it basically wrapped in User(...).

51:51 All right.

51:51 Okay.

51:52 So, it gives you...

51:53 As if it were...

51:53 Yeah.

51:54 You're trying to construct it out of that data.

51:56 Yeah.

51:56 Okay.

51:57 Fantastic.
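The two text forms discussed here, sketched with an abbreviated `User` model. `str()` gives the bare `field=value` pairs shown in the editor, while `repr()` wraps them in the class name, as if constructing the model from that data. Note that the inputs are coerced before either is produced.

```python
from typing import List

from pydantic import BaseModel


class User(BaseModel):
    id: int
    name: str = "John Doe"
    friends: List[int] = []


user = User(id="123", friends=[1, "2"])

print(str(user))   # id=123 name='John Doe' friends=[1, 2]
print(repr(user))  # User(id=123, name='John Doe', friends=[1, 2])
```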

51:57 Well, you know, we've covered a bunch of things and I know there's a lot more.

52:02 I don't recall whether we talked about this while we were recording or whether we talked about it before and we were just setting up what we wanted to talk about.

52:11 But it's worth emphasizing that this is not just a FastAPI validation data exchange thing.

52:16 It works really great.

52:17 A lot of the stuff happens there.