Scaling Python and Jupyter with ZeroMQ

What if you wanted the power of asynchronous queues, plus many more message exchange patterns like pub/sub, but you wanted to do zero of the server work of running Celery with RabbitMQ or Redis? Then you should check out ZeroMQ.

ZeroMQ is to queuing what Flask is to web apps: a powerful and simple framework for building just what you need. You're almost certain to learn some new networking patterns and capabilities in this episode with our guest Min Ragan-Kelley, who joins us to discuss using ZeroMQ from Python as well as how ZeroMQ is central to the internals of Jupyter Notebooks.

Episode Deep Dive

Guest introduction and background

Min Ragan-Kelley is a seasoned contributor to the Jupyter and IPython ecosystem with over a decade of experience. He started out in physics, doing computational simulations of plasmas, and eventually discovered Python’s flexibility for scientific work. That path led him deep into IPython (now Jupyter) development, where he helped build advanced features such as IPython Parallel. Min’s current focus includes maintaining PyZMQ (the Python bindings for ZeroMQ) and working on tools that power distributed and interactive computing in Python.

What to Know If You're New to Python

If you’re new to Python but curious about the concepts in this episode, here are some ideas and resources to help you get the most out of the discussion:

- Understand Basic Python Scripting: You’ll want to be comfortable writing and running simple Python scripts before jumping into distributed or asynchronous code.

- Familiarity with Package Installation (pip / venv): ZeroMQ and PyZMQ are external libraries, so practice installing packages in a virtual environment.

- High-level Grasp of Concurrency: Even knowing basic async or threading will help you follow the messaging topics in ZeroMQ.

- Be Aware of Data Structures: ZeroMQ uses messages (rather than raw socket streams), so a little knowledge of Python lists, dictionaries, and byte arrays can be valuable.

Key points and takeaways

- ZeroMQ as a Central Player in Scalable, Asynchronous Messaging

ZeroMQ is a C++ library that abstracts away low-level networking details and focuses on messaging patterns rather than raw TCP/UDP connections. By using sockets with defined patterns (e.g., publish-subscribe or request-reply), developers can build robust, concurrent applications without setting up a separate broker service. This removes much of the overhead and complexity found in typical async solutions like Celery paired with Redis or RabbitMQ. Moreover, ZeroMQ is highly portable across many languages, making it well-suited for polyglot or microservice architectures.
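To make "no separate broker service" concrete, here is a minimal PyZMQ sketch (assuming `pip install pyzmq`) in which one thread binds a REP socket and the main thread connects a REQ socket directly to it. The `inproc://shout` endpoint and the upper-casing "service" are made up for illustration; swapping in a `tcp://` address would let the two peers live in different processes or on different machines.

```python
import threading

import zmq

ENDPOINT = "inproc://shout"  # hypothetical name; inproc stays in one process, tcp:// crosses machines


def shout_service(ctx: zmq.Context, ready: threading.Event, n_requests: int) -> None:
    """Answer a fixed number of requests by upper-casing them; the service binds its own socket."""
    with ctx.socket(zmq.REP) as rep:
        rep.bind(ENDPOINT)
        ready.set()  # signal that the endpoint now exists
        for _ in range(n_requests):
            text = rep.recv_string()
            rep.send_string(text.upper())


def main() -> list[str]:
    ctx = zmq.Context()
    ready = threading.Event()
    words = ["pub", "sub", "req"]
    server = threading.Thread(target=shout_service, args=(ctx, ready, len(words)))
    server.start()
    ready.wait()  # connect only after the service has bound

    replies = []
    with ctx.socket(zmq.REQ) as req:
        req.connect(ENDPOINT)  # talk to the peer directly; nothing runs in between
        for word in words:
            req.send_string(word)  # a REQ socket must strictly alternate send and recv
            replies.append(req.recv_string())

    server.join()
    ctx.term()  # every socket is closed by now, so this returns promptly
    return replies


if __name__ == "__main__":
    print(main())  # ['PUB', 'SUB', 'REQ']
```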


- How Jupyter Relies on ZeroMQ

Jupyter notebooks use ZeroMQ sockets at their core to communicate between the “client” (the notebook interface) and the “kernel” (where code runs). For instance, Jupyter uses a publish-subscribe channel (IOPub) to broadcast real-time output to all connected clients, and request-reply channels (such as the shell channel) to execute code and return results. This design lets multiple clients connect to the same kernel without the kernel having to handle each peer individually.
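The broadcast half of this design can be sketched with a PUB socket playing the kernel and two SUB sockets playing frontends. This is not Jupyter's wire protocol (real messages carry headers, signatures, and more frames); the topics, payloads, and `inproc://iopub` endpoint are hypothetical. The short sleep works around ZeroMQ's "slow joiner" behavior, where messages published before a subscription arrives are simply dropped.

```python
import time

import zmq


def main() -> dict[str, list[str]]:
    ctx = zmq.Context()
    kernel = ctx.socket(zmq.PUB)
    kernel.bind("inproc://iopub")

    # Two hypothetical frontends: one wants everything, one only "stream" output.
    all_output = ctx.socket(zmq.SUB)
    all_output.connect("inproc://iopub")
    all_output.setsockopt(zmq.SUBSCRIBE, b"")  # empty prefix matches every topic
    streams = ctx.socket(zmq.SUB)
    streams.connect("inproc://iopub")
    streams.setsockopt(zmq.SUBSCRIBE, b"stream")  # prefix match against the first frame

    time.sleep(0.2)  # give the subscriptions time to reach the PUB socket

    # The kernel publishes once; it does not track how many frontends are listening.
    kernel.send_multipart([b"status", b"busy"])
    kernel.send_multipart([b"stream", b"hello from the kernel"])
    kernel.send_multipart([b"status", b"idle"])

    received = {"all_output": [], "streams": []}
    for name, sub in (("all_output", all_output), ("streams", streams)):
        while sub.poll(timeout=200):  # returns 0 once nothing else arrives
            topic, body = sub.recv_multipart()
            received[name].append(f"{topic.decode()}: {body.decode()}")

    for sock in (kernel, all_output, streams):
        sock.close(linger=0)
    ctx.term()
    return received


if __name__ == "__main__":
    print(main())
```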


- PyZMQ: Pythonic Bindings for ZeroMQ

PyZMQ is the Python library that provides the bridge between ZeroMQ’s C/C++ code and Python. It wraps native functionality—like atomic message sending, non-blocking IO, and multiple socket types—while staying relatively simple to integrate. PyZMQ is foundational to Jupyter but is equally relevant for custom microservices or distributed Python solutions.
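A few of the features listed above look like this in PyZMQ. This is a sketch assuming a recent pyzmq, and the endpoint name is hypothetical: a non-blocking receive that raises `zmq.Again` instead of waiting, a multipart message that arrives all-or-nothing, and the `send_json`/`recv_json` convenience helpers.

```python
import zmq


def main():
    ctx = zmq.Context()
    sender = ctx.socket(zmq.PAIR)
    receiver = ctx.socket(zmq.PAIR)
    sender.bind("inproc://pyzmq-demo")  # hypothetical endpoint name
    receiver.connect("inproc://pyzmq-demo")

    # Non-blocking IO: with nothing queued, NOBLOCK raises zmq.Again instead of waiting.
    try:
        receiver.recv(flags=zmq.NOBLOCK)
        queue_was_empty = False
    except zmq.Again:
        queue_was_empty = True

    # Multipart messages are atomic: the receiver gets every frame or none of them.
    sender.send_multipart([b"header", b"body"])
    frames = receiver.recv_multipart()

    # send_json/recv_json serialize a Python object to one JSON message and back.
    sender.send_json({"op": "add", "args": [2, 3]})
    request = receiver.recv_json()

    sender.close()
    receiver.close()
    ctx.term()
    return queue_was_empty, frames, request


if __name__ == "__main__":
    print(main())  # (True, [b'header', b'body'], {'op': 'add', 'args': [2, 3]})
```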


- Understanding and Choosing Socket Patterns

ZeroMQ offers several core patterns, each corresponding to a specific socket type. The main ones mentioned are:

- Publish-Subscribe (Pub-Sub) for broadcasting messages to many receivers.

- Push-Pull (Ventilator-Worker) for work queues distributing tasks across multiple workers.

- Request-Reply (REQ-REP, with DEALER-ROUTER for asynchronous variants) for classic client-server interactions (but more flexible than raw HTTP).

Developers can mix and match these patterns without rewriting large swaths of code; typically only the socket type and which side “binds” versus “connects” change.
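The Push-Pull pattern can be sketched with one ventilator and two in-process workers (the `inproc://tasks` endpoint and integer tasks are placeholders). PUSH hands each message to exactly one connected PULL peer, so the workers share the queue instead of each receiving a copy. Which worker gets which task is up to ZeroMQ's load balancing, so the sketch only checks that every task was handled once.

```python
import zmq


def main(n_tasks: int = 6) -> dict[int, list[int]]:
    ctx = zmq.Context()
    ventilator = ctx.socket(zmq.PUSH)
    ventilator.bind("inproc://tasks")  # the stable side binds...

    workers = []
    for _ in range(2):
        worker = ctx.socket(zmq.PULL)
        worker.connect("inproc://tasks")  # ...and workers connect; add more to scale out
        workers.append(worker)

    for task in range(n_tasks):
        ventilator.send_string(str(task))  # each task goes to exactly one worker

    poller = zmq.Poller()
    for worker in workers:
        poller.register(worker, zmq.POLLIN)

    handled = {i: [] for i in range(len(workers))}
    while sum(len(tasks) for tasks in handled.values()) < n_tasks:
        ready = dict(poller.poll(timeout=1000))
        if not ready:
            break  # nothing arrived within 1 s; stop rather than hang
        for i, worker in enumerate(workers):
            if worker in ready:
                handled[i].append(int(worker.recv_string()))

    for sock in [ventilator, *workers]:
        sock.close(linger=0)
    ctx.term()
    return handled


if __name__ == "__main__":
    print(main())  # the split between workers depends on ZeroMQ's load balancing
```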


- Async vs. Threading vs. Multiprocessing vs. Messaging

Many people default to Python’s threading or multiprocessing libraries for parallel or asynchronous tasks, but message-based frameworks like ZeroMQ can often be more scalable and simpler to reason about. Instead of shared-state concurrency, you have explicit message-passing, which can reduce locking headaches. ZeroMQ’s asynchronous sending/receiving also interacts nicely with Python’s asyncio or frameworks such as Tornado.
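As one illustration of the asyncio integration, PyZMQ ships a `zmq.asyncio` module whose sockets return awaitables. In this sketch, the squaring "service" and the endpoint are hypothetical. A REP socket and a REQ socket exchange messages inside a single event loop, with no threads or locks, and the two coroutines share data only through messages.

```python
import asyncio

import zmq
import zmq.asyncio


async def serve_squares(rep: zmq.asyncio.Socket, n_requests: int) -> None:
    """Hypothetical service: reply to each number with its square."""
    for _ in range(n_requests):
        value = int((await rep.recv()).decode())  # awaiting yields to the event loop
        await rep.send(str(value * value).encode())


async def ask(req: zmq.asyncio.Socket, numbers: list[int]) -> list[int]:
    results = []
    for number in numbers:
        await req.send(str(number).encode())
        results.append(int((await req.recv()).decode()))
    return results


async def main() -> list[int]:
    ctx = zmq.asyncio.Context()
    rep = ctx.socket(zmq.REP)
    rep.bind("inproc://squares")
    req = ctx.socket(zmq.REQ)
    req.connect("inproc://squares")

    numbers = [1, 2, 3, 4]
    # Both coroutines run concurrently on one thread.
    _, results = await asyncio.gather(serve_squares(rep, len(numbers)), ask(req, numbers))

    rep.close()
    req.close()
    ctx.term()
    return results


if __name__ == "__main__":
    print(asyncio.run(main()))  # [1, 4, 9, 16]
```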

- Serialization and Zero-Copy Transfers

Large data transfers, such as NumPy arrays, can be sent efficiently with ZeroMQ’s zero-copy features. This means you avoid extra memory copying in Python—great for real-time or high-throughput applications. The Jupyter protocol itself often exploits multi-part messages, sending text (JSON) plus binary buffers in separate frames.
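The multipart-plus-zero-copy idea can be sketched with NumPy, which is assumed to be installed. The message is a small JSON header frame describing dtype and shape, followed by the raw array buffer sent with `copy=False`. This mirrors the approach in PyZMQ's own documentation rather than Jupyter's exact protocol, and it assumes a C-contiguous array. PyZMQ may still copy small messages, and with `copy=False` you should not modify the array until ZeroMQ has finished sending it.

```python
import numpy as np
import zmq


def send_array(sock: zmq.Socket, array: np.ndarray) -> None:
    """Send a JSON header frame, then the array's raw buffer (assumes C-contiguous data)."""
    header = {"dtype": str(array.dtype), "shape": array.shape}
    sock.send_json(header, flags=zmq.SNDMORE)  # SNDMORE: another frame belongs to this message
    sock.send(array, copy=False)  # hand the buffer to ZeroMQ without a Python-side copy


def recv_array(sock: zmq.Socket) -> np.ndarray:
    header = sock.recv_json()
    frame = sock.recv(copy=False)  # a zmq.Frame; .buffer exposes its memory without copying
    array = np.frombuffer(frame.buffer, dtype=header["dtype"])
    return array.reshape(header["shape"])


def roundtrip(data: np.ndarray) -> np.ndarray:
    """Send an array over an in-process PAIR connection and receive it back."""
    ctx = zmq.Context()
    sender, receiver = ctx.socket(zmq.PAIR), ctx.socket(zmq.PAIR)
    sender.bind("inproc://arrays")  # hypothetical endpoint name
    receiver.connect("inproc://arrays")
    try:
        send_array(sender, data)
        return recv_array(receiver)
    finally:
        sender.close(linger=0)
        receiver.close(linger=0)
        ctx.term()


if __name__ == "__main__":
    original = np.arange(12, dtype=np.float64).reshape(3, 4)
    received = roundtrip(original)
    print(received.shape, np.array_equal(received, original))  # (3, 4) True
```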


- IPython Parallel and ZeroMQ

An extension of the IPython project, IPython Parallel uses ZeroMQ to manage distributed or parallel computation across many kernels (called “engines”). Rather than manually handling cluster management, IPython Parallel abstracts the complexity through ZeroMQ’s identity-based message routing. It’s a powerful tool for scientific workloads that need to scale code across multiple machines.
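Identity-based routing is the primitive underneath this. A ROUTER socket tags each incoming message with the sender's identity, and sending a message whose first frame is an identity delivers it to that specific peer. In the sketch below, a controller addresses two DEALER "engines" individually. The engine names and task strings are hypothetical, and this is not IPython Parallel's actual protocol.

```python
import zmq


def main():
    ctx = zmq.Context()
    controller = ctx.socket(zmq.ROUTER)
    controller.bind("inproc://controller")  # hypothetical endpoint name

    engines = {}
    for name in (b"engine-0", b"engine-1"):
        engine = ctx.socket(zmq.DEALER)
        engine.setsockopt(zmq.IDENTITY, name)  # must be set before connecting
        engine.connect("inproc://controller")
        engine.send(b"ready")
        engines[name] = engine

    # ROUTER prepends the sender's identity frame to each incoming message.
    registered = []
    for _ in engines:
        identity, _payload = controller.recv_multipart()
        registered.append(identity)

    # Sending [identity, payload] routes the payload to that one engine only.
    controller.send_multipart([b"engine-1", b"x = 2 ** 10"])
    controller.send_multipart([b"engine-0", b"y = 'hello'"])
    assignments = {name.decode(): sock.recv().decode() for name, sock in engines.items()}

    for sock in [controller, *engines.values()]:
        sock.close(linger=0)
    ctx.term()
    return sorted(registered), assignments


if __name__ == "__main__":
    print(main())
```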


- Use Cases in Microservices

For distributed applications with many small services, ZeroMQ can serve as a high-speed communication backbone. It supports ephemeral scaling: new instances can appear and connect with minimal changes to the overall architecture. ZeroMQ’s approach is brokerless, so any service can directly connect to any other—an appealing model for container-based environments where ephemeral services come and go quickly.
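Here is a small sketch of that ephemeral, brokerless style, assuming the services run on one host. A long-lived collector binds a PULL socket to a random TCP port, and short-lived instances connect with PUSH, send one result, and exit. The collector is never reconfigured as instances come and go. The names and messages are hypothetical.

```python
import zmq


def main(n_instances: int = 3) -> list[str]:
    ctx = zmq.Context()
    collector = ctx.socket(zmq.PULL)
    # The long-lived service binds once; instances only need to know this address.
    port = collector.bind_to_random_port("tcp://127.0.0.1")
    address = f"tcp://127.0.0.1:{port}"

    # Short-lived instances: connect, send one result, and go away.
    for i in range(n_instances):
        instance = ctx.socket(zmq.PUSH)
        instance.connect(address)
        instance.send_string(f"result from instance {i}")  # queued until the TCP link is up
        instance.close(linger=1000)  # allow up to 1 s to flush the queued message

    results = []
    while len(results) < n_instances and collector.poll(timeout=2000):
        results.append(collector.recv_string())

    collector.close()
    ctx.term()
    return sorted(results)


if __name__ == "__main__":
    print(main())
```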

- Tools:

- Docker / Kubernetes (referenced conceptually in the conversation)


- Challenges Building PyZMQ (C Extensions) for Cross-Platform

Min discussed overcoming the complexities of distributing PyZMQ wheels. Before Python “wheels,” Windows users often had to compile from source or rely on unofficial installers. Tools like cibuildwheel and delvewheel have simplified cross-platform builds, enabling PyZMQ to ship prebuilt binaries for major OSes and architectures.

- Real-Time Interaction with Scientific Simulations

Min’s background in plasma physics showed him the benefit of hooking simulations into Python and adjusting parameters in real time. Wrapping the existing C++ simulation code in a Python API (with Cython) and driving it from a notebook created a feedback loop, like turning physical knobs while the experiment runs, without rewriting the simulation logic. This concept extends well beyond physics, making dynamic, interactive data processing feasible in other domains too.
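One way to build such a remotely turnable knob with ZeroMQ is shown in this hypothetical sketch. A simulation loop checks a PULL socket with a zero timeout on every step, so it never stalls waiting for input, and adopts a new `current` value whenever one arrives. The loop body only records the setting; it stands in for a real physics step.

```python
import time

import zmq


def run_simulation(control: zmq.Socket, steps: int, current: float = 1.0) -> list[float]:
    """Hypothetical simulation loop whose 'current' knob can be turned while it runs."""
    history = []
    for _ in range(steps):
        # timeout=0: check for a command without ever stalling the simulation.
        if control.poll(timeout=0):
            command = control.recv_json()
            current = command.get("current", current)
        history.append(current)  # stand-in for advancing the real simulation one step
        time.sleep(0.001)
    return history


def main() -> list[float]:
    ctx = zmq.Context()
    knobs = ctx.socket(zmq.PULL)
    knobs.bind("inproc://knobs")  # hypothetical endpoint; tcp:// would allow a remote notebook
    controller = ctx.socket(zmq.PUSH)
    controller.connect("inproc://knobs")

    controller.send_json({"current": 2.5})  # "turn the knob"
    history = run_simulation(knobs, steps=50)

    controller.close(linger=0)
    knobs.close()
    ctx.term()
    return history


if __name__ == "__main__":
    print(main()[-1])
```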

Interesting quotes and stories

Steering a 5-day simulation in half a day: Min described how, in his physics research, tuning the parameters for certain simulations used to take multiple 5-day runs. Once the simulation was wrapped in Python so he could steer it mid-run, he could get the same result in a little over half a day.

“Publish-Subscribe is basically sending messages to all who can keep up”: This quote highlights how ZeroMQ is unconcerned about how many subscribers connect or disconnect. It simply broadcasts data without requiring the publisher to store or track individual peers.

Key definitions and terms

- ZeroMQ Context: A container for IO threads that handle the actual network communication. All sockets in a process typically share a context.

- Pub-Sub: Publish-subscribe messaging pattern; one sender, multiple receivers.

- Dealer / Router: Sockets used for request-reply patterns, offering flexible routing of messages.

- Zero-Copy: Transferring data between locations (e.g., memory buffers) without copying it in user space, improving performance.

- IPython Parallel: A Python library for parallel or distributed computing built on ZeroMQ, allowing multiple engines to execute code concurrently.

Learning resources

Here are some recommended resources if you want to dive deeper into the topics from this episode:

- ZeroMQ Guide: In-depth tutorials and best practices for using ZeroMQ.

- PyZMQ GitHub Repo: Source code, issues, and documentation for Python’s ZeroMQ bindings.

- Python for Absolute Beginners: Excellent foundation if you’re brand new to Python.

- Async Techniques and Examples in Python: Learn how to leverage Python’s async features, threading, and multiprocessing—pairs well with messaging approaches like ZeroMQ.

Overall takeaway

ZeroMQ’s strength lies in its combination of simplicity and power. By designing around messaging rather than raw sockets, it greatly simplifies building distributed systems and underpins core projects like Jupyter. Whether you are looking to scale out microservices, add asynchronous interactivity to scientific simulations, or just want an alternative to traditional HTTP for backend communication, ZeroMQ (and PyZMQ in particular) is a valuable tool in Python’s ecosystem.

Links from the show

Simula Lab: simula.no

Talk Python Binder episode: talkpython.fm/256

The ZeroMQ Guide: zguide.zeromq.org

Binder: mybinder.org

IPython for parallel computing: ipyparallel.readthedocs.io

Messaging in Jupyter: jupyter-client.readthedocs.io

delvewheel: pypi.org

cibuildwheel: pypi.org

YouTube Live Stream: youtube.com

PyCon Ticket Contest: talkpython.fm/pycon2021

Episode #306 deep-dive: talkpython.fm/306

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript


00:00 When we talk about scaling software, threading and async get all the buzz.

00:04 And while they are powerful, using asynchronous queues can often be much more effective.

00:08 You might think this means creating a Celery server and maybe running RabbitMQ or Redis as well.

00:14 What if you wanted this async ability and many more message exchange patterns like PubSub,

00:19 but you wanted to do zero of that server work, none of it?

00:22 Then you should check out ZeroMQ.

00:25 ZeroMQ is to queuing what Flask is to web apps, a powerful and simple framework for you to build just what you need.

00:31 You're almost certain to learn some new networking patterns and capabilities in this episode with our guest,

00:37 Min Ragan-Kelley.

00:38 He's here to discuss ZeroMQ for Python, as well as how ZeroMQ is central to the internals of Jupyter Notebooks.

00:45 This is Talk Python To Me, episode 306, recorded February 11th, 2021.

00:50 Welcome to Talk Python To Me, a weekly podcast on Python, the language, the libraries, the ecosystem,

01:08 and the personalities.

01:09 This is your host, Michael Kennedy.

01:11 Follow me on Twitter where I'm @mkennedy, and keep up with the show and listen to past episodes at talkpython.fm,

01:17 and follow the show on Twitter via @talkpython.

01:21 This episode is brought to you by Linode and Mito.

01:24 If you want to host some Python in the cloud, check out Linode and use our code to get $100 credit.

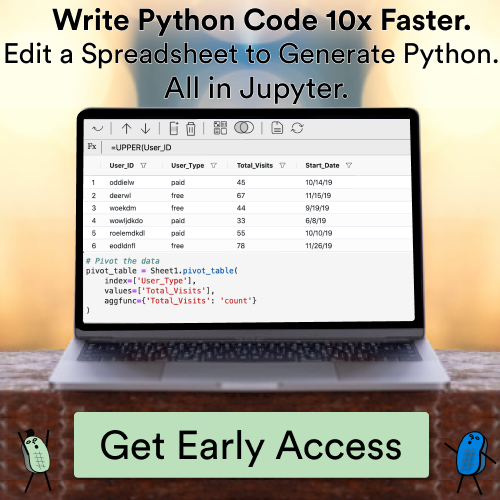

01:29 If you've ever wanted to work with Python's data science tools like Jupyter Notebooks and Pandas,

01:34 but you wanted to do it like you worked with Excel, with a spreadsheet and a visual designer,

01:39 check out Mito.

01:39 One quick announcement before we get to the interview.

01:43 We'll be giving away five tickets to attend PyCon US 2021.

01:47 This conference is one of the primary sources of funding for the PSF, and it's going to be held May 14th to 15th online.

01:54 And because it's online this year, it's open to anyone around the world.

01:58 So we decided to run a contest to help people, especially those who have never been part of PyCon before,

02:03 attend it this year.

02:04 Just visit talkpython.fm/pycon2021 and enter your email address, and you'll be in the running for an individual PyCon ticket,

02:13 compliments of Talk Python.

02:14 These normally sell for about $100 each.

02:17 And if you're certain you want to go, I encourage you to visit the PyCon website,

02:20 get a ticket, and that money will go to support the PSF and the Python community.

02:24 If you want to be in this drawing, just visit talkpython.fm/pycon2021.

02:29 Enter your email address.

02:31 You'll be in the running to win a ticket.

02:33 Now let's get on to that interview.

02:35 Min, welcome to Talk Python To Me.

02:38 Thanks. Thanks for having me.

02:39 Yeah, it's really good to have you here.

02:41 I'm excited to talk about building applications with ZeroMQ.

02:45 It's definitely one of those topics that I think lives in this realm of asynchronous programming

02:51 in ways that I think a lot of people don't initially think of.

02:54 Like you think of async programming, like, okay, well, I'm going to do threads.

02:57 And if threads don't work, maybe I'll do multi-processing.

02:59 And then those are my options, right?

03:01 But queues and other types of intermediaries are really interesting for creating powerful design patterns

03:07 that let you scale your apps and do all sorts of interesting things, right?

03:10 Yeah.

03:10 Yeah.

03:11 ZeroMQ has definitely given me a new way of thinking about how different components

03:15 of a distributed application can talk to each other.

03:17 Absolutely.

03:18 So we're going to dive all into that, which is going to be super fun.

03:21 But before we do, maybe just tell us a bit about yourself.

03:24 How did you get started programming in Python?

03:26 I got started mostly during college where I was studying physics and what essentially amounted to a computational physics degree.

03:33 And that's where I met, I had a professor, Brian Granger, who's one of the heads of the now Jupyter project.

03:40 I started working with him as an undergrad back in 2006, working on what would ultimately become the first IPython parallel,

03:47 the interactive parallel computing toolkit in IPython.

03:50 Oh, that's really cool.

03:50 What university was that at?

03:52 That was at Santa Clara University.

03:53 Uh-huh.

03:53 Okay.

03:54 Nice.

03:54 Yeah, it's really cool.

03:55 I mean, you were working on physics, but you were also like at the heart of Jupyter.

03:59 And I guess IPython at the time, right?

04:01 IPython notebooks is what they were called, yeah?

04:03 Yeah, coming up on 15 years that I've been working on IPython and Jupyter.

04:07 Nice.

04:08 And so you basically, did you do programming before you got into your physics

04:11 or did you just learn that to get going with your degree?

04:14 So I did it for fun in college, but I played around with calculators and stuff in high school,

04:20 but nothing more complicated than printing out a Fibonacci sequence.

04:23 Nice.

04:24 So were you a TI person?

04:26 Were you an HP person?

04:28 What kind of world were you?

04:29 Yeah, TI all the way.

04:31 Yeah, me too, obviously.

04:32 That reverse Polish notation, that was just wrong.

04:35 Yeah.

04:35 Although I did, in college, we did implement reverse Polish calculators because it's easier.

04:39 Yeah.

04:39 Yeah, cool.

04:40 It's easy to write a parser for that.

04:42 Yeah, it's easier on the creator and not on the user, which I feel like computers used to be way more that way, right?

04:48 Like, oh, it's easier for us to make it this way.

04:49 Well, if you put a little more work, it'll be easier for the millions of people that use it.

04:53 Well, maybe not millions in the early, early days, but yeah.

04:55 Very interesting.

04:57 So what kind of stuff were you doing with IPython in the early days?

05:00 What kind of stuff were you studying with your physics?

05:02 In grad school, I was studying computational plasma physics simulation.

05:06 So it was particle simulations of plasmas, which are, you know, what's going on in stars.

05:10 I wasn't studying stars, but that's one of the main plasmas that people know about.

05:15 And so I was doing particle simulations of just studying a system, seeing what happens.

05:19 And we were working on an interactive scale simulation code that was written in C++.

05:24 It was a fairly nice and welcoming C++ physics simulation as far as academic C++ physics simulations go.

05:32 Yeah.

05:32 But essentially, my first year of grad school, I wrote a paper where I had to do a simulation run for five days,

05:39 look at the results, and then change some input parameters and run it again for five days,

05:43 and do that for a few dozen iterations, and then wrote a paper about that.

05:47 And then ultimately, my PhD thesis was about wrapping the same code in Python

05:51 so that I could have programmatic, like, self-steering, actually by this time driven in a notebook.

05:56 Doing what had been manual, like change it, run it again, doing that automatically,

06:02 just by wrapping the C++ code in a Python API, I could do it much more efficiently and actually take something that took multiple iterations of five days,

06:11 I could actually get the same result in a little over half a day, I think.

06:15 Wow.

06:15 That's really cool.

06:16 So you would run basically a little small simulation with C, and then you would get the inputs into Python and go,

06:23 okay, well, now how do we adjust it? How do we change that and go from there?

06:26 It was the same simulation, but I had to tune the inputs a little bit differently in order to,

06:32 there were some details of the physics that basically I had to turn some knobs way up because I wasn't constantly watching it.

06:38 Yeah, I see.

06:39 And then it would say like, oh, that number was too big, turn it down, do it again, and then look and see if it was too big again.

06:44 Whereas once I had a knob that could be turned while the simulation was running, which is the feature that was missing,

06:51 I could say ramp up the current in this case.

06:55 So I was studying the limit of current that you can get through a diode.

06:59 Right.

06:59 And so ramp up the current, and then once I see evidence that it's too high, ramp it back down,

07:05 and then I could change, you know, lower the slope so that I would slowly approach an equilibrium and find the limit.

07:11 Whereas when I had to do it with this long, I had to basically wait until it's done to change the input,

07:17 I had to dramatically over-inject the current, which made the simulations extra slow because there were way too many particles.

07:22 Yeah, yeah.

07:23 And the spatial resolution had to be really fine in order to resolve something called a virtual cathode,

07:28 and I didn't have to do nearly as much.

07:30 Basically, because I could do this live feedback, the simulations themselves could be quicker

07:36 because I didn't have to provide enough information that I could get the right answer out a long time later.

07:42 That's super cool, and obviously it makes a huge difference.

07:44 You know, I feel like we have a similar background in how we got into programming.

07:48 Like, I was doing a math degree, and my math research led me into doing C++ on Silicon Graphics mainframe computers and stuff like that.

07:56 You talk about letting these things run overnight.

07:58 Like, everyone would just kick off these things, and then they would come back in the morning to see what happened.

08:04 And, you know, interesting sort of random sidebars.

08:07 Like, we came in one morning, and we all tried to log into our little workstations or whatever,

08:13 and, like, the system just wouldn't respond.

08:15 There was just something wrong with it.

08:16 And we were like, well, what the heck is going on?

08:18 You know, like, this is like a quarter-million-dollar computer.

08:20 It should let us log in.

08:22 What is wrong with it, right?

08:23 And it was in there running really loud, right?

08:25 It's like this huge, loud machine.

08:26 And it turned out that one of the grad students, not me, had started a job that was—they were trying to figure out what was going on,

08:33 and they were logging a lot because they were having problems with their code.

08:37 And it was in this tight loop that ran all night.

08:40 And because it was, like, a somewhat small group, they had no disk limits or permission restrictions for the large part.

08:47 So it literally used up every byte on the server, and then it just wouldn't do anything at all.

08:52 And, I mean, that's the—the reason I bring this up is, like, that's the challenge of these things where you, like,

08:57 start it and let it run for days and then figure out what's happening.

09:00 You're like, oh, it just used up the entire hard drive of this giant machine.

09:03 Whoops.

09:03 It broke it for everyone, right?

09:05 Yeah.

09:05 And so this live aspect or more self-guided is a really great idea.

09:10 It's really—it's a big step ahead.

09:12 Maybe more so than people initially, like, hear it as, right?

09:15 It's a big deal.

09:16 Yeah, and I think it was—that's part of what got me really excited about the programmatic—

09:21 giving—when you have access to something in Python and you have an environment like IPython or Jupyter or whatever,

09:26 being able to interact with something, what is usually— what you might think of as an offline physics simulation that runs for hours or days,

09:35 if you have a Python API to it, you can steer it while it's going.

09:40 Like, you can turn the knobs on your experiment while it's running.

09:44 You know, sometimes there are reasons why that's not actually a good idea, but it's really powerful to not—

09:49 one of the costs, you know, that I had in my experiment was that in order to change the inputs, I had to start over.

09:54 Like, physically, I didn't have to.

09:56 Like, if it were a physical machine where I were measuring inputs and outputs, I could just, you know, turn the current down and see the results.

10:02 But the code didn't support that.

10:05 The frustrating thing about it was the way the physics was written did support that.

10:08 The C++ code did support that.

10:10 We just hadn't written an interface that allowed me as the user to do it.

10:14 And so the Python wrapping work was surprisingly little.

10:17 It was, you know, expose Python APIs to—or C++ APIs to Python with Cython.

10:22 Yeah, that's cool.

10:23 And then I could turn all the knobs that were already there in the code.

10:25 I just was now allowed to turn them.

10:27 It sounds to me, thinking back on the timeline there, that must have been pretty early days in the data science, computational science world of Python, right?

10:36 Like, NumPy was probably just about out around then.

10:39 By the time I was doing that work, that would have been around 2012.

10:42 Oh, okay.

10:43 So, yeah, it was more established at that point.

10:45 I was thinking back to, like, early, like, 2005 or something.

10:47 That, yeah, not quite, right?

10:49 Yeah, not quite.

10:49 That was when I was still, as a little undergrad, was still able to help make building NumPy—I was able to submit patches to make building NumPy on Mac a little easier.

11:00 That's cool.

11:00 Before it became, like, completely polished and, like, so widely used.

11:04 I suspect it's really nerve-wracking to contribute to those kinds of things now.

11:08 It was a nice community, and it was exciting to be able to say, like, I have a problem.

11:12 There's probably other people who have a similar problem.

11:14 Let me see if I can fix it and then submit a patch.

11:16 Yeah, that's fantastic.

11:17 Cool.

11:17 So you're carrying on with your scientific computation work these days, right?

11:22 What do you go to now?

11:23 Now my job is actually working on Jupyter and Python-related stuff.

11:27 So I am a senior research engineer at Simula Research Lab in Oslo, Norway, where I've been since 2015.

11:34 And I'm the head of the Department of Scientific Computing and Numerical Analysis.

11:38 So I'm in a department where people are doing physical simulations of the brain and studying PDEs and things like that.

11:45 But what I do is I work on JupyterHub.

11:48 Yeah.

11:48 So we made JupyterHub as a tool for deploying while I was still at Berkeley.

11:53 We made it as a tool for deploying—for people who want to deploy Jupyter Notebooks.

11:57 We say it's for if you're a person who has computers and you have some humans and you want to help those humans use those computers with Jupyter.

12:04 That's what JupyterHub is for.

12:05 Initially, it was scoped to be an extremely small project because we didn't have any maintenance burden to spare.

12:11 The target of that was research groups like mine in grad school of a few people, maybe small classes,

12:18 who have one server in their office and they just want to make it easier for people to log in and use Jupyter on there.

12:23 Right.

12:23 This is when people— Right, right.

12:25 People had all their SSH tunnels: SSH to my server, open a reverse tunnel so I can point at localhost and it's actually over there.

12:32 So we were trying to make life easier for those folks.

12:35 They were running the servers locally and they're like, how do I get access to my computation over there but on my machine?

12:41 That kind of stuff, right?

12:42 Yeah, it's like if I'm a person who's already comfortable with Jupyter but now I have access to a computer that's over there, how do I run my notebook stuff over there?

12:50 And there were a few solutions to that involving SSH tunnels or whatever.

12:54 And JupyterHub was aimed at those folks.

12:56 But it turned out that's not the user community that's really been the most excited about it.

13:02 With things like Zero to JupyterHub, the JupyterHub on Kubernetes project started by Yuvi Panda and now led by a whole bunch of wonderful folks, we've shifted into a larger scale than initially designed for.

13:13 It definitely seems like JupyterLab and the predecessors to it have really probably exploded beyond the scale that people initially expected.

13:22 I mean, it's just the de facto way of doing things if you're doing computation these days, it seems.

13:26 Certain folks definitely seem to like it.

13:29 We try to respond to feedback and just build things that people are going to use.

13:33 Nice.

13:33 So if you're running JupyterLab for such a big group, I mean, there's got to be crazy amounts of computation and, you know, super computer type stuff there.

13:42 What does your whole setup look like there?

13:43 What are you running for your folks at the research lab?

13:46 I run JupyterHub instances for like a summer school and they'll run a workshop and I'll spin up a cluster for running JupyterHub for doing some physics simulations.

13:55 Usually it's for teaching.

13:56 Okay.

13:57 So I don't operate, I don't operate hubs for research.

13:59 Usually I usually operate them for teaching.

14:01 I see.

14:02 Yeah.

14:02 So maybe the computation there, it's like, yeah, not nearly as high as we're trying to model the universe and let that go.

14:08 Yeah.

14:09 Often those folks, the computations are handed off somewhere.

14:12 Right.

14:13 But yeah, like the folks at NERSC in Berkeley are doing some really exciting stuff with running JupyterHub on a supercomputer.

14:18 Yeah.

14:19 Cool.

14:19 Are you doing like Kubernetes or Docker or are you just setting up VMs or how do you handle that side?

14:25 Thanks to the work of all the folks contributing to Zero to JupyterHub, deploying JupyterHub with Kubernetes, I think, is the easiest to deploy and maintain as long as you have access to a managed Kubernetes.

14:37 I still wouldn't recommend deploying Kubernetes itself to anybody.

14:40 It seems fairly complicated to run and maintain that side of things.

14:43 Yeah.

14:44 But if you have a turnkey solution, the one I use the most is the, is GKE, the Google managed Kubernetes.

14:50 Yeah.

14:50 And Zero to JupyterHub will let you just step through and say, give me a cluster and run JupyterHub on it.

14:56 And then all I really need to do is for the workshop is build the user images.

14:59 Nice.

15:00 Very cool.

15:00 You also do some stuff with Binder.

15:02 Is that right?

15:02 I help operate Binder.

15:04 So mybinder.org is a service built on top of JupyterHub that takes JupyterHub as a service for running notebooks on Kubernetes.

15:12 In Binder's case, it's running on Kubernetes.

15:14 And Binder ties in another Jupyter project called repo2docker that says, look at a repo, build a Docker image with the contents of that.

15:21 Hopefully that can run everything.

15:23 So like if it finds a requirements.txt.

15:25 Yeah, exactly.

15:26 It's got something that specifies its dependencies or something like that.

15:28 Yeah.

15:28 And there's a bunch of things that we support.

15:30 And the idea is to automate existing best practices, right?

15:33 So find anything that people are already using to specify environments and then install those and then build an image.

15:39 And then what Binder does is, so I built an image, then send that over to JupyterHub to say launch a notebook server with that image.

15:45 Right.

15:46 Yeah.

15:46 You can see like right here just on the main site, just put in a GitHub repo and maybe a branch or a tag or something and then click go.

15:53 And then it spins up literally a Jupyter notebook that you can play with.

15:57 This portion of Talk Python To Me is sponsored by Linode.

16:01 Simplify your infrastructure and cut your cloud bills in half with Linode's Linux virtual machines.

16:05 Develop, deploy, and scale your modern applications faster and easier.

16:09 Whether you're developing a personal project or managing large workloads, you deserve simple, affordable, and accessible cloud computing solutions.

16:17 As listeners of Talk Python To Me, you'll get a $100 free credit.

16:21 You can find all the details at talkpython.fm/Linode.

16:25 Linode has data centers around the world with the same simple and consistent pricing regardless of location.

16:31 Just choose the data center that's nearest to your users.

16:34 You'll also receive 24-7, 365 human support with no tiers or handoffs regardless of your plan size.

16:40 You can choose shared and dedicated compute instances or you can use your $100 in credit on S3 compatible object storage, managed Kubernetes clusters, and more.

16:51 If it runs on Linux, it runs on Linode.

16:53 Visit talkpython.fm/Linode or click the link in your show notes.

16:58 Then click that create free account button to get started.

17:03 When people go to GitHub, they can oftentimes see the Jupyter Notebook.

17:07 When I first saw that, I'm like, how in the world is GitHub computing this stuff for me to see?

17:13 I'm like, maybe that is super computationally expensive.

17:17 Here's the answer.

17:18 How do they know the data and the dependencies?

17:21 The reality is they've just taken what's stored in the Notebook, right?

17:25 That is the last run and it's there.

17:28 But if you want to play with it, you can't do that on GitHub.

17:29 But you can on mybinder.org, right?

17:32 That turns that into an interactive Notebook.

17:35 It adds interactivity to the sharing that you already have with nbviewer or GitHub.

17:39 Yeah, very cool project.

17:40 I didn't know that much about it, but I had Tim Head on the show a while ago on episode 256 to talk about that.

17:47 And I learned a lot.

17:47 So, yeah, quite neat.

17:49 Now, let's jump into ZeroMQ.

17:51 So ZeroMQ is not a Python thing, but it is very good for Python people, right?

17:58 It has support for many different languages.

18:00 And your work primarily has been to work on making this nice and easy from Python, right?

18:06 Yeah, so ZeroMQ is a library written in C++ with a C API, which makes using it a little easier.

18:12 And it's a very small API, which is part of why it's usable from so many languages:

18:17 writing bindings for it is relatively easy.

18:20 The idea of ZeroMQ is that it's a messaging library.

18:25 The naming is a little funky because of the world it comes from, right?

18:30 The people who created it come from the world of message brokers and message queues.

18:35 And ZeroMQ is a bit tongue-in-cheek in that it's not actually a message queue at all.

18:40 It's a messaging library that adds a thin layer of abstraction over the networking for when you have some distributed application where things need to talk to each other.

18:50 And ZeroMQ is a tool for building that.

18:52 And it's this library.

18:54 And then you can write the bindings for that library so that you can use it from any of a variety of languages.

19:00 And so Brian Granger and I worked on the Python bindings to use ZeroMQ from Python, which is called PyZMQ.

19:07 Yeah, when I first thought of it, I imagined it as a server.

19:10 Something like Redis or Celery or something like that that you start up.

19:15 And then you create queues or something on it.

19:17 And then like different things can talk to it.

19:19 But I think maybe a better conceptualization of it might be like Flask.

19:23 Flask is not a server, but Flask is a framework that you can put into your Python app and then run it.

19:30 And it is the server itself, right?

19:32 Yeah.

19:32 And so I'd say the best way to describe ZeroMQ is that it's a fancy socket library.

19:37 Yeah.

19:37 So you create sockets and you send messages.

19:39 And the sockets talk to other sockets.

19:41 You send messages, you receive messages.

19:44 And then ZeroMQ is all about what abstractions and guarantees it gives around those sockets and messages.
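To make "create sockets, send messages, receive messages" concrete, here is a minimal sketch using PyZMQ, assuming it is installed (for example with pip install pyzmq). It creates a context, binds one PAIR socket and connects another over the in-process inproc:// transport, and exchanges one message each way. The endpoint name inproc://demo and the helper pair_roundtrip are illustrative choices, not anything from the episode.

```python
import zmq


def pair_roundtrip(text: str) -> str:
    """Send one message each way between two PAIR sockets in one process."""
    ctx = zmq.Context()  # owns ZeroMQ's background I/O thread(s)
    try:
        server = ctx.socket(zmq.PAIR)
        server.bind("inproc://demo")  # inproc: in-process transport, no network stack
        client = ctx.socket(zmq.PAIR)
        client.connect("inproc://demo")

        client.send_string(text)  # each send is one discrete message, not a byte stream
        request = server.recv_string()
        server.send_string(request.upper())
        return client.recv_string()
    finally:
        ctx.destroy(linger=0)  # close all sockets, then terminate the context


if __name__ == "__main__":
    print(pair_roundtrip("hello"))  # HELLO
```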

19:51 There seems to be a lot of culture and zen about ZeroMQ.

19:55 Like there's a lot of interesting nomenclature in the way that they talk about stuff over there.

20:00 So they talk about the zero in ZeroMQ.

20:03 And the philosophy starts with zero.

20:05 Zero is for zero broker.

20:06 It's brokerless.

20:07 Zero latency, zero cost.

20:09 It's free.

20:10 Zero admin.

20:11 You don't have a server type of thing.

20:13 But it also refers to a culture of minimalism that permeates the project.

20:16 Adding power by removing complexity rather than exposing new functionality.

20:20 You want to speak to that just a little bit?

20:22 Like your experience with that?

20:23 Well, so I can speak to that.

20:25 As someone who doesn't work on libzmq that much, and more as somebody who writes bindings for it.

20:31 I can say that it's nice that libzmq doesn't change that much.

20:35 Yeah.

20:35 And part of the point is, so, ZMQ has all these features.

20:39 They have, we'll talk a little bit about it in a second.

20:42 But so it's structured so that there are sockets and there are different kinds of sockets that have different behaviors for building these different kinds of distributed applications.

20:51 But in terms of the API, there's just one.

20:53 Like a socket has an API.

20:55 All sockets have the same API.

20:57 So from the standpoint of writing bindings to a library, I just need to say, I know how to wrap the socket APIs.

21:05 And then as they add new types, those are just constants that I need to handle.

21:09 So I don't need to, oh, there's a new kind of socket.

21:11 I need to implement a new Python class for that new kind of socket.

21:14 I just need to wrap socket.

21:16 So as new features are developed in libzmq, from my perspective as a binding developer or binding maintainer, that's really nice.

21:28 But that also extends to the application layer.

21:31 That once you have a socket and you understand sockets, changing the type of socket changes your message pattern.

21:36 It doesn't change anything about the APIs you need to use and things like that.

21:40 Yeah, so maybe we could talk a little bit about the application model, without necessarily getting into programming yet, right?

21:48 So we've got contexts, we've got sockets, and we've got messages.

21:52 And those are the basic building blocks of what working with this is like.

21:55 So if I wanted to create something that could, you know, maybe other applications could talk to it and exchange data, one of my options might be to create a RESTful API that exchanges JSON, right?

22:06 Yeah, sure.

22:07 There's a lot of challenges with that.

22:09 One, it's sort of send request only, right?

22:12 I send over my JSON and then it gives me a response, but I can't subscribe to future changes, right?

22:19 I got to do something like WebSockets in that world if I want something like that, which I guess might be closer to this.

22:24 And then also it's doing the text conversion.

22:27 It's probably a little bit slower.

22:28 Got to do maybe extra work for async, right?

22:31 So there's a lot of things that are maybe similar, but extra patterns, right?

22:36 So instead of just request response, you might have pub sub, you might have like multicast, like something comes in and everyone gets notified about it.

22:45 Can you talk about some of those differences?

22:47 Like maybe compare it to what other more common APIs people might know about?

22:51 Yeah.

22:52 So with ZeroMQ, first you have a context, which is kind of an implementation detail that you shouldn't need to care about, but you still need to create.

23:00 Okay.

23:00 Sockets are the main thing that you deal with, and what distinguishes ZeroMQ is that every socket has a type, and that type determines the messaging pattern.

23:10 So that's where we get these kinds of protocols and messaging patterns.

23:15 So with a web server, that's usually a request reply pattern.

23:19 So you have clients connect, send a request, and then they get a reply.

23:23 A pub sub system might be, you know, some totally different thing.

23:26 In web server land, maybe it's server-sent events, you know, an event stream connection.

23:32 And with ZeroMQ, the difference between those is the socket type.

23:36 So if you're creating a publish subscribe relationship, you create a publish socket on one side and you create a subscribe socket on the other side.

23:44 If you're doing a request reply, you use a socket called a dealer and a router.

23:49 There's a request and a reply socket in ZeroMQ, but nobody should ever use them.

23:53 They're just a special case of router dealer.

23:55 And then there's another pattern called a ventilator and sink.

23:58 So that's like what you'd use in a work queue, for instance, where you've got a source of work and then it sends a message to one destination, but you don't necessarily care which one.

24:09 I see.

24:10 So maybe you're trying to do a scaled out computing.

24:13 Yeah, exactly.

24:13 You've got 10 machines that could all do the work and you want to somehow evenly distribute that work, right?

24:20 So you're like, all right, well, we're just going to throw it at ZeroMQ.

24:23 All the things that are available to do work can subscribe.

24:26 They need to.

24:27 They could even like drop out after doing some work, but then not receive anymore, potentially something like that.

24:33 So one of the main things that ZeroMQ does is take control over things like multiple peers and connection events and stuff like that.

24:43 Because take, for example, the publish subscribe.

24:46 There are two key things.

24:48 The things to think about with ZeroMQ are what happens when you've got more than one peer connected.

24:54 Right.

24:55 And what happens when you've got nobody to send to.

24:57 So in the publish subscribe model, when you send a message on a socket, it will immediately send that message to everybody who's connected and ready.

25:06 So if somebody is not able to keep up, right, there's a queue that's building up and it's gotten full, it'll just drop messages to that peer until they catch up.

25:15 If there are no peers, then it's really fast because it just doesn't send anything and just deletes the memory.
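Here is a rough PyZMQ sketch of the publish subscribe behavior described above, assuming pyzmq is installed: the PUB socket binds, and the SUB socket connects and subscribes to a topic prefix. Because subscriptions reach the publisher asynchronously, messages published before that happens are silently dropped (the "nobody to send to" case), so the loop keeps publishing until the subscriber sees something. The topic names and the pubsub_demo helper are hypothetical.

```python
import zmq


def pubsub_demo(topic: bytes, payload: bytes, timeout_ms: int = 5000) -> list:
    """Publish on a PUB socket until a topic-filtered SUB socket receives a message."""
    ctx = zmq.Context()
    try:
        pub = ctx.socket(zmq.PUB)
        pub.bind("inproc://events")
        sub = ctx.socket(zmq.SUB)
        sub.connect("inproc://events")
        sub.setsockopt(zmq.SUBSCRIBE, topic)  # prefix match on the first frame

        waited = 0
        while waited < timeout_ms:
            # Until the subscription reaches the PUB side, these are simply dropped.
            pub.send_multipart([b"other-topic", b"filtered out by the SUB socket"])
            pub.send_multipart([topic, payload])
            if sub.poll(50):  # wait up to 50 ms for a matching message
                return sub.recv_multipart()
            waited += 50
        raise TimeoutError("subscriber never received a message")
    finally:
        ctx.destroy(linger=0)


if __name__ == "__main__":
    print(pubsub_demo(b"weather", b"sunny"))
```

In a real application you would usually tolerate the early drops rather than loop like this; the loop is only there so the sketch finishes deterministically.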

25:20 Yeah.

25:20 Right.

25:21 So when you're thinking of ZeroMQ from Python, the other thing about sending messages is that it's asynchronous.

25:29 Send doesn't return when the message is on the TCP buffer or whatever.

25:36 Send returns when you have handed control of the message to the ZeroMQ IO thread.

25:42 And this is why ZeroMQ has this concept of context.

25:45 Contexts are what own the IO threads that actually do all the real work of talking over the network.

25:51 So when you're sending in PyZMQ, you're really just passing ownership of the memory to ZeroMQ and it returns immediately.

25:58 And so you don't actually know when that message is finally sent, and you shouldn't care.

26:03 Sometimes you do care and it can get complicated.

26:05 Yeah.

26:05 But ZeroMQ tries to make you not care.
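One way to see that send only hands the message to ZeroMQ's background I/O thread: in this sketch (assuming pyzmq with default socket options; the helper names are made up for illustration), a PUSH socket connects to a TCP address where nothing is listening yet and sends with zmq.NOBLOCK, which would raise zmq.Again if the call had to wait. The message sits in ZeroMQ's queue until a PULL socket binds later, at which point the I/O thread reconnects and delivers it. Picking a free port this way has a small race, which is acceptable for a demo.

```python
import socket

import zmq


def free_tcp_port() -> int:
    """Ask the OS for a currently unused local port (a small race is fine for a demo)."""
    with socket.socket() as s:
        s.bind(("127.0.0.1", 0))
        return s.getsockname()[1]


def send_before_peer_exists(payload: bytes, timeout_ms: int = 10000) -> bytes:
    addr = f"tcp://127.0.0.1:{free_tcp_port()}"
    ctx = zmq.Context()
    try:
        push = ctx.socket(zmq.PUSH)
        push.connect(addr)  # nobody is listening yet; ZeroMQ keeps retrying in the background

        # NOBLOCK would raise zmq.Again if send had to wait; with default options it
        # doesn't, because send only queues the message for ZeroMQ's I/O thread.
        push.send(payload, flags=zmq.NOBLOCK)

        pull = ctx.socket(zmq.PULL)
        pull.bind(addr)  # the I/O thread reconnects and flushes the queued message
        if not pull.poll(timeout_ms):
            raise TimeoutError("queued message was never delivered")
        return pull.recv()
    finally:
        ctx.destroy(linger=0)


if __name__ == "__main__":
    print(send_before_peer_exists(b"queued before anyone listened"))
```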

26:07 Interesting.

26:08 So basically you set up the relationships between the clients and the server through like these different models.

26:14 And then you drop off the messages to ZeroMQ and it deals with them from there.

26:19 Right.

26:19 It figures out who gets what and when.

26:22 So your application, as soon as it gets the message passed off to the ZeroMQ layer, can go about doing other stuff, right?

26:29 Exactly.

26:30 So because ZeroMQ is a C++ library, it's not going to grab the GIL or anything.

26:35 So even if you're using Python, it's a true multi-threaded application, even if you're only using one Python thread.

26:40 Yeah.

26:41 You handed that memory off to C++, which is running in the background.

26:43 You can do some GIL-holding intense operation and ZeroMQ will be happily dealing with all the network stuff.

26:49 Right.

26:50 Right.

26:50 It's got its own C++ thread, which has nothing to do with the GIL, and it goes and does its own thing, right?

26:55 Yeah, there is something that comes up in PyZMQ where the IO thread can come back and try to grab the GIL, because Python does actually need to know when ZeroMQ has let go of that memory in order to avoid segfaults. Or it used to; it doesn't anymore.

27:09 Yeah.

27:09 But that's an implementation detail.

27:10 Cool.
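The point about Python needing to know when ZeroMQ has let go of memory surfaces in PyZMQ as zero-copy sends with a MessageTracker. The sketch below (assuming pyzmq; zero_copy_send is a hypothetical helper) sends a 1 MB buffer with copy=False, track=True and then waits on the tracker, which only reports done once ZeroMQ has released the buffer, so only then is it safe to modify. PyZMQ copies messages below its copy threshold anyway, which is why the example uses a large buffer.

```python
import zmq


def zero_copy_send(size: int = 1_000_000) -> tuple:
    """Send a large buffer without copying it and wait until ZeroMQ releases it."""
    data = bytearray(size)  # any object supporting the buffer protocol works
    ctx = zmq.Context()
    try:
        push = ctx.socket(zmq.PUSH)
        push.bind("inproc://bulk")
        pull = ctx.socket(zmq.PULL)
        pull.connect("inproc://bulk")

        # copy=False hands ZeroMQ a reference to `data` instead of a copy;
        # track=True returns a MessageTracker for that reference.
        tracker = push.send(data, copy=False, track=True)
        received = pull.recv()

        # Block (up to 5 s) until ZeroMQ has let go of the buffer; only then is it
        # safe to modify `data`. Raises zmq.NotDone on timeout.
        tracker.wait(timeout=5)
        return len(received), tracker.done
    finally:
        ctx.destroy(linger=0)


if __name__ == "__main__":
    print(zero_copy_send())
```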

27:11 So is there anything about reliable messaging here where you could say, I want to make sure that this message gets delivered to every client?

27:20 Like if you said, for example, in this PubSub, if some of them fall behind, it can just drop the messages.

27:24 Is there a way to say, you know, pile that up and then send it along when it catches up or whatever?

27:30 The ZeroMQ perspective is that that's an application-level problem.

27:33 So ZeroMQ helps you build the messaging layer so that it doesn't crash.

27:39 And that's part of why it drops messages.

27:41 And then it's basically up to you. Say you're sending messages with a generation counter, for instance, and you notice you got message five and then message eight.

27:51 It's up to your application to say, okay, keep a recent history buffer so that folks can come back with a different pattern, a request reply pattern, to say, give me a batch of recent messages that I missed so that I can resume.

28:06 But ZeroMQ doesn't help you with that.

28:08 It handles all the networking stuff, you know, connections.

28:11 You don't have to worry about that.

28:12 You just have to worry about like, how do we set up a way to ask again or have the application ask if it wants more.
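Since ZeroMQ leaves reliability to the application, the generation counter and history buffer described above would be ordinary application code. Here is a library-free sketch of that bookkeeping. SequencedPublisher and GapDetector are hypothetical names; in a real system publish would send on a PUB socket, while replay would answer requests arriving on a separate request reply socket.

```python
from collections import deque


class SequencedPublisher:
    """Hypothetical publisher helper: numbers messages and keeps a short history."""

    def __init__(self, history: int = 100):
        self.seq = 0
        self.recent = deque(maxlen=history)  # oldest entries are evicted automatically

    def publish(self, payload):
        self.seq += 1
        self.recent.append((self.seq, payload))
        return self.seq, payload  # in practice: sent as frames on a PUB socket

    def replay(self, wanted):
        """Answer a 'what did I miss?' request from whatever history is still kept."""
        wanted = set(wanted)
        return [(seq, payload) for seq, payload in self.recent if seq in wanted]


class GapDetector:
    """Hypothetical subscriber-side helper that spots skipped sequence numbers."""

    def __init__(self):
        self.last_seq = None

    def check(self, seq: int) -> list:
        if self.last_seq is None:  # first message seen: just record a baseline
            self.last_seq = seq
            return []
        if seq <= self.last_seq:  # duplicate or stale message
            return []
        missing = list(range(self.last_seq + 1, seq))
        self.last_seq = seq
        return missing


if __name__ == "__main__":
    pub = SequencedPublisher()
    for i in range(1, 9):
        pub.publish(f"update {i}")
    detector = GapDetector()
    detector.check(5)            # joined late; 5 becomes the baseline
    gap = detector.check(8)      # 6 and 7 were dropped
    print(gap, pub.replay(gap))  # [6, 7] [(6, 'update 6'), (7, 'update 7')]
```

If a requested message has already fallen out of the history buffer, replay simply returns less than was asked for, and the application has to decide how to recover (for example by requesting a full state snapshot).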

28:17 Yeah.

28:17 Nice.

28:18 David out there on the live stream asks, what alternatives to ZeroMQ could have been used to build the Jupyter protocol?

28:24 So thanks for the question.

28:27 Before we get to that though, maybe let's just talk about like, oh wait, ZeroMQ was used to build the Jupyter protocol?

28:33 Yeah.

28:33 So the Jupyter protocol essentially started out in IPython Parallel.

28:37 So we had this interactive parallel computing networking framework that eventually evolved into, wait, we've got this network protocol for remote computation.

28:46 We can build basically a REPL protocol, an interactive shell protocol.

28:51 And that ultimately became the Jupyter protocol, which was built with this kind of ZeroMQ mindset of, I want to be able to have multiple front ends at the same time.

29:03 For instance, in 2010, I think, Fernando and Brian had a working prototype of real-time collaboration on a terminal.

29:11 Using this protocol, you've got two people at the terminal; you're typing, you can run code, and you can see each other's output.

29:19 So we built the Jupyter protocol with multiple connections in mind.

29:24 So that means there's this request reply socket.

29:26 So the front end sends a request, please run this code.

29:30 And the back end sends a reply saying, I ran that, here's the result.

29:34 And there's another channel called IOPub where we publish output.

29:38 So when you do a print statement or you display a Matplotlib figure, that's a message that goes on a PubSub channel, which means that every connected front end, right?

29:49 So you could have multiple JupyterLab instances.

29:51 They'll all receive the same message.

29:53 Right.

29:53 I mean, that sounds like the perfect example of PubSub.

29:56 Somebody's making a change.

29:57 Well, somebody has triggered the server to make a change, but it doesn't matter who started that change.

30:03 Everybody looking at it wants to see the output, right?

30:06 This portion of Talk Python To Me is brought to you by Mito.

30:09 Do you feel like you're stumbling around trying to work with pandas within your Jupyter notebooks?

30:13 What if you could work with your data frames visually like they were Excel spreadsheets, but have it write the Python code for you?

30:19 With Mito, you can.

30:21 Mito is a visual front end inside Jupyter notebooks that automatically generates the equivalent Python code within your notebook cells.

30:28 Mito lets you generate production-ready Python just by editing a spreadsheet, all right within Jupyter.

30:35 You're sure to learn some interesting Python pandas tricks just by using the visual aspects of that spreadsheet.

30:42 You can merge, pivot, filter, sort, clean, and create graphs all in the front end and get the equivalent Python code written right in your notebook.

30:49 So stop spending your time Googling all that syntax and try Mito today.

30:53 Just visit talkpython.fm/mito to get early access.

30:57 That's talkpython.fm/M-I-T-O or just click their link in the show notes.

31:04 So to answer the question of what alternatives to ZeroMQ could have been used for the Jupyter protocol, and this becomes particularly important in the design of IPython Parallel:

31:15 When we were designing it, we thought this protocol of talking directly to kernels was going to be the main thing.

31:20 It turns out that the main way things work, you know, in 2020 or 2021, whichever we're in now,

31:25 is that most kernels talk one-to-one with a notebook web server.

31:30 And it's the web server that's actually fanning out to multiple clients.

31:34 Right.

31:35 With that being the case, if we had required the notebook server to be the place where we do all this multiplexing and everything, we could have actually built the lower-level Jupyter protocol on something much simpler, just HTTP REST and an event stream.

31:49 Probably could have worked just fine.

31:50 Right.

31:51 Maybe just use WebSockets or something on the web server side.

31:54 Yeah.

31:54 If WebSockets had existed at the time.

31:56 Yeah, yeah, yeah.

31:57 Those were also years away.

31:59 Yeah.

32:00 So much of that stuff's easier now, and browsers support it and so on.

32:03 So yeah, super interesting.

32:04 Now, one thing that that makes me think of is, how close are we to some sort of Google Docs-style JupyterLab type of thing, right?

32:15 I mean, we're not there, right?

32:16 I know there's SageMath and there's Google Colab.

32:19 There's other systems where this does exist, right?

32:23 Where there's sort of, we can all type on the notebook, same cell, same time type of thing.

32:27 Is there anything like that for JupyterLab that I missed?

32:31 There have been, I think, three prototypes at this point that have been developed and ultimately not finished for various reasons.

32:39 There's another one that's picking up again and going strong using Yjs, working with QuantStack, I believe.

32:45 Okay.

32:45 Hopefully soon.

32:46 That's not an area of the project where I've done a lot of work.

32:48 I helped a little bit with the last one.

32:50 Well, I mean, to me, it sounds like that's like just all JavaScript front end craziness and not a whole lot of other stuff, right?

32:56 Yeah, the state probably lives on the server, so you need to have a server, whether it's running CRDT or whatever, to synchronize the state.

33:03 And the PubSub, yeah, as well for the changes.

33:05 Yeah.

33:06 Going down this rabbit hole for a minute, also, Nawa asked an interesting question about ZeroMQ.

33:12 What's the story with ZeroMQ and microservices, right?

33:16 And I think with microservices, people often set up a whole bunch of little Flask or FastAPI things that exchange JSON request-response.

33:25 But man, like the performance and the multiplexing, all those types of things sound like it actually could be a really awesome non-HTTP-based microservice.

33:35 Yeah, I think ZeroMQ is a really good fit for microservice-based distributed applications.

33:40 Because one of the things you do when you're designing with microservices is define the communication relationships and your scaling axes.

33:48 And a nice thing with ZeroMQ is that your application doesn't change when you've got a bunch of peers.

33:57 Your application doesn't even need to know when a new peer comes and goes, because ZeroMQ handles that.

34:01 So one of the things that's weird and magical, but also really useful, about ZeroMQ is that it abstracts binding, connecting, and transports.

34:11 So you can have the same application with the same connection pattern and maybe this one binds and maybe, you know, one side binds and one side connects.

34:19 So pub binds and sub connects.

34:21 But you can also have sub bind and pub connect.

34:24 And you can also have your pub bind once and connect three times.

34:27 And none of that changes how your application behaves.

34:30 It just changes where the connections go.
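To illustrate that bind and connect are independent of the messaging pattern, this PyZMQ sketch (assuming pyzmq; the endpoint and sensor names are invented) flips the usual direction: the SUB socket binds once and two PUB sockets connect to it. Only the connection setup differs from an ordinary pub sub setup; the send and receive calls are unchanged. As before, a publisher's early messages are dropped until the subscription reaches it, so the loop keeps sending.

```python
import zmq


def reversed_pubsub(timeout_ms: int = 5000) -> set:
    """SUB binds as the stable endpoint; several PUB sockets connect to it."""
    ctx = zmq.Context()
    try:
        sub = ctx.socket(zmq.SUB)
        sub.bind("inproc://collector")
        sub.setsockopt(zmq.SUBSCRIBE, b"")  # empty prefix: receive everything

        publishers = []
        for name in (b"sensor-a", b"sensor-b"):
            pub = ctx.socket(zmq.PUB)
            pub.connect("inproc://collector")  # same PUB socket type, opposite direction
            publishers.append((name, pub))

        seen = set()
        waited = 0
        while len(seen) < len(publishers) and waited < timeout_ms:
            for name, pub in publishers:
                pub.send(name)  # dropped until the subscription reaches this publisher
            while sub.poll(10):  # drain everything that has arrived so far
                seen.add(sub.recv())
            waited += 10
        return seen
    finally:
        ctx.destroy(linger=0)


if __name__ == "__main__":
    print(sorted(reversed_pubsub()))
```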

34:32 Right.

34:32 Because with microservices, when you're doing HTTP requests, you always got to figure out, okay, well, what's the URL I'm going to?

34:38 And sometimes that even gets real tricky with, all right, what is even the URL of the identity server?

34:43 What is the URL of the thing that manages the catalog before I even request it?

34:48 And then usually that's a single endpoint HTTP request type of thing if it's like an update notification.

34:53 So yeah, I can imagine that there's some real interesting things here.

34:56 In a distributed work kind of situation, you can have one or a few sources of work.

35:03 And then you have an elastic number of workers that just connect and start receiving messages.

35:08 And the way to do this is the push-pull pattern.

35:11 So with pub sub, every message you send goes to everybody connected who can receive a message.

35:18 Whereas push-pull is whenever you send a message, send it to exactly one peer.

35:22 And I don't care which one.

35:23 And so if more peers are connected, it will load balance across all those peers.

35:27 But if only one's connected, it will just keep sending to that one.

35:29 And at no point in the sender do you ever need to know how many.

35:35 You never get notified that peers are connecting.

35:38 You never need to know that there are any peers, that there's one peer, that there's a thousand peers.

35:42 It doesn't matter.

35:44 So then it's up to your distributed application to say, you know, this one's sending with this push-pull pattern, the ventilator sink pattern.

35:51 And then I just elastically grow my number of workers and shut them down.

35:55 And they just connect and close, disconnect and everything.

35:58 And it just works.
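A minimal ventilator sketch of the push-pull pattern, assuming pyzmq: one PUSH socket binds, a couple of PULL workers connect, and each task goes to exactly one of them, round-robin among the connected workers. The sender never learns how many workers exist. The worker count, task strings, and the distribute helper are illustrative.

```python
import zmq


def distribute(n_tasks: int = 10, n_workers: int = 2) -> list:
    """Ventilator: one PUSH socket fans tasks out to several PULL workers."""
    ctx = zmq.Context()
    try:
        ventilator = ctx.socket(zmq.PUSH)
        ventilator.bind("inproc://tasks")

        workers = []
        for _ in range(n_workers):
            worker = ctx.socket(zmq.PULL)
            worker.connect("inproc://tasks")  # the ventilator is never told about this
            workers.append(worker)

        for i in range(n_tasks):
            ventilator.send_string(f"task {i}")  # goes to exactly one connected worker

        counts = []
        for worker in workers:
            received = 0
            while worker.poll(100):  # drain this worker's share
                worker.recv_string()
                received += 1
            counts.append(received)
        return counts
    finally:
        ctx.destroy(linger=0)


if __name__ == "__main__":
    print(distribute())  # how many tasks each worker received
```

Every task is delivered once in total, and adding or removing PULL workers changes only the connect calls, not the ventilator.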

35:59 Interesting.

36:00 Okay, so a follow-up question from Nawa that makes me think.

36:04 So he asks, he, she, sorry, asks whether it is a good idea to replace REST communication with ZeroMQ.

36:11 And so that leads me to wonder.

36:13 You talked about, you send the message and it's sort of fire and forget style, like message sent success.

36:18 But so often what I want to do is I need to know what products are offered on sale right now from the sale microservice or whatever.

36:26 I need to get the answer back.

36:28 These three products.

36:29 Thank you.

36:30 You know what I mean?

36:31 How do I implement something like that where I send a message, but I want the answer?

36:35 That's the request reply pattern.

36:37 So you would use either the request reply sockets or the router dealer sockets for that kind of pattern.

36:44 And that's, you send a message.

36:45 So in that case, this does have multi-peer semantics, but usually the requester is connected to one endpoint.

36:52 It can be connected to several, in which case it'll load balance its requests.

36:57 And the router, so the receiver side, handles requests.

37:00 And each request comes in with a message prefix that identifies who that message came from.

37:07 And then it can send replies using that identity prefix.

37:10 And it will go to whoever sent that request.

37:13 And most of the Jupyter protocol is a request reply pattern.
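The routing-identity mechanics can be sketched with a DEALER client and a ROUTER service in PyZMQ (assuming pyzmq; request_reply and the payloads are hypothetical). The ROUTER receives a two-frame message, the sender's identity followed by the payload, and routes its reply by putting that same identity first. A DEALER adds no extra frames, unlike a REQ socket, which also prepends an empty delimiter frame.

```python
import zmq


def request_reply(question: bytes) -> bytes:
    ctx = zmq.Context()
    try:
        service = ctx.socket(zmq.ROUTER)
        service.bind("inproc://service")
        client = ctx.socket(zmq.DEALER)
        client.connect("inproc://service")

        client.send(question)

        # The ROUTER prepends the sender's routing identity as an extra first frame.
        identity, request = service.recv_multipart()
        # Putting that identity first routes the reply back to the same peer;
        # the ROUTER strips it again before delivery.
        service.send_multipart([identity, b"answer to " + request])

        return client.recv()  # the DEALER sees only the payload frame
    finally:
        ctx.destroy(linger=0)


if __name__ == "__main__":
    print(request_reply(b"which products are on sale?"))
```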

37:16 Yeah, I guess so.

37:17 That makes sense because you want the answer from the computation or whatever.

37:20 You want to know that it's done and so on.

37:22 Interesting.

37:22 Okay.

37:23 Yeah.

37:23 Well, that's pretty neat.

37:25 The other thing that comes to mind around this is serialization.

37:28 So when I'm doing microservices, I make JSON documents.

37:32 I know what things go in JSON, right?

37:34 Like fundamental types, strings, integers, and so on.

37:37 Surprisingly, dates and times can't go into JSON.

37:41 That blows.

37:41 I still like, this is 2021.

37:43 We can't come up with a text representation of what time it is.

37:46 Anyway, that's a bit of a pain.

37:48 It seems to me like you might be able to exchange more data more efficiently using a binary format.

37:54 And it's not even going over the HTTP layer, right?

37:57 It's going literally over a TCP socket.

38:00 Yeah.

38:00 Or IPC with Unix domain sockets, or UDP.

38:03 Even lower level than that.

38:04 Yeah.

38:04 Not even touching the network stack.

38:06 Right.

38:06 Yeah.

38:06 This gets us to the last piece of ZeroMQ that we haven't talked about yet.

38:10 And that's what is a message.

38:11 Right.

38:12 They're nice things.

38:13 You know, if you've worked with, you know, a lower level socket library, just talking TCP

38:17 sockets, you know, you have to deal with like chunks and then figure out when you're done

38:21 with your message protocol.

38:22 But if you've ever written an HTTP server, you know, you need to find those double blank lines

38:27 and all that stuff before you know that you have a request that you can hand off to your

38:31 request handler.

38:32 Right.

38:32 Right.

38:32 You're doing a whole lot of funky, like parsing the header.

38:35 Okay.

38:36 The header, it says it's the next hundred bytes of the thing that I'm getting.

38:39 And this one is an end.

38:40 So I'm at a part like it's, it's gnarly stuff.

38:42 I've worked on projects where we did that and it's super fast, but boy, it is a, it's a low

38:47 level business.

38:47 That's something Flask does for you.

38:49 Yeah.

38:49 Right.

38:50 It, it implements the HTTP protocol and then says, okay, here's a request.

38:53 Please send a reply.

38:54 And it helps you construct that reply message.

38:57 So ZeroMQ or PyZMQ live at that level of Flask, where a message is not one

39:05 binary blob, but a collection of binary blobs.

39:09 And ZeroMQ always delivers whole messages.

39:13 So it's atomic and it's asynchronous and it's messaging, which means you will never

39:17 get part of a message.

39:18 There's no like, okay, I got the first third of this message, keep it in my own buffer until

39:24 I get to the end.

39:26 A ZeroMQ socket does not become readable until an entire message is ready to be read.

39:31 Right.

39:31 And there may be buffering down in the C++ layer, but it's not going to tell you I've received

39:35 a thing until it's fully baked.

39:37 Got the answer.

39:38 Right.

39:38 Right.

39:39 Yeah.

39:39 All that stuff still happens.

39:40 It's just that ZeroMQ takes care of that.

39:42 And then at the PyZMQ level or at the ZMQ API level, when you receive

39:49 with a socket, or in PyZMQ when you call recv_multipart, you get a list of blobs of memory.

39:55 And so if you're talking about serialization with JSON, PyZMQ has a helper function

40:00 called send_json, and it's literally just json.dumps and then send.

40:05 Yeah.

40:05 With a little ensuring of UTF-8 bytes, I think.

40:07 Yeah.

40:07 Yeah.

40:08 Nice.
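Here is a short PyZMQ sketch of multipart messages and the JSON helpers just mentioned, assuming pyzmq: send_multipart sends several frames as one atomic message, recv_multipart returns them as a list of bytes, and send_json/recv_json wrap JSON encoding around a single-frame send. The frame contents and the dictionary are placeholders.

```python
import zmq


def multipart_and_json():
    ctx = zmq.Context()
    try:
        a = ctx.socket(zmq.PAIR)
        a.bind("inproc://frames")
        b = ctx.socket(zmq.PAIR)
        b.connect("inproc://frames")

        # One message made of three frames; the receiver gets all of them or none.
        a.send_multipart([b"header", b"body", b"\x00\x01\x02"])
        frames = b.recv_multipart()  # a list of bytes objects, one per frame

        # send_json encodes the object as JSON bytes and sends it as one frame.
        a.send_json({"op": "execute", "code": "1 + 1"})
        doc = b.recv_json()
        return frames, doc
    finally:
        ctx.destroy(linger=0)


if __name__ == "__main__":
    print(multipart_and_json())
```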

40:08 So PyZMQ, and this turned out to be more important

40:15 for IPython Parallel than it turned out to be for the Jupyter protocol.

40:19 I'm not familiar with IPython Parallel.

40:21 Tell me about this.

40:22 IPython Parallel is, so if you're aware of the Jupyter protocol, it's a network protocol

40:27 for, I've got somewhere over the network where I want to run code.

40:30 And I have this protocol for sending messages.

40:33 Please run this code.

40:34 Give me a return output.

40:35 Show me display stuff.

40:37 IPython Parallel is a kind of weird parallel computing library based on the fact that,

40:41 so I've got a network protocol to talk to, to run code remotely.

40:45 Why don't I just wrap that in a little bit to talk to N remote places.

40:49 Maybe partition up the work across them or something like that.

40:52 The fun thing about ZeroMQ is that in a Jupyter notebook, the kernel is the server.

40:57 The kernel listens for connections on its various sockets.

41:00 And then the notebook server, the web server, or the Qt console, or the terminal is a client,

41:06 and it connects to those sockets.

41:08 So IPython Parallel, because of this fun stuff about ZeroMQ not caring about connection

41:12 direction or count, adds a scheduler layer, and it modifies the kernel, the IPython kernel

41:19 that you'd use in a Jupyter notebook.

41:20 And the only change it makes is instead of binding on those sockets, it connects to a central scheduler.

41:27 And the kernel is otherwise identical.

41:28 The message protocol is otherwise identical.

41:30 But the connection direction is different because the many-to-one relationship is different.

41:35 There's one controller and many engines instead of many clients connecting to one kernel.

41:40 And then, again, using some of the magic of the ZeroMQ routing identities,

41:45 there's a multiplexer in PyZMQ called a monitored queue, where if you have a router socket,

41:54 so a router socket is one where the first...

41:56 So we talked about how a ZeroMQ message is a sequence of blobs of memory.

42:00 So it can just be one.

42:01 With a router socket, it's always at least two, because the first part is the routing identity to tell the underlying ZeroMQ

42:07 which peer should it actually send to.

42:10 Sure, okay.

42:10 That's cool.

42:11 And we don't have to worry about that, because that's down at the low level, right?

42:14 But that's what happens.

42:15 When you get a request, you need to remember that first part.

42:17 So when you send the reply, the first part of the reply is the ID that came with the request.

42:22 Got it, got it.

42:22 So it goes back to the right place.

42:23 Yeah.

42:23 But you can also use that if you know the IDs, you can send messages to a destination without being in response to a request, right?

42:32 What a router really is, is a socket that can route messages based on this identity prefix.

42:37 So if you have a bundle of identity prefixes, then you can send messages to anyone at any time.

42:41 And that allows us to build a multiplexing scheduler where one client, connected to one scheduler,

42:48 just sends messages, regular, plain old ZeroMQ protocol messages, but with an identity prefix from the client.

42:55 And those will end up at the right kernel just by the magic of ZeroMQ routing identities.
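Sending to a peer by identity, outside a strict request and reply exchange, can be sketched like this (assuming pyzmq; the engine names and helper are invented, loosely echoing IPython Parallel's engines). Each DEALER sets an explicit routing ID before connecting and sends a ready message so the ROUTER learns its identity. After that, the ROUTER can address any known peer whenever it likes. By default a ROUTER silently drops messages addressed to an identity it does not know.

```python
import zmq


def push_to_known_peers(timeout_ms: int = 5000) -> dict:
    ctx = zmq.Context()
    try:
        hub = ctx.socket(zmq.ROUTER)
        hub.bind("inproc://hub")

        engines = {}
        for name in (b"engine-0", b"engine-1"):
            dealer = ctx.socket(zmq.DEALER)
            dealer.setsockopt(zmq.ROUTING_ID, name)  # explicit identity, set before connect
            dealer.connect("inproc://hub")
            dealer.send(b"ready")  # lets the ROUTER learn this peer's identity
            engines[name] = dealer

        known = set()
        while len(known) < len(engines):
            if not hub.poll(timeout_ms):
                raise TimeoutError("an engine never checked in")
            identity, _ready = hub.recv_multipart()
            known.add(identity)

        # From here on the ROUTER can message any known peer, as often as it likes.
        for identity in sorted(known):
            hub.send_multipart([identity, b"work for " + identity])

        return {name: dealer.recv() for name, dealer in engines.items()}
    finally:
        ctx.destroy(linger=0)


if __name__ == "__main__":
    print(push_to_known_peers())
```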

42:59 Yeah, yeah.

43:00 And so this is a substantially different messaging pattern.

43:04 So the request reply patterns are all the same, but the connection patterns are totally different.

43:09 And the client and the endpoint don't need to know about it at all.

43:13 We just have this adapter in the middle.

43:15 I feel like to really get the zen of this and take full advantage, you've got to really think about these messaging patterns and styles a little bit,

43:22 because they're fairly different than, oh, this is what I know from web servers.

43:25 So the big thing to do if you're getting into ZeroMQ is to read what's called the guide.

43:29 And there'll be a link in the notes.

43:32 And if you go to zeromq.org, it'll be prominently linked.

43:34 And this goes through the different patterns that ZeroMQ thinks about,

43:38 the abstractions in ZeroMQ, and the different socket types and what they're for.

43:43 And the guide will help you.

43:44 And there are examples in many languages, including Python.

43:47 Yeah, this seems great.

43:48 Yeah.

43:48 It'll help you build kind of little toy example patterns of here's a publish subscribe application.

43:54 Here's a ventilator sink application.

43:57 And then it also does things with pictures.

44:00 Yes.

44:01 That's, I think, the way to internalize what the ZeroMQ concepts are and how to deal with them.

44:06 So when it comes to serialization, this is really important for IPython Parallel.

44:10 And it also comes up if you're in Jupyter and use the interactive widgets,

44:13 if you use the really intense ones that do like 3D visualization, interactive 3D visualization in the browser that sometimes are streaming a lot of data from the kernel.

44:22 Because a ZerumQ, this combines two things, one from PyZMQ and one from ZerumQ itself.

44:27 So the ZerumQ concept that a message is actually a collection of frames.

44:31 This lets you and another that ZerumQ can be zero copy and PyZMQ supports zero copy.

44:38 So anything that supports the Python buffer interface can be sent without copying,

44:42 meaning it's still copied over the network, but at no point are there any copies in memory.

44:47 So you can send a 100-megabyte NumPy array with ZeroMQ without copying it.

44:51 But then you've got to think about, oh, wait, if I send a NumPy array using the Python buffer interface,

44:57 all I got were the bytes.

44:58 Where's the dtype information?

45:01 Like, how do I know this is a 2D array of integers?

45:04 Because a message, in Python terms, is a list of blobs instead of a single blob,

45:11 you can serialize that metadata as a header and send the big blob you don't want to copy separately.

45:17 So you can json.dumps some message metadata that tells you how to interpret the binary blob, then send just the binary blob, and you don't copy it.

45:27 So then you can send as one message, right?

45:30 We're not breaking the single message delivery.

45:32 You have your metadata that's serialized with MessagePack.

45:36 That comes in as like a frame or something like that in the message.

45:38 Yeah.

45:39 Yeah.

45:39 So one frame is your header.

45:41 One frame is the data itself.

45:43 And we do this in the Jupyter protocol: the Jupyter protocol has an arbitrary number of buffers on the end.

45:48 But then there are three frames that are actually JSON-serialized dictionaries.
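A minimal sketch of the header-plus-blob idea described above, assuming PyZMQ and NumPy are installed. The helper names `send_array` and `recv_array` are illustrative, not part of PyZMQ: the first frame carries a small JSON header with the dtype and shape, and the second frame carries the raw array bytes, sent with `copy=False` so PyZMQ can hand the buffer to libzmq without an extra in-memory copy where possible.

```python
import numpy as np
import zmq


def send_array(sock: zmq.Socket, arr: np.ndarray) -> None:
    """Send an array as one two-frame message: JSON header, then raw bytes."""
    arr = np.ascontiguousarray(arr)  # the buffer interface needs contiguous memory
    header = {"dtype": str(arr.dtype), "shape": arr.shape}
    sock.send_json(header, flags=zmq.SNDMORE)  # frame 1: how to interpret the blob
    sock.send(arr, copy=False)                 # frame 2: the blob, no Python-side copy


def recv_array(sock: zmq.Socket) -> np.ndarray:
    """Receive a message produced by send_array and rebuild the array."""
    header = sock.recv_json()
    frame = sock.recv(copy=False)  # a zmq.Frame wrapping the received bytes
    # np.frombuffer views the frame's memory instead of copying it
    flat = np.frombuffer(memoryview(frame), dtype=header["dtype"])
    return flat.reshape(header["shape"])
```

Because both frames belong to one multipart message, ZeroMQ delivers them together or not at all, so the header and the data can't get separated in transit. The received array is a read-only view of the frame's memory.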

45:53 Very cool.

45:53 Very cool.

45:53 Yeah.

45:54 Looking at the guide here, it says there are 60 diagrams with 750 examples in 28 languages.

46:01 That's a big cross product matrix of options in here.

46:04 And you can also download it as a PDF to take with you, which, yeah, this looks like a really great place to get started.

46:10 Speaking of getting started, let's talk about programming with the Python aspect here.

46:15 All right.

46:16 So here we'll use PyZMQ and this is a library that you work on as well.

46:21 Yeah.

46:21 Yeah.

46:21 I maintain PyZMQ.

46:23 Yeah.

46:23 Awesome.

46:23 So maybe, you know, it's hard to talk about code, but just give us a sense of what it's like to create a server.

46:30 Like in Flask, you know, I say app equals Flask.

46:33 Then I decorate app.route on a function.

46:36 Like what's the 0MQ Python equivalent of that?

46:40 Yeah.

46:40 So first you always have to create a context, and then that context has a socket method that creates sockets.

46:46 And then you either bind or connect those sockets.

46:50 And then you start sending and receiving messages.

46:52 So if you're writing a server, that usually means it's the one that binds.

46:57 Yeah.

46:58 So you'd create a socket.

46:59 You'd call socket.bind and give it a URL, maybe a TCP URL or an IPC URL with a path, you know, a local path.

47:05 And then you'd go into a loop saying, you know, receive a message, handle that message, send a reply.

47:11 Or if it's a publisher.

47:13 They often have a while true loop sort of thing, right?

47:16 Just while true, wait for somebody to talk to me or while not exit.

47:19 Yeah.

47:20 That's a simple version.

47:20 Or you could integrate it into asyncio or Tornado or gevent or whatever.
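The simple blocking version of the server loop described above might look like the sketch below. It assumes a REP socket, a TCP URL of `tcp://127.0.0.1:5555`, and a hypothetical `handle` function that just upper-cases the request; an IPC URL such as `ipc:///tmp/example.sock` (a made-up path, Unix only) would work the same way. The optional `max_requests` parameter is only there so the loop can stop; a real server would usually loop until told to exit.

```python
from typing import Optional

import zmq


def handle(request: bytes) -> bytes:
    # Hypothetical handler for illustration: upper-case the request.
    return request.upper()


def serve(url: str = "tcp://127.0.0.1:5555", max_requests: Optional[int] = None) -> None:
    ctx = zmq.Context.instance()   # one shared context per process is typical
    sock = ctx.socket(zmq.REP)     # the socket type fixes the messaging pattern
    sock.bind(url)                 # the server is the side that binds
    handled = 0
    try:
        # Receive a request, handle it, send the reply, repeat.
        while max_requests is None or handled < max_requests:
            request = sock.recv()
            sock.send(handle(request))
            handled += 1
    finally:
        sock.close()  # default linger lets the last reply finish sending
```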

47:26 Yeah.

47:27 Yeah.

47:27 One of the fundamental principles of 0MQ is that it's async all over the place.

47:31 What's the async and await story with PyZMQ?

47:35 Is there any integration there?

47:36 Yeah.

47:37 So if you import zmq.asyncio and use it in place of zmq, you have the same API, but send and receive are awaitable instead.

47:47 Oh, that's glorious.

47:48 Yeah.

47:49 That's really, really fantastic.

47:50 So you should be able to scale that to handling lots of concurrent exchanges.

47:54 Pretty straightforward, right?

47:56 Yeah.
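A small sketch of the awaitable API, assuming PyZMQ's `zmq.asyncio` module: the context and sockets come from `zmq.asyncio`, while the socket-type constants still come from `zmq`. The reverse-the-bytes handler and the single request are illustrative choices to keep the example short.

```python
import asyncio

import zmq
import zmq.asyncio


async def reverse_once(sock: zmq.asyncio.Socket) -> None:
    request = await sock.recv()       # awaitable instead of blocking the thread
    await sock.send(request[::-1])


async def main() -> bytes:
    ctx = zmq.asyncio.Context()
    server = ctx.socket(zmq.REP)
    port = server.bind_to_random_port("tcp://127.0.0.1")
    client = ctx.socket(zmq.REQ)
    client.connect(f"tcp://127.0.0.1:{port}")
    try:
        # The server coroutine runs concurrently with the client on one event loop.
        server_task = asyncio.create_task(reverse_once(server))
        await client.send(b"ping")
        reply = await client.recv()
        await server_task
        return reply
    finally:
        ctx.destroy(linger=0)


if __name__ == "__main__":
    print(asyncio.run(main()))
```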

47:56 And that's how the, so taking Jupyter as an example again.

47:59 So the Jupyter notebook uses the Tornado framework, which, if you're getting into asyncio, is basically asyncio.

48:06 Right.

48:07 Yeah.

48:07 It's an early take on asyncio, from the early days.

48:10 Yeah.

48:10 And there we use something called a ZMQStream, which is inspired by Tornado's IOStream, their wrapper around a regular socket.

48:19 That's like: bytes are coming in, fire events when bytes have arrived.

48:23 ZMQStream is a Tornado thing with an on_recv method that takes a callback: whenever there's a message, it calls that callback with the message after receiving it.

48:35 Yeah.

48:35 And so that's actually how the IPython kernel and Jupyter notebook work on the ZMQ side, with these ZMQStream objects.
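A rough sketch of the callback style described above, assuming both PyZMQ and Tornado are installed (`ZMQStream` depends on Tornado's IOLoop, which runs on top of asyncio in modern Tornado). The PUSH/PULL pair and the future used to capture the first message are illustrative choices, not how Jupyter wires its sockets.

```python
import asyncio

import zmq
from zmq.eventloop.zmqstream import ZMQStream  # needs Tornado installed


async def main() -> list:
    ctx = zmq.Context()  # ZMQStream wraps a regular (non-asyncio) socket
    pull = ctx.socket(zmq.PULL)
    port = pull.bind_to_random_port("tcp://127.0.0.1")
    push = ctx.socket(zmq.PUSH)
    push.connect(f"tcp://127.0.0.1:{port}")

    received = asyncio.get_running_loop().create_future()

    def on_message(frames: list) -> None:
        # Called by the event loop with all frames of one complete message.
        if not received.done():
            received.set_result(frames)

    stream = ZMQStream(pull)  # attaches to the current Tornado IOLoop
    stream.on_recv(on_message)
    try:
        push.send_multipart([b"header", b"payload"])
        return await asyncio.wait_for(received, timeout=5)
    finally:
        stream.close()
        ctx.destroy(linger=0)


if __name__ == "__main__":
    print(asyncio.run(main()))
```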

48:41 Cool.

48:42 So the example you talked about is how to create a server, but in the web version you'd use requests to do a requests.get against the server, to be the client that talks to it.

48:53 What's that version in PyZMQ?

48:55 In PyZMQ, a client looks very much like a server, except instead of bind, you'd call connect.

49:01 And instead of receive, you do a send.

49:02 Yeah.

49:03 Yeah.

49:03 And so in a request reply pattern.

49:05 Yeah.

49:05 So wherever you have a receive on the server side, you have a send on the client side and vice versa.

49:10 So in a request reply pattern, the client is doing send a request and then receive to get the reply.

49:17 In a server, you're doing receive a request and send the reply.

49:21 In PubSub, you're just, you're only sending on the publisher side and on the subscriber side, you're only receiving.
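The mirrored send/receive order described above can be shown in a single thread with an in-process transport. This is a sketch, assuming PyZMQ; the `inproc://request-reply` name and the request text are arbitrary. It also shows that a REQ socket enforces the lockstep: trying to receive again before sending a new request fails with `EFSM`.

```python
import zmq


def request_reply_roundtrip():
    ctx = zmq.Context()
    try:
        server = ctx.socket(zmq.REP)
        server.bind("inproc://request-reply")  # binding first is the safe order for inproc
        client = ctx.socket(zmq.REQ)
        client.connect("inproc://request-reply")

        client.send(b"2 + 2")             # client: send a request...
        request = server.recv()           # server: ...receive it...
        server.send(request + b" = 4")    # server: ...send the reply...
        reply = client.recv()             # client: ...and receive it.

        # REQ enforces strict send/recv alternation: receiving again before
        # sending a new request fails immediately with EFSM.
        try:
            client.recv(zmq.NOBLOCK)
            lockstep_enforced = False
        except zmq.ZMQError as exc:
            lockstep_enforced = exc.errno == zmq.EFSM
        return reply, lockstep_enforced
    finally:
        ctx.destroy(linger=0)
```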

49:27 Nice.

49:27 And the way you choose these things is when you go to the context and create the socket, you tell it what kind of pattern you're looking for.

49:35 Is that where you specify that?

49:36 Yeah.

49:36 So ZMQ has a bunch of constants that identify socket types.

49:40 So you'd use, when you create a socket, you always have to give it a single argument that is the socket type.

49:45 So it'd be like zmq.PUB for a publisher socket, zmq.SUB for a subscriber socket, ROUTER, DEALER, PUSH, PULL.

49:51 Yeah.

49:52 And that defines the messaging pattern of the underlying sockets.
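To make the socket-type constants concrete, here is a toy version of the guide's ventilator/worker/sink pipeline built from PUSH and PULL sockets. It is a single-threaded sketch, assuming PyZMQ; the `inproc://` names and the squaring "work" are made up for illustration, and a real pipeline would run workers in separate threads or processes.

```python
import zmq


def pipeline_demo(numbers):
    ctx = zmq.Context()
    try:
        ventilator = ctx.socket(zmq.PUSH)   # hands out tasks
        ventilator.bind("inproc://tasks")
        sink = ctx.socket(zmq.PULL)         # collects results
        sink.bind("inproc://results")

        worker_in = ctx.socket(zmq.PULL)    # a worker pulls tasks...
        worker_in.connect("inproc://tasks")
        worker_out = ctx.socket(zmq.PUSH)   # ...and pushes results onward
        worker_out.connect("inproc://results")

        for n in numbers:
            ventilator.send_string(str(n))
        for _ in numbers:
            n = int(worker_in.recv_string())
            worker_out.send_string(str(n * n))
        return sorted(int(sink.recv_string()) for _ in numbers)
    finally:
        ctx.destroy(linger=0)
```

With several workers connected to `inproc://tasks`, the PUSH socket would distribute tasks among them without any extra code.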

49:55 You also have some JupyterLab examples, which I guess we can link to as well, like some diagrams for that, right?

50:02 Yeah.

50:02 So the Jupyter protocol has a diagram, one that we maybe should redesign.

50:08 Whoops.

50:08 I didn't mean that far.

50:09 There we go.

50:11 It shows you what, basically what happens when you have one kernel and multiple front ends connected to it with the different socket types that we have in the Jupyter protocol.

50:19 So the Jupyter kernel has two router sockets and a pub socket and a fully featured front end would have two dealer sockets or request sockets and a sub socket.
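The ROUTER/DEALER pairing above can be sketched with one ROUTER standing in for a kernel and two DEALERs standing in for front ends. This is a simplified toy, assuming PyZMQ, and not the Jupyter protocol itself: the identities `frontend-a` and `frontend-b` and the message bodies are hypothetical. It shows why the kernel side needs no connection events: ROUTER prefixes each incoming message with the sender's identity and uses the first frame of each outgoing message to pick the peer.

```python
import zmq


def router_dealer_demo() -> list:
    ctx = zmq.Context()
    try:
        # One ROUTER (think: kernel) serving several DEALERs (think: front ends).
        router = ctx.socket(zmq.ROUTER)
        router.setsockopt(zmq.RCVTIMEO, 5000)
        port = router.bind_to_random_port("tcp://127.0.0.1")

        frontends = []
        for name in (b"frontend-a", b"frontend-b"):
            dealer = ctx.socket(zmq.DEALER)
            dealer.setsockopt(zmq.IDENTITY, name)  # explicit routing id for the demo
            dealer.setsockopt(zmq.RCVTIMEO, 5000)
            dealer.connect(f"tcp://127.0.0.1:{port}")
            frontends.append(dealer)

        for dealer in frontends:
            dealer.send(b"request")

        for _ in frontends:
            # ROUTER prepends the sender's identity as the first frame...
            identity, body = router.recv_multipart()
            # ...and routes a reply back by putting that identity first.
            router.send_multipart([identity, body + b" from " + identity])

        return [dealer.recv() for dealer in frontends]
    finally:
        ctx.destroy(linger=0)
```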

50:31 There's just so much happening behind the scenes.

50:33 I think getting your mind around these is really neat, but basically ZMQ is handling so much of this for everyone, right?

50:39 It's handling all of it, so we never have to care that there are multiple peers connected.

50:43 We don't need to deal with that.

50:45 We have no connection events.

50:46 For some folks working on different issues, that causes headaches, because there are some aspects of ZMQ, like the non-guaranteed pub-sub delivery.

50:55 It's actually kind of a pain because we actually want all the messages.

51:00 Yeah, yeah, of course.
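The non-guaranteed pub-sub delivery mentioned above is easy to see in practice: a PUB socket never waits for subscribers, so anything published before a subscription has propagated is silently dropped (the guide calls this the "slow joiner" problem). A sketch, assuming PyZMQ; the topics `weather` and `sports` and the retry count are illustrative. Subscriptions are prefix matches on the first frame.

```python
import zmq


def pubsub_demo(attempts: int = 50):
    ctx = zmq.Context()
    try:
        pub = ctx.socket(zmq.PUB)
        port = pub.bind_to_random_port("tcp://127.0.0.1")
        sub = ctx.socket(zmq.SUB)
        sub.setsockopt(zmq.SUBSCRIBE, b"weather")  # prefix filter on the first frame
        sub.setsockopt(zmq.RCVTIMEO, 100)
        sub.connect(f"tcp://127.0.0.1:{port}")

        # Early messages may be dropped before the subscription arrives,
        # so keep publishing until one gets through.
        for _ in range(attempts):
            pub.send_multipart([b"sports", b"ignored by this subscriber"])
            pub.send_multipart([b"weather", b"sunny"])
            try:
                return sub.recv_multipart()
            except zmq.Again:  # receive timed out; nothing delivered yet
                continue
        return None
    finally:
        ctx.destroy(linger=0)
```

If every message matters, as with Jupyter output, the application has to add its own handshake or acknowledgements on top.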

51:02 You know, around a lot of libraries, there's stuff that adds layers on top.

51:07 So like Flask extensions and stuff like that.

51:10 Is there an extension that will like let you do reliable messaging that you can plug in on top of this or anything like that?

51:16 Yeah.

51:17 So if you look at the ZMQ guide, there are different patterns, some of which are basic uses of sockets.

51:22 So there's no reason to build another layer of software on top in order to implement a simple ventilator pattern.

51:30 But if you're talking about things like reliable messaging, there are some patterns in the guide and they, they have names.

51:37 And so some people have written those protocols as standalone Python packages that implement a given scheme on top of ZMQ, which might have things like message replay or leader election.

51:52 Like if you do Kubernetes things, there are often leader elections to allow you to scale and migrate things.

51:57 So you can do that with ZMQ applications.
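One of the simplest reliability patterns in the guide, which it calls the Lazy Pirate, needs no extra package: the client polls for a reply with a timeout and, if none arrives, throws away its REQ socket and retries on a fresh one. A sketch, assuming PyZMQ; the function name `lazy_pirate_request` and the default timeout and retry values are illustrative.

```python
from typing import Optional

import zmq


def lazy_pirate_request(url: str, request: bytes,
                        timeout_ms: int = 1000, retries: int = 3) -> Optional[bytes]:
    """Send one request, retrying on timeout; return the reply or None."""
    ctx = zmq.Context.instance()
    for _ in range(retries):
        sock = ctx.socket(zmq.REQ)
        sock.connect(url)
        try:
            sock.send(request)
            # Wait up to timeout_ms for a reply instead of blocking forever.
            if sock.poll(timeout_ms, zmq.POLLIN):
                return sock.recv()
        finally:
            # A REQ socket that sent but got no reply is stuck in its
            # send/recv lockstep, so discard it (and any queued request).
            sock.close(linger=0)
    return None
```

This only retries; it doesn't deduplicate, so a server that did process the first attempt may process the retry too. That is exactly the kind of trade-off, like poison messages, that comes with reliable-delivery schemes.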

52:00 Yeah.

52:00 And some of those reliable messaging things sound amazing.

52:02 And I go, yeah, that's going to be great.

52:03 But there's other drawbacks to those as well.

52:06 Like poison messages.

52:07 Like, I've got to make sure I send this, but the client can't handle it when it receives it.

52:11 So it crashes.

52:12 So then I try to send it again.

52:13 And you're just in these like weird loops and there's a lot of, they all have their challenges.

52:17 Yeah.

52:17 So another thing I think would be interesting to touch on, since we've spent so much time on the programming patterns that I don't know

52:26 if we have as much time as we imagined, is building PyZMQ to basically wrap this C library, right?

52:35 There are some challenges you've had there.

52:36 It supports both CPython and PyPI.

52:39 Sorry, PyPy.

52:40 PyPy.

52:40 Yeah.

52:40 And whatnot.

52:41 So maybe talk about some of the ways you did that.

52:44 You had to do this like in the early days when there was Python 2 and 3.

52:48 There's a lot of stuff going on, maybe pre-wheels, right?

52:50 Yeah.

52:51 So a few years pre-wheels.

52:52 So with IPython and Jupyter, our target audience is pretty wide, right?

52:56 We have a lot of people in education, a lot of students, a lot of people on Windows.

53:00 A lot of those people don't even want to be programmers or care about like.

53:04 Exactly.

53:04 They just want this to work.

53:05 And why won't this thing install?

53:06 I just need to do this for my class or for my project.

53:09 It needs to work, right?

53:10 That kind of thing.

53:11 Yeah.

53:11 And so having a compiled dependency was a big deal for a lot of people.

53:18 And so making binary releases as widely installable as possible was really important to us.

53:24 And supporting as many Python implementations as possible was also important to us.

53:30 So PyZMQ was originally written all in Cython, which is a wonderful tool for this when you're interfacing with a C library, especially when you want to do things with the buffer interface.

53:42 So when you have a C object and a Python object that represent the same memory, Cython is the best.

53:48 And that's a lot of what we do for the zero-copy stuff in PyZMQ.

53:54 So when we were working on this, wheels didn't exist.

53:56 Wheels being the binary version that you get from PyPI now.

53:59 Yeah.

54:00 So if you pip install something like PyZMQ, it doesn't compile it, right?

54:04 You get a wheel and that just unzips it and it's really nice.

54:07 But at the time there were only eggs.