Marimo - Reactive Notebooks for Python

Episode Deep Dive

Guest Introduction and Background

Akshay is the co-founder of Marimo, a reactive notebook framework for Python. He completed his PhD at Stanford, focusing on machine learning research and model development. Before returning to academia, Akshay worked at Google Brain on TensorFlow, contributing to projects that helped shape aspects of TensorFlow 2.x. His passion for bridging reproducible software engineering practices with the interactive exploration of data science led to Marimo's creation.

What to Know If You're New to Python

If this is your first foray into Python or interactive notebooks, here are a few tips to get the most from this episode:

- Learn basic Python syntax and data structures (lists, dicts, etc.) so you understand the code snippets.

- Know what a "notebook" is: A computational document mixing code, text, and visual outputs.

- Explore how reactivity (the automatic updating of outputs) can accelerate your learning and experimentation.

- Remember that reproducibility is a common challenge in traditional notebooks; watch for how Marimo addresses it.

Key Points and Takeaways

- Marimo: A Reactive Notebook for Python

Marimo is an open source tool designed to fix some of the core pain points in Jupyter, particularly around reproducibility and state management. Its reactive engine automatically detects relationships among cells and triggers minimal re-computation when variables change.

- Reproducibility Issues in Traditional Notebooks

Akshay shared how Jupyter Notebooks can fall out of sync when cells are executed out of order. This leads to confusion and often invalidates results; he cited a study in which only a small fraction of public notebooks on GitHub reproduced their stored results when rerun. Marimo uses a dependency graph to ensure code and outputs always align.

- Links and Tools:

- Jupyter Project

- Paper reference on reproducibility crisis (not publicly linked but mentioned in discussion)
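To make the out-of-order problem concrete, here is a minimal sketch that imitates a notebook kernel with one shared dictionary and `exec`. It is an illustration only, not how Jupyter or Marimo is implemented; the cell contents and the `run` helper are hypothetical.

```python
# A stand-in for a notebook kernel: every "cell" runs against one shared namespace.
kernel = {}

cells = {
    1: "x = 1",
    2: "y = x + 1",
    3: "result = y * 10",
}

def run(cell_id):
    """Execute one cell's source in the shared kernel namespace."""
    exec(cells[cell_id], kernel)

# Run top to bottom, as the author originally did.
for cell_id in (1, 2, 3):
    run(cell_id)
first_result = kernel["result"]

# Edit cell 1, then rerun cells 1 and 3 but forget cell 2.
cells[1] = "x = 100"
run(1)
run(3)
stale_result = kernel["result"]  # y was never recomputed, so this is unchanged

# Restart the "kernel" and run everything in order.
kernel.clear()
for cell_id in (1, 2, 3):
    run(cell_id)
fresh_result = kernel["result"]

print(first_result, stale_result, fresh_result)
```

The code on the page says `x = 100` in both of the last two cases, yet the stale run and the clean run disagree. A reactive notebook avoids this by rerunning cell 2 automatically when cell 1 changes.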

- Bringing Software Engineering Rigor to Data Science

The inspiration for Marimo came from Akshay's background in machine learning research, where messy notebooks made collaboration and code reuse difficult. By storing notebooks as plain .py files, Marimo simplifies versioning in Git, fosters modular code structure, and helps large teams collaborate more smoothly.

- Reactive Programming at the Core

Marimo notebooks use static analysis to detect how global variables flow across cells. Whenever a cell changes, it recomputes only dependent cells. This approach significantly reduces errors compared to imperative notebooks, mimicking the elegance of tools like Excel and Pluto.jl but for Python.
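The idea in this paragraph can be sketched with Python's standard `ast` module. This is a simplified illustration under stated assumptions, not Marimo's actual implementation: it treats every name in a cell as a global, and the cell names, the `defs_and_refs` helper, and the graph-building functions are all hypothetical.

```python
import ast

def defs_and_refs(source):
    """Return (names assigned in the cell, names read but not assigned there).

    Simplification: every name anywhere in the cell is treated as a global.
    """
    defs, refs = set(), set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Store):
                defs.add(node.id)
            else:
                refs.add(node.id)
    return defs, refs - defs

def build_children(cells):
    """Map each cell to the cells that read a variable it defines."""
    info = {name: defs_and_refs(src) for name, src in cells.items()}
    children = {name: set() for name in cells}
    for parent, (defs, _) in info.items():
        for child, (_, refs) in info.items():
            if parent != child and defs & refs:
                children[parent].add(child)
    return children

def cells_to_rerun(changed, children):
    """Collect every cell downstream of the changed cell."""
    stale, stack = set(), [changed]
    while stack:
        for child in children[stack.pop()]:
            if child not in stale:
                stale.add(child)
                stack.append(child)
    return stale

cells = {
    "a": "x = 2",
    "b": "y = x * 3",
    "c": "z = y + 1",
    "d": "w = 10",
}
children = build_children(cells)
print(sorted(cells_to_rerun("a", children)))  # ['b', 'c']
print(sorted(cells_to_rerun("d", children)))  # []
```

Editing cell `a` marks only `b` and `c` as stale; cell `d` is untouched because nothing links it to `x`. No cell is executed to work this out.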

- Ease of Experimentation with Caching

Because cells in Marimo can be expensive (e.g., training machine learning models), Marimo offers caching solutions to avoid repetitive computation. The system knows precisely which cells changed so it can reuse previous results without demanding manual reruns across the entire workflow.
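One way to picture this kind of caching is to key a cell's result on a hash of its source code and its inputs. The sketch below is a generic illustration, not Marimo's caching mechanism; `run_cell`, `cache`, and `executions` are hypothetical names, and it assumes inputs have stable `repr` values.

```python
import hashlib

cache = {}
executions = []  # records each real (non-cached) run, for demonstration

def run_cell(source, inputs):
    """Run a cell's source with the given inputs, reusing a cached result if possible."""
    key = hashlib.sha256(
        repr((source, sorted(inputs.items()))).encode()
    ).hexdigest()
    if key in cache:
        return cache[key]
    namespace = dict(inputs)
    exec(source, namespace)
    executions.append(key)
    # Keep only the variables the cell itself defined.
    outputs = {
        name: value
        for name, value in namespace.items()
        if name not in inputs and not name.startswith("__")
    }
    cache[key] = outputs
    return outputs

slow_cell = "total = sum(range(n))"
first = run_cell(slow_cell, {"n": 1000})
second = run_cell(slow_cell, {"n": 1000})  # same code and inputs: served from the cache
third = run_cell(slow_cell, {"n": 2000})   # input changed: the cell runs again
print(first["total"], len(executions))     # 499500 2
```

Because the key covers both code and inputs, editing the cell or changing an upstream value invalidates the entry automatically, with no manual bookkeeping.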

- AI and Large Language Models Integration

Marimo provides an AI-powered assistant that can scaffold notebooks or transform code contextually. While still new, it demonstrates how LLMs can power quick prototyping and code generation. Users can integrate additional features like data frame introspection and code rewriting.

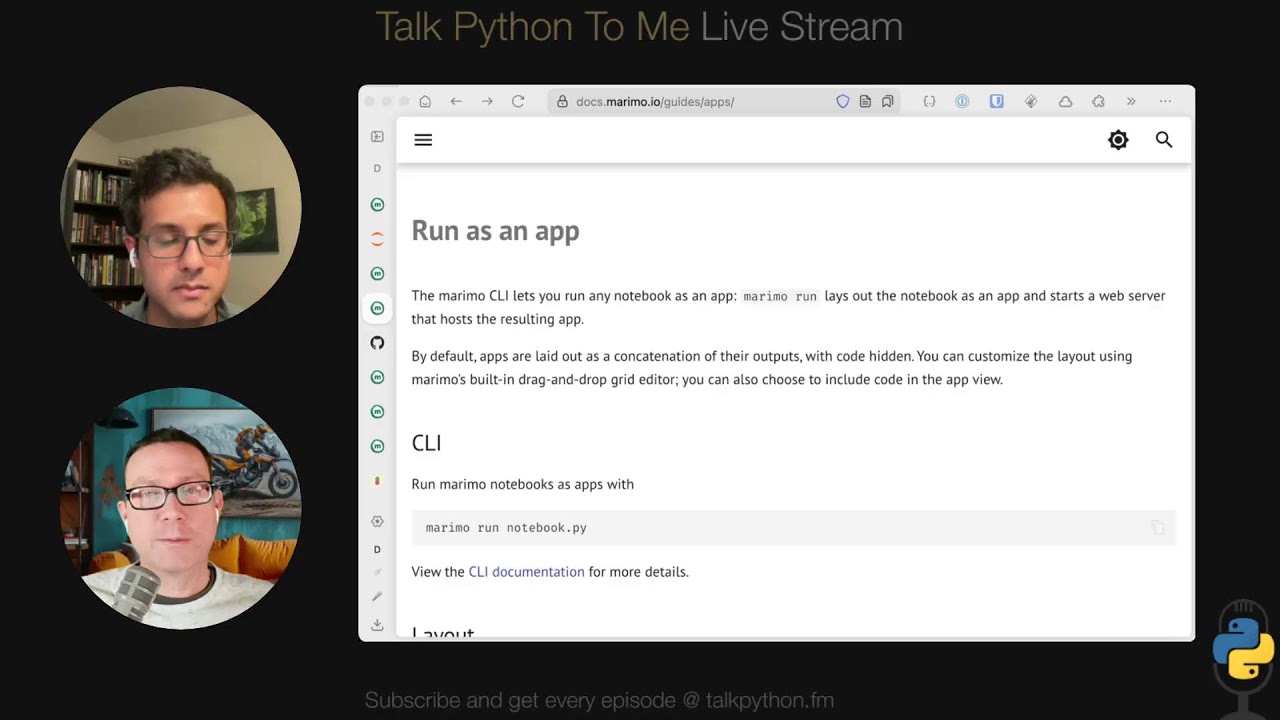

- Serving Marimo as Standalone Apps

With a single command (marimo run notebook.py), developers can serve their notebook as a web application. Marimo also supports a WebAssembly approach using Pyodide, enabling purely static hosting of interactive notebooks without requiring a live server environment.

- Static Analysis vs. Runtime Tracing

Akshay explains Marimo's preference for static analysis of Python ASTs over runtime tracing. This design choice yields more predictable performance and avoids the complexity of capturing all references at runtime, an especially tricky requirement with dynamic Python features.
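Two properties of a static approach can be shown with the standard `ast` module: the code is never executed, and only assignments are visible, not in-place mutations. This is a general illustration of AST-based analysis rather than a description of Marimo's internals; `assigned_names` is a hypothetical helper.

```python
import ast

def assigned_names(source):
    """Names that the source assigns to, found without running it."""
    return {
        node.id
        for node in ast.walk(ast.parse(source))
        if isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store)
    }

# The code is only parsed, so the division by zero never happens.
print(assigned_names("total = 1 / 0"))        # {'total'}

# An assignment is visible to the analysis...
print(assigned_names("items = items + [4]"))  # {'items'}

# ...but an in-place mutation is just a method call on an existing name.
print(assigned_names("items.append(4)"))      # set()
```

The trade-off is predictability: the analysis gives the same answer every time and costs almost nothing, but code that changes objects in place looks like a plain read, so reassigning a variable is the form a static analyzer can see.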

- Open Source and Funding Path

Marimo's early development was supported by Stanford's SLAC National Accelerator Laboratory. They later received investment from venture and angel investors, including notable names from Kaggle and Google Brain, ensuring the project remains open source and sustainable long-term.

- Future of Notebooks and Machine Learning

While machine learning has advanced quickly, the data science tooling and ecosystem still require user-friendly, reproducible workflows. Marimo's blend of reactivity, version control readiness, and AI-driven development points to a future where notebooks can do even more for novices and experts alike.

Interesting Quotes and Stories

"The code of a cell is a caption for its output." - Akshay, referring to inspiration from Pluto.jl's approach to reactive notebooks

"We found ourselves having to jump through hoops with Jupyter's out-of-order execution. It's just not reproducible enough for larger projects." - Akshay, on the motivation behind Marimo

Key Definitions and Terms

- Reactive Notebook: A notebook environment that automatically updates dependent cells when a variable or cell they rely on changes.

- Static Analysis: Analyzing source code to discover relationships (variable reads/writes) without running the code.

- Data Flow Graph: A directed acyclic graph (DAG) representing how data moves from one computation (or cell) to another, common in frameworks like TensorFlow.

- Caching: Storing previously computed results to avoid re-running expensive processes, especially valuable in iterative data exploration.
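As a small illustration of the data flow graph definition above, Python's standard `graphlib` module (Python 3.9+) can order the nodes of a DAG so that every computation runs after the ones it depends on. The cell names here are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Map each cell to the set of cells it depends on.
depends_on = {
    "load": set(),
    "clean": {"load"},
    "train": {"clean"},
    "plot": {"clean"},
}

# A valid execution order: dependencies always come first.
order = list(TopologicalSorter(depends_on).static_order())
print(order)

# A cycle means there is no valid order, which is why the graph must be acyclic.
try:
    list(TopologicalSorter({"a": {"b"}, "b": {"a"}}).static_order())
    has_cycle = False
except CycleError:
    has_cycle = True
print(has_cycle)
```

The relative order of `train` and `plot` is not fixed, since neither depends on the other; only the constraint that `load` precedes `clean` and `clean` precedes both is guaranteed.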

Learning Resources

- Python for Absolute Beginners: Perfect if you're new to Python's core syntax and concepts.

- Reactive Web Dashboards with Shiny: Learn about transparent reactive programming concepts for Python-based dashboards.

- Data Science Jumpstart with 10 Projects: Practice data-focused projects and grow your exploration skills.

Overall Takeaway

Marimo aims to combine the intuitive, iterative nature of notebooks with the rigor of best-practice software development. By storing notebooks in Python files and using a reactive model to enforce reproducibility, Marimo eliminates many of the pitfalls that can plague Jupyter-powered workflows. For data scientists, academic researchers, and even novices keen on reproducible and collaborative work, Marimo stands out as a next-gen approach to Python notebooks.

Links from the show

YouTube: youtube.com

Source: github.com

Docs: marimo.io

Marimo: marimo.io

Discord: marimo.io

WASM playground: marimo.new

Experimental generate notebooks with AI: marimo.app

Pluto.jl: plutojl.org

Observable JS: observablehq.com

Watch this episode on YouTube: youtube.com

Episode #501 deep-dive: talkpython.fm/501

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript

00:00 Have you ever spent an afternoon wrestling with a Jupyter notebook, hoping that you ran all the cells in just the right order, only to realize your outputs were completely out of sync?

00:08 Today's guest has a fresh take on solving that exact problem.

00:12 Akshay Agrawal is here to introduce Marimo, a reactive Python notebook that ensures your code and outputs always stay in lockstep.

00:20 And that's just the start.

00:21 We'll also dig into Akshay's background at Google Brain and Stanford, what it's like to work on cutting-edge AI, and how Marimo is uniting the best of data science exploration and real software engineering.

00:33 This is Talk Python To Me, episode 501, recorded Thursday, February 27th, 2025.

00:41 Are you ready for your host, please?

00:43 You're listening to Michael Kennedy on Talk Python To Me.

00:46 Live from Portland, Oregon, and this segment was made with Python.

00:53 Welcome to Talk Python To Me, a weekly podcast on Python.

00:56 This is your host, Michael Kennedy.

00:58 Follow me on Mastodon, where I'm @mkennedy, and follow the podcast using @talkpython, both accounts over at fosstodon.org, and keep up with the show and listen to over nine years of episodes at talkpython.fm.

01:12 If you want to be part of our live episodes, you can find the live streams over on YouTube.

01:16 Subscribe to our YouTube channel over at talkpython.fm/youtube and get notified about upcoming shows.

01:23 This episode is sponsored by Worth Recruiting.

01:26 Worth Recruiting specializes in placing senior level Python developers and data scientists.

01:31 Let Worth help you find your next Python opportunity at talkpython.fm/Worth.

01:36 Akshay, welcome to Talk Python To Me. Awesome to have you here.

01:39 Thanks, Michael. Really happy to be here.

01:41 Yeah, it's data science hour, isn't it?

01:44 Yeah, I guess it is.

01:46 In a sense, but also in a sense, kind of bringing data science a little closer to traditional, formal computer science or more.

01:55 I don't know.

01:55 I don't know what to think about computer science.

01:57 Software engineering.

01:58 Software engineering.

01:59 Yeah, that's our goal.

02:00 Blending data science with sort of the rigor and reproducibility of software engineering.

02:05 Yeah.

02:05 And I think people are going to find it pretty interesting.

02:08 It's definitely gained a lot of traction lately.

02:10 So it's going to be really fun to dive into Marimo.

02:13 Before we do, though, who are you?

02:15 What are you doing here? Tell people about yourself.

02:18 Sure. So I'm Akshay. I'm one of the co-founders and developers of Marimo.

02:24 I started working on Marimo a few years ago, I guess, a little over two years ago, right after I finished a PhD at Stanford where I was working on machine learning research.

02:35 And before that, I was at Google Brain where I worked on TensorFlow.

02:39 And I guess for the past several years, I've been wearing different hats. It's either a computer systems hat, where I build open source software and domain-specific languages for people who do machine learning, or I just do machine learning.

02:51 And for the past few years, it's been the first hat.

02:54 So working on the Marimo notebook, which is our attempt to blend the best parts of interactive computing with the reusability and reproducibility of regular Python software.

03:05 Well, that's very cool.

03:06 You've been right at the cutting edge of this whole machine learning thing, I guess, right?

03:11 If you go back a handful of years, it's different today than it was five years ago.

03:16 Oh, yeah.

03:16 It's very different.

03:17 I was at Google Brain 2017 to 2018.

03:21 So I think we had Transformers, but we didn't really appreciate all that they could do.

03:27 Yeah.

03:29 But it was a really exciting time to be at Google Brain, where it was pure research; you could just work on anything that you wanted to work on.

03:37 And I was an engineer there on, actually, a small sub-team inside of TensorFlow where we were working on TensorFlow 2, which is sort of making TensorFlow more like PyTorch, crudely.

03:47 And that was a lot of fun, but yeah, got to go to like a bunch of talks by researchers and it was a special time for sure.

03:54 Yeah, I bet it was.

03:55 Well, you know, that's such an interesting background.

03:58 I think maybe give people a sense of what is Google Brain and all that and maybe just Transformers, right?

04:04 It's the foundation of so much surprising and unexpected tech these days.

04:12 Yeah, yeah, I'm happy to.

04:14 So Google Brain, what is Google Brain?

04:16 So I guess technically Google Brain no longer exists as it did back then.

04:20 Now it's just DeepMind with DeepMind and Google Brain merged.

04:23 But back then Google Brain was, so there were, I guess, two research labs under the Alphabet umbrella, Google Brain and DeepMind.

04:31 And Google Brain was a place where it was really special.

04:34 So there were folks working on all kinds of research projects, from, you know, using transformers for code generation, but also systems research projects.

04:42 Like I was there when the JAX machine learning library, which is an alternative to TensorFlow and PyTorch, was still in the early days of development.

04:51 And I was able to meet with like Roy Frostig, Matt Johnson.

04:56 And that was really cool sort of coming up to speed on machine learning systems in that era.

05:03 I was only there for a year because I decided I wanted to go back and do a PhD myself in machine learning research where I used actually TensorFlow and PyTorch to work on new sort of like machine learning modules.

05:16 Did you feel bad when you used PyTorch?

05:18 You're like, oh, I'm cheating.

05:20 No, no.

05:21 I mean, a little, I guess.

05:23 But, you know, it's the thing like everyone else used PyTorch.

05:27 And we had just started working on TensorFlow 2 when I joined, whereas PyTorch had already been mature.

05:31 So it was the responsible thing to do.

05:36 And Transformers, what are these Transformer things?

05:39 They came out of Google, right?

05:41 Yeah, yeah, they did.

05:42 Attention is all you need.

05:45 These Transformer things, I guess, are the backbone of all these large language models that we're seeing today.

05:51 And they proved to have like an incredible capacity for modeling, I guess, in a generative way, all kinds of text.

05:59 And in a way that, honestly, if I had to admit, surprised me.

06:03 At the time, in 2017 to 2018, we were doing good work in machine translation and stuff.

06:08 But even back then, there was researchers who were working on, at Google Brain, code generation, writing a bunch of unit tests, stuff that people do regularly today, actually, with large language models.

06:20 I put on my systems engineer hat back then, and I was a little skeptical, to be honest.

06:24 And I've just been totally proved wrong, and I'm happy to have been proved wrong.

06:28 So it's been phenomenal seeing sort of that, the success of that particular model.

06:34 And yeah, having quite a small part in it.

06:37 Yeah, I was, I've been a long time skeptic of machine learning in the sense that for so long, it was, it's kind of like fusion.

06:43 It's going to be amazing, but it's 10, 20, 30 years out and maybe, you know, and it was all almost like knowledge, knowledge-based type things.

06:55 And just, I mean, it was interesting.

06:57 it looked kind of relevant and possibly useful in some of the cases, but not like it is today.

07:02 Like today, people who don't even care about technology are blown away by this stuff, and they use it all day long, and it's gone so much farther and so much faster than I expected it to.

07:13 I'm all about it these days.

07:15 I still write most of my code, but any time there's something tricky instead of going to Google and hunting through Stack Overflow, let's see what chat says about it or something like that.

07:27 And then maybe we'll go to Google if we don't get a good answer, you know?

07:29 Yeah.

07:30 No, no, I'm the same way.

07:31 I spent more time than I care to admit last night migrating from Vim to NeoVim.

07:37 And I know I'm late to the party, but Perplexity and other tools just made it a lot easier to start a from-scratch installation without bringing over my vimrc.

07:49 And you can ask it detailed questions, right?

07:51 For example, I needed some way to reset the initial offset in DaVinci Resolve timelines.

07:56 It was, okay, well, here's your four options, and these are the way you can do it, and these are the tradeoffs.

08:00 It's not just you might get an, you know, here is the switch, but you get an analysis.

08:05 And it's pretty impressive.

08:08 So the reason I'm going into this is I want to ask you, having been on the inside at the early days, what is your perspective of seeing this trajectory or trajectory is the way I should point it.

08:20 Upwards, not logarithmic.

08:22 Yeah.

08:23 I guess what I will say is I think one thing that's really changed is, back when I was at Brain, even though the models were advanced, I felt like in order to use machine learning well, you really did need to be somewhat trained in machine learning.

08:39 You know, it was important to understand what a loss function, like basic things, like what a

08:42 loss function was, what's training, what's validation.

08:46 These days, like, you don't need to, like, at all, actually.

08:49 I think especially to use LLMs, right?

08:52 Like, you just need to know inputs and outputs.

08:54 It's like a function call that sort of has fuzzy outputs.

08:57 You know, it's a mathematical function in quotes.

09:00 Yeah.

09:01 So I think that's one thing that's totally...

09:04 I guess it kind of shows that artificial intelligence is transitioning more from, I guess, like a science to a technology, and that tool that people can just readily use without

09:13 really needing to understand how it works.

09:18 As for the trajectory, I've been bad at making predictions in the past.

09:22 I think I will be bad at predicting anything right now.

09:26 Surely, these models are very good for coding,

09:31 for massaging marketing copy, and, you know, I do the random tasks where I have a bio I wrote in first person and someone asked for it in third person and I'm too lazy to change it, and I just give it to a chat model. Yeah, but if you ask for a trajectory of AGI or ASI, which I don't even remember what the S stands for these days, I'm not sure I can predict anything on those timelines.

09:56 Yeah. I hear you. I'm, I'm, I'm with you on the projects prediction, but I would say that the adoption and effectiveness of it has far outpaced what I would have guessed five years ago.

10:08 Yeah. I mean, even local models, like it's kind of exciting, like what you can just run on your MacBook.

10:13 I also didn't really anticipate that. Yeah.

10:16 All right. I don't want to make this the AI show cause it's not about AI,

10:19 but your background is so interesting. So when you were working initially on Transformers, did you perceive, you as a group, not you individually, the need for the scale of the computation? Like, you know, to train GPT o1, I don't know how much compute was involved, but probably more than cities use in terms of energy sometimes. You know what I mean? It was a lot. And how much compute were you all using back then versus now, I guess, is what I'm getting at.

10:50 I guess, yeah, and I think you kind of said it, but just to clarify or to make it clear, I personally didn't work on Transformers. I was working on TensorFlow, so that, yeah,

10:59 the, I guess, embedded DSL for training machine learning models that was used within Google and is still used today. I can't give you specific numbers, to be honest, because I was pretty deep in the systems part of working on TensorFlow and not paying too much attention to, like, standing up distributed clusters.

11:21 I did things like adding support to TensorFlow for creating a function library.

11:27 TensorFlow is like a dataflow graph engine, and it didn't have functions, so I added support for functions, partitioning functions over devices, but other teams used that work to go and train the big models.

11:38 I know it was a lot of compute, but a lot versus a lot, all caps versus just capital case.

11:46 Yeah, I'm sure there's a delta.

11:48 I just, I don't know how much.

11:49 Yeah.

11:50 Well, today they're all talking about restarting nuclear reactors and plugging them straight to the data center.

11:55 So it's a weird time.

11:57 Let's go back.

11:59 Maybe a little bit before then, 2012 or so, I believe is maybe the right timeframe.

12:05 And let's talk about notebooks.

12:07 And, you know, around then, Jupyter notebooks burst on the scene.

12:12 And I think they fundamentally changed the ecosystem of Python and basically scientific work in general, right?

12:22 By changing Python, I mean, there are so many people in Python who are here because they got pulled into a notebook.

12:28 They wrote a little bit of code.

12:30 They got incredible results.

12:32 They were never, ever a programmer, but now they're the maintainer of an open source project or something like that.

12:36 You know what I mean?

12:38 They're just like, oh, this is kind of neat.

12:40 Oh, I'll create a biology library or whatever, and then all of a sudden, you know, they're deep in it. And so Jupyter, I think, was really the start of that. Maybe there were some precursors that I'm not familiar with.

12:54 Yeah, Jupyter was essentially the start of it, especially for Python. Like, I think

12:58 the idea of computational notebooks has been around, but, yeah, the IPython REPL, I guess the IPython project with the IPython REPL, I think was early 2000s. And Jupyter, I think you're right, like 2012-ish, burst onto the scene with putting the IPython REPL into a web browser with multiple REPL cells, and all of a sudden you could do more than what you could do in the console, and it also made it a lot more accessible.

13:25 Yeah.

13:26 Going back to that sixth cell you entered on your REPL and editing it, that is not a way to draw beginners in.

13:35 No, definitely not.

13:37 Up arrow key six times, no.

13:38 Yeah, 2012, coincidentally, is when I started college as an undergrad.

13:44 So I had been using, I kind of grew up, as long as I used Python, I think I probably was using Jupyter Notebooks.

13:52 And I even started using Google Colab before it was made public, because I was interning at Google, and I think the year I started working at Google full-time is when they made it public to the world.

14:03 So, yeah, notebooks have sort of shaped the way that I think and interact with code quite a bit.

14:09 Yeah, absolutely.

14:11 So maybe you could give us some perspective.

14:14 It sounds like you were there, like, right at the right time.

14:16 You know, what was the goal and sort of the job of Jupyter Notebooks and those kinds of things then?

14:23 And how has that changed now?

14:25 And maybe how does it still solve that problem or not solve it so much?

14:29 Yeah, I think, so stepping back.

14:32 So you mentioned like how many people who might not have traditionally, you know, worked with code sort of came into it because of Jupyter Notebooks.

14:41 And I think that's like really, I think you're onto something there.

14:43 I think that's correct.

14:44 And I think the special thing about Notebooks is that they like mix code and visuals and like narrative text in like this interactive programming environment.

14:55 And that interactivity in particular, I think is really important for anyone who works with data.

15:01 It's where you got to like first like, you know, run some queries against your data to see the general shape of it, you know, run some transformations to see like, you know, basically like explore your data, like hold it in your hands.

15:14 Right. And like, you know, REPLs before and then Jupyter Notebooks in particular lets you do that in a way that you just can't easily do in a script because it holds the variables in memory.

15:23 And so I think the role that it played was that it enabled data scientists or computational scientists, biologists, astrophysicists, all these different types of people who work with data.

15:34 It let them rapidly experiment with code and models and importantly, get some kind of like artifact out of it.

15:45 And in particular, this was like a static HTML type artifact, right, where you have some text documenting what experiments you're running.

15:53 you have Python code, and then you have like Matplotlib plots and other artifacts.

15:59 And I think for the sciences in particular early on, that was super important and transformative.

16:07 And we were talking about other computational notebook environments.

16:10 And it's interesting to mention this because things like this existed sort of before, like Mathematica's workbooks, I think they

16:17 called them.

16:17 I might have done that wrong.

16:19 But I think just like the open source nature of Python and the accessibility of it just like made it way easier to get started.

16:26 And also the fact that it was like browser based, right, so that like you can easily share like these, the HTML artifacts that come out, I think was really transformative.

16:37 And insofar as like what they're used for today, they're used for a lot.

16:41 It's kind of remarkable how much they're used.

16:44 Like I remember seeing a statistic for Google Colab alone, which is just one particular hosted version of a Jupyter notebook.

16:53 And it was two years ago, and they said something about like having 10 million monthly active users.

16:58 And so the number of people who use Jupyter on a monthly basis is surely larger than that.

17:04 Oh, yeah.

17:05 And they're used for the sciences, but they're also used for like things where you want, like the traditional rigor and reproducibility of software engineering.

17:14 So they're increasingly used for like data pipelines and like ETL jobs.

17:19 And like, so anyone who's in Databricks, for example, runs like, they call them workflows, Databricks workflows, through essentially Jupyter Notebooks.

17:29 They're used for training machine learning models.

17:31 Like I've trained many models in a Jupyter Notebook.

17:35 I've developed algorithms in Jupyter Notebooks.

17:37 I've produced scientific figures that go straight into my paper from a Jupyter notebook.

17:42 And the reason people do this is because, again, the interactivity and the visuals that come out is very liberating compared to using a script.

17:51 I think there's also a developing understanding that happens from them.

17:56 Because when you're writing just a straight program, you're like, okay, this step, this step, this step, this step, and then we get the answer.

18:02 Whereas notebooks, it's like step one, step two.

18:05 Let me look at it.

18:06 oh, maybe that's different.

18:07 Let me go back and change.

18:08 It's more iterative from an exploratory perspective.

18:12 Yeah, I think that's exactly right.

18:15 Because you have that, when you're writing a software system, you kind of know the state of the system.

18:20 You know what you want to change it to.

18:22 But when you're working with data, there's that unknown, what will my algorithm do to my data?

18:26 You just have to run it to find out.

18:27 Yeah, absolutely.

18:28 And graphs and tables and iterative stuff like that is really nice.

18:33 So why create something instead of Jupyter?

18:37 Why is Jupyter not enough for the world?

18:40 Yeah.

18:41 I think there's a...

18:42 So just, you know, you're the creator of Marimo, along with the rest of the team and so on.

18:48 But yeah, it's like, why create this thing when Jupyter exists?

18:53 Yeah.

18:53 So I think there's quite a few reasons.

18:58 So Jupyter notebooks are like, I guess, It's more like Jupyter Notebooks powered by the IPython kernel, just to put a finger on it.

19:07 Right, because it could be different.

19:08 It could be like a C++ kernel or .NET kernel or whatever.

19:12 Yeah, exactly.

19:13 But this particular form of notebooks where you get a page and then in the front end shows you a bunch of cells that you execute one at a time imperatively, even though you have a sequence of cells, it still is essentially a REPL.

19:27 And like it's the onus falls on the developer, the scientist, whoever's using it to like run each cell on their own and like maintain the state of their kernel.

19:38 So like you run a cell, say you have three cells, you know, you decide to go rerun the second cell.

19:43 You have to remember to rerun whatever cell depends on it.

19:47 Maybe it's the third cell.

19:48 Maybe it's the first cell because you wrote things out of order.

19:50 And so you can easily get into a state with Jupyter Notebooks where your code on the page doesn't match the outputs that were

19:57 created.

19:58 Yeah. So if you were to like basically go to the menu option, say rerun all cells, you would get different answers.

20:03 Exactly. And this is like it's actually been studied and like it happens a kind of a shockingly large amount of times that like.

20:11 So there's one study in 2019 by Pimentel, I'm going to probably pronounce his name wrong, but by I think four professors.

20:20 And they studied a bunch of notebooks on GitHub.

20:22 And they found that only like a quarter of the notebooks that were on GitHub, they were able to run at all.

20:28 And then like either 4% of those or 4% of all of them, only 4% of them like when they ran recreated the same results that were serialized in the notebook.

20:38 Meaning like maybe people ran cells out of order when they originally created the notebook and then committed it.

20:45 Or maybe they didn't like create a requirements.txt that faithfully captured the package environment they used.

20:53 So there's like this recognition that Jupyter Notebooks suffer from a reproducibility crisis, which is rather unfortunate for a tool that is used for science and data science and data engineering, like things where reproducibility is paramount.

21:07 And so that was one issue.

21:08 Reproducibility was like one main thing that sort of we wanted to solve for with Marimo notebooks.

21:13 And the other thing I think that we alluded to earlier is that Jupyter notebooks are stored as like a JSON file where like the outputs, like the plots, et cetera, are serialized as these binary strings.

21:25 And like the, you know, the one downside of that is that you can't really use these things like you can use like traditional software, at least not without jumping through extra hoops.

21:33 So like you make a small change to your Jupyter notebook, you get a gigantic git diff because, you know, the binary representation of some object changed by a large amount.

21:43 You want to reuse the Python code in that Jupyter notebook, then you have to run it through like Jupytext or some other thing to strip it out.

21:51 And then so you oftentimes you get this like situation where people just like end up copy pasting a bunch of things across a bunch of notebooks and it quickly gets really messy.

22:00 And that was another thing we wanted to solve for, making notebooks like Git-friendly and interoperable with other Python tooling like Git, pytest, etc.
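The point about diffs can be sketched with the standard library alone. The example below compares a one-character code change stored two ways: as a simplified JSON document with a base64-encoded output embedded, and as plain source text. The JSON layout is a stand-in and much simpler than the real .ipynb schema; `as_json_notebook`, `diff_size`, and the fake image bytes are hypothetical.

```python
import base64
import difflib
import json

def as_json_notebook(code, output_bytes):
    """A simplified stand-in for a JSON notebook with an embedded binary output."""
    doc = {
        "cells": [
            {
                "source": code,
                "outputs": [base64.b64encode(output_bytes).decode("ascii")],
            }
        ]
    }
    return json.dumps(doc, indent=1).splitlines()

def diff_size(before, after):
    """Total characters on the added and removed lines of a unified diff."""
    return sum(
        len(line)
        for line in difflib.unified_diff(before, after, lineterm="")
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    )

code_v1 = "scale = 1\nplot(scale)"
code_v2 = "scale = 2\nplot(scale)"
image_v1 = bytes(range(256)) * 8   # pretend this is a rendered plot
image_v2 = image_v1[::-1]          # the plot changes when the code changes

json_diff = diff_size(
    as_json_notebook(code_v1, image_v1), as_json_notebook(code_v2, image_v2)
)
text_diff = diff_size(code_v1.splitlines(), code_v2.splitlines())
print(json_diff, text_diff)
```

The plain-text diff covers just the one edited line, while the JSON diff also carries the entire re-encoded output, which is the kind of noise that makes notebook changes hard to review in Git.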

22:10 Yeah, there's some things that will run them kind of function module-like, but yeah, it's not really the intended use case, is it?

22:18 Yeah, it's not, but people want to, which is, I think, like the, it's funny because like the intended use case, I think, of like traditional notebooks like Jupyter was like interactive REPL, explore data, produce like simple narrative text.

22:33 But then like they just got used for so much more than that in situations where reproducibility, reusability is like really important.

22:41 And I think that that's what we're trying to solve for.

22:45 This portion of Talk Python To Me is brought to you by Worth Recruiting.

22:49 Are you tired of applying for jobs and never hearing back?

22:53 Have you been getting the runaround or having trouble making it past the AI resume screeners that act as the new gatekeepers for your next level Python job?

23:01 You should reach out to Worth Recruiting.

23:04 Worth Recruiting specializes in placing senior level Python developers and data scientists.

23:09 They work directly with hiring managers at startups, helping them grow their software engineering and data science teams.

23:16 With Worth, it's not just connecting you with the company. It will guide you through the interview process and help make sure you're ready with their detailed preparation approach. They can even coach you on salary negotiations and other important decision-making processes. So if you're ready to see what new opportunities are out there for you, reach out to Worth Recruiting. Let them be your partner and specialist to find the right Python developer or data scientist position for you. Fill out their short contact form at talkpython.fm/worth. It only takes a minute. That's talkpython.fm/worth.

23:50 The link is in your podcast player's show notes. Thank you to Worth Recruiting for supporting the show. I was just wondering out loud, you know, that study. I know it was not your study, so I'm not asking you for answers. Just, what do you think? If it's that low of a percentage of reproducibility because people fell down on their like rigorous software engineering, for example, like no requirements file or hard-coded paths instead of relative paths or whatever.

24:16 If it's that number for notebooks, I wonder about just plain Python files that are also meant to act to solve like science problems and stuff that just didn't happen to be in notebooks.

24:27 I wonder if they're any better.

24:28 Yeah, it's a good question.

24:31 I think they will be better.

24:33 I can just give an anecdote to why I think they would be better.

24:36 And so one example, and this happened to me many times, But like one particular example is like when I was working on my PhD thesis, which was about vector embeddings, it was me and a couple of co-authors.

24:48 And, you know, both of us did our, like created the examples for this embedding algorithm in like Jupyter Notebooks.

24:57 And these notebooks produced a bunch of plots, et cetera.

25:00 And so at the end of the, once the thesis was written, I was putting up all our code on GitHub.

25:04 And so I asked one of my co-authors, like, hey, like, can you share your notebooks with me?

25:09 And this co-author is amazing.

25:10 He's really smart, but not necessarily trained as a software engineer.

25:14 And he shares his Jupyter notebook with me.

25:16 And I run it.

25:17 It doesn't work at all.

25:19 And the reason it doesn't work at all is that there's like multiple cells.

25:22 And there's like this branching path where like it's clear that like he ran like, you know, you see the execution order serialized in the notebook.

25:29 And it's not like one, two, three, four.

25:31 It's like one, two, seven, four.

25:33 It's like these cells were ran in this path dependent way.

25:36 And then also it's like there's like three cells in the middle.

25:39 He ran just one of them.

25:40 I'm not exactly sure which one.

25:42 And like, whereas if you had a Python script, there's only one way to, I mean, sure, you can have

25:46 data files.

25:46 That's a good point.

25:47 You do not really get a choice.

25:49 It runs top to bottom.

25:51 Exactly.

25:51 Depending on the data.

25:52 Okay.

25:53 So yeah, that is something that's really, it's just very counter to the concept of reproducibility is that you

26:00 can run them in any order and there's not, you know, I feel like Jupyter Notebooks should almost have like a red bar across, like an out-of-order bar,

26:10 warning across the top if it's not top to bottom.

26:14 It doesn't have to be 1, 2, 3.

26:15 It could be 1, 5, 6, 9.

26:17 But it should be a monotonically increasing function as you do the numbers top

26:22 to bottom.

26:24 Otherwise, it should be like a big warning or like a weird, like some kind of indicator, like, hey, you're in the danger zone.

26:29 You know what I mean?
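The "out of order" warning described here can be sketched in a few lines. This is a minimal illustration, not a Jupyter feature: it assumes the standard `.ipynb` JSON layout, where each code cell carries an `execution_count`, and the function name `out_of_order_cells` is hypothetical.

```python
import json


def out_of_order_cells(notebook_json: str) -> list[int]:
    """Return indices of code cells whose execution count does not
    increase relative to the executed cells above them."""
    notebook = json.loads(notebook_json)
    offenders = []
    highest_seen = 0
    for index, cell in enumerate(notebook.get("cells", [])):
        if cell.get("cell_type") != "code":
            continue
        count = cell.get("execution_count")
        if count is None:  # cell was never executed; nothing to compare
            continue
        if count <= highest_seen:
            offenders.append(index)
        else:
            highest_seen = count
    return offenders


def make_notebook(counts):
    """Build a tiny notebook document with the given execution counts."""
    cells = [
        {"cell_type": "code", "execution_count": c, "source": "pass"}
        for c in counts
    ]
    return json.dumps({"cells": cells})


# The "one, two, seven, four" case from the anecdote above.
print(out_of_order_cells(make_notebook([1, 2, 7, 4])))  # [3]
# Gaps are fine as long as the counts keep increasing.
print(out_of_order_cells(make_notebook([1, 5, 6, 9])))  # []
```

A check like this only catches ordering problems that are visible in the saved counts. It cannot detect hidden state left behind by deleted cells.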

26:30 Yeah, and there's actually another thing that actually has happened to me way more often than I should care to admit while working with Jupyter Notebooks, is that it's not just the order

26:40 you run the cells. It's also like, what happens if you delete a cell? Because when you delete a cell, so say a cell declares a variable x and you deleted that cell, you're like, I don't want x anymore. But you may forget that just by deleting the cell, you're not removing the variables from memory. And so like x still exists. And so then you're running other cells that depend on x, everything's fine. But then you come back and you run your notebook and everything's broken and you have no idea.

27:07 The first time this happened to me, it took me forever to debug.

27:11 I can imagine that is so rough.

27:13 Yeah.

27:13 Later on, you realize, okay, this is a pattern that happens.

27:17 But it's like, yeah, so that's another thing that we also wanted to solve for in Marimo notebooks.

27:23 Awesome.

27:23 All right.

27:23 Well, tell us about Marimo.

27:26 Okay.

27:27 So let's see.

27:28 So Marimo is, it's an open source notebook for Python.

27:31 The main thing that's different between Marimo and Jupyter is that Marimo is reactive.

27:38 And what that means is that unlike a Jupyter notebook where you can run cells in any arbitrary order you like, in Marimo, when you run one cell, it knows what other cells need to be run in order to make sure that your code and outputs are synchronized.

27:55 So it's kind of like Excel, right?

27:56 So you have two cells.

27:58 One declares X.

27:59 Another one references X.

28:00 you run the cell that declares X or defines X, all other cells that reference X will automatically run or they'll be marked as stale.

28:09 You can configure it if you're scared of automatic execution.

28:12 But the point is that it manages your variables for you so that you don't have to worry about outputs becoming decohered from your code.

28:22 And similarly, if you delete a cell, the variable will be scrubbed from memory and then cells that are dependent on it will either be marked as stale with a big warning or automatically run and invalidated.

28:34 So that reactivity provides some amount of reproducibility.

28:38 And then it also, some of the key features, and then we can dive into each of them, is, in addition to reactivity,

28:44 Marimo notebooks are stored as pure Python files, which makes them easy to version with Git.

28:50 And then we've also made it easy to run Marimo notebooks as Python scripts and then share them as interactive web apps with little UI elements.

28:58 Yeah, I'm excited to talk about that.

29:00 Some cool stuff there.

29:01 Okay, so when you create a variable, if you say like x equals one, it's not just a PyLong pointer in memory, right?

29:09 It's something that kind of looks at the read and write, the get and the set of it, and creates like a relationship where it says if you were to push a change into it, it says, okay, I have been changed.

29:21 Who else is interested in reading from me?

29:23 And it can sort of work that out, right?

29:25 How does that work?

29:25 Actually, it's not really implemented in that way.

29:29 Okay.

29:30 So, okay.

29:31 So let's see.

29:32 Yeah, this is interesting to dive into because there's like two ways that you can like get at reactivity.

29:37 Like so in Marimo, what we actually do is we do static analysis.

29:41 So we look for definitions and references of global variables.

29:46 And then so we just make a graph out of that.

29:48 So for every cell, we look at the, I guess, the loads in the ASTs and then like

29:53 the assignments.

29:57 And so we can see statically who declares what and who reads what, where the "who" is a cell.

30:04 And so that makes it like performant and also predictable of like how your notebook is going to run.
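The static-analysis idea described here can be illustrated with Python's standard `ast` module. This is a simplified sketch, not Marimo's actual implementation: it treats every name as a global, ignores function scopes, imports, and builtins, and the helper names (`defs_and_refs`, `build_graph`, `cells_to_rerun`) are hypothetical.

```python
import ast


def defs_and_refs(source):
    """Return (defined, referenced) names for one cell's source code."""
    defined, referenced = set(), set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Store):
                defined.add(node.id)
            elif isinstance(node.ctx, ast.Load):
                referenced.add(node.id)
    # Names a cell defines itself are not treated as inputs (a simplification).
    return defined, referenced - defined


def build_graph(cells):
    """Map each cell to the cells that read a name it defines."""
    info = {name: defs_and_refs(src) for name, src in cells.items()}
    graph = {name: set() for name in cells}
    for producer, (defined, _) in info.items():
        for consumer, (_, referenced) in info.items():
            if producer != consumer and defined & referenced:
                graph[producer].add(consumer)
    return graph


def cells_to_rerun(graph, changed):
    """Collect every cell downstream of the changed cell."""
    stale, stack = set(), [changed]
    while stack:
        for child in graph[stack.pop()]:
            if child not in stale:
                stale.add(child)
                stack.append(child)
    return stale


cells = {
    "a": "x = 1",
    "b": "y = x + 1",
    "c": "z = y * 2",
    "d": "w = 10",
}
graph = build_graph(cells)
print(sorted(cells_to_rerun(graph, "a")))  # ['b', 'c']
```

Because the graph is computed from source text alone, the set of cells that will re-run is known before any code executes, which is the predictability argument made above.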

30:10 An alternative, I think what you were getting at, was like runtime tracing.

30:14 More like a JavaScript front end sort of deal, like a view binding model binding type thing or something like that.

30:20 Yeah. So yeah, we, everything we do is static, is done with static analysis.

30:27 There was another project called ipyflow, which was like a reactive kernel for Jupyter. It's still around. They took like the runtime tracing approach of like checking on every assignment, like, okay,

30:39 okay, there's a read, and now where is that object in memory, and then running cells that depend on it. I think in practice, you know, I was talking to Stephen Macke, the author of that extension, and then also like based on some work that I saw at Google on TensorFlow, that's really hard to get 100% right.

30:58 It's basically impossible to get 100% right.

31:00 So you will miss some references and definitions.

31:06 And so there's this weird usability cliff as a user where when you run a cell, you're not sure what else will run.

31:12 Whereas if you do it based on static analysis, you can give guarantees on what will run and what won't.

31:18 Yeah, I guess the static analysis is a good choice because there's only so many cells.

31:23 You don't have to track it down to like, well, within the DOM and all the JavaScript objects, we're linking all these together and they can do whatever they want from all these different angles.

31:32 It's just like, when this cell runs, what other cells do we need to think about?

31:35 So it's kind of like, what does this create?

31:38 What does it change?

31:39 And then push that along, right?

31:40 So it's a little more constrained.

31:42 Exactly, yeah.

31:43 So basically, yeah, that's exactly right.

31:45 And so it's a data flow graph where the data flowing on the edges is the variables across cells.

31:51 Yeah, I don't know.

31:52 I think I've been thinking about dataflow graphs for a long time, ever since TensorFlow, and during my PhD too, when I worked on CVXPY.

31:58 So it's just a thing that I enjoy thinking about and working on.

32:02 Yeah, well, what about your experience working on TensorFlow and at Google and at Stanford and stuff that sort of influenced this project?

32:11 Yeah, a couple of things.

32:14 So definitely a big thing that influenced this project was working as a scientist at Stanford, where like I and my colleagues used Jupyter Notebooks on almost a daily basis because we wanted to see our data while we worked on it.

32:29 And so really valued the iterative programming environment, really didn't like all the bugs that we kept on running into, which are

32:37 like kind of our fault, right?

32:38 Because of like, oh, we forget to run a cell, et cetera.

32:42 Didn't like that it was not easy to reuse the code in a notebook in just other Python modules.

32:49 Didn't like that I couldn't share my artifacts in a meaningful way with my PhD advisor who can't run Python notebooks.

32:55 I couldn't make a little app to showcase my research project.

32:59 It's so interesting how PhD advisors and just professors in general, how they're either embracing or their inability to embrace or their prejudices for or against the technology so influence science, the people in the research teams, everything.

33:15 It's like, well, we'd like to use this, but the principal author really doesn't like it, doesn't want to run it, so we're not using that.

33:21 Or, you know, just these little edge cases.

33:24 Like, I was made to learn Fortran in college.

33:26 I'm still sour about it.

33:29 That's funny.

33:30 Yeah, no, it's true.

33:31 Yeah, it does have a big effect.

33:33 And then at Google, I guess the things that influenced, so we used notebooks too there.

33:39 Since I was doing the systems engineering work at Google, I personally didn't use them too much, although I did use like Google Colab for like training, like creating training courses and stuff for, for like other engineers and for demos.

33:50 But I guess the part of Google that influenced it was just like thinking about data flow graphs for a

33:55 year. And you know, there was like a couple of parallel projects, like one that was using runtime tracing for like figuring out how to make a DAG. And it was just, I don't know, kind of traumatic, just because you miss things and the user experience, it kind of falls over.

34:13 And so I guess the part from there just made me not want to use runtime tracing,

34:19 made me embrace static analysis for this.

34:22 Yeah.

34:22 Yeah, it makes a lot of sense.

34:24 All right.

34:25 Well, let's talk some features here.

34:27 What are the, I guess probably the premier feature is the reactivity, in which case it doesn't matter which cell you run, they're never going to get out of date, right?

34:37 Yeah, that's correct.

34:38 So the reactivity, in other words, the data flow graph behind it is the premier feature.

34:45 And it lets you reason about your code locally, right, which is really nice.

34:48 Like you can just look at your one cell.

34:49 You don't really have to worry about, you know, you know what variables it reads and what it defines.

34:54 And you don't have to worry about, oh, but what cells do I need to run before this?

34:58 So not only does it like make sure your code and outputs are in sync, it also like makes it really like fun and useful.

35:08 to really rapidly experiment with ideas.

35:11 As long as your cells aren't too expensive, if you enable automatic execution, you can change a parameter, run a particular cell, and it'll run only what's required to update subsequent outputs.

35:27 And then another consequence of reactivity that I think is really neat and our users really like is it makes it far easier to use interactive UI widgets than it is in like a Jupyter notebook.

35:40 And the way that this works is that, so Marimo is like both a notebook and also a library.

35:47 So you can import Marimo as Mo into your notebook.

35:50 And when you do this, you get access to a bunch of things, including a bunch of different UI widgets, ranging from the simple ones like sliders, drop-down menus, to like cooler ones like interactive selectable charts and data frame transformers that automatically generate the Python code needed for your transformation.

36:12 And the way that reactivity comes into here is that because we know when you create a UI element, as long as you bind it to a global variable, just say my slider equals mo.ui.slider, then when you interact with the slider anywhere on the screen, well, we can just say, okay, that slider is bound to X.

36:31 We just need to run all other cells that depend on X.

36:33 all of a sudden you have really nice interactivity, interactive elements controlling your code execution without ever having to write a callback.

36:42 And so I think people find that really liberating too.

36:44 So you don't really have to hit backspace, change a character value for your variable, shift, enter, shift, enter, shift, enter.

36:52 Just change a number and everything automatically recalculates.

36:56 Yeah.

36:56 In this dependency flow, is it possible to have a cyclical graph?

37:02 Like if I did this in Jupyter, I could go down to the third cell and define X and then go back up and then use X.

37:09 If I run it in the right order, it won't know that there's a problem.

37:13 Imagine it's only possible to go one way, right?

37:16 You can't get into a weird cycle.

37:19 Yeah.

37:19 So we do give people escape hatches.

37:22 We ask them, please don't use it unless you really know what you're doing and you really want to do this.

37:27 Because in most cases we find, especially if your goal is to just work with data as a data scientist or data engineer, we feel you don't need cycles.

37:40 But if you're making an app, and I guess I should mention Marimo lets you run any notebook as, well, a notebook, but also from the command line you can serve your notebook as a web app, similar to Streamlit if you've seen that. In those cases, sometimes you do want state basically, right? Like cyclic references. So by default, Marimo actually checks for cycles. If you have a cycle across cells, it politely tells you, hey, that's not really cool in Marimo.

38:07 Please break your cycle.

38:08 Here's some suggestions how to do that.
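A cycle check over a cell graph can be sketched with the standard library's `graphlib`. This is only an illustration of the idea, not how Marimo implements it; the cell names and the `execution_order` helper are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(dependencies):
    """Return a valid run order for the cells, or raise if cells form a cycle.

    `dependencies` maps each cell name to the set of cells it reads from.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as error:
        # graphlib reports the offending cycle as the second exception argument.
        cycle = error.args[1]
        raise ValueError("cycle across cells: " + " -> ".join(cycle)) from error


acyclic = {"load": set(), "clean": {"load"}, "plot": {"clean"}}
print(execution_order(acyclic))  # ['load', 'clean', 'plot']

cyclic = {"a": {"b"}, "b": {"a"}}
try:
    execution_order(cyclic)
except ValueError as problem:
    print(problem)
```

Rejecting cycles up front is what lets a dataflow notebook pick a single well-defined execution order.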

38:11 But if you're really insistent, then you can go to our docs and learn about the state object that we have that does let you get into some runtime-based cell execution.

38:20 You can update a state object and, kind of in a React frontend-y way, it'll run dependents of that state.

38:26 Okay, interesting.

38:27 Yeah, I guess that makes sense for apps.

38:28 You definitely need some sort of global state there.

38:32 Yeah, I think we've seen that sometimes our users will reach for state and callbacks just because that's what they're used to.

38:41 And that's what they maybe were required to use in Jupyter, et cetera.

38:45 So we're trying to tell them, hey, in most cases, you actually don't need this.

38:49 Yeah, very cool.

38:50 So when you create a Jupyter notebook, you don't really create Python files.

38:56 you create the notebook files, and those notebook files are JSON with both embedded cell execution bits, the code, but also the answers that go below.

39:05 That's why if you go to GitHub and you look at a notebook, you can instantly see what could be super expensive computation, but pictures and stuff there because it's residual in the artifact, right?

39:16 Yeah.

39:17 If you guys do Python files, do you have a way to save that state in a sort of presentation style or something like that?

39:26 You know what I mean?

39:26 Is there like an associated state file?

39:29 Yeah, so that's a really good question.

39:31 And the answer is yes, but that is the biggest trade-off, I think, of our file format.

39:35 So like a surprising number of people actually have come to us and told us, I didn't expect this, but they tell us the reason we decided to try Marimo notebooks is because you say it is versionable with Git.

39:47 Like that's like, they're like, that's the one reason I, especially like people in software and industry.

39:52 And then they stay for all the other things.

39:55 So that's what the pure Python file format allows, in addition to modularity, running it as a script.

40:02 For seeing outputs, yeah, they're not saved in the Python file.

40:05 So we have a couple of ways to get around that.

40:09 So one, you can export any Marimo notebook to an IPython notebook file, actually.

40:14 So you can set up this, we have a feature, automatic snapshotting.

40:18 You can automatically snapshot your notebook to like a parallel .ipynb file that's stored in a subdirectory of your notebook folder.

40:28 And so you can push that up or share that if you like.

40:32 When working locally, though, we actually have this cool feature that my co-founder Miles recently built, which is that when you're working with your notebook, the outputs are actually saved.

40:45 The representation of the outputs is saved in this subdirectory, `__marimo__`.

40:51 So that the next time you open the notebook, it just picks up those outputs and then like loads them into the browser so that you can see where you left off.

41:01 So I see. So kind of like PyCache.

41:04 Yeah.

41:04 I would actually assume given the name, but I haven't actually looked into PyCache too much.

41:09 Well, I mean, just in the sense that it's saved like right there in your project using that directory.

41:14 Oh, yes, yes. You have a PyCache folder. Exactly.

41:16 Yeah, yeah, yeah. Exactly.

41:18 And we have one other feature that another contributor, his name is Dylan, has been developing, which I think is really cool, which is along those same lines, but based on Nix-style caching.

41:32 So it'll actually save the Python objects themselves using a variety of different protocols.

41:40 And because we have the DAG, he can actually guarantee consistency of the cache.

41:47 But that means not only are your outputs automatically loaded, also the variables are loaded, so you can literally pick up where you left off.

41:54 Yeah, it sounds like some sweet SQLite could be in action there instead of just flat files or whatever.

42:01 Yeah.

42:02 Yeah, very cool.

42:02 So you talked about when the reactivity fires, it can sometimes be expensive to recompute the cells.

42:10 Sometimes they're super simple.

42:12 Sometimes they're training a machine learning model for two days.

42:16 Yeah.

42:17 Are there caching mechanisms?

42:19 You know, in a Python script, we've got functools.lru_cache, and then there's other things that are kind of cool, in-process caches, like PyMocha is kind of like the functools stuff, but way more flexible and so on.

42:33 And you could put those onto functions that then would have really interesting caching characteristics, like, hey, if you're going to rerun it, but I've run it before with this other value, here's the same answer back, right?

42:43 Is there a way to set up that kind of caching or performance memoization type stuff?

42:50 Yeah, definitely.

42:51 And that same contributor, Dylan.

42:53 So he's implemented these and is continuing to build it out.

42:57 But we have, I guess, two options.

43:02 One is in-memory cache, which is basically the API is the same as functools.cache, but is designed to work in an iterative programming environment.

43:10 If you use functools.cache naively, if a cell defining a function reruns, your cache gets busted.

43:18 So mo.cache is a little smarter, and using the DAG can know whether or not it needs to bust the cache.

43:25 But to the user, it feels the same.
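The cache-busting problem with `functools.cache` in an iterative environment can be reproduced with plain Python. The sketch below simulates "re-running a cell" by calling `exec` on the same source twice; the names `CELL_SOURCE`, `slow_square`, and `calls` are hypothetical, and it shows only the problem, not how `mo.cache` solves it.

```python
from functools import cache

# Source of a notebook "cell" that defines a cached function.
CELL_SOURCE = '''
@cache
def slow_square(n):
    calls.append(n)  # record every real (non-cached) computation
    return n * n
'''

calls = []
namespace = {"cache": cache, "calls": calls}

# First run of the cell: the second call is served from the cache.
exec(CELL_SOURCE, namespace)
namespace["slow_square"](4)
namespace["slow_square"](4)
runs_before = len(calls)

# Re-running the cell rebinds slow_square to a brand-new function object
# whose cache starts empty, so the work is repeated.
exec(CELL_SOURCE, namespace)
namespace["slow_square"](4)
runs_after = len(calls)

print(runs_before, runs_after)  # 1 2
```

A notebook-aware cache has to key on something more stable than the function object, which is where knowledge of the cell graph helps.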

43:28 And then we also have a persistent cache, which is the one that I was alluding to when I mentioned you can automatically pick up where you left off by loading objects from disk.

43:37 And so you can put an entire cell in a context manager, with mo.persistent_cache.

43:44 It'll sort of do the right thing.

43:46 We've been talking about experimenting with letting users opt into global cell-wide caching, just automatically memoize cells or just have a UI element

43:55 to do something like that.

43:57 But we haven't quite done that yet.

43:59 Yeah, I mean, it sounds real tricky for Jupyter.

44:01 But because you all know the inputs and the outputs, It's almost as if you could sort of drive a hidden LRU cache equivalent at the cell level.

44:11 You know what I mean?

44:12 That's exactly right.

44:13 Yeah.

44:14 I think, yeah, because we know the inputs and outputs, that's kind of how everything flows from a project.

44:19 You can think about projects like if you turn a notebook into a data flow graph, what can you do?

44:24 And yeah, caching is, smart caching is definitely one of them.

44:27 Yeah.

44:27 I mean, I make it sound like, well, you know the inputs and outputs, so it's fine.

44:32 But, you know, you could have the same data frame, but you could have added a column to the data frame, and the LRU cache goes, is it the same pointer?

44:39 It is.

44:40 We're good to go.

44:40 It's like, well, yes.

44:41 However, it's not the same.

44:44 You know, you almost got to, like, hash it or do something funky.
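The "same pointer, different contents" pitfall can be shown with a small stand-in for a data frame. In this sketch the `Table` class and both counting functions are hypothetical. It relies on the fact that plain Python objects hash by identity, so `functools.lru_cache` cannot see in-place mutation, and it contrasts that with a cache keyed on a snapshot of the contents.

```python
from functools import lru_cache


class Table:
    """Tiny stand-in for a data frame: just a mutable list of column names."""

    def __init__(self, columns):
        self.columns = list(columns)


@lru_cache(maxsize=None)
def column_count(table):
    # Cached by the object's identity-based hash, not by its contents.
    return len(table.columns)


_content_cache = {}


def column_count_by_content(table):
    # Key on an immutable snapshot of the contents instead of identity.
    key = tuple(table.columns)
    if key not in _content_cache:
        _content_cache[key] = len(table.columns)
    return _content_cache[key]


table = Table(["a", "b"])
fresh = column_count(table)
table.columns.append("c")  # mutate in place: same object, new contents
stale = column_count(table)
correct = column_count_by_content(table)

print(fresh, stale, correct)  # 2 2 3
```

Content hashing fixes the stale answer here, but hashing a large object on every call has its own cost, which is part of why automatic caching is described as hard.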

44:47 Yeah, it's not perfect.

44:48 Automatic sounds hard.

44:49 No.

44:50 Yeah, yeah, exactly.

44:51 That's why we haven't quite enabled it.

44:54 Yeah, it is hard.

44:56 Yeah.

44:56 Side effects, too, network requests, all these things.

44:59 All right.

45:00 I have three more topics I want to cover before we run out of time here.

45:04 We may cover more, but three are required.

45:07 First one, because this is the one I'm going to forget most likely.

45:10 You were at Google, and then by way of going through a PhD, you now are not at Google creating this project, which is open source on GitHub.

45:20 And I don't see a pricing page at the top, but I do see it for enterprises.

45:24 Like, how is this sustaining itself?

45:27 Like, what's the business model?

45:29 So when we first started right after my PhD, so that was early 2022, we were actually lucky enough to get funding from a national lab associated with Stanford.

45:42 So the Stanford Linear Accelerator.

45:44 So I was talking with some scientists there and we were talking about, I knew I wanted to make this thing.

45:50 And they were like, what are you up to?

45:51 And I was like, oh, well, I want to make this like notebook thing that like, you know, fixes all notebooks, inspired by Pluto.jl.

45:57 And they were like, that's awesome.

45:59 We're scientists.

45:59 We use notebooks every day and we're getting a little tired of reproducibility issues.

46:03 We want to make apps.

46:04 So they're like, we'll fund you to do this.

46:06 And so we...

46:07 Awesome.

46:07 So they kind of wrote you into one of their larger grants, something like that.

46:11 We got like a subcontract that like was covered by one of their grants.

46:14 Yeah.

46:15 So that was enough for me and my co-founder, Miles, to work on it for two years full time alone.

46:21 And like, I think it really let us polish the product and develop it.

46:27 And then mid last year, in July, one of our users, like one of our probably biggest power users actually, is Anthony Goldbloom, who is the founder and former CEO of Kaggle. So

46:40 really into data science. So he's been one of our biggest power users. And at a certain point he last year became, I guess, passionate enough about our project that he reached out to us and asked us, hey, like, can this venture fund I'm part of, can we invest?

46:58 And so that was July of last year.

47:00 And so we raised money from them as well as from a bunch of prominent angel investors like Jeff Dean from Google, Clem from Hugging Face, Lukas Biewald

47:09 from Weights & Biases, and others.

47:12 So now our team is funded primarily through the venture funding.

47:18 Right now our business model is build open source software based on our venture

47:22 funding.

47:23 We do have plans for commercialization, but we're just not at a point where it makes sense to work on that right now.

47:28 But what I can promise is that Marimo will always be open source, Apache 2.0 licensed.

47:33 We never plan to sell the notebook itself, but instead plan to work on complementary infrastructure.

47:39 Yeah, I can already think of two good ones.

47:43 Cool.

47:44 Yeah.

47:45 Awesome.

47:45 Okay. The reason I ask is, you know, people always just want to know either

47:49 how, what is kind of the success story of open source, but sustainable, you know, as a full-time thing, or if they're buying into it, how likely is that going to stay open source or like what's the catch, you know, that kind of stuff?

48:06 Yeah, no, it's a good question.

48:08 I mean, honestly, I think when we raised money, it actually assuaged some of our users because they were like, oh, Miles and Akshay are really cool.

48:18 They're building this really cool stuff, but how are they paying rent?

48:22 And so when we told them, yeah, we raised a few million dollars from X Ventures, they were like, okay, that's great.

48:28 You deserve it.

48:29 Please continue building.

48:31 Nice.

48:32 Yeah.

48:33 Yeah, that's awesome.

48:34 All right.

48:35 Let's see, which one are we going to talk about next?

48:37 Not this one yet.

48:39 We'll talk about this because there's a nice comment from Amir out in the audience.

48:42 I love the new AI feature in Marimo.

48:44 So you have an AI capability.

48:47 And then the other thing I want to talk about is, so we save some time for it, is publishing or running your Reactive Notebook as an app.

48:55 But let's talk AI first because we started the show that way.

48:59 I want to sort of round it out that way, you know?

49:02 So we have like a few different ways that AI is, I guess, integrated into the project.

49:08 So one thing that we're trying really hard to do is like build like a modern editor, like designed specifically for working with data.

49:15 And I think these days modern means one of the requirements is you have AI stuff built in.

49:20 So you can like generate code with AI.

49:22 You can like even tell the, you can like tag like data frames and like tables that you have in memory and like give them as context, give their schemas as context to your assistant.

49:32 in like a cursor-like way.

49:35 We're also like experimenting with a new service right now, like marimo.app/ai, where you can go type a little prompt like, generate me a notebook that plots a 3D quadratic surface with Matplotlib, because I always forget how to do that.

49:51 And then it'll do its best to in one shot create that notebook for you and then you can play around with it and then you can either download it locally or share it out.

50:04 Yeah, very cool.

50:05 I'll put a link to the online AI feature that people can play with.

50:09 It looks really, really nice and it worked super quick when I asked it to go.

50:12 Now, one thing I want to talk about really quick now that I'm looking at this is you've got the code, but it's below the presentation of the result of the code.

50:22 Yeah.

50:23 So that is a stylistic choice, which is configurable because people told us, Many of them told us we don't like this.

50:30 Please just put the output below.

50:31 But other people like it.

50:33 Okay, you love it.

50:34 Okay, yeah.

50:34 And so this is...

50:35 Okay, well, what is the point?

50:36 Is the point for me to see the code or the answer?

50:39 The point is to see the graph and the tables and if I care, I'll look at the code.

50:44 So the way that I...

50:46 So I think I mentioned Pluto.jl really briefly.

50:49 So Pluto.jl is one of our biggest inspirations.

50:51 It's a Julia project, a reactive notebook.

50:54 Now, a lot of the ideas that I think are great about Marimo came, honestly, very straight from Pluto. And one thing that Fons, the creator of Pluto, likes to say is, the code of a cell is a caption for its output. And that's the way you think about it, and when you think about it that way, it makes a lot of sense. But I think Pluto and then Observable also both have outputs on top. I don't know, I feel like it's one of those heuristics: you look at a notebook, output on top, it's a reactive notebook; otherwise it's imperative.

51:23 I gotcha, gotcha. Yeah, I can see why you might not like it, but I like it, so I think

51:30 it's pretty cool. Yeah, and then this marimo.app/ai, once you create one of these, you have a shareable link that you can hand off to other people, right? Yeah,

51:40 so you can open a new tab, which I think will, so actually all of this, interestingly enough, is running in our WebAssembly playground.

51:48 Yeah, so there's that URL right there, which you can just share. You can also do like the little, there's a hamburger menu, which you can click on to get a permalink that's shorter.

51:58 But yeah, honestly, we just built this generate with AI feature like a little over a week ago.

52:05 And just curious to see what people use it for.

52:08 There's a lot more we could invest here.

52:10 I mean, you know, everyone talks about agents.

52:12 You can think about, oh, data science agent.

52:14 But for now, we're just trying the one-shot thing and seeing how it lands with folks.

52:19 Yeah, sure.

52:20 Let's start with that.

52:20 Well, it looks really great.

52:21 And the UI is super nice.

52:23 So well done.

52:25 Appreciate it.

52:25 I'll pass that on to Miles.

52:26 He built this as a weeknight project.

52:30 Sometimes you just get inspired.

52:32 Just like, you know what?

52:33 I'm doing it.

52:34 I'm just taking two days off the regular work and I'm just doing this.

52:38 You know what I mean?

52:38 Yeah.

52:39 Yeah, definitely.

52:40 Yeah.

52:41 All right.

52:41 So the final thing I want to talk about, which you kind of hinted at a little bit there with the WebAssembly stuff, is I want to run my app.

52:50 So tell us about, as I'm fumbling around to find a place to show you.

52:55 Anyway, tell us about running the app.

52:58 Yeah, so there's a few different ways.

53:02 The sort of traditional way, if you have a client-server architecture, so you have your notebook

53:09 file, notebook.py.

53:12 It has some UI elements, et cetera.

53:14 It has some code.

53:15 You get an app, but just by default, you hide all the code.

53:18 Now you have text, outputs, UI elements.

53:20 You can think of it as an app.

53:21 So if you type marimo run notebook.py at the command line, it'll start a web server that's serving your notebook in a read-only session that you can connect to, et cetera.
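As a rough illustration of the kind of notebook.py described here (some UI elements, some code, outputs), the script below writes out a small example file. This is a sketch, not an example taken from the episode: the cell contents, the slider range, and the helper write_notebook are assumptions made for illustration. The names inside the string (marimo.App, @app.cell, mo.ui.slider, mo.md) are Marimo APIs; the script itself uses only the standard library, so it runs without Marimo installed, and Marimo is needed only to open the generated file.

```python
from pathlib import Path

# Source of a tiny Marimo notebook. The cell contents are illustrative
# assumptions; only the overall file layout follows Marimo's notebook format.
NOTEBOOK_SOURCE = '''\
import marimo

app = marimo.App()


@app.cell
def _():
    import marimo as mo
    return (mo,)


@app.cell
def _(mo):
    # A UI element: it stays interactive when the notebook runs as an app.
    slider = mo.ui.slider(1, 10, value=3, label="n")
    slider
    return (slider,)


@app.cell
def _(mo, slider):
    # Reads `slider`, so it re-runs whenever the slider moves.
    mo.md(f"n squared is {slider.value ** 2}")
    return


if __name__ == "__main__":
    app.run()
'''


def write_notebook(path="notebook.py"):
    """Write the example notebook to `path` and return the path."""
    path = Path(path)
    path.write_text(NOTEBOOK_SOURCE, encoding="utf-8")
    return path


if __name__ == "__main__":
    target = write_notebook()
    print(f"Wrote {target}; serve it read-only with: marimo run {target}")
```

With Marimo installed, running marimo run on the generated file should show the slider and the text output with the code hidden; viewers can move the slider but cannot edit the cells.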

53:36 Can you do the widgets?

53:40 Like if it's read-only, can I slide the widgets to see it do stuff?

53:44 You know what I mean?

53:44 Yeah, yeah, yeah.

53:45 So you can slide the widgets, see it do stuff.

53:47 You just can't change the underlying Python code.

53:49 Like you can't like, got it.

53:51 But yes, that's true.

53:53 That is real.

53:53 Exec rm -rf.

53:55 Okay.

53:55 Let's go.

53:56 Yeah.

53:56 Yeah.

53:57 So that is not allowed.

53:58 And so that's the traditional way: client-server.

54:01 But last year, I think it was last year,

54:05 we added support for WebAssembly through the Pyodide project, which is a port of CPython to Wasm/Emscripten.

54:14 And so now you can actually take any Marimo notebook.

54:17 And as long as you satisfy some constraints, which are documented on our website, you can export it as static HTML and some assets and just throw it up on GitHub Pages or serve it wherever you like.

54:28 And that's also what our online playground is powered by.

54:31 And we found this to be just like a really easy way to share notebooks, easy for folks in industry, easy for educators, and also just incredibly satisfying.

54:39 In our docs, we have tons of little Marimo notebooks iframed into various API pages, etc., that just let you actually interact with the code as opposed to just reading it statically.
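The static export mentioned above produces plain files, so any static file server can host them. At the time of writing, Marimo's documentation describes a command of the form marimo export html-wasm notebook.py -o output_dir for producing the export; treat the exact flags as something to check against the current docs. The sketch below previews such a folder locally using only Python's standard library. The function name make_static_server and the folder name output_dir are hypothetical and chosen for illustration.

```python
import functools
import http.server


def make_static_server(directory, port=8000):
    """Return an HTTP server that serves the files in `directory` on localhost.

    Pass port=0 to let the operating system pick a free port.
    """
    # Bind the handler to the export folder instead of the current directory.
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=str(directory)
    )
    return http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)
```

Calling make_static_server("output_dir").serve_forever() would then serve the exported notebook at http://127.0.0.1:8000/ until interrupted. Because nothing runs on the server beyond handing out files, the same folder can be uploaded unchanged to a static host such as GitHub Pages.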

54:52 Yeah, that's nice.

54:53 That's super cool.

54:54 So it's pretty low effort, it sounds like.

54:57 And it's a lot of work to run a server and maintain it, especially if you're going to put it up there so other people can interact with it.

55:04 And you've got to worry about abuse and all that kind of stuff.

55:07 If you can ship it as a static website, people are just abusing themselves if they mess with it anyway.

55:12 Exactly.

55:13 I have to give huge props to the Pyodide maintainers.

55:19 They are amazing, really responsive, and just building this labor of love.

55:24 They are really pushing the needle on the accessibility of Python.

55:32 Yeah, that's awesome.

55:33 Have you done anything with PyScript?

55:35 I haven't done anything with it.

55:37 I know of them.

55:37 So they also use Pyodide underneath the hood.

55:40 Yeah, they let you pick between Pyodide for more data science-y stuff and

55:43 MicroPython for more faster stuff.

55:47 I am pretty not educated about MicroPython.

55:51 I read about it, but I'd be curious about your take on choosing one versus the other.

55:56 Yeah, well, I think it's brilliant if you want a front-end framework equivalent.

56:01 If you want PyVue or PyReact or whatever, you don't need all the extras.

56:07 You kind of need the Python language and a little bit of extras and the ability to call APIs, right?

56:12 And so MicroPython is a super cut-down version that's built to run on like little tiny chips and self-contained systems on a chip type things.

56:22 So it's just way lighter.

56:23 It's 100K versus 10 megs or something like that.

56:26 And if it solves the problem, right, if it gives you enough Python, I guess is the way to think of it, then it's a really cool option for front-end things.

56:35 I don't know that it works for you guys, right?

56:37 Because you want as much compatibility with like Matplotlib and Seaborn and all these things.

56:43 But if your goal is like, I want to replace the JavaScript language with the Python language, and I know I'm in a browser, so I'm willing to make concessions, I think it's a pretty good option.

56:52 Yeah, that makes sense.

56:53 Yeah, you're right.

56:53 We want max compatibility.

56:55 Like, we have one demo where you can load sklearn, take the PCA of these images of numerical digits, put it in an Altair plot, select them, and get it back as a data frame.

57:05 That's all running in the browser.

57:06 And, like, it's magical.

57:08 It's on your home screen.

57:09 People can watch the little embeddings explore.

57:12 It's super cool.

57:13 It's very cool.

57:14 It's honestly magical what Pyodide has been able to enable.

57:18 Yeah.

57:19 I imagine it's just getting started.

57:21 I think so.

57:22 Yeah.

57:22 Yeah.

57:23 They're constantly shipping.

57:24 They just added support for Wasm GC.

57:27 So performance is increasing.

57:29 Exposed a bug in WebKit

57:31 along the way.

57:33 Wow, okay.

57:34 That's good.

57:35 Yeah.

57:36 Cool.

57:37 All right, well, we're getting to the end of our time here.

57:40 So, you know, people are interested in this.

57:42 They might want to try it for themselves.

57:44 Maybe they're part of a science team or a data science team at a company.

57:49 What do you tell them if they're interested in Marimo and they want to check it out?

57:53 The easiest way, I think, is to just start running it locally.

57:57 And so our sort of source of truth is our GitHub repo.

58:01 So github.com/marimo-team/marimo, or if it's easier to remember, our homepage is marimo.io, which links to our GitHub.

58:09 But then you can install from pip or uv or whatever your favorite package manager is and just sort of go to town.

58:15 If you really just don't have the ability to do that for whatever reason, you can go to marimo.new, which will create a blank Marimo notebook powered by WebAssembly in your browser.

58:25 It has links to various Marimo tutorials and example notebooks.

58:31 We didn't even get to talk about the uv integration, which maybe is for another time, but that's another way that Marimo makes notebooks reproducible down to the packages.

58:39 Oh, yeah, okay.

58:41 uv is fantastic.

58:42 I'm a huge fan of uv and Charlie Marsh and team.

58:45 Yeah, but GitHub, marimo.io, and docs.marimo.io is what I would recommend.

58:51 Thank you so much for being on the show.

58:52 Congratulations on the project.

58:55 It's really come a long way, it sounds like, so it looks great.

58:58 Thanks, Michael. I appreciate it.

58:59 It was a lot of fun to chat.

59:00 Yeah, you bet. Bye.

59:02 Bye.

59:03 This has been another episode of Talk Python To Me.

59:06 Thank you to our sponsors.

59:08 Be sure to check out what they're offering.

59:09 It really helps support the show.

59:11 This episode is sponsored by Worth Recruiting.

59:14 Worth Recruiting specializes in placing senior-level Python developers and data scientists.

59:19 Let Worth help you find your next Python opportunity at talkpython.fm/worth.

59:25 Want to level up your Python?

59:26 We have one of the largest catalogs of Python video courses over at Talk Python.

59:30 Our content ranges from true beginners to deeply advanced topics like memory and async.

59:35 And best of all, there's not a subscription in sight.

59:38 Check it out for yourself at training.talkpython.fm.

59:41 Be sure to subscribe to the show, open your favorite podcast app, and search for Python.

59:46 We should be right at the top.

59:47 You can also find the iTunes feed at /itunes, the Google Play feed at /play, and the direct RSS feed at /rss on talkpython.fm.

59:57 We're live streaming most of our recordings these days.

59:59 If you want to be part of the show and have your comments featured on the air, be sure to subscribe to our YouTube channel at talkpython.fm/youtube.

01:00:08 This is your host, Michael Kennedy.

01:00:09 Thanks so much for listening.

01:00:10 I really appreciate it.

01:00:12 Now get out there and write some Python code.