Outlier Detection with Python

Discount code for Outlier Detection in Python book: talkpython45 (45% off, no expiration date).

Episode Deep Dive

Guest Introduction and Background

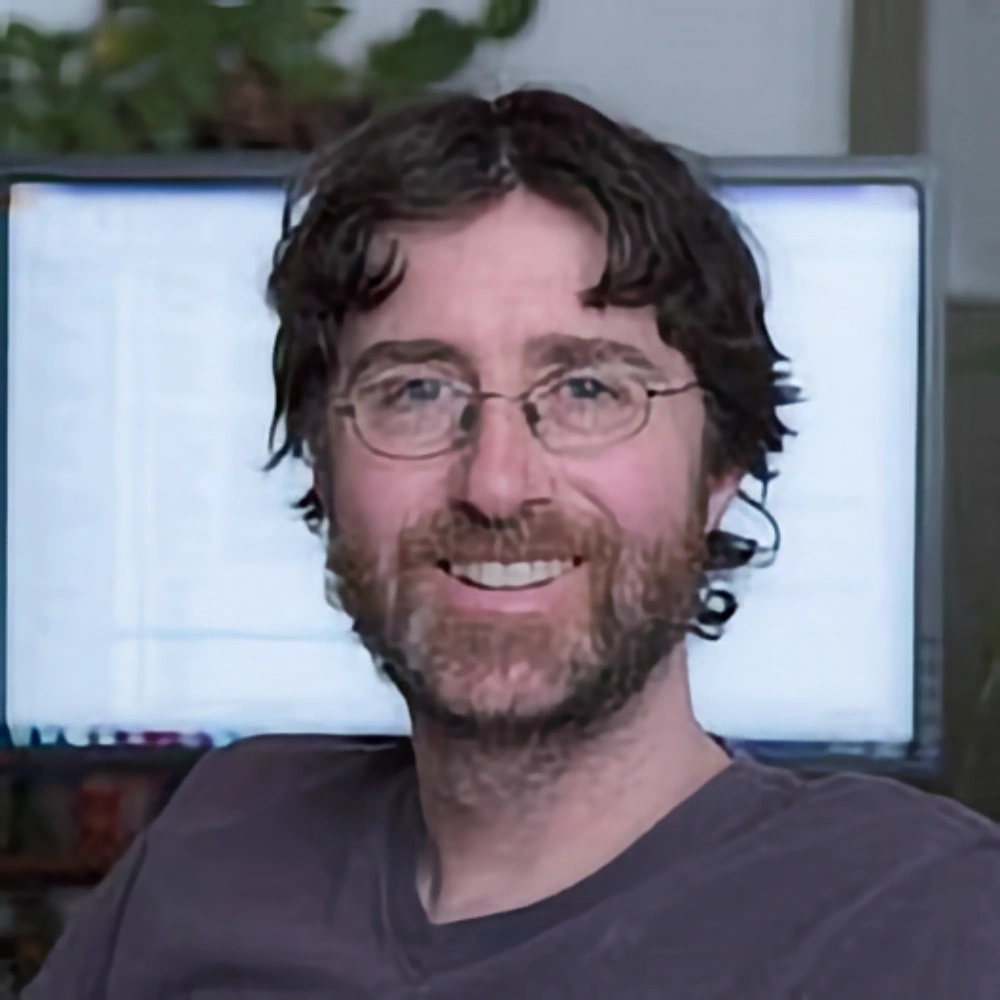

Brett Kennedy is a seasoned data scientist, software developer, and the author of Outlier Detection in Python from Manning Publications. He has over 30 years of experience in software, including extensive work on data-intensive projects in financial auditing, scientific research, and beyond. Brett earned his master's degree at the University of Toronto, focusing on artificial intelligence during a period sometimes known as an AI "winter." He has since devoted a significant portion of his career to outlier detection and data analytics, contributing original research and practical solutions for enterprise and scientific applications.

What to Know If You're New to Python

If you're newer to Python and want to follow along with the outlier detection tools and libraries mentioned, here are a few tips and resources:

- Make sure you're comfortable with virtual environments (venv or conda) and basic package management (e.g., pip install scikit-learn).

- Familiarize yourself with tabular data handling in Python via pandas (simple data exploration, .head(), .describe(), etc.).

- Try out an IDE or editor like PyCharm or VS Code for an easier debugging and code-completion experience.

Key Points and Takeaways

- Why Outlier Detection Matters

Outlier detection is about discovering data points or patterns that deviate significantly from the rest. These anomalies can indicate anything from errors or fraud to genuinely novel phenomena. In fields such as finance, security, and research, quickly identifying outliers can save companies money and help drive scientific discoveries. Python's ecosystem provides an especially rich set of tools to find and interpret those anomalies.


- Real-World Applications

Brett emphasized how outlier detection is widely used in industries like credit card fraud detection, financial auditing, industrial process monitoring, and astronomy. Fraud detection benefits from flagging unusual transactional patterns, while scientific fields use anomalies to identify new phenomena, such as unique celestial events in astronomy. In manufacturing, outliers in sensor data can catch machine failures before major problems occur.

- Example contexts:

- Credit card transactions

- Auditing and compliance data

- IoT sensor readings in factories

- Astronomy data pipelines


- Astronomy & Large-Scale Data

Astronomers deal with massive datasets, sometimes petabytes per night. Manually finding unusual occurrences (e.g., pulsars, supernovae) is nearly impossible. Automated outlier detection steps in to pinpoint the rare but significant events for scientists to investigate further.

- Mentioned use case:

- Pulsars discovered by scanning large amounts of signal data


- Core Python Libraries for Outlier Detection

Many outlier detection solutions start with well-known libraries like scikit-learn (LOF, Isolation Forest, Elliptic Envelope) and PyOD. While scikit-learn has a few built-in anomaly detection methods, PyOD wraps a huge variety (including deep-learning-based models) in a consistent API.

- Tools:

- Local Outlier Factor (LOF)

- Isolation Forest

- Elliptic Envelope

- Autoencoders and GANs in PyOD
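As a minimal sketch of the three scikit-learn detectors named above, the example below runs each one on synthetic 2-D data with three planted outliers. The data, `contamination=0.01` (an assumed guess at the outlier fraction), and `n_neighbors=20` are illustrative choices, not settings from the episode. PyOD's detectors follow a similar fit-then-score pattern but are not shown here.

```python
import numpy as np
from sklearn.covariance import EllipticEnvelope
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor

# Synthetic data: 300 "normal" 2-D points plus 3 planted outliers (rows 300-302).
rng = np.random.default_rng(0)
normal = rng.normal(loc=0.0, scale=1.0, size=(300, 2))
planted = np.array([[8.0, 8.0], [-7.0, 9.0], [9.0, -8.0]])
X = np.vstack([normal, planted])

# contamination is an assumed guess at the fraction of outliers in X.
detectors = {
    "IsolationForest": IsolationForest(contamination=0.01, random_state=0),
    "LocalOutlierFactor": LocalOutlierFactor(n_neighbors=20, contamination=0.01),
    "EllipticEnvelope": EllipticEnvelope(contamination=0.01, random_state=0),
}

flags = {}
for name, detector in detectors.items():
    labels = detector.fit_predict(X)  # -1 = outlier, 1 = inlier
    flags[name] = set(np.flatnonzero(labels == -1).tolist())
    print(f"{name}: flagged rows {sorted(flags[name])}")
```

All three follow scikit-learn's convention that `fit_predict` returns `-1` for outliers and `1` for inliers. For ranking rows, the continuous scores (`score_samples`, or `negative_outlier_factor_` for LOF) are usually more useful than the binary labels.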


- Creating Ensembles of Outlier Detectors

Because each detector tends to excel at finding certain types of anomalies, combining multiple detectors (e.g., LOF + Isolation Forest + KNN-based methods) can yield more robust results. Many practitioners run these detectors in parallel or sequence and then look for data points flagged by multiple methods as truly suspicious or worthy of deeper inspection.

- Tip:

- Using short-circuit logic in sequence can reduce computation for very large datasets.
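The two ideas in this section can be sketched with scikit-learn as follows, under assumed settings. `ensemble_vote` runs Isolation Forest, LOF, and a k-nearest-neighbor distance score side by side, converts each to ranks so they are comparable, and flags rows that at least two detectors place in their top 2%. `cascade` is a sequential, short-circuit variant: a cheap robust z-score test screens every new row, and only the rows it flags go on to a costlier neighbor search. Both function names, all thresholds, and the synthetic data are hypothetical choices for illustration.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor, NearestNeighbors


def to_rank(outlierness):
    """Map raw scores (higher = more unusual) to ranks in [0, 1] so detectors are comparable."""
    ranks = np.argsort(np.argsort(outlierness))
    return ranks / (len(outlierness) - 1)


def ensemble_vote(X, top_fraction=0.02, min_votes=2, k=10):
    """Parallel ensemble: each detector votes for the rows in its top `top_fraction`."""
    iforest = -IsolationForest(random_state=0).fit(X).score_samples(X)
    lof = -LocalOutlierFactor(n_neighbors=k).fit(X).negative_outlier_factor_
    knn_dist, _ = NearestNeighbors(n_neighbors=k).fit(X).kneighbors()  # excludes each point itself
    knn = knn_dist[:, -1]  # distance to the k-th nearest neighbor
    votes = sum((to_rank(s) >= 1.0 - top_fraction).astype(int) for s in (iforest, lof, knn))
    return np.flatnonzero(votes >= min_votes)


def cascade(X_train, X_new, z_threshold=3.5, k=5):
    """Sequential ensemble: a costlier neighbor search runs only on rows a cheap test flags."""
    # Train step, done once on reference data.
    median = np.median(X_train, axis=0)
    scale = 1.4826 * np.median(np.abs(X_train - median), axis=0) + 1e-12
    knn = NearestNeighbors(n_neighbors=k).fit(X_train)
    train_dist, _ = knn.kneighbors()  # excludes each training point itself
    cutoff = np.percentile(train_dist[:, -1], 99)

    # Stage 1 (cheap, vectorized): robust z-score on every feature of every new row.
    robust_z = np.abs(X_new - median) / scale
    candidates = np.flatnonzero(robust_z.max(axis=1) > z_threshold)
    if candidates.size == 0:
        return candidates  # short-circuit: nothing reaches stage 2
    # Stage 2 (costlier): neighbor search only for the surviving candidates.
    new_dist, _ = knn.kneighbors(X_new[candidates])
    return candidates[new_dist[:, -1] > cutoff]


rng = np.random.default_rng(42)
planted = np.array([[8.0, 8.0], [-7.0, 9.0], [9.0, -8.0]])
X = np.vstack([rng.normal(size=(300, 2)), planted])  # planted rows are 300-302
print("ensemble flags:", ensemble_vote(X))

X_train = rng.normal(size=(1000, 2))
X_new = np.vstack([rng.normal(size=(200, 2)), planted])  # planted rows are 200-202
print("cascade flags:", cascade(X_train, X_new))
```

Ranking before voting sidesteps the fact that each detector's raw scores live on a different scale. In the cascade, a row is flagged only if both stages flag it, which saves work on large data but also means a pattern the cheap first stage misses never reaches the later stage.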


- Time Series Anomaly Detection with Prophet

Time series anomalies often appear when actual values deviate significantly from a forecasted trend. Brett mentioned Prophet (originally from Facebook) as a popular tool for forecasting with built-in support for seasonality and trends. By comparing actual time series data to the predicted values, significant deviations become potential anomalies.

- Use cases:

- Server load spikes

- Unusual weather patterns

- Surprising changes in demand or user engagement
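Prophet needs a separate install, so the sketch below uses a deliberately simple stand-in forecast to show the same residual idea with the standard library: each day is predicted as the median of the same weekday over the previous three weeks (a seasonal-median forecast, not Prophet's model), and days whose residual sits more than 4 robust standard deviations from typical are flagged. The series, the planted spike and dip, and the thresholds are made up for illustration.

```python
import math
import random
import statistics


def seasonal_median_forecast(values, t, season, n_seasons):
    """Forecast values[t] as the median of the same phase in the previous n_seasons cycles."""
    return statistics.median(values[t - season * j] for j in range(1, n_seasons + 1))


def forecast_anomalies(values, season=7, n_seasons=3, k=4.0):
    """Return indices whose actual value deviates from the forecast by more than
    k robust (MAD-based) standard deviations of the forecast residuals."""
    start = season * n_seasons
    residuals = {
        t: values[t] - seasonal_median_forecast(values, t, season, n_seasons)
        for t in range(start, len(values))
    }
    center = statistics.median(residuals.values())
    mad = statistics.median(abs(r - center) for r in residuals.values())
    scale = 1.4826 * mad or 1e-12  # guard against a perfectly flat series
    return [t for t, r in residuals.items() if abs(r - center) / scale > k]


# Synthetic daily series: weekly seasonality, slow trend, Gaussian noise,
# plus two planted anomalies (a spike at t=50 and a dip at t=80).
rng = random.Random(0)
series = [100 + 10 * math.sin(2 * math.pi * t / 7) + 0.1 * t + rng.gauss(0, 1)
          for t in range(120)]
series[50] += 40
series[80] -= 40
print(forecast_anomalies(series))
```

With the `prophet` package, the forecast step would instead be `Prophet().fit(df)` on a DataFrame with `ds` and `y` columns, and a row could be flagged when its actual `y` falls outside the `yhat_lower`/`yhat_upper` interval returned by `predict`. Taking the median over several past seasons, rather than only last week's value, keeps one anomaly from contaminating the next few forecasts.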


- Deep Learning Approaches

Python's deep learning ecosystem (PyTorch, TensorFlow, Keras) has methods for outlier detection, such as autoencoders or GANs that learn "normal" patterns and identify points that deviate. PyOD even includes some basic deep learning outlier detection implementations. However, these can be more resource-intensive and might require larger datasets to be effective.

- Example approach:

- Autoencoder reconstructs typical data well, but reconstruction error spikes for anomalies.
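Training a neural autoencoder needs PyTorch, TensorFlow, or PyOD, so the sketch below swaps in PCA, which learns the same subspace as a linear autoencoder trained with squared error, to show the reconstruction-error idea with NumPy alone. Rows are encoded into one dimension, decoded back, and scored by how much is lost. The function names and data are hypothetical, and a real deep autoencoder can learn nonlinear patterns that PCA cannot.

```python
import numpy as np


def fit_linear_autoencoder(X, n_components):
    """PCA via SVD. A linear autoencoder trained with squared error learns the
    same subspace, so PCA stands in here for a real (nonlinear) autoencoder."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]


def reconstruction_error(X, mean, components):
    codes = (X - mean) @ components.T            # encode into n_components dimensions
    reconstructed = codes @ components + mean    # decode back to the original space
    return np.square(X - reconstructed).sum(axis=1)


# "Normal" rows lie close to a line in 3-D: feature2 is about 2 * feature1,
# and feature3 is about -feature1.
rng = np.random.default_rng(0)
t = rng.normal(size=(500, 1))
X_train = t * np.array([1.0, 2.0, -1.0]) + rng.normal(scale=0.05, size=(500, 3))

mean, components = fit_linear_autoencoder(X_train, n_components=1)
X_new = np.array([
    [0.5, 1.0, -0.5],   # follows the learned pattern
    [1.0, -2.0, 1.0],   # each value is ordinary, but the combination breaks the pattern
])
print(reconstruction_error(X_new, mean, components))
```

The second row is the interesting one: every individual value is within the normal range, so a per-column check would pass it, but it breaks the relationship between columns, which is what the reconstruction error picks up.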


- Handling Large Datasets: Parallelization

For extremely large data (millions of rows or more), outlier detection can be accelerated through parallel processing with frameworks like Dask (mentioned briefly in the conversation). Techniques such as sampling, incremental learning, compiled code (for example, Cython, which scikit-learn uses), and GPU acceleration through specialized libraries may also be necessary for speed and memory management.

- Tools:

- Dask (dask.org)

- HPC or GPU-based libraries in PyOD for deep learning
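The structure behind scaling out (fit once on a sample, then score independent chunks in parallel) can be sketched with the standard library alone. The "model" here is just a per-feature median and MAD, i.e. a robust z-score, and `ThreadPoolExecutor` stands in for Dask; the function names, sample size, and chunk size are assumptions. Because pure-Python scoring is CPU-bound, threads mainly show the shape of the computation; real speed-ups would come from processes, Dask, or vectorized libraries.

```python
import random
import statistics
from concurrent.futures import ThreadPoolExecutor
from functools import partial


def robust_scale(values, center):
    """MAD-based estimate of the standard deviation (guarded against zero)."""
    return 1.4826 * statistics.median(abs(v - center) for v in values) or 1e-12


def fit_on_sample(rows, sample_size=5_000, seed=0):
    """Train step on a random sample: per-feature median and robust scale."""
    sample = random.Random(seed).sample(rows, min(sample_size, len(rows)))
    columns = list(zip(*sample))
    medians = [statistics.median(col) for col in columns]
    scales = [robust_scale(col, m) for col, m in zip(columns, medians)]
    return medians, scales


def score_chunk(chunk, model):
    """Inference on one chunk: each row's largest per-feature robust z-score."""
    medians, scales = model
    return [max(abs(v - m) / s for v, m, s in zip(row, medians, scales)) for row in chunk]


def score_in_parallel(rows, model, chunk_size=10_000, workers=4):
    """Split rows into independent chunks and score them concurrently, preserving order."""
    chunks = [rows[i:i + chunk_size] for i in range(0, len(rows), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        chunk_scores = pool.map(partial(score_chunk, model=model), chunks)
        return [score for scores in chunk_scores for score in scores]


rng = random.Random(1)
rows = [(rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(60_000)]
rows[12_345] = (10.0, 0.0, 0.0)  # planted outlier

model = fit_on_sample(rows)
scores = score_in_parallel(rows, model)
print("most anomalous row:", max(range(len(scores)), key=scores.__getitem__))
```

Because each chunk only needs the fitted model and its own rows, the same split works whether the workers are threads, processes, or machines in a cluster.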


- Interpretability and Domain Knowledge

A unique challenge in outlier detection is interpreting why something is unusual. While black-box algorithms can flag anomalies, domain expertise is essential to confirm whether the outlier is an error, a genuine new insight, or an expected extreme. Clear explanation techniques (e.g., partial dependence for tree-based methods) help build trust in the final results.

- Tips:

- Combine algorithmic scores with human-in-the-loop analysis

- Track features most responsible for anomaly scores
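One simple way to track which features drive an anomaly is to measure, for a flagged row, how far each feature sits from the reference data in robust z units. The helper below is a hypothetical illustration, not a method named in the episode, and it looks at one feature at a time; explaining the score of a multivariate detector such as Isolation Forest would need a dedicated attribution method. The transaction data is synthetic.

```python
import random
import statistics


def explain_row(row, reference_rows, feature_names):
    """Rank features by how far `row` sits from the reference data, in robust z units:
    |value - median| / (1.4826 * MAD). Larger means that feature is more unusual."""
    contributions = {}
    for j, name in enumerate(feature_names):
        column = [r[j] for r in reference_rows]
        median = statistics.median(column)
        mad = statistics.median(abs(v - median) for v in column)
        contributions[name] = abs(row[j] - median) / (1.4826 * mad or 1e-12)
    return sorted(contributions.items(), key=lambda item: item[1], reverse=True)


# Hypothetical card transactions: (amount, hour_of_day, n_items).
rng = random.Random(1)
reference = [(rng.gauss(50, 10), rng.gauss(13, 2), rng.gauss(3, 1)) for _ in range(1000)]
flagged = (52.0, 3.0, 3.0)  # a typical amount and basket, but at 3 a.m.
for feature, z in explain_row(flagged, reference, ["amount", "hour_of_day", "n_items"]):
    print(f"{feature}: {z:.1f}")
```

A reviewer seeing `hour_of_day` at the top of this list knows to ask about the timing of the purchase rather than its size, which is the kind of human-in-the-loop context the tips above call for.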


- A Practical Workflow

Brett's recommended approach starts by training or calibrating on known, mostly "clean" data, then scoring new (or the same) data for anomalies. Iteratively refine the model: remove obvious errors and retrain so the definition of "normal" becomes more precise. Always use domain context to interpret flagged anomalies.

- Steps:

- Initial data exploration and cleaning

- Outlier detection pass (e.g., PyOD)

- Manual review of top anomalies

- Retrain and finalize
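A minimal sketch of the iterative loop above, assuming scikit-learn's `IsolationForest` as the detector (a PyOD model could fill the same role): each round fits on the rows still kept, surfaces the most anomalous rows for review, and removes them before refitting. `review_loop`, `top_n`, and treating every surfaced row as a confirmed error are simplifications for illustration; in practice a domain expert decides which rows are errors and which are genuine findings.

```python
import numpy as np
from sklearn.ensemble import IsolationForest


def review_loop(X, n_rounds=2, top_n=5, seed=0):
    """Fit, surface the top_n most anomalous rows, drop them, and refit.
    Simplification: every surfaced row is treated as a confirmed error;
    in practice a domain expert makes that call row by row."""
    keep = np.arange(len(X))
    surfaced = []
    for _ in range(n_rounds):
        model = IsolationForest(random_state=seed).fit(X[keep])
        scores = -model.score_samples(X[keep])            # higher = more anomalous
        worst = keep[np.argsort(scores)[::-1][:top_n]]    # map back to original row ids
        surfaced.append(worst.tolist())                   # hand these to a reviewer
        keep = np.setdiff1d(keep, worst)                  # "cleaner" data for the next fit
    final_model = IsolationForest(random_state=seed).fit(X[keep])
    return final_model, surfaced


rng = np.random.default_rng(7)
planted = np.array([[8, 8], [-8, 8], [8, -8], [-8, -8], [0, 10]], dtype=float)
X = np.vstack([rng.normal(size=(500, 2)), planted])  # planted rows are 500-504
final_model, surfaced = review_loop(X)
print("rows surfaced for review, per round:", surfaced)
```

The returned `final_model` corresponds to the train step; scoring new data with `final_model.score_samples(new_rows)` or `final_model.predict(new_rows)` is the separate inference step.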

Interesting Quotes and Stories

"Python is probably, arguably, the richest set of tools for outlier detection of any language. A very, very large percent is done in Python." - Brett Kennedy

"Astronomy is a good example where we collect such phenomenal volumes of data that if you want to find anything interesting in there, the most effective way is just run an anomaly detection." - Brett Kennedy

"One of the steps you might often perform is say, well, do outlier detection and see, you know, what are the strangest rows in my data … you can do this for image data or audio data or, you know, data of really any type." - Brett Kennedy

Key Definitions and Terms

- Outlier (Anomaly): A data point that deviates significantly from the majority distribution.

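- Isolation Forest: A tree-based anomaly detection method that randomly partitions feature space; outliers tend to be isolated in fewer splits than typical points, so a short average path length means a high anomaly score.

- Local Outlier Factor (LOF): An algorithm that compares each point's local density with the densities of its nearest neighbors; points sitting in noticeably sparser regions than their neighbors receive high outlier scores.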

- Autoencoders: Neural networks that learn to reconstruct data. High reconstruction error suggests an outlier.

- Prophet: A forecasting library developed by Facebook, used here for time series anomaly detection by comparing actual vs. predicted data.

Learning Resources

Here are a few resources discussed or referenced during the episode for deeper study or hands-on practice:

- PyOD: Python's comprehensive outlier detection library for tabular data.

- scikit-learn: Machine learning toolkit with built-in outlier/novelty detection tools.

- Prophet (Facebook): Forecasting library that can be used to detect time series anomalies by comparing actual values against its forecasts.

- Python for Absolute Beginners: If you need a foundation in Python before diving into outlier detection and advanced libraries.

Overall Takeaway

Outlier detection in Python opens the door to discovering hidden and potentially critical insights in your data. As Brett highlights, Python's libraries, particularly scikit-learn, PyOD, and even deep learning frameworks, offer both powerful and flexible methods to isolate anomalies in everything from industrial sensor data to astronomical surveys. The ultimate success, however, depends on pairing these algorithmic capabilities with strong domain knowledge and interpretability, ensuring you truly understand why certain data points stand out and what real-world actions they might demand.

Links from the show

PyOD: github.com

Prophet: github.com

Outlier Detection in Python Book: manning.com

Episode #497 deep-dive: talkpython.fm/497

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript


00:00 Have you ever wondered why certain data points stand out so dramatically?

00:03 They might hold the key to everything from fraud detection to groundbreaking discoveries.

00:08 This week on Talk Python To Me, we dive into the world of outlier detection with Brett Kennedy.

00:13 You'll learn how outliers can signal errors, highlight novel insights, or even reveal hidden patterns lurking in the data you thought you understood.

00:21 We'll explore fresh research developments, practical use cases, and how outlier detection compares to other core data science tasks like prediction and clustering.

00:31 If you're ready to spot those game-changing anomalies in your own projects, stay tuned.

00:36 This is "Talk Python To Me," episode 497, recorded January 21st, 2025.

00:43 - Are you ready for your host, please?

00:46 - You're listening to Michael Kennedy on "Talk Python To Me." Live from Portland, Oregon, and this segment was made with Python.

00:55 - Welcome to "Talk Python To Me," a weekly podcast on Python. This is your host, Michael Kennedy. Follow me on Mastodon where I'm @mkennedy and follow the podcast using @talkpython, both accounts over at fosstodon.org.

01:09 And keep up with the show and listen to over nine years of episodes at talkpython.fm. If you want to be part of our live episodes, you can find the live streams over on YouTube.

01:19 Subscribe to our YouTube channel over at talkpython.fm/youtube and get notified about upcoming shows. This episode is brought to you by Posit Connect from the makers of Shiny.

01:30 Publish, share and deploy all of your data projects that you're creating using Python.

01:34 Streamlit, Dash, Shiny, Bokeh, FastAPI, Flask, Quarto, Reports, Dashboards and APIs.

01:41 Posit Connect supports all of them.

01:43 Try Posit Connect for free by going to talkpython.fm/posit, P-O-S-I-T.

01:49 Brett, welcome to Talk Python To Me.

01:51 - Well, thank you very much for having me.

01:53 - It's awesome to have you here.

01:54 A pair of Kennedys on the show.

01:56 - First time ever, that's pretty awesome.

01:58 - Yep, well it should be much more common.

02:00 - Indeed, well thank you for coming on the show.

02:03 I'm really interested to talk some data science and mathy type things with Python.

02:09 Python is being used for data science and analysis and just general science and mathematics so much these days.

02:16 I wonder if people have maybe a perception out there of thinking, well Python is kind of like, yeah people use that for the web and stuff and true, but these days it's data science, machine learning, those things.

02:27 Other languages are used a fair amount.

02:29 Statistical analysis are still used a lot.

02:32 And for high performance, machine learning, people will look at other languages, C++ is used a lot and like Mojo and things are coming, coming through now that are, but still a very, very large percent is done in Python.

02:47 Like all the work we're seeing with large language models, for example, like that's pretty much all, all Python.

02:53 And a lot of standard, traditional machine learning, a very large portion of it, is done in Python.

03:00 Yeah.

03:01 Yeah.

03:01 And outlier detection as well.

03:03 Yeah.

03:03 I think Python is probably, I mean, arguably, but I think probably the richest set of tools for outlier detection of any language, again, R has quite a good suite and, and you get good, good tools in Java and in some other places.

03:18 But Python is probably where you find the strongest, just the sheer number of tools. And it also covers a lot of the deep learning techniques, and some kinds of approaches to outlier detection aren't really as well covered in other languages.

03:32 - Well, we're gonna dive into it.

03:34 You've written a whole book on it and a bunch of cool techniques you pull down.

03:37 We're talking about some libraries and things like that, as well as some other examples.

03:42 But before we get into that, let's just hear a quick introduction about who you are and what you do these days.

03:47 - Yes, well, my name is Brett Kennedy.

03:49 So I've worked in software in one capacity or another for about 30 years now.

03:53 I did my master's degree quite a long time ago at the University of Toronto.

03:58 And that was actually, well, around '98, '99.

04:02 So during one of the times when AI was going through a bit of a winter.

04:05 Yeah, that was a very, very good experience going there.

04:08 And yeah, I've worked for one company doing software that was used for financial auditing.

04:14 So and that's where I really started to get into data science.

04:17 And that was about 15 years ago.

04:19 Data science was a little bit less mainstream then than it is today. I mean, there were certainly a lot of other people doing it, but it wasn't the gigantic industry that it is now.

04:29 >> Yeah, there were definitely some inflection points, places where it's really taken some significant steps up in the Python data science and ML space. Probably one is the pandas/NumPy era, around 2006, something around 2012. I don't know really what caused it. And certainly machine learning and AI are a whole other spike of growth there.

04:50 So you were doing, it was kind of before mainstream data science, right?

04:54 It was starting to become mainstream, but, like, when working with text, for example, there were spaCy and word2vec and GloVe and things like that.

05:05 But this is long before GPT, but scikit-learn existed and yeah.

05:11 So we're actually using a lot of the same tools that you would, you would use now, but they're a little bit earlier versions of them.

05:17 They're, yeah, sure.

05:18 Yeah.

05:19 So things have, have, have progressed certainly a lot.

05:22 Though some things just, just work and have, have been, been fairly stable.

05:27 In that space.

05:28 I imagine a lot of the work that's been done on the Faster CPython initiative is probably paying dividends.

05:34 Yeah.

05:35 I mean, one of the things about Python is it, it is, does have a reputation as being not, not the fastest language you can use, you know, old versions of Python were certainly slower.

05:44 It's, it is getting faster.

05:46 Yeah.

05:46 C++ is probably about as performant as you can get, but, you know, a lot of scikit-learn, for example, uses Cython, so you get C code performance characteristics.

05:56 Yeah.

05:57 Yeah.

05:57 It also goes back to what are you measuring?

05:59 What is your metric for fast?

06:01 Yeah.

06:01 What are your expectations?

06:03 And yeah, I could make a really fast thing in two weeks in C++ or I could make a somewhat slower thing, but get you the answer in half a day in Python.

06:11 It might run five times longer, but you'll have the answer today.

06:14 You know what I mean?

06:14 like that kind of thing.

06:15 There's really a trade off.

06:16 And I think, I mean, it's kind of the, well, it's, it's really the approach we've taken in data science to really privilege the data scientist's time over the computation time.

06:27 Yeah.

06:27 Yeah.

06:27 And when it really does become a problem, there's a lot of stuff written in C or Rust or whatever.

06:32 Polars, pandas, et cetera.

06:33 Yeah.

06:34 That, you know, Polars is a big breakthrough too.

06:36 Yeah.

06:36 Are you a fan of Polars?

06:37 Have you been using it?

06:38 I use it when, when there's a need.

06:39 I mean, I'm still a big fan of pandas and I still use it quite a lot.

06:43 But when there's a performance issue, yeah, Polars is one of the things I'll turn to.

06:47 >> Yeah, excellent. So we're going to talk about outlier detection.

06:51 I think maybe a good way to introduce this topic is to maybe understand the problem space just a tiny bit.

06:59 Because sometimes you might think, well, if the mean is really weird or the median is really weird or something like that, then we might have an outlier and we can go check it out.

07:08 But it's really a lot more subtle than that.

07:10 And I want to maybe go back to a guest I had, was that six months ago or something like that, Stefanie Molin, and she has this really cool project called Data Morph, which I'll link to on GitHub, and we talked about it then.

07:21 But if you pull up her GitHub repo, it's an animated GIF, I believe, of a whole bunch of different, very clear concrete shapes, like a star, literally a panda, not pandas, but the animal, and continuous animation, a bunch of data points that go from one to the other.

07:38 And it shows the mean over time.

07:40 It's the same.

07:40 The standard deviation is the same.

07:42 The correlation is the same, effectively to the thousandth, that sort of thing.

07:47 And it just shows you this is not as simple as finding stuff that's just off the linear interpolation line or something like that, right?

07:55 Yeah.

07:55 It's a good example.

07:56 Using a single metric or a handful of metrics to evaluate a data set can be a bit oversimplistic.

08:04 You can miss a lot of nuance when you do that.

08:06 Yeah, and you do definitely get into the same sort of issues when you're looking at outliers in a lot of areas of data science, where you can kind of be misled by looking at metrics that maybe are relevant, but just don't tell the full story.

08:19 It's one view, but maybe it's not...

08:22 It doesn't for sure.

08:22 Like you said, doesn't tell the whole story.

08:24 So let's talk about what is this outlier detection stuff and really why do people care what kind of problems are being solved with it and so on.

08:34 When people start to learn machine learning, one of the early things you'll learn is there's supervised machine learning and there's unsupervised machine learning. And when you get into unsupervised machine learning, the two bigger areas of it are probably clustering and outlier detection.

08:50 And sometimes dimensionality reduction is considered unsupervised as well. There's a whole host of reasons you might want to do clustering, and outlier detection is a little bit like that, in that it's just one of those things that turns up time and again when you're doing machine learning.

09:06 Outlier detection can be done for, for example, if you have a data set and you just want to say, you just want to try and understand the data.

09:15 One hand you can say, well, if you want to understand the data, there's really kind of two main parts of that.

09:19 There's trying to figure out what the general patterns in the data are, and what the exceptions to those are.

09:25 And once you have both of those, you say, well, here are the patterns, and here's the extent to which those patterns hold true in the data, which is what the outliers show.

09:34 That kind of gives you a good sense of what the data is about.

09:37 Often when you're checking for data quality, outlier tests are very useful.

09:42 It gives you, like if you have say a table of whatever it is, credit card transactions or whatever, you can do this for image data or audio data or data of really any type.

09:51 But let's take a simple example of tabular data.

09:55 You might have a spreadsheet with a few million rows of credit card transactions, or a SQL table.

10:00 If you want to check it for errors before you go on to do other sorts of analysis, like data mining or building predictive models or the like, one thing we often do is try and see, well, what kind of data quality am I dealing with here?

10:12 And there's a number of ways to do that, but one of the steps you might often perform is say, well, do outlier detection and see what the strangest rows in my data are.

10:21 And if you look at those, you see the strangest credit card transactions in there. Or, if you want to be a little more involved in the analysis, you might look at the most unusual string of transactions for a given user or a given store or something like that.

10:36 And if you say, well, okay, these are the weirdest ones and they're only this weird, then we can have some sort of sense of what sort of range of anomalousness might be in your data.

10:45 Yes, outlier detection is used a lot in security as well.

10:49 It's used for things like checking for fraud, checking for errors.

10:53 I mean, it's used in scientific analysis a lot.

10:56 It's very common for scientific projects to just collect data through sensors or by hand or whatever.

11:04 In the case of astronomy, it's through telescopes.

11:07 Astronomy is a good example where we collect such phenomenal volumes of data. We used to be able to find interesting phenomena in astronomy data just by manually going through it.

11:21 An example given in the book is that's how pulsars were discovered. The low-hanging fruit's gone, probably. To find something really, really interesting, given that we might collect petabytes of data per night, the most effective way to do it is just to run an anomaly detection on the data and say, "What are the strangest things in this set of data?" And odds are those are going to be the most interesting things there, maybe things we've never seen before or haven't seen a whole lot. Those are at least some examples.

11:53 - Yeah.

11:53 - Testing for online video games as well as...

11:56 - When I talked to Sarah Eisen about imaging the black hole, the first black hole image, with Python, she talked about actually shipping hard drives around to sync up the data, because there was so much data that it was faster to put it onto a plane and send it from all the different locations around the world. The internet literally couldn't keep up. So they'd box them up into these big crates and ship them around.

12:21 When you're talking that much data, you gotta get a plane involved, it's pretty wild.

12:24 - We weren't doing anomaly detection at the time, but yeah, at one of the companies I've worked with, just dealing with financial data, we actually collected so much that it was actually faster to mail it.

12:33 - Really, you ended up doing that as well?

12:34 - At times, yeah.

12:35 - Yeah, how much of a hard drive would you put-- - Oh, gosh.

12:39 - In the mail?

12:39 I mean, it wouldn't be just a terabyte, it would have to be a pretty big hard drive, or set of hard drives, right?

12:43 - Well, part of the explanation of why we're doing this was this was about 15 years ago.

12:47 - I see, a terabyte sounds like a whole lot.

12:50 - Yeah, I think with the internet we were paying for at the time, you know, having the sort of speeds we have with the internet now, the costs were prohibitive back then.

12:57 - I have gigabit up and down at home for 75 bucks, which you could probably have sent it over, but that's not quite back when the internet had a sound.

13:06 Do you remember when the internet had a sound?

13:08 You would connect to it and you would be like, okay, that's a good connection.

13:11 We're gonna have some stuff happening today.

13:13 That's early 90s when the internet stopped having a sound, mid 90s, it stopped having a sound.

13:18 >> That's about when I first started getting online a little, just as it was being opened up.

13:24 I remember the early days, it was basically university students that were on there.

13:27 >> What I would do when I was in high school is, my brother and I, we would call the local university, and they had a library dial-up for dial-up modems, and then you'd get on to Telnet and Gopher and Archie.

13:42 This is before the World Wide Web existed, before Mosaic, for people who know what I'm talking about.

13:47 >> FTP.

13:48 >> Yeah, exactly.

13:49 >> Telnet, yes.

13:50 >> Unencrypted FTP. By the way, we had so much trust. We were so naive.

13:56 >> Yeah.

13:56 >> But yeah, when you would connect, it would go beep, beep, beep, beep,

14:01 [NOISE]

14:01 and depending on the sound, you would get to where you would go, "Oh, that means I connect to this bit rate or that bit rate," right? It's so wild.

14:08 >> Yeah, those are >> I'm nostalgic for it and I want nothing to do with it. I'm so happy we are where we are. >> Yeah, there's a certain charm to it.

14:14 But if you went back to it, you would hate it because you would watch the images load.

14:19 You would almost see the progressive scan lines come in.

14:22 I mean, it was really, it wasn't ideal.

14:24 No, I had a roommate once who used to download images.

14:28 These are just JPEGs, the same sort of files we have today, but it would take literally overnight to download a JPEG.

14:36 They were interesting times, but we've certainly moved on.

14:39 So that's all good.

14:41 This portion of Talk Python To Me is brought to you by the folks at Posit.

14:45 Posit has made a huge investment in the Python community lately. Known originally for RStudio, they've been building out a suite of tools and services for Team Python.

14:54 Today, I want to tell you about a new way to share your data science assets, Posit Connect Cloud.

15:00 Posit Connect Cloud is an online platform that simplifies the deployment of data applications and documents. It might be the simplest way to share your Python content. Here's how it works in three easy steps. One, push your Python code to a public or private GitHub repo. Two, tell Posit Connect Cloud which repo contains your source code. Three, click deploy. That's it.

15:22 Posit Connect Cloud will clone your code, build your asset, and host it online at a URL for you to share. Best of all, Posit Connect Cloud will update your app as you push code changes to GitHub.

15:34 If you've dreamed of Git-based continuous deployment for your projects, Posit Connect Cloud is here to deliver. Any GitHub user can create a free Posit Connect Cloud account. You don't even need a special trial to see if it's a good fit.

15:47 So if you need a fast, lightweight way to share your data science content, try Posit Connect Cloud. And as we've talked about before, if you need these features, but on-prem, check out Posit Connect. Visit talkpython.fm/connect-cloud to see if it's a good fit.

16:04 That's talkpython.fm/connect-cloud.

16:07 The link is in your podcast player's show notes.

16:10 Thank you to Posit for supporting Talk Python To Me.

16:13 You talked about some examples where you're looking for outliers for fraud, like credit card, and you talked about mailing around the financial data for those kinds of things.

16:22 It seems to me that outliers might also reveal new science or new information that you didn't expect.

16:29 Like it's an outlier because it doesn't match our model, but something is happening over here. And I think you pointed out astronomy, right? Like that part is really bizarrely bright. Why is that so bright? Is that a, you know, oh, that's a quasar. That's what that is.

16:41 - Brightness you're not expecting or a shape of a galaxy you're not expecting, that sort of thing.

16:44 - That nebula turns out to be a galaxy. We thought there was only one galaxy. Now there's many, right? That kind of thing.

16:50 - It's definitely a case, I think science is definitely a good example where outliers are something you want to find. Biological data, for example, it's used a lot there too.

16:59 Well, in a lot of areas, I guess. The particle accelerator at CERN uses outlier detection a lot, just to find interesting things and to check whether things are within certain bounds of what they expect.

17:12 But yeah, I mean, when you're dealing with certain domains, like one example that's kind of maybe the other end of the spectrum would be when you have, say you're monitoring industrial systems, like an assembly line or something like this, that's an environment where you really want the whole system to work in a specific way. And any kind of veering from that is probably a problem, or an indication of a potential problem down the road.

17:39 Right. You've got some IoT thing or you've got a more industrial type of robot and all of a sudden it's drilling holes outside of where you used to have it drill holes. You probably want it to stop.

17:48 Its behavior is unusual, or just the sensors are picking up temperatures that are unusual, or vibrations or sound levels that are higher than normal or oscillating faster than normal, or something like that.

17:59 Yeah, yeah, yeah.

18:00 Quite often, yeah, an outlier can be something interesting or it can be a problem.

18:05 Yeah, it really depends on the context.

18:06 But in most cases, for one reason or another, they're usually worth investigating, either to expand your knowledge of the system or just to ferret out problems.

18:18 - Sure, what's the scale of data that outlier detection makes sense for?

18:23 If I've got 20 measurements, does it make sense to ask this question?

18:26 If I've got 200 billion, is that too much to ask the question for?

18:30 Like, what's the story there?

18:31 - That's a good question.

18:32 I mean, when you have a very, I mean, it's like a lot of areas that could be, I guess the same thing with clustering or predictive models or generative models, anything along those lines.

18:40 You have too little data or you can have too much.

18:42 And there is kind of a sweet spot where the results are statistically stable, but it doesn't take you forever to find them.

18:49 - They're statistically stable, but they can be computed.

18:51 - Yeah, it's not a lot of problems.

18:54 Like if you have, say you're checking a large company and you're just checking your accounting data for anomalies, there can be a lot of value to it.

19:02 And you can probably, on a lot of cases, you can measure it in dollar value.

19:06 If you find, well, if you find fraud, like a high-level fraud where your CFO is misrepresenting the company or something, - Or low-level fraud where your staff are ripping you off, or just errors or inefficiencies or not following up.

19:20 - Contractors or what, subcontractors or something, yeah.

19:23 - Yeah, often when contractors are working against you in some way, they'll be doing it in concert with maybe some of your staff.

19:29 There could be some collusion or things like that.

19:32 So looking at your staff behavior, it could be worth a lot of money and there could be a lot of benefit in it.

19:37 But if it takes you 600 hours to run a process and you find one thing, it may or may not be cost-effective.

19:45 Well, that example wouldn't be cost-effective.

19:47 Yeah, so there's kind of a balancing act you want to follow.

19:50 Even if you have an extremely small number of points, you can sometimes say that one is anomalous, given the others.

19:56 Just thinking of the example of staff and errors at a company:

20:04 Say you have a bunch of people employed on staff.

20:07 They're purchasers, they're just employed to buy supplies from your suppliers.

20:13 Maybe you have 10 of them, and most of them make an average of about four purchases a day.

20:19 And you have one that every Friday makes 200 purchases a day.

20:24 So you only have like 10 points there, but one of them is so off the scale that you can say with some confidence, this is not necessarily a problem, but it is anomalous and it is worth understanding.

20:35 Either there are some errors or fraud or inefficiency or something, or the other people are the anomaly.

20:41 They're not doing their--

20:43 They're not doing their job.

20:44 Yeah, they're not buying enough.
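The purchaser example can be made concrete with a robust score. This is a minimal sketch with hypothetical purchase counts, using a median/MAD-based modified z-score (a standard technique, not one named in the conversation). With only 10 points, the mean and standard deviation are pulled toward the extreme value itself, so the median and median absolute deviation make a steadier yardstick.

```python
import numpy as np

# Hypothetical daily purchase counts for 10 purchasers: nine make about
# four purchases a day; the last is the one making 200 on Fridays.
purchases = np.array([4, 3, 5, 4, 4, 3, 5, 4, 4, 200])

# The median and the median absolute deviation (MAD) barely move when one
# extreme value is present, unlike the mean and standard deviation.
median = np.median(purchases)
mad = np.median(np.abs(purchases - median))

# Modified z-score: 0.6745 rescales MAD to match a standard deviation
# for normally distributed data.
modified_z = 0.6745 * (purchases - median) / mad

# 3.5 is a commonly used cutoff for the modified z-score.
outlier_idx = np.flatnonzero(np.abs(modified_z) > 3.5)
print(outlier_idx)  # → [9]
```

Only the 200-purchase row clears the cutoff; its score is in the hundreds, while the others stay below about 1.4.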

20:45 You're definitely right, too.

20:46 You can have the other problem where you just have so much data, it's hard to go through.

20:50 Are there techniques where people use, for example, Dask or CuPy, like the CUDA stuff for GPUs?

20:59 Is there really high-end compute stuff that people are using for these problems?

21:03 Yeah, especially if you're using deep learning, though not only then.

21:08 But if you're using something that has a lot of matrix operations, a GPU will speed that up.

21:14 And Dask will often work well, especially if you have memory limitations as well.

21:19 Outlier detection can be done in parallel, which can speed things up.

21:23 Outlier detection works a little bit like predictive models in that there's a train step, and then there's an inference step.

21:29 OK.

21:30 You train it what's normal, and then you ask it if things seem normal or not.

21:33 OK.

21:34 Exactly.

21:34 So take the example of an industrial process. Say you have sensors that are collecting a lot of data: temperature, sound, humidity, and so on. And say you have a process that's run for weeks without any problem, so you know that everything was running properly during that time. You can take that data and give it to an outlier detector to train on, and that builds up a sense of what's normal. In this case, it's not only statistically normal, it's also kind of a platonic norm; it's desirable as well. That's your training step.

22:16 And a lot of times, that's only done once a month or every few months or something, so it's not terrible if it takes a little bit of time to train. In other contexts, like a real-time situation, you can have streaming environments where you're constantly reading in data and updating your sense of what the new normal is. You might be retraining every few minutes or every few hours, in which case the training time becomes a lot more relevant. Training can be done offline, but then there's your inference time, and that can be really relevant.
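The train-then-infer pattern described above can be sketched with scikit-learn's `IsolationForest`. The sensor values, column meanings, and sizes are hypothetical. Here `fit` is the training step on a known-good period, and `predict` and `decision_function` are the inference step on readings that arrive later.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(0)

# Hypothetical readings (temperature, sound level, humidity) from weeks
# when the process is known to have run properly.
normal_period = rng.normal(loc=[70.0, 40.0, 30.0], scale=[1.0, 2.0, 1.5], size=(5000, 3))

# Training step: learn what "normal" looks like from the known-good period.
detector = IsolationForest(n_estimators=200, random_state=0)
detector.fit(normal_period)

# Inference step: score readings that arrive later.
new_readings = np.array([
    [70.2, 41.0, 29.5],  # close to the training data
    [85.0, 70.0, 10.0],  # far from anything seen during training
])
labels = detector.predict(new_readings)            # +1 = inlier, -1 = outlier
scores = detector.decision_function(new_readings)  # lower = more anomalous
print(labels, scores)
```

In a streaming setting, retraining every few minutes or hours just means calling `fit` again on a newer window of data, which is why training time starts to matter there.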

22:47 There are certain ways to keep down your training time as well. Sometimes if you have like months of sensor readings, you might not need to train on all of them. You can just take a sample of them or a certain time slice or something like that.

22:59 And that's good enough to establish a decent sense of what's normal.
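A sketch of training on a sample or a time slice while still scoring every reading, assuming hypothetical data held in a NumPy array in time order. The 5,000-row sample size is an arbitrary choice for illustration.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(1)

# Hypothetical: months of sensor readings in time order (rows) from four
# sensors (columns), far more than needed to learn what is normal.
readings = rng.normal(size=(50_000, 4))

# Option 1: train on a random sample of rows.
sample_idx = rng.choice(len(readings), size=5_000, replace=False)
sampled_model = IsolationForest(random_state=0).fit(readings[sample_idx])

# Option 2: train on a time slice, here the most recent 5,000 rows.
recent_model = IsolationForest(random_state=0).fit(readings[-5_000:])

# Either way, inference still covers every reading, so a rare event is not
# skipped just because training used only part of the data.
all_scores = sampled_model.decision_function(readings)
recent_scores = recent_model.decision_function(readings)
```

`IsolationForest` already draws a small subsample for each tree (`max_samples`, at most 256 rows by default), so explicit sampling like this matters more for detectors without that behavior, or for cutting data-loading cost.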

23:03 Right. It seems to me that the training could be done on a subset and that would probably be OK.

23:09 But I feel like the detection might need to run on nearly everything.

23:13 Right. Because it might be that one-in-a-million sort of thing.

23:16 Right. Astronomically, you're looking for that one little bright spot or that one little dip in brightness for a transit of a planet or something.

23:23 Or I've had my credit card blocked until I unblocked it, because I bought something from a vending machine for a dollar with a credit card. And they're like, well, a lot of people who steal cards take them to vending machines to try them, because it's a safe place with no one there, to see if the stolen card still works. And then they'll go do bad stuff.

23:41 Well, it's hard to say what they're doing behind the scenes or why they flagged it. It could have been because they run outlier detection routines, or they could have been using a rule-based system like you're describing, where if it's in a certain location for a certain dollar value at a certain time of day and so on, they just flag it.

23:58 >> It was very shady.

23:59 I was at the university.

24:00 >> Yeah, it's definitely the case that most of the time when you're running outlier detection, you want to check all of your data, because the question is, are there any anomalies in my data?

24:10 There are exceptions to that, where you just kind of want to spot-check your data and make sure it's largely free of outliers, depending on how you define them.

24:19 For example, with sensor readings, you always expect a certain number of point anomalies, like a certain point in time where something spikes up; it is an anomaly, but it's not a big deal. So in some cases you might just want to make sure that your number of point anomalies is normal, so you don't have an unusual number of unusual events. Or if you're just doing data quality checks, for example, you might just want to make sure your data is largely clean.
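Checking that the *number* of point anomalies is normal, rather than chasing each spike, can be sketched like this. Everything here is an illustrative assumption: one simulated sensor, a baseline taken from a reference period, a modified z-score cutoff of 3.5 for what counts as a spike, and a "more than three standard deviations above the typical daily count" rule for an unusual volume.

```python
import numpy as np

rng = np.random.default_rng(7)

def count_spikes(batch, center, mad, cutoff=3.5):
    """Count point anomalies in one batch using a modified z-score against a fixed baseline."""
    modified_z = 0.6745 * (batch - center) / mad
    return int(np.sum(np.abs(modified_z) > cutoff))

# Hypothetical reference period of one sensor's readings defines the baseline.
reference = rng.normal(loc=50.0, scale=2.0, size=10_000)
center = np.median(reference)
mad = np.median(np.abs(reference - center))

# How many spikes does a typical day (1,440 one-minute readings) contain?
daily_counts = [count_spikes(rng.normal(50.0, 2.0, 1440), center, mad) for _ in range(30)]
typical, spread = np.mean(daily_counts), np.std(daily_counts)

# A hypothetical new day with 40 planted spikes.
today = rng.normal(50.0, 2.0, 1440)
today[rng.choice(1440, size=40, replace=False)] += 20.0
today_count = count_spikes(today, center, mad)

# Individual spikes are expected; an unusual number of them is the signal.
unusual_volume = today_count > typical + 3 * spread
print(today_count, typical, unusual_volume)
```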

24:46 How do you know that you don't get outliers in your training data? If you just feed it a stream of data, you're like, well, this is what's normal.

24:53 Actually, there were outliers, but you weren't ready to detect them yet, and now it thinks they're normal.

24:57 Most of the time when you train, you do assume that there's a certain number of outliers in your data.

25:05 Yeah, so what you're training on is often a statistical norm.

25:09 It's what's normative.

25:11 It's not necessarily what's ideal and clean.

25:14 It's very hard to get data that's really clean.

25:16 And if you do, it often biases the data in one way or the other, which kind of undermines outlier detection sometimes.

25:24 It's almost like overtraining, maybe.

25:25 Yeah, similar idea to that in that it learns very specific patterns as being normal.

25:31 Actually, what's fairly common in outlier detection is to train and to inference on the same data with the idea that there's outliers in there anyway.

25:42 So in some cases, well, go back to the example of, let's say, a credit card company.

25:47 Say you want to check all your transactions for the last day.

25:52 So you have a hundred gazillion.

25:54 You can train on that.

25:55 And then you can also run inference on that.

25:58 So you can say, relative to today's transactions, which are the unusual ones, unusual relative to the other ones.

26:05 So it's something you wouldn't do in prediction problems, but here you actually can.

26:09 It's fairly common to do that in outlier detection, just with the idea that as long as most of the data is typical, most algorithms will be able to find the records that are the most extreme or the most unusual in one way or another relative to the other ones in the data.
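Training and scoring on the same data is what scikit-learn's `LocalOutlierFactor` does in its default mode (`novelty=False`): `fit_predict` labels the very rows it was fitted on. This is a minimal sketch with hypothetical transaction features and two planted oddities; the feature choice and sizes are assumptions.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor

rng = np.random.default_rng(2)

# Hypothetical: one day's transactions with two numeric features,
# amount and hour of day.
transactions = rng.normal(loc=[50.0, 14.0], scale=[15.0, 3.0], size=(1000, 2))
transactions[:2] = [[900.0, 3.0], [750.0, 4.0]]  # two planted oddities

# With novelty=False (the default), fit_predict fits on the data and labels
# those same rows: -1 means unusual relative to the rest of the day.
# (In practice you would usually scale features first; the planted rows are
# extreme enough that it does not matter here.)
lof = LocalOutlierFactor(n_neighbors=20)
labels = lof.fit_predict(transactions)
scores = -lof.negative_outlier_factor_  # larger = more unusual

most_unusual = np.argsort(scores)[::-1][:5]
print(most_unusual)
```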

26:25 Yeah, that's very interesting.

26:25 You can train on the data and then ask it to find the outliers in that same data.

26:31 That seems very counterintuitive.

26:32 Yeah.

26:33 It is a little counterintuitive, but take the simple example I gave earlier, where you have 10 purchasers for your company.

26:42 You can use those 10 to establish a sense of what's normal.

26:46 And it would say, well, about four purchases a day is normal.

26:49 And this one person that has 200 every Friday, that's unusual.

26:53 So you can actually do that within the same data.

26:56 Now, having said that, what you sometimes do in outlier detection is work a little bit iteratively: start with the original data and find the most anomalous records in there.

27:06 And then you might inspect them manually and say, "Okay, these are things that we wouldn't normally expect to happen, so we can remove those."

27:13 Or there are things where you say, "They're extreme, but there's nothing wrong with them. They could happen again."

27:19 Or maybe they're a lightning flash when you're looking through a telescope.

27:24 It's really bright.

27:25 Well, when you're dealing with anything like that, you get a lot of data artifacts, where it's not that the phenomenon you're monitoring was unusual; the equipment you used to monitor it had some sort of power surge or something like that.

27:39 That's a data artifact, and you might want to remove it. So sometimes you iteratively make your data cleaner and cleaner and get a more pristine sense of what's normal. But you can overfit doing that; you can go too far.

27:52 Yeah, so would you maybe do a loop a couple of times: train it, ask it what's abnormal, and figure out, well, these things are truly not part of what we want to consider, so we'll take them out, train it again excluding those, and kind of iterate on it that way?

28:07 - It's not imperative, but that would be a fairly, fairly standard way to do things, yeah.
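The train, inspect, remove, retrain loop can be sketched as a small helper. `iterative_clean` is a hypothetical name, the data is synthetic, and the manual inspection step is replaced by automatically dropping the most anomalous rows each round, which is a simplifying assumption.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

def iterative_clean(X, rounds=2, drop_per_round=3, random_state=0):
    """Fit, drop the most anomalous rows, refit; repeat for a few rounds.

    Assumption: every flagged row is dropped automatically. In practice a
    person would review them first and keep the extreme-but-legitimate ones.
    Too many rounds can over-tighten what the model treats as normal.
    """
    keep = np.arange(len(X))  # indices (into X) of rows still treated as normal
    for _ in range(rounds):
        model = IsolationForest(random_state=random_state).fit(X[keep])
        scores = model.score_samples(X[keep])        # lower = more anomalous
        worst = np.argsort(scores)[:drop_per_round]  # positions within `keep`
        keep = np.delete(keep, worst)
    final_model = IsolationForest(random_state=random_state).fit(X[keep])
    return final_model, keep

# Hypothetical data: 500 normal rows plus three planted artifacts
# (think power surges in the monitoring equipment).
rng = np.random.default_rng(3)
X = np.vstack([rng.normal(size=(500, 2)), [[15.0, 15.0], [-14.0, 12.0], [13.0, -16.0]]])
model, keep = iterative_clean(X)
print(len(keep))  # → 497
```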

28:11 - We haven't properly introduced your book yet, Outlier Detection in Python.

28:16 - Yes. - From Manning.

28:17 And I want to give you a chance to give a shout out about it and then I want to talk to you about a couple of things that you've covered in your book, of course.

28:24 So I know that we have a discount code for the book that Manning has given us.

28:29 I'll put that in the show notes for people if they're interested.

28:32 Well, tell us about your book: why did you write it, and what can people learn from it?

28:36 At one job I had a few years ago, I just spent an enormous amount of time on this.

28:42 I was managing a research team, and there were about 10 of us on the team.

28:46 And we looked into a lot of areas of machine learning too, but outlier detection was the biggest one for us.

28:52 And so we were doing a lot of work around that.

28:55 This was the company, as I mentioned, that was doing financial auditing. So we were building tools that would allow financial auditors, when performing an audit, to examine their client's data and see, in a fairly easy way, what's unusual in it. For example, if you're an accounting firm and you're auditing Spatula World (an example I always used to give, for the Weird Al fans, I guess), they would have a set of sales, a set of purchases, a set of payroll transactions, and so on. What auditors would often do is find the most anomalous of those, with the idea that those are among the most important to investigate, to see if there's anything off with them that could suggest an error or something like that.

29:47 So we spent a lot of time doing that. We were working not just with audit firms, but also with regulators and bodies like that around the world.

29:55 And we spent a lot of time looking at how can we justify the outliers that we're finding?

30:01 Because most of these algorithms are a little bit like black boxes; they're black-box algorithms.

30:07 So like if you're doing a predictive model and you send something through an XGBoost model or a neural net or something, it gives you a prediction.

30:13 And it's hard to say why it made that prediction.

30:16 A lot of outlier detection algorithms are like that, but that's an issue sometimes, because in outlier detection, probably more than with prediction or classification or clustering, it's important to have an explanation of why something is unusual.

30:33 Like if you're flagging something as potentially fraudulent, or if you're doing security and you're flagging some web activity as problematic, or a person in some security context as possibly being a security risk.

30:49 You gotta know why in order to investigate effectively and quickly.

30:55 So we spent a lot of time looking at how to make outlier detection interpretable, and how to justify the results we were finding when we assessed tables of data and came back with a summary saying, these are the most unusual records in this data.

31:14 But it's actually a hard problem to try and explain why that was the case.

31:18 So I ended up reading literally hundreds of papers on the subject.

31:24 And we did a lot of original research, and we ended up building quite a lot of expertise.

31:29 But also during that time, it was just a really fascinating, interesting problem.

31:34 I realized outlier detection just kind of grabs you. It's fascinating: you're trying to figure out, if you have a collection of images or a collection of sound files or, like at one job I had, network data (social networks in that case, graph models of connections),

31:54 What are the most unusual records in there?

31:57 This is really challenging.

31:59 There's no definitive answer, but there's certainly good answers.

32:03 And there are ways to do it efficiently and interpretably and accurately. It's difficult, but it's really rewarding when you get a hard problem like that working well.

32:15 - Yeah, I imagine it's the kind of thing that if you have those answers, you kind of become real good friends with the CEO or the decision makers.

32:22 They're like, this person can look at it and answer the questions that we can't even know to ask.

32:28 - Yeah, exactly.

32:28 It does open up different ways of thinking about things.

32:32 And yeah, it's just, yeah.

32:34 It has a lot of benefits when you're looking at data.

32:37 - I imagine you could probably also make really important decisions for where to put your energy in your company, right?

32:44 Like, you had Spatula World as an example.

32:46 Like if some product all of a sudden seems like it's doing really well and that's an outlier, like you should be able to answer, this is actually gaining a lot of traction, we should manufacture a bunch.

32:57 Or no, that's just some weird glitch and it's not predictive. Should we order 100,000 green spatulas, or is that just something weird that happened, like some restaurant came and bought all the ones at a store?

33:08 So it's really hard, but important, to be able to quantify these things and give some context, because you could end up in a bad place if you make an investment.

33:16 Yeah.

33:17 Yeah.

33:17 So it's the curse of half-understanding, of a little bit of knowledge.

33:21 I guess.

33:22 I've got a great idea.

33:24 Yeah.

33:24 All right.

33:24 Let's talk about some of the tools that you highlighted in your book.

33:28 Like PyOD, Python Outlier Detection.

33:31 Yeah.

33:31 Let's talk about this one.

33:33 What was the story of this thing?

33:34 It's used quite a bit, used by 4,000 people and 8.8 thousand GitHub stars.

33:39 That's pretty, pretty well used.

33:40 It is.

33:41 Yeah.

33:41 It's really the, it's the go-to one for outlier detection in Python.

33:46 Okay.

33:46 Anyone who's doing any kind of machine learning work in Python,

33:49 I mean, the one tool there they'll know is scikit-learn.

33:53 And scikit-learn comes with four outlier detectors.

33:57 And it also has some tools that make it relatively easy to do some other types of outlier detection, like a KDE tool, kernel density estimation, which makes it fairly easy to find outliers as just points in space that have low density, as well as a clustering method called Gaussian mixture models, GMM, which makes it fairly easy to do outlier detection too.
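Both density-based ideas are available in scikit-learn: `KernelDensity` and `GaussianMixture` each provide `score_samples`, which returns a log-density per row, and low values mark points in sparse regions. This is a sketch with hypothetical two-cluster data and one point planted between the clusters; the bandwidth, component count, and 1% cutoff are illustrative assumptions.

```python
import numpy as np
from sklearn.mixture import GaussianMixture
from sklearn.neighbors import KernelDensity

rng = np.random.default_rng(3)

# Hypothetical numeric data: two clusters plus one point (row 600) between them.
X = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.5, size=(300, 2)),
    rng.normal(loc=[5.0, 5.0], scale=0.5, size=(300, 2)),
    [[2.5, 2.5]],
])

# Kernel density estimation: log-density of each point; low density = unusual.
kde = KernelDensity(kernel="gaussian", bandwidth=0.5).fit(X)
kde_log_density = kde.score_samples(X)

# Gaussian mixture model: log-likelihood of each point under the fitted mixture.
gmm = GaussianMixture(n_components=2, random_state=0).fit(X)
gmm_log_likelihood = gmm.score_samples(X)

# Flag, for example, the lowest 1% under each method.
kde_flags = kde_log_density < np.quantile(kde_log_density, 0.01)
gmm_flags = gmm_log_likelihood < np.quantile(gmm_log_likelihood, 0.01)
print(np.flatnonzero(kde_flags), np.flatnonzero(gmm_flags))
```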

34:19 But PyOD has those; it has basically everything in scikit-learn, wrapped in its own interface.

34:26 Plus, I think, a couple dozen more, and it also has a number of deep-learning-based ones.

34:32 So for tabular data, it's really where you want to go first, probably.

34:37 So in terms of deep learning, it has autoencoders, variational autoencoders, GANs.

34:42 There are some limitations with PyOD, and I do get into that in the book.

34:46 It assumes you're dealing with strictly numeric data, for example, which if you're dealing with tabular data, realistically, you have mixed data, categorical columns, date columns.

34:57 So PyOD does not handle that.

34:59 And it's strictly for tabular data.

35:01 So if you're working with time series data or image data or audio data, that sort of thing, there are other tools for that.

35:08 But yeah, for tabular data, it's pretty much the most used one.

35:11 - Okay, got the NLP-ADBench for NLP anomaly detection.

35:17 So I'm guessing this is in text.

35:19 I'm not sure, you'll have to tell us what kind of stuff you detect with that, but maybe I give it some articles or social media posts or something along those lines, then ask it.

35:28 What kind of questions could you ask it about that?

35:30 NLP is kind of a dicey area with outlier detection.

35:35 And I shouldn't, dicey is too strong a word.

35:37 It's a harder area.

35:38 It's certainly a harder area to work with, because if you have tables of data, again, say accounting data with a bunch of transactions, it's difficult to say which are the most unusual transactions in your data, but if you see something that's very unusual, you can say, okay, that transaction is unusual.

36:00 With text, that is harder to say.

36:02 I mean, if it's a completely different language or something like that, or the tone, or the writing style is completely different.

36:10 - And you have curse words, and people might think it's angry, but in fact, it's just sort of a coarse way of expressing excitement, right?

36:17 Like, oh, these people are really angry.

36:19 Like, no, they're actually really happy, but they don't really express it super properly.

36:23 - Right, but if the rest of the corpus did not have any expletives, then in a sense that's anomalous in that way.

36:31 Yeah, with text, there's just so many ways that text can be just a little bit different.

36:35 I would consider it one of the more challenging areas of outlier detection, yeah.

36:41 - I'm sure, we'll talk about LLMs as well and see how that changes things or not in a little bit.

36:45 But the other one you mentioned is scikit-learn.

36:48 Like this is kind of the granddaddy of a lot of the data science type of stuff.

36:53 And they've got a whole section on novelty and outlier detection.

36:56 Maybe I'll pull it up on the screen for a little bit, but give people a sense of the kinds of problems you can solve, or questions you can ask, with this one.

37:03 It's a little bit like PyOD, but less so. It doesn't have 30 or so detectors, it has four, but it includes two of the key ones.

37:12 So again, it's just for tabular data.

37:14 And again, it's just numeric tabular data, but it has two of the go-to algorithms.

37:19 One's called isolation forest.

37:21 And the other is called local outlier factor.

37:24 I think most of the time with outlier detection, if you're doing tabular data, if you're only gonna use two algorithms, those are the two.

37:31 Outlier detection is a little bit different than say prediction, because if you're building a predictive model, say on tabular data, most of the time you would use, say an XGBoost model or a CatBoost model or a random forest or something like that.

37:45 You would tend to use one model.

37:47 Sometimes if you really wanna squeeze all the accuracy you can out of it, you'd create an ensemble.

37:52 Actually, a model like XGBoost is internally a boosted ensemble of decision trees, but normally you wouldn't (sometimes you do, but more often you don't) create an ensemble of a KNN and an SVM and a neural net and a random forest.

38:08 In outlier detection, that's a lot more common to do that.

38:11 And the reason is that each outlier detection algorithm can be a little bit limited in one way or another.

38:18 Not in an extreme way, but they each look for different types of outliers, and they each look for outliers in different ways.

38:25 And it's just, there's a lot more advantage to using multiple detection algorithms with outlier detection than you would with prediction.

38:33 And you can do it in just really simple ways.

38:35 For instance, scikit-learn has four outlier detectors: it has isolation forest, local outlier factor, one called one-class SVM, and one called elliptic envelope.

38:47 So if I run those four on my data, and let's say all four of them are appropriate and work well, any records that are scored highly by all four of those detectors, you can say, well, these records are really weird.

39:00 You can say, yeah.

39:02 Yeah, you can say we've checked many different ways and we've got the same answer, that it's an outlier in these different aspects.

39:08 And so it's really likely.

39:09 It's really likely. It's not necessarily a problem, but it is statistically unusual.
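The four-detector agreement idea can be sketched with scikit-learn. The data, the two planted rows, and the 2% contamination setting are hypothetical choices. This version fits each detector on a reference set and checks a separate batch; as discussed earlier, training and scoring can also be done on the same data. A row counts here only if all four detectors label it -1.

```python
import numpy as np
from sklearn.covariance import EllipticEnvelope
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor
from sklearn.preprocessing import StandardScaler
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(4)

# Hypothetical data: a reference set to fit on, and a batch to check that
# contains two planted extreme rows at positions 0 and 1.
reference = rng.normal(size=(500, 3))
batch = rng.normal(size=(100, 3))
batch[:2] = [[6.0, -6.0, 6.0], [-7.0, 7.0, 0.0]]

# One-class SVM and LOF are scale-sensitive, so standardize using the reference set.
scaler = StandardScaler().fit(reference)
reference_s, batch_s = scaler.transform(reference), scaler.transform(batch)

# scikit-learn's four outlier detectors, each tuned to flag roughly 2% of rows.
detectors = {
    "isolation_forest": IsolationForest(contamination=0.02, random_state=0),
    "local_outlier_factor": LocalOutlierFactor(n_neighbors=20, contamination=0.02, novelty=True),
    "one_class_svm": OneClassSVM(nu=0.02, gamma="scale"),
    "elliptic_envelope": EllipticEnvelope(contamination=0.02, random_state=0),
}

# One boolean column per detector: True where it labels a batch row -1 (outlier).
flags = np.column_stack([
    det.fit(reference_s).predict(batch_s) == -1 for det in detectors.values()
])

# Rows every detector agrees on are the strongest candidates.
flagged_by_all = np.flatnonzero(flags.all(axis=1))
print(flagged_by_all)
```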

39:15 And I think a lot of the time, if you don't have a lot of intense outlier detection work to do, and you just have a small data set and want to quickly get a sense of what the most anomalous records in it are,

39:28 then just using scikit-learn, assuming you're using scikit-learn anyway for a lot of your work,

39:34 is probably gonna be sufficient for a lot of cases.

39:38 If you want to do a little bit more thorough job using more detectors, PyOD has some that perform a little better and some that are a little more interpretable.

39:53 It could be a good decision just to use PyOD and use a bunch of the detectors there.

39:58 One of the nice things about PyOD is it has the same API signature for all of its detectors.

40:02 So if you write code, and it's usually only like four or five lines, it's really kind of boilerplate easy, other than the hyperparameters for them.

40:11 It's really quite easy to just kind of swap one out and swap in another and just try a whole bunch of them.

40:17 One thing I do get into in the book is that there are a lot of algorithms beyond even what's in PyOD that can be very useful.

40:25 The ones in PyOD are good and they're sufficient quite often, but they're limited in how interpretable they are.

40:32 So again, if you're in a situation where you're saying, well, this looks like a security threat, or this looks like failing equipment, or this looks like a novel specimen, say from astronomy, this looks like a novel transit.

40:45 This is a transit that's not normal. You want to know why. A lot of the detectors in PyOD don't necessarily provide that to the degree you would wish. So I do suggest a bunch of others that could be useful for that purpose and that support categorical data as well.

41:02 That's non-numeric stuff like male, female or high income, low income or whatever.

41:08 - Exactly.

41:08 - We talked about time series a little bit.

41:10 You said that time series are pretty challenging and I guess it depends on how rapidly the time series data is coming in as well.

41:16 - Yeah, that's actually one of the things about time series data is that it can be streaming and coming in extremely fast.

41:23 And like I was saying, very often we use ensembles of outlier detectors to try and analyze the data.

41:30 By their nature, ensembles can be a little bit slower.

41:32 Now you can run them in parallel.

41:33 - Yeah, right, 'cause it's basically, you take three or four or a thousand decision trees, ask them all what their answers are, and then you decide; it's almost like a voting process or something, right?

41:43 And that would be exactly how you would do it in a predictive model. With outlier detection, probably the closest thing to what you just described is an algorithm called isolation forest, which is a forest of isolation trees, so it's a similar idea. It's a little bit close to random forests, and probably a little closer to extra trees, for anyone who's familiar with predictive models. So isolation forest is kind of the outlier detection equivalent of an extra trees model. And like that, it's composed of a whole bunch of trees, and depending on the implementation (not scikit-learn's, but depending on the implementation), you can run all those trees in parallel, which can give you your answers quite fast if you have sufficient hardware.

42:30 What can also be done with outlier detection, and depending on your environment this can actually be faster, believe it or not, is to run your detectors in sequence. Depending on how you define your outliers, say you say, I'm only concerned with things that are flagged by the isolation forest and the local outlier factor model and the KNN model and the autoencoder model.

42:58 You can run them in sequence, so anything that's filtered out by the first model doesn't need to be sent to the second model, or the third model or the fourth model. You can kind of put the fastest one first, and then the next one, and so on.

43:09 Yeah.

43:09 That's interesting.

43:10 Almost like an if statement or a while loop or something in programming, where the ands short-circuit, so you want to do the simplest test first and then the next one, the next one, and you're only going to evaluate until you hit a false, and then you stop.

43:26 Yeah.

43:26 That's a good way to put it.

43:27 Yeah, if you do it in sequence, that'll definitely speed it up.
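The sequential, short-circuiting approach can be sketched as a loop over detectors where each stage only scores the rows every earlier stage flagged. `cascade_flags` is a hypothetical helper, the data is synthetic, and two scikit-learn detectors stand in for the four Brett lists. Each stage is still trained on the full training set; only the inference work shrinks.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor

def cascade_flags(X_train, X_new, stage_factories):
    """Indices of rows in X_new that every stage flags as an outlier.

    Stages run in order and each one only scores the rows that all earlier
    stages flagged, so placing cheap detectors first shrinks the work left
    for expensive ones. (Each stage is still trained on all of X_train.)
    """
    candidates = np.arange(len(X_new))
    for make_detector in stage_factories:
        if candidates.size == 0:
            break  # short-circuit: no row can be flagged by every stage now
        detector = make_detector().fit(X_train)
        flagged = detector.predict(X_new[candidates]) == -1
        candidates = candidates[flagged]
    return candidates

# Hypothetical data: training rows plus a new batch with one planted extreme row.
rng = np.random.default_rng(5)
X_train = rng.normal(size=(2000, 3))
X_new = np.vstack([rng.normal(size=(200, 3)), [[8.0, 8.0, 8.0]]])

stage_factories = [
    lambda: IsolationForest(random_state=0),                   # assumed cheaper, so it goes first
    lambda: LocalOutlierFactor(n_neighbors=20, novelty=True),  # only sees rows stage 1 flagged
]
flagged_rows = cascade_flags(X_train, X_new, stage_factories)
print(flagged_rows)
```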

43:30 Rather than taking all the data, run it in parallel across all the cores.

43:34 You're like, well, look how awesome it is.

43:35 It's using all the cores, but if you're like, no, we just run less of it.

43:40 So we don't have to do it so much.

43:41 There will still be benefit to that.

43:42 If you want to know specifically how anomalous things are, which you might if you're not looking to flag the individual anomalies and are more interested in collecting statistics about the volume of anomalies.

43:53 Like, it's more of a data monitoring project, as opposed to picking out the individual anomalies.

44:00 You may still want to do that, but if you just want to pick out the really anomalous records, then yes, they might be the ones that are flagged by all, or almost all, of your detectors, say.

44:11 - Right, well, you could even do it partially.

44:13 If it's not flagged by three out of five, then we're not gonna pass it further down the line, right?

44:18 Like you could still do some sort of intermediate, more squishy analysis like that, but you could still short circuit it some.

44:25 - That would be a good balance, I think, yeah.
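The looser "three out of five" rule is a simple vote count. This is a minimal sketch, assuming each detector's output has already been turned into a boolean flag per record; `k_of_n_flags` is a hypothetical helper, and the flag matrix below is made up.

```python
import numpy as np

def k_of_n_flags(flag_matrix, k):
    """Indices of rows flagged by at least k of the detectors (columns)."""
    votes = flag_matrix.sum(axis=1)
    return np.flatnonzero(votes >= k)

# Hypothetical boolean flags: rows = records, columns = five detectors.
flag_matrix = np.array([
    [True,  True,  True,  False, True ],  # 4 of 5
    [True,  False, False, False, False],  # 1 of 5
    [True,  True,  True,  False, False],  # 3 of 5
    [False, False, False, False, False],  # 0 of 5
])
print(k_of_n_flags(flag_matrix, k=3))  # → [0 2]
```

Only records that pass this gate would be passed further down the line, which trades some recall for less work in later stages.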

44:27 I mean, it's like anything: there are gonna be false positives and false negatives, but you want to get a good balance of that.

44:33 - One of the other projects that you called out is Prophet, time series anomaly detection with Prophet, and it's based on Facebook's open source library, Prophet.

44:44 This is Prophet anomaly detection, as opposed to just straight Prophet, which is a tool for producing high quality forecasts for time series data that has multiple seasonality with linear and nonlinear growth.

44:56 I imagine that Facebook probably has a lot of data in its time series.

45:00 - If anyone does, yes, they would have phenomenal amounts.

45:02 And there's probably all sorts of things they wanna forecast.

45:05 - So tell people about this project and how they might use it.

45:08 - There's a number of ways to do outlier detection with time series data.

45:12 It's a little bit like I was saying with tabular data, you can look at your data in all kinds of different ways and they might all be legitimate.

45:18 They just find different types of outliers.

45:20 A really simple case, which can be useful despite its simplicity, is if you just have a series of points over time.

45:31 So say you're a social media behemoth that has half of humanity signed up.

45:36 You might want to look at your server usage or ad revenues.

45:41 And there are just all kinds of things that you're predicting.

45:43 One is that things will be going viral.

45:45 And so maybe we should boost that in the algorithm and show it to more people, which could be very good or not so good, but anyway, it still works with the incentives and the goals of, "Hey, we want more engagement on the platform," right?

45:56 I guess one simple example, going outside of a social media company, would be weather data, where you just track temperature over time and there's a certain seasonality to that.

46:06 What is that plot there? That is... Hard to say what that is.

46:09 Their example is, let's see if they call it out.

46:12 Oh, that could be weather data or it could be stock.

46:15 They don't really say. Have they named their... All their data is named DF and stuff.

46:20 - They do. The way Prophet works is that you work with tabular data that only has two columns.

46:25 There's the time column and there's the value column.

46:29 And you can't expand much.

46:31 - The legend says anomaly, actual, predicted, base.

46:33 There's no information here that tells you what data this is but it still gives you a, it's still a cool picture to show you how it works.

46:39 It's like, here's the prior block of data like last week or something.

46:43 And now here's the next week.

46:46 And are we gonna predict if this is an anomaly or not?

46:49 That is kind of the nature of time series data: it doesn't really matter if it's weather data or stock data or bird migration data. As long as there are certain patterns to it that can be found, you can work with it in kind of an equivalent way. What I think they're showing there is something you often do with time series data: you have a certain pattern that's existed over time. So there's a certain downward trend in that, and there's some seasonality.

47:14 So maybe those might be days of the week or yeah, those look like days of the week.

47:20 So maybe things are busier on a weekend or something like that.

47:24 So given the patterns that have occurred in the past, looking at the time of day, the day of the week, the month, the year and the like, you can make projections into the future, forecasts.

47:36 And then you look at your actual data.

47:39 If your forecast is generally correct, but you have some points that are way off from your projection, that's a form of anomaly.

47:47 So that's something that's often done for outlier detection.

47:49 It's just saying, given the history of what we've seen, we would have really expected a value of 10 here, but we see a value of 38.

47:58 So there's something unusual there.

48:00 It just doesn't follow the normal seasonality and normal trends that we've seen.
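The forecast-then-compare idea described above can be sketched in a few lines of NumPy. This is not how Prophet works internally; as an assumption for illustration, it fits a linear trend plus a day-of-week effect by least squares, forecasts the next week, and flags points whose actual value lands more than `k` residual standard deviations from the forecast. The data is synthetic, and the function names and the threshold `k=3.0` are hypothetical choices.

```python
import numpy as np


def design_matrix(t: np.ndarray, period: int = 7) -> np.ndarray:
    """Intercept, linear trend, and one-hot seasonal dummies (first season dropped)."""
    season = np.eye(period)[t % period][:, 1:]
    return np.column_stack([np.ones(len(t)), t, season])


def fit_trend_plus_season(y: np.ndarray, period: int = 7):
    """Least-squares fit of y[t] ~ a + b*t + s[t % period]; returns coefficients and residual std."""
    t = np.arange(len(y))
    X = design_matrix(t, period)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid_std = float(np.std(y - X @ coef))
    return coef, resid_std


def forecast(coef: np.ndarray, start: int, n: int, period: int = 7) -> np.ndarray:
    """Project the fitted trend and seasonality forward n steps from index `start`."""
    return design_matrix(np.arange(start, start + n), period) @ coef


def flag_anomalies(actual, predicted, resid_std: float, k: float = 3.0) -> np.ndarray:
    """True where the actual value is more than k residual std devs from the forecast."""
    return np.abs(np.asarray(actual) - np.asarray(predicted)) > k * resid_std


# Synthetic daily data (hypothetical): downward trend, busier weekends, small noise.
rng = np.random.default_rng(0)
weekly = np.array([0.0, 0.0, 0.0, 0.0, 0.0, 5.0, 5.0])
t_all = np.arange(9 * 7)
y_all = 20.0 - 0.1 * t_all + weekly[t_all % 7] + rng.normal(0.0, 0.5, len(t_all))

history, next_week = y_all[:56], y_all[56:].copy()
next_week[3] += 28.0  # inject one value far above what the pattern predicts

coef, resid_std = fit_trend_plus_season(history)
predicted = forecast(coef, start=len(history), n=len(next_week))
flags = flag_anomalies(next_week, predicted, resid_std)
print(np.round(predicted, 1))
print(flags)
```

Plugging in the numbers from the conversation with an assumed residual standard deviation of 2, `flag_anomalies([38.0], [10.0], 2.0)` flags the point, because 38 is 28 away from the forecast of 10, well beyond the 3 × 2 = 6 threshold. The in-sample standard deviation is the simplest choice here; Prophet instead reports an uncertainty interval (`yhat_lower`, `yhat_upper`) that can serve the same purpose.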

48:05 - Yeah. It probably can't give you much insight as to why, but you should go look at it, right?

48:11 'Cause it's just the quantity and time, right?

48:14 It's pretty sparse data.

48:16 - You might be able to see why you made the prediction you did, because it's just based on how you... Prophet works a certain way, and there are a lot of ways to do time series forecasting, but generally you're just looking at the regular patterns, the general trend, and maybe lag features and things like that.

48:33 So you can figure out why you made the prediction you did, but as for why it actually had the value it did,

48:38 yeah, you'll probably have to go and investigate.
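To make the point about explaining a forecast concrete, here is a sketch of a tiny autoregressive model on lag features, assumed here to be yesterday's value and the value from the same day last week. This is not Prophet's method. Because the prediction is just an intercept plus a weighted sum of lag values, each lag's contribution can be read off directly. The helper names and the choice of lags 1 and 7 are hypothetical.

```python
import numpy as np


def make_lag_matrix(y: np.ndarray, lags: tuple[int, ...]):
    """Rows are time steps t >= max(lags); column j holds y[t - lags[j]]."""
    start = max(lags)
    X = np.column_stack([y[start - lag : len(y) - lag] for lag in lags])
    return X, y[start:]


def fit_lag_model(y: np.ndarray, lags: tuple[int, ...]) -> np.ndarray:
    """Least-squares fit of y[t] ~ c0 + sum_j c_j * y[t - lags[j]]."""
    X, target = make_lag_matrix(y, lags)
    X1 = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(X1, target, rcond=None)
    return coef


def explain_next(y: np.ndarray, coef: np.ndarray, lags: tuple[int, ...]):
    """Predict the step after the end of y and split it into per-lag contributions."""
    lag_values = np.array([y[len(y) - lag] for lag in lags])
    contributions = coef[1:] * lag_values
    prediction = coef[0] + contributions.sum()
    return float(prediction), {f"lag_{lag}": float(c) for lag, c in zip(lags, contributions)}


# Toy series (hypothetical): five quiet days, then a busier weekend, for ten weeks.
y = np.tile([10.0, 10.0, 10.0, 10.0, 10.0, 15.0, 15.0], 10)
lags = (1, 7)
coef = fit_lag_model(y, lags)
prediction, parts = explain_next(y, coef, lags)
print(round(prediction, 2), {k: round(v, 2) for k, v in parts.items()})
```

On this perfectly periodic toy series the fit puts all the weight on `lag_7`, so the breakdown says the forecast of about 10 comes from last week's value for the same day. What it cannot say is why a real observation then came in far from that forecast, which is the part that still needs investigating.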

48:41 Sure.

48:41 Maybe pull up some different tools and different algorithms, now that you can focus in on that.

48:45 All right, Brett, let's talk about one more thing while we're here.

48:49 What's the story with LLMs and outlier detection?

48:52 And I think there are two angles I'd like to talk about with you, just briefly.

48:57 One, can I just throw a ton of data at ChatGPT? And I'm not talking about the GPT-4 model.

49:04 I'm talking about o1, the reasoning model, the higher-end one that could potentially hold all the data.

49:09 And it's a little bit more thorough.

49:11 You can ask it, "Find me the outliers."

49:12 Like, is that a thing that might work?

49:15 And the other is, could I give it some data, describe the data, and say, okay, here's the situation.

49:21 Here's what this data means.

49:23 Recommend me algorithms and tools and libraries and even techniques to analyze it.

49:28 Like, does chat make sense for either of these?

49:30 I mean, it's going to be like a lot of things.

49:32 If you have expertise in these sorts of areas, you're going to be better off just taking advantage of that.

49:38 But like a lot of areas of data science, if you're working in an area that you don't happen to have spent years thinking about, yeah, these tools can be a good place to get started.

49:48 I've worked with them a bit.

49:49 And what I've found is, though I have to admit, I haven't used o1 yet.

49:55 I used tools that were a little bit older.

49:57 And what I was finding is that it was suggesting ways to find outliers, but in a perfectly legitimate yet limited way.

50:04 So it would suggest doing a z-score test on your numeric columns, or checking interquartile ranges and things like that, which are good ways to find outliers.

50:16 And I think it may have suggested using an isolation forest and a couple of other things.

50:21 So I think it went over the basics reasonably well.
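For readers who haven't seen them, the two basic per-column tests mentioned here look roughly like this in NumPy. The thresholds (|z| > 3, and 1.5 × IQR beyond the quartiles) are common conventions rather than settings from the episode, and the sample values are made up.

```python
import numpy as np


def zscore_outliers(x, threshold: float = 3.0) -> np.ndarray:
    """Flag values more than `threshold` standard deviations from the mean."""
    x = np.asarray(x, dtype=float)
    z = (x - x.mean()) / x.std()
    return np.abs(z) > threshold


def iqr_outliers(x, k: float = 1.5) -> np.ndarray:
    """Flag values more than k * IQR below the first quartile or above the third."""
    x = np.asarray(x, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    return (x < q1 - k * iqr) | (x > q3 + k * iqr)


# Hypothetical column: values hovering around 10, plus one 38.
values = [9, 10, 11, 10] * 4 + [9, 10, 11, 38]
print(np.flatnonzero(zscore_outliers(values)))  # → [19]
print(np.flatnonzero(iqr_outliers(values)))     # → [19]
```

One design note: the z-score uses a mean and standard deviation that the outlier itself inflates, so with very few rows an extreme value can partly mask itself. The quartile-based IQR test is less sensitive to that, which is one reason the two are often run side by side.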

50:26 I kept doing a lot of prompt engineering to try to push it towards more sophisticated analysis of the data.

50:34 And that wasn't happening.

50:35 Though, who knows, if not this version, maybe the next version of the LLMs.

50:41 I think we're heading to that point where it can start to do that.

50:45 And again, yeah, same with trying to summarize the data and find the patterns in there.

50:49 It's able to do fairly well.

50:52 It's certainly not at the level of what someone with domain expertise who really understands the data is going to be able to do.

51:00 But it can provide some rough summarization of the data.

51:03 Maybe point you in the right direction to find some tools you don't know about, and you could go use them.

51:08 I'm kind of leaning towards saying it's not going to do the best job.

51:12 But having said that, when I've tested them for outlier detection, they work a lot better than they did a year ago or so.

51:20 So it's heading in the direction where they might be able to do something quite useful.

51:23 Yeah, I've started using the o1 model, and it's a lot slower, but you just have to change your mindset.

51:28 It's not a chat conversation.

51:30 It's like I've given an intern a job and I expect them to come back to me a little later.

51:35 It's getting better and better.

51:37 It's a crazy time.

51:38 - It's remarkable.

51:39 Yeah, mixed feelings.

51:41 - I know.

51:42 Let's close this whole thing out with maybe just a final call to action.

51:47 People are interested in this topic.

51:48 They wanna do more.

51:50 Maybe another shout out to your book if you want.

51:52 And yeah, people are interested.

51:54 They wanna do more, go deeper.

51:56 What do you tell 'em?

51:56 - I would recommend the book, of course.

51:58 It's on Manning.

52:00 I really wanted to write with Manning; it's a publishing company I've always thought highly of.

52:08 Yeah, if you just go to manning.com and look for outlier detection... And I think the affiliate link will give you 45% off too, which is nice.

52:18 I think, I mean, the book has gotten quite good feedback.

52:22 One thing I was really happy with is that some of the people who are the architects of the most important algorithms in outlier detection,

52:30 things like isolation forest, local outlier factor, and extended isolation forest,

52:35 gave some really nice feedback on the book.

52:38 It's gotten quite good feedback so far.

52:41 And I think it's probably as comprehensive a book as you would need.

52:45 - I'll say it's quite comprehensive.

52:47 I was going through it.

52:48 - It covers what you would, yeah.

52:50 If you're not doing time series analysis, for example, you can probably skip the chapter on time series analysis, or the one on deep learning.

52:56 But most of it, I think, is actually... There are a lot of gotchas with outlier detection.

53:01 I mean, they're not too hard to get your head around, but you do have to think about them.

53:04 And so it does cover pretty much anything you would need to know.

53:08 I think for almost any case, you wouldn't need to look for too many other resources after reading this.

53:13 Excellent.

53:14 And PyOD is another good resource to get started with, you think?

53:17 Depending on your situation, either just scikit-learn or PyOD.

53:21 And then if you really need to go into deep learning, I would go with DeepOD, or even just PyOD.

53:26 And for time series, there's quite a number of libraries you can use as well.
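As a starting point with scikit-learn, two of the classic algorithms named in this conversation, isolation forest and local outlier factor, are available as `IsolationForest` and `LocalOutlierFactor`. This is a minimal sketch on synthetic two-dimensional data with one planted outlier; the parameter values are defaults or arbitrary choices, not recommendations from the book. PyOD offers these detectors too, behind its own interface.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor

# Hypothetical data: 200 points from a standard normal cloud, plus one planted far point.
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0.0, 1.0, size=(200, 2)), [[8.0, 8.0]]])
planted = len(X) - 1

# Isolation forest: points that random splits isolate quickly get lower scores.
iforest = IsolationForest(n_estimators=200, random_state=0).fit(X)
if_scores = iforest.score_samples(X)  # lower = more anomalous
if_labels = iforest.predict(X)        # -1 = outlier, 1 = inlier

# Local outlier factor: compares each point's local density with its neighbors'.
lof = LocalOutlierFactor(n_neighbors=20)
lof_labels = lof.fit_predict(X)            # -1 = outlier, 1 = inlier
lof_scores = lof.negative_outlier_factor_  # lower = more anomalous

print("most anomalous by isolation forest:", int(np.argmin(if_scores)))
print("most anomalous by LOF:", int(np.argmin(lof_scores)))
```

Ranking by the continuous scores rather than only the -1/1 labels is usually more useful in practice, since it lets you review the most unusual records first.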

53:30 - Okay, excellent.

53:32 Well, thank you so much for being on the show and sharing your work.

53:35 - Well, thank you very much for having me.

53:37 Yeah, that was very good.

53:37 - Yeah, you bet.

53:38 Bye now.

53:39 This has been another episode of "Talk Python To Me." Thank you to our sponsors.

53:44 Be sure to check out what they're offering.

53:46 It really helps support the show.

53:48 This episode is sponsored by Posit Connect from the makers of Shiny.

53:52 Publish, share, and deploy all of your data projects that you're creating using Python.

53:56 Streamlit, Dash, Shiny, Bokeh, FastAPI, Flask, Quarto, reports, dashboards, and APIs.

54:03 Posit Connect supports all of them.

54:05 Try Posit Connect for free by going to talkpython.fm/posit, P-O-S-I-T.

54:11 Want to level up your Python?

54:13 We have one of the largest catalogs of Python video courses over at Talk Python.

54:17 Our content ranges from true beginners to deeply advanced topics like memory and async.

54:22 And best of all, there's not a subscription in sight.

54:24 Check it out for yourself at training.talkpython.fm.

54:28 Be sure to subscribe to the show, open your favorite podcast app, and search for Python.

54:32 We should be right at the top.

54:34 You can also find the iTunes feed at /itunes, the Google Play feed at /play, and the Direct RSS feed at /rss on talkpython.fm.

54:43 We're live streaming most of our recordings these days.

54:46 If you wanna be part of the show and have your comments featured on the air, be sure to subscribe to our YouTube channel at talkpython.fm/youtube.

54:54 This is your host, Michael Kennedy.

54:56 Thanks so much for listening.

54:57 I really appreciate it.

54:58 Now get out there and write some Python code.