Data Science from the Command Line

On this episode, you'll meet Jeroen Janssens. He wrote the book Data Science at the Command Line, and it covers a bunch of fun and useful small utilities that will make your life simpler and that you can run immediately in the terminal. For example, you can query a CSV file with SQL right from the command line.

Episode Deep Dive

Guest Introduction and Background

Jeroen Janssens is a seasoned data scientist and the author of Data Science at the Command Line. Jeroen has a broad background in data, having worked with many programming languages including Python, R, and more. He’s deeply involved in the data science community, often speaking about empowering developers to leverage the terminal for efficient data workflows.

What to Know If You're New to Python

Below are a few tips to help you dive into this episode's discussion about using Python on the command line. These points will clarify the basics so you can focus on how Python interacts with tools mentioned throughout the show.

- Python Environments: Know how to create or activate a virtual environment (e.g. `venv`) so that installing Python packages (like CSVKit) won’t interfere with your system Python.

- CLI Arguments: Many Python scripts accept parameters via the command line (e.g. `sys.argv`). Familiarize yourself with passing arguments rather than hardcoding values.

- Bash vs Zsh: Mac users may see Zsh or Bash as their default shell. Just note they’re both shells, and Python commands or scripts usually work the same way under either.

- Subprocess Module: You may hear about automating tasks by calling other commands in Python. This is typically done using `subprocess.run(...)`.
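To make the point about command-line arguments concrete, here is a minimal sketch of a script that reads its inputs from `sys.argv` instead of hardcoding them. The script name `greet.py` and the function `greetings` are hypothetical, chosen only for illustration; they do not come from the episode.

```python
#!/usr/bin/env python3
"""Hypothetical greet.py: greet every name passed on the command line."""
import sys


def greetings(argv):
    """Build one greeting per argument; argv is shaped like sys.argv."""
    # argv[0] is the script name, so the real arguments start at index 1.
    names = argv[1:] or ["world"]
    return [f"Hello, {name}!" for name in names]


def main():
    for line in greetings(sys.argv):
        print(line)


if __name__ == "__main__":
    main()
```

Assuming the file is saved as `greet.py`, running `python greet.py Ada Linus` would print one greeting per name, and running it with no arguments falls back to the default. For anything beyond a couple of positional values, an argument parser such as the standard library's `argparse`, or Click or Typer, is usually easier to maintain.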

Key Points and Takeaways

- Why the Command Line for Data Science?

The command line is often seen as old-school, but it shines as a “super glue” for automating data pipelines and orchestrating small yet powerful utilities. By chaining commands (via pipes) or using scripting languages like Python, you can perform data science tasks quickly without heavy frameworks.

- Shell Customization and Productivity

Simple tweaks—like switching from the default Terminal on macOS to iTerm2 and from Bash to Zsh or Fish—can transform your productivity. The conversation highlighted the importance of short aliases, color prompts, and commands like McFly for better command history.

- Tools and Resources:

- Oh My Zsh

- FASD for quick directory and file navigation

- Oh My Posh (especially for Windows + PowerShell)

- Creating Python-powered CLI Tools

Turning Python code into CLI executables can simplify repetitive tasks or data transformations. You just add a “shebang” (`#!`) at the top and make it executable with `chmod +x myscript.py`. Coupling that with argument parsers like Click or Typer leads to maintainable command-line utilities.

- CSVKit and Data Wrangling

While Python’s pandas library is powerful, you can handle many CSV tasks with command-line utilities. The show featured CSVKit, which includes tools like `csvcut`, `csvsql`, and `csvstat` that understand the structure of CSVs.

- Tools and Resources:

- CSVKit GitHub Repo

- XSV (fast CSV tool in Rust)

- Querying CSV with SQL

One standout CSVKit tool is `csvsql`, which runs SQL queries directly on CSV data. This technique is helpful if you’re comfortable with SQL but don’t want to spin up a full database or open Python for quick data exploration.

- Tools and Resources:

- `csvsql` in CSVKit

- SQLite Docs (conceptually similar approach)
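The idea of querying CSV data with SQL can be sketched in plain Python using the standard library's `csv` and `sqlite3` modules. This is not how `csvsql` is implemented and it does not call CSVKit at all; it only illustrates the conceptually similar SQLite approach mentioned above. The helper `query_csv`, the table name `data`, and the sample rows are all made up for this example.

```python
import csv
import io
import sqlite3


def query_csv(csv_text, sql, table="data"):
    """Load CSV text into an in-memory SQLite table and run one SQL query.

    Sketch only: assumes a header row and column names without double quotes.
    """
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, records = rows[0], rows[1:]
    columns = ", ".join(f'"{name}"' for name in header)
    placeholders = ", ".join("?" for _ in header)
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(f'CREATE TABLE "{table}" ({columns})')
        conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', records)
        return conn.execute(sql).fetchall()
    finally:
        conn.close()


# Made-up sample data, standing in for a CSV file on disk.
SAMPLE = """city,visits
Amsterdam,12
Portland,7
Barcelona,3
"""

# Values are loaded as text, so the query casts before comparing numbers.
result = query_csv(
    SAMPLE,
    "SELECT city FROM data WHERE CAST(visits AS INTEGER) > 5 ORDER BY city",
)
print(result)
```

Every value is loaded as text here, which is why the query casts `visits` before comparing. A dedicated tool can infer column types for you, which is part of the appeal of doing this from the command line.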

- Parallelizing Tasks with GNU Parallel

Python’s GIL limits CPU-bound multithreading, but spinning up multiple processes with a tool like GNU Parallel is a straightforward workaround. You can process large sets of files or data chunks in parallel by chaining Python scripts or other shell commands.

- Tools and Resources:

- GNU Parallel

- Docker (to isolate workloads)
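The same "one process per input" idea can be sketched from inside Python. This is not GNU Parallel and does not use it; it is a rough standard-library analogue in which a thread pool launches several child processes at once. The child command, `CHILD_CODE`, `run_job`, and `run_in_parallel` are hypothetical names for this illustration, and the child is a tiny Python one-liner standing in for any command-line tool.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor

# Stand-in for any command-line program: a child Python process that
# prints the number of characters in its first argument.
CHILD_CODE = "import sys; print(len(sys.argv[1]))"


def run_job(arg):
    """Run one child process and return its stdout as stripped text."""
    completed = subprocess.run(
        [sys.executable, "-c", CHILD_CODE, arg],
        capture_output=True,
        text=True,
        check=True,
    )
    return completed.stdout.strip()


def run_in_parallel(args, max_workers=4):
    """Run one process per argument, several at a time, keeping input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_job, args))


outputs = run_in_parallel(["a", "bb", "ccc"])
print(outputs)
```

The threads here spend their time waiting on child processes rather than running Python bytecode, so the GIL is not the bottleneck; the real work happens in separate processes.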

- Subprocess and Polyglot Data Science

The conversation covered the `subprocess` module for calling out to other utilities from Python. You can mix languages, such as Ruby or R-based command-line programs, so long as they accept text in and out, bridging everything with Python for orchestration.

- Tools and Resources:

- subprocess.run(...) Docs

- explain shell for clarifying commands
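As a rough sketch of the "text in, text out" contract, the snippet below sends text to a child process on stdin and reads the result from stdout with `subprocess.run`. The child is a Python one-liner used purely as a stand-in for a tool written in any language; `FILTER_CODE` and `run_filter` are hypothetical names for this example.

```python
import subprocess
import sys

# Stand-in for a tool written in any language: it reads text on stdin
# and writes the upper-cased text to stdout.
FILTER_CODE = "import sys; sys.stdout.write(sys.stdin.read().upper())"


def run_filter(text):
    """Pipe text through the child process and return what it prints."""
    completed = subprocess.run(
        [sys.executable, "-c", FILTER_CODE],
        input=text,           # sent to the child's stdin
        capture_output=True,  # collect stdout and stderr
        text=True,            # work with str instead of bytes
        check=True,           # raise CalledProcessError on a non-zero exit
    )
    return completed.stdout


shouted = run_filter("data science\n")
print(shouted, end="")
```

Swapping the command list for a different executable is all it takes to orchestrate a non-Python tool, provided it reads stdin and writes stdout in the same way.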

- Jupyter Meets the Terminal

Jupyter notebooks can call shell commands by prefixing them with `!`. Meanwhile, `%%bash` cells allow multi-line shell scripts. It’s a nifty way to merge interactive Python data analysis with classic terminal commands.

- Visualizing on the Command Line

While not always the first choice, the terminal can display charts if you integrate the right tools. Jeroen mentioned using R's

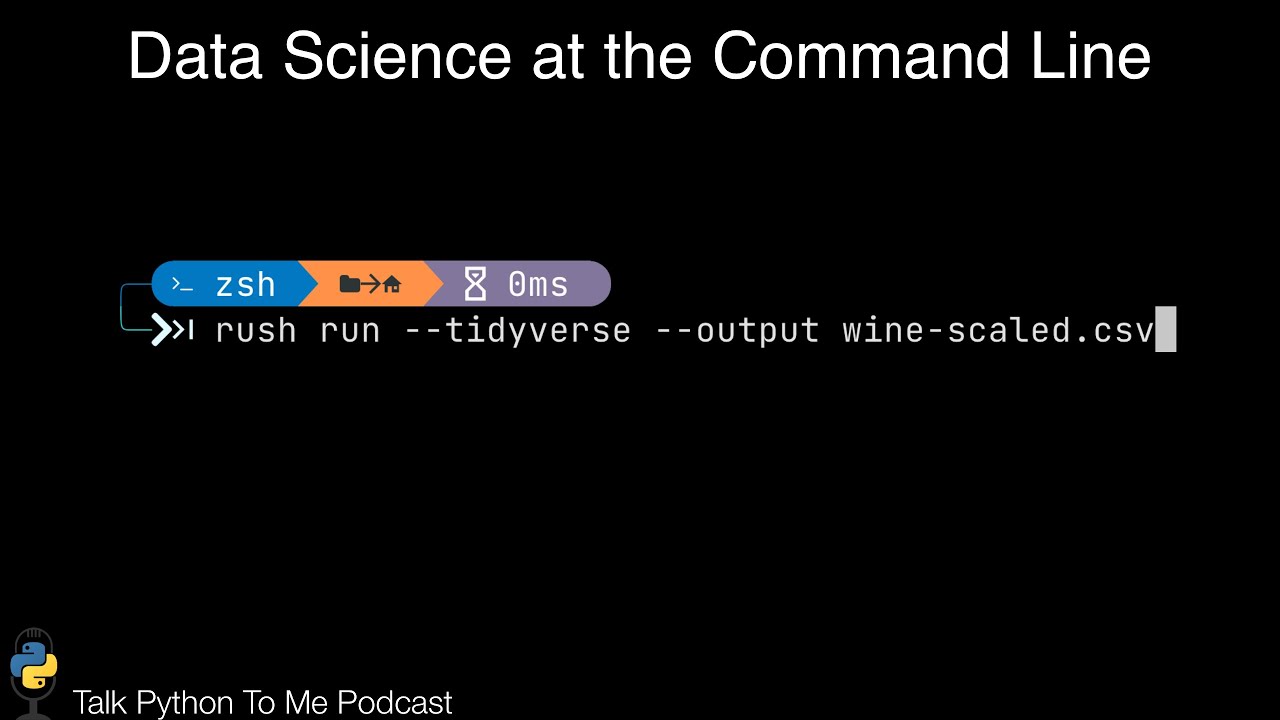

ggplot2with special backends, or bridging Python’s plotting libraries via small wrappers for quick visual checks.- Tools and Resources:

- ggplot2 in R

- Plotnine (Python port of ggplot2)

- Docker for Experimentation

Docker containers are an easy way to isolate your environment when you’re experimenting with new command-line tools. You can spin up containers preloaded with your data science tools, ensuring you don’t accidentally break your main setup.

Interesting Quotes and Stories

“I still find it an interesting juxtaposition of these two terms, data science and the command line.” – Jeroen

“It can be very efficient to just whip up a command on the command line using a couple of tools if it solves the job.” – Jeroen

Key Definitions and Terms

- Shebang (`#!`): A special line at the top of a script indicating which interpreter (e.g., `/usr/bin/env python3`) should execute the file.

- POSIX: A family of standards that define interoperability between Unix-like operating systems, shaping many shell behaviors.

- Alias: A short command (e.g., `alias ll='ls -la'`) that saves keystrokes in the shell.

- Pipe (`|`): A command-line feature that feeds the output of one command into the input of another.

- GNU Parallel: A command-line tool that executes jobs in parallel using multiple CPU cores or remote machines.

- CSVKit: A collection of utilities for cleaning, transforming, and analyzing CSV data from the terminal.

Learning Resources

If you want to go deeper into Python, data science, or bridging the gap between spreadsheets and Pythonic workflows, consider these courses from Talk Python Training:

- Python for Absolute Beginners – Ideal if you’re brand new to programming in Python.

- Move from Excel to Python with Pandas – Learn to handle CSV files and data workflows with Python instead of spreadsheets.

- Data Science Jumpstart with 10 Projects – A great primer on essential data science concepts in Python.

Overall Takeaway

Embracing the terminal can significantly boost your workflow for data analysis and beyond. By combining quick command-line utilities with Python’s flexibility, you can handle data manipulation, visualization, and automation in a more streamlined and composable way. Whether you’re just getting started with Python or you’re looking to sharpen your command-line chops, remembering that “the shell doesn’t care which language you use” opens up enormous possibilities for creativity and efficiency.

Links from the show

Jeroen on LinkedIn: linkedin.com

Jeroen's cohort-based course, Embrace the Command Line. Listeners can use coupon code TALKPYTHON20 for a 20% discount: maven.com

Data Science at the Command Line Book: datascienceatthecommandline.com

McFly Shell History Tool: github.com

Explain Shell: explainshell.com

CSVKit: csvkit.readthedocs.io

sql2csv: csvkit.readthedocs.io

pipx: github.com

PyProject.toml to add entry points: github.com

rich-cli: github.com

Typer: typer.tiangolo.com

FasD: github.com

Nerd Fonts: nerdfonts.com

Xonsh: xon.sh

iTerm: iterm2.com

Windows Terminal: microsoft.com

ohmyposh: ohmyposh.dev

ohmyz: ohmyz.sh

Watch this episode on YouTube: youtube.com

Episode #392 deep-dive: talkpython.fm/392

Episode transcripts: talkpython.fm

---== Don't be a stranger ==---

YouTube: youtube.com/@talkpython

Bluesky: @talkpython.fm

Mastodon: @talkpython@fosstodon.org

X.com: @talkpython

Michael on Bluesky: @mkennedy.codes

Michael on Mastodon: @mkennedy@fosstodon.org

Michael on X.com: @mkennedy

Episode Transcript

00:00 When you think of data science, Jupyter Notebooks and associated tools probably come to mind.

00:04 But I want to broaden your tool set a bit and encourage you to look around at other tools that

00:09 are literally at your fingertips, the Terminal and the Shell command line tools. On this episode,

00:14 you'll meet Jeroen Janssens, who wrote the book Data Science at the Command Line. And there are a bunch

00:19 of fun and useful small utilities that will make your life simpler that you can run immediately in

00:25 the terminal. For example, you can query a CSV file with SQL right on the command line.

00:30 That and much more on this episode 392 of Talk Python To Me, recorded November 28th, 2022.

00:50 Welcome to Talk Python To Me, a weekly podcast on Python. This is your host, Michael Kennedy.

00:55 Follow me on Mastodon, where I'm @mkennedy and follow the podcast using @talkpython,

01:01 both on fosstodon.org. Be careful with impersonating accounts on other instances. There are many.

01:07 Keep up with the show and listen to over seven years of past episodes at talkpython.fm.

01:12 We've started streaming most of our episodes live on YouTube. Subscribe to our YouTube channel over

01:17 at talkpython.fm/youtube to get notified about upcoming shows and be part of that episode.

01:23 This episode is sponsored by Sentry. Don't let those errors go unnoticed. Use Sentry. Get started

01:30 at talkpython.fm/sentry. And it's brought to you by Microsoft for Startups Founders Hub.

01:36 Check them out at talkpython.fm/foundershub to get early support for your startup.

01:42 Transcripts for this episode are sponsored by Assembly AI, the API platform for state-of-the-art

01:48 AI models that automatically transcribe and understand audio data at a large scale. To learn more,

01:54 visit talkpython.fm/assemblyai.

01:57 Jeroen, welcome to Talk Python To Me.

01:59 Hey, thank you. I'm very happy to be here.

02:02 I saw your book and the title was Data Science at the Command Line. I thought, okay, that's

02:08 different. You know, there's a lot of people that talk about data science tools and Jupyter

02:12 Lab's amazing. And like, if you look over the fence, like RStudio and those kinds of things.

02:17 And yet, so much of what we can kind of do and orchestrate and create as a building block

02:22 happens in the terminal. And bringing some of these data science ideas and some of these

02:28 concepts from the terminal to support data scientists, I think is a really cool idea. So

02:32 we're going to have a great time talking about it.

02:33 Yeah. Yeah. I love to talk about this. And yeah, you're right. I still find it an interesting

02:38 juxtaposition of these two terms, data science and the command line. One being, well, nowadays,

02:45 let's say 10 years old, at least the term is. And the other one, the command line is over 50 years old.

02:51 The command line is, it's ancient in computer terms, right? It's one of the absolute very first

02:58 ways of interacting with computers. You've got cards where you programmed on paper,

03:03 and then you had the shell, right? Right after that.

03:06 Exactly. Before there were any screens, really.

03:08 Yeah. When we all, when computers were green, they were all green. It was amazing. So I'm looking

03:13 forward to diving into that. And it's going to be a lot of fun. And I just want to also put out there

03:18 for people who are like, but I'm not a data scientist. So should I check out? I actually

03:22 think there's a ton of cool ideas in there from just for people who do all sorts of Python and other

03:28 types of programming. It's not just data scientists, right?

03:31 No, absolutely. And I mean, even, I mean, I don't really care much for titles, but even when you're

03:38 an engineer or a developer, you would be surprised if you really think about how much data you actually

03:45 work with. I mean, just log files on the server. Yeah.

03:49 That's data too. So there are still a lot of opportunities to use the command line, even if

03:56 you don't consider yourself to be a data scientist per se.

04:00 Yeah, I totally agree. All right. Let's start with your story. How'd you get into programming

04:04 and data science and Python? I know you do Python and R and some other things. So how'd you get into

04:10 programming? Yeah, it actually started when I was about 12 years old. We got this old computer. It

04:18 was already old by then, 286. And I opened up this program. I wanted to write a story. And I was just

04:27 typing. I was journaling. Then I got all these error messages. Turns out the program that I had opened

04:33 was QBasic. And it didn't really like what I had to say. And then I started reading the help. And then

04:41 I realized like, hey, I can make this computer do things. It just needs a particular language.

04:47 And that's really how I got into programming. Yeah. And then of course, there's a whole range of

04:53 programming languages that then come by. Visual Basic at a certain point. Pascal, Java, C.

05:01 And you know what? I've forgotten most of it. So if this sounds intimidating,

05:06 please don't worry. But yeah, nowadays, Python plays a big role in my professional career. Also R,

05:14 right? And those two happen to be the most popular programming languages for doing data science and

05:21 JavaScript, obviously, when you're doing some more front-end work.

05:24 JavaScript finds its ways into all these little cracks. You're like, why JavaScript? Come on. I was

05:29 just looking at programmable dynamic DNS as a service. And the way you program it is you, I know,

05:36 and you jam in little bits of JavaScript to make a decision on how to like route a DNS query. I'm like,

05:41 JavaScript.

05:42 Oh, yeah. I'm now using Eleventy, which is a static site generator. And ironically enough,

05:50 it uses JavaScript.

05:51 Yeah, sure.

05:52 So yeah.

05:53 That's fantastic. I've heard really good things about Eleventy. And I just started using Hugo,

05:58 which is also a static site generator, but that one's written in Go. And I just decided I care

06:03 about writing in Markdown and I want a static site. And I don't care as long as I run a command on the

06:07 terminal. Actually, I want to tell the story a little bit about sort of coordinating over the

06:12 shell for some of these static site things. But I decided I don't care if it's the guts of it are in

06:17 a language that I can program. It's a tool. If it's a good tool for me, I'm going to use it.

06:21 Okay. So that's how you got into programming. How about day to day? What are you doing these days?

06:25 Yeah. So at this very moment, as we're recording this, I have my own company called Data Science

06:32 Workshops, where I give training at companies to developers and researchers and occasionally managers.

06:39 But I have decided to stop with that. Okay. So in a couple of weeks, I'll actually join another company.

06:47 So in the past six years, I have, we can talk about how that company came about. And it's probably

06:53 related to, it's related to everything, of course. But, but I just want to say that this is actually the

06:59 very first time that I'm talking about this, but I'm going to be a machine learning engineer.

07:05 Okay. Two reasons why I decided to, to stop with, with my own company is that first of all, I really

07:11 miss working with people, working with colleagues. Yeah. And secondly, I miss building things. So

07:18 that's why I'm, I'm joining, well, January 1st, I'm joining Xomnia, a consultancy based in Amsterdam,

07:25 the Netherlands. Excellent. Well, that sounds, that sounds really fun. I also run my own company and I'd

07:31 really enjoy it, but I completely get what you're saying. It's sometimes it's nice to be with a team

07:37 and you also, it makes you learn different skills or hone different skills to show up at a client

07:43 company where they've got, you know, a million requests in an hour trying to answer something,

07:48 which machine learning versus, you know, doing some research and talking about how to improve the

07:53 shell, right? These are very, two very different jobs. And so it's, it's cool to sort of mix,

07:57 mix up the career across those. Say again? It's great to mix up your career and do,

08:01 do some of both, right? Because if all you do is work at a consultant, you'd be like,

08:04 I can't wait to start my own company and do something else. Right, right, right. And you know,

08:08 the company was just, just happened. That's actually thanks to the book that I wrote a long time ago.

08:14 I, once it was done, the first edition that is in 2014, and we're talking about data science at the

08:21 command line here, I was asked to give a workshop and I'd never given a workshop before, but I was asked by,

08:27 a games company in Barcelona to give a one day workshop. And I liked it. And I liked it so much

08:34 that I started doing this more often. Yeah. So I decided to do this full time. So I didn't choose

08:39 the company life, the startup life. Although you can't really think of it as a startup, but yeah.

08:46 Yeah, sure. Yeah. These things happen.

08:47 The independent, the independent life. That's right.

08:49 Yeah, exactly.

08:50 Yeah. Cool. All right. Well, let's talk about the terminal. People on Windows might know it as the

08:56 command prompt. Although you, as I would also recommend that people generally stay away from

09:01 the command prompt in, at least for some of these tools, but we do have the Windows terminal,

09:07 which is relatively new and much, much nicer, much, much closer to the way Mac.

09:13 Yeah. Well, there's the PowerShell, but then there's also a new Windows terminal application.

09:20 And then it can even do things like bash into Windows subsystem for Linux, right? So if you

09:26 wanted to use some of these tools, you could fire up Windows subsystem for Linux, and then you would

09:29 literally have the same tool chain because it's just Ubuntu or something.

09:32 Oh, right. Yeah. I mean, I have, I'm familiar with WSL, but I haven't tried out this new Windows.

09:39 Yeah. The new Windows terminal is pretty nice. Well, let me see if I can pull it up for everybody.

09:44 Windows terminal, but yeah, it's just in the Windows 11 store, I guess you call it. I don't

09:50 know, but it's, it's a lot closer to a lot closer to the other tools. So if you're on Windows,

09:55 you owe it to yourself to not use cmd.exe, but get this instead. So what I want to talk about just

10:02 real quickly to set the stage is I just went through a period of, oh, my computer has been

10:09 the same setup for a couple of years. It's getting crufty. I'm going to just format it,

10:13 not restore from some backup, but format it and reset up everything. So it's completely fresh and

10:19 like better. Cause I really made some mistakes when I first set it up. Now it's better, but I opened up

10:24 the terminal and it's this tiny font, dreadful white background with white, with black text. And

10:30 it has some old version of bash. And so I kind of wanted to get your thoughts on like,

10:36 what do you do to make your terminal better? Right. You probably do something. You probably

10:42 install some extras and other things to make your experience on the terminal nicer.

10:47 Yeah. I'm guessing you're on macOS then? Yes. I do macOS and I do a Linux for the servers.

10:53 And I think some combination thereof is pretty common for most of the listeners.

10:58 So for macOS, the biggest gain you get when you install iTerm. Okay. A different terminal,

11:05 right? The application that would launch your shell.

11:08 The macOS term. Yeah. The terminal emulator. I, what do they call it? The macOS terminal replacement.

11:15 Yeah. This is, I'd say the most popular one on macOS. There are others, but yeah, that's what I start

11:22 with. You mentioned the shell, which is it still bash? Is that still the default one on macOS?

11:28 Yeah. It's still bash by default. Yeah. Yeah. And I think it's an old version of bash.

11:32 So yeah, there are other shells out there. The Z shell is quite popular, largely compatible with bash.

11:40 I've heard good things about fish. Yes. Fish is good. Yeah. Yeah. Which actually it's not really

11:47 POSIX compliant as they say. So, so it's quite different from what you might get from bash or the

11:54 Z shell. But from what I've seen, the syntax, especially a looping might appeal to the Python

12:01 developer out there. It's closer to Python, but I haven't tried it myself.

12:06 There's also the Xonsh shell, "conch." Is that how you say it? xon.sh. If you're willing to give up

12:13 POSIX, then this is like literally Python in the shell. You can just type like import json and do a

12:19 for loop, right? Yeah.

12:21 I've never, I've not gone this far. I'm still, I'm on the Z shell side of things. I really like how that

12:26 works. But if you really wanted to embrace the sort of Python in the shell.

12:30 Exactly. It's this trade-off of how far do you want to go? How much do you, do you want to deviate from

12:37 what is then considered to be the default, right? Because you mentioned you also work a lot on servers.

12:44 And there you're then presented with a completely different shell, perhaps, and set of tools.

12:50 It's a trade-off. And also how much time do you really want to spend customizing this? Because

12:56 yeah, our time is precious.

12:58 Yeah. Yeah. And William out in the audience says for the, for the Windows people,

13:03 Oh My Posh, which, have you done stuff with Oh My Posh? This is also really nice.

13:09 So I guess Posh is for the, oh no, any shell. So not just the PowerShell.

13:14 Yes. This, I think it came out of the PowerShell. So the Posh part, I think it originally was for that,

13:20 but I use this with Z shell and Oh My Zsh together. And it's basically that controls my prompt, and

13:28 Oh My Zsh is like all the plugins and, you know, complete your Git branches type of thing.

13:33 But yeah, this, this is really, this is really pretty neat too. Works well for, for Windows people.

13:38 I'd say then indeed the, the, so if your terminal is one thing where you can get a lot of benefit from

13:44 customizing your prompt so that it gives you a little bit more information and context of where

13:51 you are or what your state, in which state your Git repo is in or which virtual environment you're

13:57 working, that can be helpful too, because that is something that you lose easily when you're working

14:03 at the command line is, is context.

14:05 Right. I ran this command and it's not working because actually I forgot to activate the virtual

14:10 environment. So it doesn't have the dependencies or the environmental variables that I set up in that

14:15 virtual environment. Right.

14:16 Exactly.

14:16 Let me give one more shout out for one other thing while people are thinking about making their,

14:21 their stuff better is Nerd Fonts.

14:24 I'm always eager to learn these things. There is so much out there.

14:28 So Nerd Fonts, if you're going to get like Oh My Posh and some of these other extensions that you

14:34 want to make your shell better. So many of them depend on having what are called Nerd Fonts. Because

14:40 if you look at, say, the Oh My Posh page, there's like these arrows with gaps in them. I mean,

14:46 what font could possibly have like a Git branch symbol and these like connecting arrows that have

14:53 colors and are woven and all that stuff is Nerd Fonts. So if you're going to try to run them,

14:58 download and install one of these Nerd Fonts, and then those will work. Otherwise they're like

15:02 those, I don't understand Unicode square blocks, you know, like when emojis go bad.

15:08 Oh, you still got to install individual fonts. Yeah. So, so it's kind of like you take Inconsolata or

15:13 something or some other font and it's patched with these additional.

15:17 Some, yeah, something like that. Yeah. So it does, you only need one, but you have to set your terminal

15:23 to one of these to make a choice, set it to one of them and then it'll work. But if you don't,

15:28 then you'll, you'll end up with just like these, these, a lot of these extensions don't work.

15:32 This portion of Talk Python To Me is brought to you by Sentry.

15:37 How would you like to remove a little stress from your life? Do you worry that users may be

15:42 encountering errors, slowdowns, or crashes with your app right now? Would you even know it until

15:48 they sent you that support email? How much better would it be to have the error or performance details

15:53 immediately sent to you, including the call stack and values of local variables and the active user

15:58 recorded in the report? With Sentry, this is not only possible, it's simple. In fact, we use Sentry on all

16:05 the Talk Python web properties. We've actually fixed a bug triggered by a user and had the upgrade ready

16:11 to roll out as we got the support email. And that was a great email to write back. Hey, we already saw

16:16 your error and have already rolled out the fix. Imagine their surprise. Surprise and delight your

16:22 users. Create your Sentry account at talkpython.fm/sentry. And if you sign up with the code

16:28 TALKPYTHON, all one word, it's good for two free months of Sentry's business plan, which will give

16:34 you up to 20 times as many monthly events as well as other features. Create better software, delight your

16:40 users and support the podcast. Visit talkpython.fm/sentry and use the coupon code TALKPYTHON.

16:50 Yeah. So when it comes to customizing your shell, then if you still want to talk about that.

16:55 Yeah, yeah, yeah. Let's keep going.

16:56 Right. One of the things I think everybody does most often is navigating around. So moving from one

17:04 directory to another. And it can be quite cumbersome to keep on retyping all these long and deeply nested

17:11 directories. So there are a number of solutions that can help with that. I use FASD. Okay.

17:20 So that keeps track of what you've been visiting most often, most recently. So it also, I don't think if,

17:27 I wonder if that one also allows you to set bookmarks. That's what I used to do. I would keep,

17:33 I would have this set of custom shell functions, which actually made it into a plugin,

17:40 about nine years ago into Oh My Zsh. So if you, if you have Oh My Zsh and the jump plugin is still

17:46 in there. Yeah. That's a, I see. Yep. Yep. So you can just jump around. I see.

17:52 Yeah. You would say, you would say mark, mark this directory under this alias, although it's not really

17:58 an alias, but it's like a bookmark. And then you say, okay, jump to this directory. So that really helps.

18:04 Right. So maybe the source directory for Talk Python, I would just mark it as TP. And I could say on the,

18:09 on the terminal, I could say J space TP. And it would take me this super long, complex directory,

18:15 just bam, you're there, right? Exactly. So I like it. Okay. I might need to try this out. And it comes

18:20 with Oh My Zsh? This one? No, this one, it doesn't. It's a separate tool. Okay. I believe.

18:25 Although it might even be a plugin. By now, I don't even know. It's been, it's been a long time since I

18:31 installed it, but FASD. That's what you want to look for. Okay. Very, very cool. I have one that I

18:38 use a lot called McFly. Have you, have you seen McFly? No. So it's similar. And what you do is,

18:44 you know, if you type Ctrl+R, it'll give you reverse incremental search or whatever that is.

18:49 And I'm, so this overrides that. So if you type Ctrl+R, it brings up an Emacs-like autocomplete

18:55 type thing that has fuzzy searching. So you could type SSH and then like part of a domain name,

19:01 and it would find you type SSH, you know, root at some that, that, that, that domain name. And it'll,

19:07 it'll give you a list of like all these smart options looking through your history.

19:10 Yeah. Yeah. That's amazing. And even now, as we talk, I've learned like a dozen new things.

19:15 One thing I have noticed though, is that, you know, you may, the next time you're installing,

19:21 you're setting up your system, you may feel very productive and, and lead like,

19:27 right. When you're installing all these tools, but you still got to make use of them, right?

19:32 Yeah.

19:32 You got to turn that into some kind of a habit. And what I have noticed for me, at least what works

19:38 best is to just take it one tool at a time, make a little cheat sheet for yourself on a piece of paper,

19:45 and just see if that, if you like that tool, if you can, you know, if you get any benefit from it.

19:50 Yeah, absolutely. So related to this actually is, is the concept of aliases, right? In a more generic

19:56 sense, in the pure shell sense that you can define an alias that would then be expanded into some command

20:04 with zero or more arguments. Yeah. So if you have, if you have commands that you would often type like

20:11 ls for listing your, your files, and you have all these arguments that you don't want to keep on

20:17 typing, then aliases is the way to go. I go crazy with aliases. I absolutely love this. Yeah, I have

20:24 probably 100, 150 aliases in my RC file. Oh, that's nice. Yeah, that's nice. So at some point,

20:31 I, what you, well, what you may have done is go through, through your history and then see how often

20:36 you use these aliases. Yeah. That's always a fun thing to do. Yeah. For me, it's kind of frustration.

20:42 I'm like, God, I want to do this. Or, you know, I've got to remember, I got to type, oh no, I got

20:46 to go into this directory. And then I got to first type this command, and then I can do this other

20:51 thing. So for example, we talked about the static site generators. So one of the things I have to do

20:56 in order to create new content and see how it looks in the browser is I have to go to a certain directory,

21:01 directory, not where the content is, but a couple up, run hugo -D server there, and then it'll

21:08 auto reload. And as I edit the markdown, it'll just refresh. So instead of always remembering how to find

21:14 that directory and then go into the right sort of parent directory and run it, I just now just type

21:18 Hugo right. And that's an alias. And just, it does that. Boom. It just, it just pops open and it's okay.

21:23 It's, it's running. I do my thing. I'm going to, then I got to do a whole bunch of automation in Python

21:28 on top of it and then build it and ship it to the Git and push it for a continuous deployment.

21:33 Now I have just Hugo publish. Boom. And these are all like aliases. The other thing you talked about,

21:38 a single commands is maybe talk about chaining commands and multiple commands.

21:42 Yeah. Because you just mentioned automation in Python. And then I, of course, immediately go like,

21:47 hmm, what's going on there?

21:49 Yeah. So I've got a couple of, I guess they're Go commands because they're Hugo. And then I've got

21:56 some Python code that generates a tag cloud and then a Git command that'll publish it. So it's like,

22:00 Hugo, Hugo, Git. No. Hugo, Git. Hugo, Python, Hugo, Git is that all in one alias, right? Which is,

22:09 is beautiful. Oh, nice.

22:10 Yeah. It's beautiful. You know, I don't know if we've exactly, I guess I opened a little bit talking

22:14 about your book, but one of the really core ideas of your book is that the shell can be the integration

22:19 environment across technologies like Go, Python, and Git.

22:24 Exactly. Exactly. The command line doesn't care in what language something has been written. It's like a

22:31 super glue or duct tape, more really, that binds everything together.

22:36 Yeah.

22:37 Yeah. To a certain extent, right? Like duct tape.

22:40 Yeah. Well, it's, it's a, you know, loosely bound, but it's, there's a ton of flexibility in there. And

22:45 if you think, well, I really just want to do these four things, maybe that would be a macro in Excel,

22:50 or some kind of like scripting replay in Windows. But this is, it's on the terminal programs can run

22:58 it. You can run it. It's clearly editable. It's not some weird specific type of macro, right? You're

23:03 like, I want to do these four things. I just type the thing and go. I'm sure many people know,

23:07 but if you have multiple commands, you want to run one then the other, you can just say

23:10 ampersand, ampersand between them. And it'll say, run this first thing, then run the other.

23:14 Those are independent. You can also pipe inputs and outputs between them. Right. I see that.

23:19 That's correct.

23:20 You've got some really interesting ways to do that multi-line stuff in your book as well.

23:24 Yeah. Well, yeah. So it depends on what kind of tools you want to combine, right? So you,

23:29 you just mentioned a double ampersand. So that should be used when you only want to run the

23:35 second command when the first one has succeeded, right? If you want to run the second one, regardless

23:41 of what the first one did, you can just use a semicolon. Or if you only want to run the second

23:46 command when the first one failed, there might be a situation where you want to do that. You can use

23:52 double pipe, so ||. Interesting. Okay. And then, yeah. And then you just mentioned piping

23:59 and that's, well, a whole nother story. That's when you want to use the output from the first command

24:07 as input to the second command. And this is where data, again, comes into play. And this is, so you just

24:15 also mentioned macros, right? Another way to think of them is as functions that you then combine.
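
As an illustration of the four ways of combining commands described above, here is a minimal Python sketch that mimics `&&`, `;`, `||`, and `|` with `subprocess`. The helper names (`run`, `and_then`, `sequence`, `or_else`, `pipe`) are made up for this example, and the Python one-liners only stand in for real commands. It sketches the semantics, not how a shell implements them; in particular, a real pipe runs both commands at the same time, while this version runs them one after the other.

```python
import subprocess
import sys

PY = sys.executable  # the current interpreter, used as a portable stand-in command

OK = "print('ok')"                # a "command" that exits with status 0 (success)
FAIL = "import sys; sys.exit(1)"  # a "command" that exits with status 1 (failure)


def run(code: str) -> int:
    """Run a Python one-liner as a separate process and return its exit status."""
    return subprocess.run([PY, "-c", code]).returncode


def and_then(first: str, second: str) -> int:
    """first && second: run second only if first succeeded."""
    status = run(first)
    return run(second) if status == 0 else status


def sequence(first: str, second: str) -> int:
    """first ; second: run second regardless of what first did."""
    run(first)
    return run(second)


def or_else(first: str, second: str) -> int:
    """first || second: run second only if first failed."""
    status = run(first)
    return status if status == 0 else run(second)


def pipe(first: str, second: str) -> str:
    """first | second: feed the standard output of first into the standard input of second."""
    producer = subprocess.run([PY, "-c", first], capture_output=True, text=True)
    consumer = subprocess.run(
        [PY, "-c", second], input=producer.stdout, capture_output=True, text=True
    )
    return consumer.stdout


word_counter = "import sys; print(len(sys.stdin.read().split()))"
print(pipe("print('one two three')", word_counter).strip())
```

The only thing the three chaining helpers look at is the exit status of the first command, which is also all the shell looks at.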

24:23 Yeah. Incredibly powerful, but that goes a little bit beyond. Then, of course, you should be working with

24:29 commands that produce some text that you want to then further work on. Yeah. You also talk about

24:36 creating bash scripts, which is pretty interesting. I think many people probably know about that or shell

24:40 scripts, .sh files. I guess it could be zshell scripts as well. Yeah. So you gave an interesting

24:46 presentation back at the Strata conference and you had a lot of fun ideas that I think are relevant

24:53 here. So maybe let me just throw out some one-liners and you could maybe riff on that a little bit.

24:57 Okay. Yeah. Yeah. Sure. You said you gave 50 reasons that the shell was awesome.

25:02 And I want to just point out a couple, highlight a couple, let you speak to them. So you said the

25:07 shell is like a REPL that lets you just play with your data. We know the REPL from Python and also

25:13 from Jupyter, but I never really thought of the shell as a REPL, but it kind of is, right?

25:16 Yeah. I think that the shell is the mother of all REPLs. The read, eval, print loop.

25:22 Yep. Right? Having this short feedback loop of doing things and seeing output and then elaborating on

25:29 that, I think that is tremendously valuable. And Python users, of course, may recognize this from

25:36 Python itself, right? If you just execute Python, you get a REPL, IPython or Jupyter console. And to a

25:42 certain extent also Jupyter notebook or JupyterLab; there are some similarities there where you again

25:49 have this quick feedback loop. And it's a very different experience from writing a script from top to

25:54 bottom or starting at the top and then executing that script from the start every time you want to test

26:01 something. So yeah, it's a different way of working. And I'm not saying one is better than

26:07 the other. But what I do want to say is that there are situations where having such a tight feedback loop can

26:14 be very efficient. Yeah. Especially in the exploration stage, right? Yeah, exactly. Once you go to production,

26:21 right? Once you whatever that means, right? Once you want things to be a bit more stable,

26:26 you don't want to just use duct tape, but you want to use a proper construction. Then, then yeah,

26:34 then, of course, the command line can have different roles there. Yeah. Yeah. But it's kind of the,

26:39 the RAD GUI, the rapid application development GUI, but for data exploration, right? These,

26:45 these REPLs and you know, that's, that's probably why Jupyter is so popular. It just lets you play and see and

26:50 then try. And that quick feedback loop is amazing. Another reason you said

26:54 it's awesome: it's close to the file system. Yeah. I mean, in the end, it's all files,

26:57 right? Whether you're producing code that lives somewhere, it's in a file, or whether you're working

27:03 with images or log files that get written to something, or you have some configuration, it's all

27:09 files. And we got to do things with these files. We have to move them around. We have to rename them,

27:15 delete them, put them into Git. Yeah. So you want to be close to your file system. You don't want to be

27:22 importing a whole bunch of libraries before you can start doing things with these files.

27:28 Also, when you're doing data science, often it starts with this kind of ingest and understanding

27:34 files, right? CSV or text or others. Yeah. I mean, I sometimes try to immediately do read CSV in Pandas,

27:44 but then, you know, very often I get some Unicode error, or it turns out the comma

27:52 is not the delimiter being used. And yeah, you can do that in a sort of trial and error

27:59 way. You can fix that. But it really helps to just be able to look at a file as it is, no parsing,

28:06 just boom, there's my file. And then, yep, once you're comfortable, once you're confident, like,

28:12 okay, this is what my file looks like. This is its structure. Then of course, you can always move on

28:18 to using some other package like Pandas. Okay. Another one that you've said,

28:23 another recommendation you had or sort of way for playing with this was to use Docker. I don't know

28:29 how many people out there who haven't done this before are really familiar, but basically when you start

28:34 up a Docker image, you might say -it bash or zsh. And what you get is just a basic shell

28:41 inside the Docker container. But in that space, then you can kind of go crazy and do whatever you want

28:46 to the shell and try it out, right? Exactly. Yeah. So there are two scenarios that I can think of. So

28:52 when you're just starting out with the command line, it's a very intimidating environment. And it's quite

28:57 easy to wreck your system if you're not careful. So being inside an isolated environment that

29:04 is sort of shielded off from your host operating system can be comforting. So that's one reason

29:11 why I think you should use Docker. And the other one is reproducibility. Also in

29:17 Python, right, we're dealing with packages that get updated, that get different version numbers,

29:23 where APIs change. And being able to reproduce a certain environment so that you get consistent results

29:33 is also very valuable. Yeah. And I'd like to sort of highlight the converse as well. You said playing

29:40 with Docker containers is a cool way to experiment with the shell. If you care about Docker containers,

29:45 you need to know the shell to do things to them. Because you might think, oh, I'm just going to make a Dockerfile.

29:49 I don't need to know the shell. But what goes in the Dockerfile? A whole bunch of commands,

29:54 many of which look exactly like what you would run on the shell. You just put it in a certain location

30:00 or as a command argument to some configuration thing in there. And so you really, if you're going to do

30:05 things with containers, the way you speak to them is mostly through shell-like commands.

31:48 So one of the cool tools that you had in that presentation was you talked about explainshell.com.

32:12 Yeah. What is this?

32:13 Well, you can try out. So what you see here on the screen is explainshell.com and it will break down

32:21 a long command and start explaining. So it will, it will, what I think the authors have done is they have

32:30 used all these manual pages and extracted bits and pieces that they then present to you in a, in an order

32:37 that corresponds to the command that you're pasting into this. So if you see, you know, on Stack Overflow,

32:42 you see this, this incantation and you're like, all right, what does it mean? And you don't want to go through the manual page yourself.

32:50 Right. Okay. So what does -f mean? What does this xzvf for the tar command mean?

32:58 Then explainshell can do this trick for you.

33:00 Yeah. It's amazing. When I first saw it, I thought, okay, well, what this is going to be is this

33:04 is going to be like the man page. So if you type ls, it'll show you a simple list directory contents and

33:10 you click on it, it'll give you additional arguments you can pass. But you could then say, like you said,

33:16 you could say -l and it'll say the ls means list contents. The -l means use the long listing format.

33:22 And you're like, oh, okay, hold on. What if I said git checkout main? And it'll say, okay,

33:28 well, git checkout does this. And then main, it'll actually parse it apart. And there's some really

33:33 wild examples on here that like right on the page that are highlighted on the homepage of that site.

33:39 You click it and boom, it gives you this cool graph of like, what the heck? It even shows like

33:44 the double ampersand and the double-or combining, as you mentioned before.

33:49 Yeah, it is. It is. It's really useful, especially when you're just, you know,

33:55 getting started with the command line and you're overwhelmed, like we all are in the beginning,

33:59 and sometimes still are, then, you know, adding some context like this really helps.

34:04 I once wrote a utility that allowed you to use explainshell.com from the command line. So you would

34:10 just, you wouldn't leave the command line. I don't think it works any longer. But yeah, that was a fun

34:16 exercise. Yeah. Oh, yeah. Very neat. One of the things that I learned was parallel.

34:23 Oh, yeah. So tell us about parallel. Like this is a command you can run on the terminal. And it

34:30 sounds like it does stuff in parallel. That sounds amazing.

34:32 Yeah. Yeah. Like the name implies, parallel is a tool. And we're talking about GNU parallel here.

34:37 There's another version out there that is similar, but different. GNU parallel is a tool that

34:44 doesn't do anything by itself, but it multiplies. It's a force multiplier for all the other tools.

34:50 So what this tool is able to do is parallelize your pipeline. It will be able to run jobs on

35:00 multiple cores and even distribute them to other machines if you have those available. Right. So,

35:07 Michael, you mentioned you're working on a server. Well, if you can SSH into other servers as well,

35:15 you can leverage those. That's something that GNU parallel can do. The way it works is that you feed

35:20 it a list of something. Could be a list of file names. Could be a list of URLs. Could be your log files.

35:27 If you can then think of the problem that you want to solve. If you can break it down into smaller chunks,

35:34 then GNU parallel might be able to help you out there. So these jobs should be working independently

35:41 from each other. Yeah. There can be, yeah, it's nearly impossible to have those two jobs communicate

35:47 with each other. But let's say you have for your blog, right? In Hugo, you have a whole bunch of

35:53 PNG files that you want to convert to JPEGs. WebP or something. Yeah, sure. Yeah. I mean,

35:58 it's a bad example because this particular tool that I would then use already supports doing multiple

36:04 files. But let's just assume that this tool can only handle one file at a time. Yeah. Then you would

36:11 specify your command and then at certain places where necessary use placeholders. Yeah. So,

36:17 okay, this is where the file name goes and this is where the file name goes with a new

36:21 extension. So it's one of my favorite tools really. Yeah. That's fantastic. So for example, if you had

36:27 a bunch of web pages and you wanted to compute the sentiment analysis, right, as a data scientist,

36:32 you want to download it, compute the sentiment analysis, and then save that to a CSV or append it to a CSV.

36:38 Yeah. You know, maybe somebody gave you that script and it's only written to talk to one thing and you

36:43 don't want to rewrite it or touch it or get involved with it, right? This is your way to unlock the

36:48 parallelism or something, right? Yeah. In fact, let's talk a little bit more about this because I think

36:52 this is an important point in that I'm sure that we've all come across when we're working in Python

36:57 and you're thinking like, okay, I can speed this up. I want to do things in parallel. You know what?

37:03 I'm going to do multi-threading or what is it that you use these days in Python?

37:08 Yeah. Async and await maybe if it's I/O or something like that. Yeah.

37:11 You've got your pool of workers or I don't know. Basically, you're programming it yourself from the

37:16 ground up. Right. Multi-processing potentially.

37:18 Probably the closest. Right. Right. Right. Right. Right.

37:21 The trick then is to realize that there is already a tool out there that can do that for you. All that you

37:27 need to do is make sure that your Python code becomes a command line tool. Yeah.

37:33 And we can talk a little bit more about that, but there are just five, six steps needed to make that

37:38 happen. Once you realize that, then you can start turning existing Python code into command line tools

37:44 and start combining it with all the other tools that are already available, including parallel.

37:49 Yeah. It's awesome. I think it's a really cool idea because maybe the person working with the code

37:55 doesn't understand multi-processing and thread synchronization and all these tricky concepts.

38:01 Like, just give me a thing that does it once with command line arguments and I've got it.

38:05 You know, you picked this up from somewhere out in the audience. The question

38:10 is, is there a GIL associated with this? And I mean, technically yes, but it's not interfering with the

38:16 computation because it's multiple processes. It's not threads within a process. Right. So it should be

38:21 able to just run. Yeah. There will be one GIL per Python process. Right. Yeah. That's right. And so it

38:28 doesn't matter because if you say there's five jobs, you have five processes, right? There's no contention there.
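
To make the idea concrete, here is a rough Python sketch of what a tool like GNU parallel does conceptually: take a command with a `{}` placeholder, fill in one input item per job, and run each job as its own process. This is not how GNU parallel is implemented and it covers only a sliver of what it can do. The names `run_job`, `run_parallel`, and `template` are invented for the example, and the tiny uppercase "tool" stands in for a script that can only handle one file at a time.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def run_job(template: list[str], item: str) -> str:
    """Fill the {} placeholder with one input item and run the command as its own process."""
    command = [part.replace("{}", item) for part in template]
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return result.stdout.strip()


def run_parallel(template: list[str], items: list[str], jobs: int = 4) -> list[str]:
    """Run one job per item, at most `jobs` at a time, and return outputs in input order."""
    # The threads only wait on child processes. Each child is a separate Python
    # process with its own GIL, so the jobs do not contend with each other.
    with ThreadPoolExecutor(max_workers=jobs) as pool:
        return list(pool.map(lambda item: run_job(template, item), items))


# Stand-in for a tool that handles exactly one input per invocation.
template = [sys.executable, "-c", "import sys; print(sys.argv[1].upper())", "{}"]

print(run_parallel(template, ["a.png", "b.png", "c.png"]))
```

The script being run needs no knowledge of threads or processes; it only has to accept its input as a command line argument.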

38:33 Yeah. Yeah, absolutely. All right. Oh, so yeah. Let's talk a little bit about this idea of turning Python

38:40 scripts into command line tools. Yeah. I think that that's really valuable for people. It is. And

38:45 we can then put it in the show notes. I might have already given a talk about this. I'm actually not

38:51 sure if it's publicly available. Anyway, there are only a couple of steps and it's not that difficult.

38:58 So first of all, let's assume that you have some Python code out there. Yeah. You have it in a

39:03 file and let's just for simplicity's sake, assume it's a single file. Right. So what would you then

39:10 need to do to turn this into a command line tool, something that can be run on the command line. So

39:16 the way that you can currently run this is by saying, okay, Python, and then the name of the file,

39:21 right? That doesn't really sound like it's a command line tool. So the very first thing here then is to

39:28 add one line at the very top that would then start with a hash and an exclamation mark or a

39:35 hash bang or a shebang as it's called. These are two special characters and they instruct the shell:

39:43 This can be executed. What is the binary that's going to do the executing? Right. Yeah.

39:47 Exactly. That's what then would come after that. So you would have hash bang,

39:53 and then it would point to the Python executable. Yeah. Right. There's some details there.

39:58 It could be a certain version. It could be out of a virtual environment, potentially. And I think it

40:02 could go wherever, right? You don't want to overcomplicate it probably, but like you could point

40:06 to, you could point to different versions of Python, because you give it a full path to the

40:11 executable. Exactly. Exactly. There's some compatibility issues there, but essentially

40:17 you tell your shell, okay, which program should interpret my code. And that is some Python out

40:23 there that you have installed. So that's the first step. Then after you've done this, you no longer

40:29 need to type Python anymore because the file itself contains which executable should be, should be run.

40:36 But then you'll notice that you don't have the necessary permissions. What you need to do is you

40:40 need to enable the execution bit. This would give you as the user permission to actually execute this file.

40:48 You do that, of course, with a command line tool. It's called chmod, C-H-M-O-D, for change mode. And then

40:56 u+x, the name of the file, right? These details are, if you're really interested, one place where you can

41:01 find them is in chapter four of my book, Data Science at the Command Line, which you can read for free.
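
The first two steps can be sketched in Python itself, assuming a POSIX system: write a file that starts with a shebang line, then turn on the user's execute bit, which is what `chmod u+x` does. The function name `make_tool` and the file name `hello` are made up for this illustration, and the shebang here simply points at whichever Python is running the sketch.

```python
import stat
import sys
import tempfile
from pathlib import Path


def make_tool(path: Path, body: str) -> Path:
    """Write a script with a shebang line and enable the owner's execute bit."""
    # Step 1: the shebang names the interpreter that should run this file.
    path.write_text(f"#!{sys.executable}\n{body}")
    # Step 2: same effect as `chmod u+x`: add owner-execute to the existing mode.
    path.chmod(path.stat().st_mode | stat.S_IXUSR)
    return path


workdir = Path(tempfile.mkdtemp())
tool = make_tool(workdir / "hello", "print('hello from a command line tool')\n")

print(tool.read_text().splitlines()[0])         # the shebang line
print(bool(tool.stat().st_mode & stat.S_IXUSR))  # whether the execute bit is set
```

A common alternative to a full interpreter path is `#!/usr/bin/env python3`, which picks up whichever `python3` comes first on the search path.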

41:06 Okay. But let's say that you've enabled the execution bit. Now you can,

41:11 now you can run it. You would still need to type period and a slash because this file is presumably

41:20 not yet on your search path. So your search path is a, is a list of directories where your shell will be

41:27 looking for the executable that you want to run. Where is your tool located? Well, it should be

41:32 somewhere on the search path. So either you add another path to the search path or you move the tool

41:39 to one of the existing directories out there. That's about it for making your code executable. But then you

41:46 want to change one or two things about the code itself. Yeah. So one thing to do is look for any

41:53 hard coded values that you actually want to be, want to make dynamic, right? These should be turned

42:01 into command line arguments. And actually you can take that one step further. If one portion of your file

42:07 is doing something that can be done by another command line tool, then consider removing that. For example,

42:14 downloading a file. Yeah. There is of course a tool for that on the command line. Why would you then

42:20 write this yourself? Of course, there's a time and a place for that, but let's say, okay, a very

42:25 contrived example is a Python program that would count words. Yeah. Right. Right. If your code has some

42:33 hard-coded website, you would make your tool more generic by getting rid of that hard-coded

42:39 URL and turning it into a command line argument. Okay. Which website would you like to

42:44 download? Or to go one step further is to think, okay, you know what? I don't really care where the

42:50 text is coming from. I just want to count words. Give me text somehow. Yeah. Sorry. Just give me the

42:56 text. Don't tell me the URL. Yeah. So your tool should then be reading from standard input, which is a special

43:03 channel from which you can receive data. And this is also where the piping would come in. Yeah. So you

43:09 would first use a tool that would get this text, right? Maybe it's some log file. So you want to count

43:15 your errors or it's another website and you want to do stuff to that. It doesn't really matter, but

43:22 that tool would then write to its standard output. Yeah. And you would combine the standard output from

43:28 the first tool with your standard input using the pipe operator. So that's then basically it for,

43:34 I mean, of course, if you want to take this further, you can think about, you know, adding some help,

43:40 some nice looking help. Yeah. Think about the arguments themselves. Do you want to use short options or long

43:46 options? Exactly. Right. So something like Typer or Click or one of these formal CLI frameworks. Yeah.

43:53 Probably really. Python, of course, has argparse, but there are packages out there that can really help

44:00 you build beautiful command line tools. Typer is one of them. I'm currently using Click. Also,

44:07 Click in combination with Rich. Mm-hmm. So of course, the author of Rich was on the show a couple of episodes ago.
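
The word-counting tool described above can be sketched with only the standard library: text comes from standard input, a former hard-coded value becomes an option with a short and a long form, and `argparse` supplies the help text. The option name `--top` and the function names are choices made for this example, not something from the episode.

```python
import argparse
import io
import sys
from collections import Counter


def count_words(text: str, top: int) -> list[tuple[str, int]]:
    """Return the `top` most frequent whitespace-separated words, lowercased."""
    return Counter(text.lower().split()).most_common(top)


def main(argv=None, stdin=sys.stdin) -> None:
    parser = argparse.ArgumentParser(description="Count words read from standard input.")
    # What used to be a hard-coded value is now a short (-n) and long (--top) option.
    parser.add_argument("-n", "--top", type=int, default=10, help="how many words to show")
    args = parser.parse_args(argv)
    # The tool does not care where the text comes from; it just reads standard input.
    for word, count in count_words(stdin.read(), args.top):
        print(f"{count}\t{word}")


# As an executable script, the last line would simply be `main()`.
# Here it is called with explicit arguments and text so the sketch runs anywhere.
main(["--top", "2"], io.StringIO("the cat and the hat and the bat"))
```

Saved as an executable file, a tool like this would sit at the end of a pipe, with a downloader or a log reader writing to its standard input.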

44:15 Yeah. Will McGugan. Very good stuff. Yeah. While we're talking about that, you know,

44:20 the other thing that's really pretty interesting is the Rich CLI. Have you played with Rich CLI?

44:25 Which, oh, oh yeah. Okay. So that's indeed a command line tool in itself that can do a whole bunch of

44:31 things. Yeah. You want to tell us something about that? No, I haven't done much with it,

44:34 but you can do things like if you install the Rich CLI, then you can say things, there's lots of ways to

44:41 install it. You could say like Rich and then a Python file or a JavaScript file or a JSON file,

44:46 and it'll give you pretty printed color, you know, syntax highlighted printout. You can say,

44:51 rich, some CSV file, and it'll give you a formatted table inside your terminal with colors and everything.

44:57 It understands Markdown and, like, renders Markdown. And there's all sorts. So if you're kind of

45:03 exploring files and you're happy with Python things, then installing the Rich CLI is a pretty neat way

45:09 to go as well. Yeah. It's a nifty tool, but just not to get confused. So this tool is provided by Rich

45:16 and it uses Rich to produce, you know, nice looking output. But just imagine that you can write your own

45:22 command line tools that would also produce this nice looking output. And for that, you can then use

45:28 this package called Rich. Right. In combination, perhaps with things like Typer or Click. And

45:34 docopt is another way you can go. There are so many tools out there. Yeah, there absolutely are. One other

45:42 thing I would like to point out that, so just taking the script and making it executable and put it in the

45:47 path, that's kind of a great way to take scripts that you have and make them CLI commands for you.

45:54 If you want to like formalize this a little bit more, I recently ran across this project called

45:59 the Twitter Archive Parser. And I don't know if you've noticed, but there's a lot of turmoil at Twitter.

46:04 And so what you can do is you go to Twitter and download your entire history of like thousands of

46:10 tweets or whatever as an HTML file and some JSON files, and you can save them for yourself.

46:16 But the content of, like, all of the links is the shortened t.co Twitter short links. And if

46:25 Twitter were to go away, you'd have no idea what any of your links you've ever mentioned ever were.

46:29 And also the images that you get are the low res images, and you can get the high res images if you

46:34 know how to download them. So this guy, Tim Hutton, created this really cool utility where you can

46:39 take that downloaded archive and upgrade it to standalone with high res images and

46:45 fully resolved links, not shortened links. Pretty cool, right? But if you look at the way to like,

46:50 how do you use it? Okay, where does it say this? Not sure where it is. Yeah. So how do I use it? I

46:55 download my Twitter archive and unzip it fine. And then I download the Python file to the working

47:01 directory. And then I go in there and I type Python that file. Wouldn't it be better if I could just,

47:08 you know, it has dependencies that it has to install in order for it to run? Wouldn't it be better if I

47:12 could just use this as a command? So what I did is I forked this. And I said, I'm going to add a

47:17 pyproject.toml to turn this into a package. And then under the pyproject.toml, you say project.scripts,

47:25 Twitter archive markdown, Twitter archive images, and you, you map into your package and then functions

47:30 that you want to call. And then once you pip install this, these commands become just CLI commands.
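
A sketch of what that mapping points at, using invented names throughout: the package `twitter_archive`, the module `cli`, and the two functions are hypothetical and are not taken from the actual project. The `[project.scripts]` table shown in the comment maps a command name to `package.module:function`, and installing the package generates the commands.

```python
# Hypothetical module: twitter_archive/cli.py
#
# The matching table in pyproject.toml would look roughly like this:
#
#   [project.scripts]
#   twitter-archive-markdown = "twitter_archive.cli:markdown"
#   twitter-archive-images = "twitter_archive.cli:images"
#
# After `pip install`, each name on the left becomes a command that calls
# the function on the right.


def markdown() -> int:
    """Entry point for the hypothetical twitter-archive-markdown command."""
    print("would convert the archive to Markdown here")
    return 0  # the generated command uses the return value as its exit status


def images() -> int:
    """Entry point for the hypothetical twitter-archive-images command."""
    print("would download the high res images here")
    return 0


print(markdown(), images())
```

Entry point functions take no arguments, so a real tool would read `sys.argv` or use a parser such as `argparse` inside them.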

47:36 And it doesn't matter how that happened, as long as your Python packages are in the path, which they

47:41 generally have to be anyway, because you want to do things like pytest and black, then if you just

47:45 pip install this project, it adopts all these commands here, which is pretty cool. Nice. Is it

47:50 then necessary to add this bin directory once to your search path? Because it lives, it would live

47:58 somewhere on their site packages, right? Yes, exactly. And so if you have a Python installation and you try

48:04 to pip install something, you'll get a warning that the site packages are not in the path. So you do have

48:08 to do that. And then go one further, you could do pipx. I don't know if you played with pipx. pipx is

48:15 awesome. So it'll generate the package environments and install the dependencies in an isolated

48:20 environment. And it'll set up the path if you just say ensurepath. So then if you pipx install the

48:24 thing with the commands in it, those automatically get managed and upgraded by pipx as just part of

48:31 your CLI, which like that's a perfect chain of like a four, but you've got to have a formal package

48:36 and like a place to install it from like Git or PyPI or whatever. But it's still, it's still a,

48:41 like a neat pro level type of thing. I think. Yeah. Yeah. You can take this pretty far,

48:45 make it really professional. And before you know it, you start maintaining it for.

48:50 Yeah, exactly. Why am I doing PRs on this silly thing? I don't know.

48:54 Yeah. But just to clarify, if you say for a one off or a two off, you want to make something that is

49:01 reproducible, right? So a reusable command line tool, not reproducible, reusable. You don't really need

49:07 any other packages. You can use a sys.argv, right? You import sys and then you have your sys.argv.

49:15 And I do that. I do that a fair amount of times. Yeah. It's only for me, I've created an alias. So

49:21 it always gets the right argument. There's like, there's no ambiguity. Sys.argv bracket one, let's go.
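
For that one-off case, a minimal sketch with no packages beyond the standard library might look like this. The tool name `greet` and the function `main` are placeholders for the example.

```python
import sys


def main(argv: list[str]) -> str:
    """Use the first command line argument directly, with a tiny usage check."""
    # argv[0] is the script name; argv[1] is the first real argument.
    if len(argv) < 2:
        return "usage: greet NAME"
    return f"hello, {argv[1]}"


# In a real script this would be print(main(sys.argv)).
print(main(["greet", "world"]))
```

This trades away help text and validation for brevity, which is reasonable when an alias always supplies the right argument.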

49:26 Exactly. Yeah. Yeah. We've talked a lot about sort of around all the cool things we can do with

49:31 the command line. But in your book, you actually talked about a bunch of surprising tools. So like,

49:37 one of the things you talked about is obtaining data and you hinted at this before, like you can

49:41 just use curl for downloading those kinds of things. But if you get a little bit farther,

49:46 like under scrubbing data, you talk about grep and awk that a lot of people maybe know.

49:52 But then if we go a tad further over to say exploring data, then all of a sudden you can

49:57 type things like head of some CSV file and it kind of does the same thing as Jupyter. Or there's things

50:05 like csvcut and sql2csv, csvsql. Talk about some of these maybe more direct data science tools that

50:13 people can use. Right. So let's see then where to begin. You mentioned a couple of tools, right?

50:20 The head and awk and grep. Those are, you know, I would consider them the classic command line tools,

50:28 right? I would too. Part of coreutils, GNU coreutils, right? You can, if you have a fresh install,

50:35 then you can expect those tools to be present. Yeah. If you're not on Windows. So those tools,

50:42 they operate on text, on plain text, and they have no notion of any other structure that might be

50:49 present in this data. Say CSV for when you have some rectangular structure or JSON, when you can have

50:57 a potentially deeply nested data structure. These tools know nothing about that. That doesn't make

51:02 them entirely useless, right? There are ways to work around them, around that issue. But there are nowadays

51:10 plenty of tools available that are able to work with this structure. Right. And one of them is actually a

51:18 suite of tools. It's called csvkit. And you can install it as a Python package. Okay. Through pip,

51:25 which of course we do at the command line. csvkit, you say? Yeah, exactly. And then you get a whole bunch of

51:33 tools that understand that lines are rows. The first line is a header and all these fields are delimited

51:42 by default by a comma. And then you can do things like extract columns or sort a file according to a

51:50 certain column. Yeah. So this is more difficult when you're working with coreutils. And of course,

51:57 all of these things you can do in Pandas, and it might even be faster in Pandas as opposed to these

52:05 CSV tools, not as opposed to the classic command line tools. But I mean, in order to get started with

52:14 Pandas, right, just imagine that you're given this file by your colleague and you're asked to quickly

52:21 sum things together, right? And in order to just get started with Pandas, what are then the things

52:26 that you need to do? Yeah, fire up JupyterLab, import Pandas, and maybe a bunch of other things. There is,

52:33 of course, also a time and a place for that. Definitely, definitely. I always use the tool that gets

52:39 the job done. Don't get me wrong here. But it's just so incredibly powerful to just, if it solves the job,

52:45 just whip up a command on the command line using a couple of tools there. If you're going that route,

52:53 then csvkit is not the only suite of tools that you should know about. xsv is written in Rust, but yeah,

53:01 you shouldn't care about that because the command line doesn't care. It's generally faster. One thing

53:06 that csvkit can do, by the way, and I'm actually kind of proud that I have been able to contribute

53:12 that tool to the suite of tools, is csvsql. And it allows you to run a SQL query directly on the CSV file.

53:22 Yeah. So if you are familiar with SQL, then you can leverage that knowledge directly at the command

53:30 line without first having to create a new database and import that CSV file in there and so forth.

53:35 All right. So one of the things you can do on the command line is basically just give it,

53:39 like, here's a SQLite file database, and now go insert all the things from the CSV file into it.

53:47 Here in this example, it has this create table statement. Does it figure that out from the CSV,

53:52 or do you need to write that? It figures it out. Yeah. It looks at the first, say, thousand rows and then figures out, like, okay, this is a number. This is text.
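
As a rough illustration of the sampling idea described here, the following sketch guesses a type per column from the first rows of a CSV. It is not csvkit's actual inference logic; the function names, the three type labels, and the rule that empty cells are ignored are all assumptions made for this example.

```python
import csv
import io

def _parses_as(value, caster):
    """Return True if caster(value) succeeds (e.g. int or float)."""
    try:
        caster(value)
        return True
    except ValueError:
        return False

def widen(current, value):
    """Move a column's guessed type from INTEGER to REAL to TEXT as needed."""
    if value == "":
        return current  # assumption: an empty cell tells us nothing
    if current == "INTEGER" and _parses_as(value, int):
        return "INTEGER"
    if current in ("INTEGER", "REAL") and _parses_as(value, float):
        return "REAL"
    return "TEXT"

def infer_column_types(csv_text, sample_size=1000):
    """Guess a type for each column by looking at the first sample_size rows."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    types = {name: "INTEGER" for name in header}  # start with the narrowest guess
    for row_number, row in enumerate(reader):
        if row_number >= sample_size:
            break
        for name, value in zip(header, row):
            types[name] = widen(types[name], value)
    return types

sample = "name,age,score\nalice,30,1.5\nbob,41,2\n"
print(infer_column_types(sample))
```

A guess like this could then feed a CREATE TABLE statement; a column whose sampled cells are all empty simply keeps the initial guess in this sketch.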

54:02 I see. Yeah. Oh, cool.

54:04 But I was actually talking about the other tool and that's sql2csv. I always mix those up.

54:11 The reverse. Yeah.

54:11 Yeah. Yes, exactly. This one. And there it still uses SQLite under the hood,

54:17 but you don't need to worry about that. It takes care of all that boilerplate for you.
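
To make the "SQLite under the hood" idea concrete, here is a minimal sketch of the boilerplate such a tool saves you: load the CSV into an in-memory SQLite table, run the query, and write the result back out as CSV. This is not csvkit's implementation. The function name `query_csv`, the table name `stdin`, and the choice to declare every column as NUMERIC (so that number-like text sorts and compares as numbers) are assumptions for illustration.

```python
import csv
import io
import sqlite3

def query_csv(csv_text, sql, table="stdin"):
    """Run a SQL query against CSV text and return the result as CSV text."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, data = rows[0], rows[1:]

    conn = sqlite3.connect(":memory:")  # throwaway database, nothing on disk
    # NUMERIC affinity lets SQLite store '30' as the integer 30 while
    # leaving values such as 'alice' as text.
    columns = ", ".join(f'"{name}" NUMERIC' for name in header)
    placeholders = ", ".join("?" for _ in header)
    conn.execute(f'CREATE TABLE "{table}" ({columns})')
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', data)

    cursor = conn.execute(sql)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow([column[0] for column in cursor.description])  # header row
    writer.writerows(cursor)
    conn.close()
    return out.getvalue()

people = "name,age\nalice,30\nbob,4\ncarol,41\n"
print(query_csv(people, "SELECT name, age FROM stdin WHERE age > 10 ORDER BY age DESC"))
```

On the command line, the same steps collapse into a single command, which is the convenience being described.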

54:21 You just say, okay, you know, select these columns from standard input, order them by this column. This

54:28 is the file or I've piped. Yeah. That's cool. Yeah. It's pretty cool. Yeah. I mean, maybe you've got

54:34 like some production database and you want to filter out. I just need this table with this particular

54:40 query, right? It's like, I only want to focus on my region of this data, give it to me as a CSV file,

54:46 and then you can go work on it all you want. You don't have to be connected to the database or near it

54:52 or any of those things, right? Potentially, if it doesn't have any sensitive data, you could share that,

54:56 right? You would never share the connection string to your database. That'd be insane.

55:00 Yeah. Yeah, exactly. Okay. Very cool. So what are some of the other tools? Well, if we go back,

55:06 if I go back to csvkit, you can see there's some of these you talked about. There's in2csv.

55:14 That one takes an Excel file, XLS or XLSX, and converts it to a CSV just on the command prompt or the terminal,

55:21 right? Yep. Yeah. Okay. Also, I should point out that I'm not the author of csvkit,

55:27 right? I just contributed a small portion to it because of the ingredients that were already there.

55:33 Still proud of it though, but it has been created by many other people.

55:39 Sure. Of course. Some other things it has are, like, csvstat and csvgrep. Yeah. A lot of

55:46 cool command line options to point at these things, right? Let's see. I pulled out some others.

55:53 Rush. So one of the areas is the graphing, basically the plotting you can do. We're basically out of

55:59 time, but I want to, I want to talk about two things really quick. Right. The, some of this,

56:03 which chapter did you put it under where you have the pictures? Oops. seven. So seven,

56:09 visualizing, exploring data and then yeah. So if you, so tell us a little bit about this,

56:15 like you can plot stuff in your terminal. Yeah. Yeah. It's kind of crazy. I should say that rush

56:21 is a proof of concept, right? It's one of those projects that have a lot of potential, but don't

56:28 necessarily have enough users. And I don't necessarily have enough time to maintain it properly, but it does

56:35 prove the concept. rush, the name, I mean, it's for when you're in a rush. It's R on the shell, and

56:43 what it does is leverage R under the hood, and for plotting, it leverages a particular R package,

56:51 ggplot2, which is the data visualization package for when you're working with R. Yeah.

56:57 Kind of the sibling, or where Matplotlib is a little bit derived from that, I believe. Right.

57:03 Well, now that you mention it, actually, Matplotlib is very different. Matplotlib

57:10 is very low level and gives you a lot of flexibility, but also requires a lot of work.

57:15 Now, if you want to visualize data in Python in a way similar to what ggplot2 uses,

57:22 then I can recommend plotnine. That's a Python package that is modeled after the ggplot2 API,

57:31 but that was a little segue there. Now, somebody else created a backend for

57:40 ggplot2 that allows you to create visualizations on the command line. What I then did was create this

57:47 interface. So something that would translate arguments and their values to the appropriate

57:54 function call. And it also handles a lot of boilerplate when it comes to reading in the CSV file that you

57:59 provide, right? If you were to do this in R itself, it would require, let's say about five lines of code

58:08 in order to get started. Right. And then the same also for Python, right? So similar concept, right?

58:14 Import the appropriate packages or modules, read in some file, and there's all this setup. And you know,

58:21 again, that is probably what you want when you want things to be a little bit more robust, but when you

58:26 want to get stuff done quickly, yeah, it really helps to be able to do that as a one liner on a command line.

58:32 So I make use of all this elaborate machinery in R just to use that at the command line.

58:44 So a beautiful little wrapper around this complex thing, but it hides the complexity, right?

58:50 Exactly. Exactly.

58:51 Yeah. So you can do beautiful, like bar plots. There's a lot of neat stuff in here. I really like this.

58:57 It is really nice. And now that I see this again, I get excited again. There is

59:04 definitely potential there, but, you know, it's yet another open source project that has to be

59:10 maintained. And unfortunately my time is limited, like everybody else's.

59:17 Yeah, of course. Of course. Yeah. All right. The last thing we have time for is this

59:21 polyglot data science. Tell us a little bit about this. Yeah. So polyglot data science is the idea

59:27 that in order to get things done, you might need to use multiple tools, multiple languages really. And,

59:36 throughout the book up until then, up until that chapter, we have mainly been focusing on using other

59:44 languages from the command line, but this chapter considers the other way around, right? Using the

59:50 command line from another language. So there might've been a situation where you're working in Python and

59:57 then all of a sudden, like, ah, now I've got to do this regular expression, or I've got to do some globbing,

01:00:03 or I have to call this other tool that is not written in Python, but can be

01:00:10 called from the command line, right? You would maybe use the subprocess module for that. These are

01:00:16 situations where you want to leverage the command line, where you want to break out of Python and do parts of

01:00:23 your computation on the command line. And in that chapter, chapter 10, I demonstrate this not only for

01:00:31 Python itself, but also in other languages and tools, including JupyterLab, where you can pass around,

01:00:38 say, a variable as standard input, and also retrieve the output so that you can continue working in

01:00:46 Python again with the output. And what is still very interesting to me is that even new

01:00:54 languages and tools somehow still offer a way to leverage the command line. So Spark, Apache Spark,

01:01:03 has a pipe method where you can pass an entire dataset, right? RDD through a command line tool. And that,

01:01:12 I think, was maybe just a fun little hack by the authors. I don't know.

01:01:18 I try to view it as a compliment, like, okay, sometimes we just need to go back to the

01:01:25 basics and use the command line because once you're there, you're back in this environment where

01:01:31 you can use everything else. So everything we've spoken about so far is now accessible as a command,

01:01:36 be it Go or Python or your own script or whatever. Exactly. So let's say,

01:01:41 you've come across this really nice tool, but it's written in Ruby. Oh no. What

01:01:48 are you gonna do? Are you gonna all of a sudden become, you know, involved in Ruby?

01:01:54 No. Assuming that this tool can be used from the command line, you can, of course, relax and just use the

01:01:59 subprocess module and still incorporate that Ruby tool into your own script. That's the idea.
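
A minimal sketch of that idea, assuming the external tool reads standard input and writes standard output: `subprocess.run` sends text in, captures what comes back, and hands it to the rest of the Python script. The helper name `run_tool` is made up for this example, and a tiny Python one-liner stands in for the Ruby tool so the sketch runs anywhere; in practice the command list would name the real executable.

```python
import subprocess
import sys

def run_tool(command, input_text):
    """Pipe input_text through an external command and return its stdout."""
    result = subprocess.run(
        command,              # a list: program first, then its arguments
        input=input_text,     # sent to the tool's standard input
        capture_output=True,  # collect stdout and stderr instead of printing
        text=True,            # work with str rather than bytes
        check=True,           # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout

# Stand-in for "some tool written in another language": it upper-cases stdin.
STAND_IN_TOOL = [
    sys.executable,
    "-c",
    "import sys; sys.stdout.write(sys.stdin.read().upper())",
]

output = run_tool(STAND_IN_TOOL, "hello from python\n")
print(output, end="")
```

The calling script never needs to know what language the tool is written in, only how to invoke it.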

01:02:06 Yeah. I do want to maybe point out just really quickly here, like this has got a little bit of a,

01:02:12 a little Bobby tables warning asterisk by it, you know?

01:02:15 Yeah. Yeah. Yeah. Right. So for example, one of the things that's awesome here is I could run

01:02:20 Jupyter console as you show. And then if you say exclamation mark command that pumps it straight to

01:02:25 the shell. So you could say bang date, and it would tell you the date. You could go bang pip install --upgrade

01:02:31 requests and that'll go and execute that command. Don't do that with user input. Right? Who knows

01:02:37 what they're going to do. You can also do that within Jupyter notebooks you point out, right? So you can do,

01:02:43 what is it? Percent percent bash and then some interesting complicated thing there, right?

01:02:49 That's pretty. Yeah. Yeah. That's indeed the magic command that you can use in, in Jupyter notebook.

01:02:55 Right. And then the entire cell is bash. Yeah. And so then you take what's left of that and then you head

01:03:00 over to explainshell and figure out what the heck it means. Yeah. Maybe do that before you run it. Yeah.
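
On the earlier warning about user input, one common way to reduce the risk in Python is to pass the command as a list of arguments, so that no shell ever interprets the user's text. The sketch below assumes a made-up helper, `echo_safely`, and again uses a Python one-liner as the stand-in external program. It also shows `shlex.quote` for cases where a shell command string really is needed.

```python
import shlex
import subprocess
import sys

def echo_safely(user_text):
    """Hand user_text to an external program as exactly one argument."""
    result = subprocess.run(
        # No shell=True: the list goes straight to the program, so characters
        # such as ';' or '~' in user_text are never interpreted by a shell.
        [sys.executable, "-c", "import sys; print(sys.argv[1])", user_text],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.rstrip("\n")

hostile = "report.csv; rm -rf ~"

# The program receives the whole string as plain data.
print(echo_safely(hostile))

# If a shell string is unavoidable, quote each untrusted piece first.
print(shlex.quote(hostile))
```

Neither approach makes arbitrary input trustworthy; they only keep the shell from treating it as extra commands.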

01:03:07 Yeah. That's a good idea. And then also in Python, using subprocess is something that I've done

01:03:14 several times. I need to automate generating some big import of say 150 video files across a bunch of

01:03:22 directories to build a course that we're going to offer. Well, into the database I have to put,

01:03:26 how long is each one of those? I have no idea how to get the duration out of an MP4 or MOV file.

01:03:33 You know what? There's a really cool command line program I can run. It'll tell me. So I just use

01:03:38 subprocess and call that. And then I can script out the rest in Python and it's, you know,

01:03:42 subprocess is not to be underestimated, I think. Yeah. Yeah, exactly. No, it makes a lot of sense. I mean,

01:03:48 at a certain point, shell scripts can get a little bit too hairy to work with being able to automate

01:03:54 your things and use Python as your super glue, right? So a little bit stronger than duct tape,

01:04:01 I think makes a lot of sense. Yeah. We talked at the beginning about how

01:04:04 you're in this exploration stage and you just want to just run a bunch of stuff on the command line and

01:04:08 figure it out. But when you go to production, and you said, whatever that means, this could be one

01:04:12 thing that it means: we're going to write formal Python code and then use subprocess to

01:04:17 kind of bring in some of this functionality potentially. Yeah, exactly. I mean, the command